class notes chapter 3


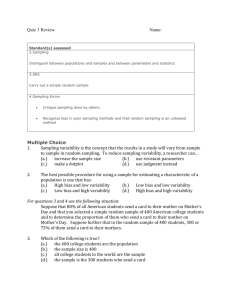

Producing Data

What individuals do you want to study? What variables do you wish to measure? What conclusions can you draw? Measuring variables on the individuals you wish to study produces data on those individuals.

Exploratory Data Analysis: Examining patterns and trends in data.

Statistical Inference: Applying scientific reasoning to the data to answer specific questions with a known degree of confidence.

Statistical inference is reliable only if the data are produced, collected, or arranged according to a definite plan that minimizes the chance of drawing incorrect conclusions.

Statistical Design: Methods of producing or collecting data for the purposes of statistical inference.

If we wish to address a specific question about a given population, which individuals do we choose to measure? The selection of individuals is called sampling.
1. A random sample: Selecting just a portion of the population such that every individual in the population has the same chance of being selected.
2. A sample of convenience: Selecting just a portion of the population using a criterion that is convenient.
3. A census: Selecting the entire population.

A sample of the population is used to represent the whole population.

Beware of conclusions based on anecdotes. Anecdotal evidence is unreliable: data collected on a few haphazardly chosen individuals are often interesting, but rarely represent the population as a whole.

Concerns of Sampling Design

Suppose we are interested in knowing the true value of some numeric characteristic of a population. Call this value a parameter. Unless we take a census of the population, the parameter cannot be determined exactly. However, if a sample is chosen to represent the population, then the equivalent characteristic computed on the sample yields an estimate of the parameter. This estimate is called a statistic.

A parameter is a numeric characteristic of a population. A statistic is a numeric characteristic of a sample.
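The difference between a parameter and a statistic can be made concrete with a short Python sketch. It assumes a hypothetical population of 10,000 adults that we build ourselves, so the parameter is known (set to the same 68.4% used in the California example in these notes); in a real study it would be unknown. The helper name `sample_proportion` is illustrative, and the sample is drawn with the standard library's `random.sample`, which selects without replacement.

```python
import random

rng = random.Random(1)  # fixed seed so the run is reproducible

# Hypothetical population of 10,000 adults: 1 = supports, 0 = does not.
# We construct it ourselves so the parameter is known (68.4%); in a real
# study the parameter would be unknown.
population = [1] * 6840 + [0] * 3160

def sample_proportion(pop, n):
    """Draw a simple random sample of size n (without replacement) and
    return the sample proportion, a statistic that estimates the parameter."""
    sample = rng.sample(pop, n)
    return sum(sample) / n

parameter = sum(population) / len(population)   # characteristic of the population
statistic = sample_proportion(population, 500)  # characteristic of one sample
print(f"parameter = {parameter:.3f}, statistic = {statistic:.3f}")
```

Running it again with a different seed gives a different statistic while the parameter stays fixed, which is exactly why a statistic is subject to variability.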
A statistic is used to estimate a parameter. But since the value of a statistic depends on which sample was chosen, a statistic is subject to variability.

Example: Suppose the true proportion of all adult residents of California who support a woman’s right to have an abortion is 68.4%. Let’s pretend that this value is actually unknown to us, and that we wish to estimate it by sampling. The method of sampling can strongly affect the estimate we obtain.

Bias: A statistical design is biased if it systematically favors certain outcomes. When there is bias in the statistical design, numerical estimates of the parameter will systematically overshoot (or undershoot) the parameter.

Variability: Because statistics depend on samples, the value one obtains would be different had a different sample been selected. Every statistical design has variability; the question is whether it is high or low.

1. 5 independent researchers using the same biased methodology might find 68.7%, 69.2%, 68.1%, 70.0%, 69.9%, based on independent samples of 500 adults each. Notice the variability seems quite low.
2. 5 independent researchers using an unbiased methodology might find 64.5%, 73.6%, 61.8%, 67.4%, 59.9%, based on independent samples of 100 adults each. Notice the variability seems much higher.
3. 5 independent researchers using a biased methodology might find 58.8%, 59.2%, 59.4%, 58.3%, 59.5%, based on independent samples of 1000 adults each. The variability seems to be very low.

Observe that bias is determined by comparing the “guesses” to the actual value itself. For instance, the estimates in case 3 cluster near 59%, well below the true 68.4%, even though they agree closely with one another. Variability does not depend on the true value of the parameter; it only measures how much the estimates tend to agree with each other. Good statistical design produces data that show no (or very low) bias and low variability.

How to get unbiasedness

The best way to make a statistical design unbiased is to use random selection from the population of interest.
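Bias and variability can both be seen in a small simulation. The sketch below is a hypothetical setup, not data from the notes: a population of 10,000 adults in which half are "easy to reach" (80% support) and half are "hard to reach" (56.8% support), chosen so the overall parameter is 68.4%. A convenience design that can only reach the first group is compared with random designs that give every adult the same chance. It uses 200 hypothetical researchers per design instead of 5 so the averages are stable; the function name `estimates` is illustrative.

```python
import random
import statistics

rng = random.Random(2)

# Hypothetical population of 10,000 adults (1 = supports, 0 = does not).
# Half are "easy to reach" (80% support) and half are "hard to reach"
# (56.8% support), so the overall parameter is 68.4%.
easy = [1] * 4000 + [0] * 1000
hard = [1] * 2840 + [0] * 2160
population = easy + hard
p = sum(population) / len(population)

def estimates(frame, n, researchers):
    """Each hypothetical researcher draws a random sample of size n from
    `frame` and reports the sample proportion."""
    return [sum(rng.sample(frame, n)) / n for _ in range(researchers)]

designs = {
    # Convenience design: only easy-to-reach adults can ever be chosen (biased).
    "convenience, n=1000": estimates(easy, 1000, 200),
    # Random designs: every adult has the same chance of selection.
    "random, n=100": estimates(population, 100, 200),
    "random, n=1000": estimates(population, 1000, 200),
}

for name, est in designs.items():
    bias = statistics.mean(est) - p       # systematic over/undershoot
    spread = statistics.stdev(est)        # how much researchers disagree
    print(f"{name:20s} bias = {bias:+.3f}  spread (sd) = {spread:.3f}")
```

The convenience design lands near 80%, a bias of about +0.12 with small spread, much like case 3 above. Both random designs center on 68.4%, and the one with n = 1000 has a smaller spread than the one with n = 100. Larger samples reduce variability, but only random selection removes the bias.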
Random selection means that every individual in the population has the same chance of being selected. If a sample of convenience is used, try to make it as random as possible.

Associations: Determining Causation

As discussed in chapter 2, if we have determined a definite association between variables x and y, we might like to investigate: does x cause y? As we have seen, lurking variables are usually present and often confound the question of what is causing y.

An experiment deliberately imposes some treatment on individuals in an attempt to influence the responses. An observational study merely observes individuals and measures variables, but does not attempt to influence the responses.

As indicated before, the effect of lurking variables cannot be controlled in observational studies. They can be controlled in experiments. The best way to establish a causal relationship is to design an experiment that controls the effect of the lurking variables. Uncontrolled lurking variables often contribute hidden biases that affect the interpretation of the results.

Typically, experiments involve selecting at least two groups of experimental units and applying different levels of the treatment to each group. We then observe the responses in each group:

Treatment - - - > observed response

To minimize bias, structure is often imposed so that the effect of the lurking variables is minimized. Some structures used: control groups, matched pairs designs, blind and double-blind experimentation, randomization, and block designs.

Controlling variability

Example: Suppose two women suffering from headaches are each given a pill, one aspirin and one a placebo. Two hours later, the headache is gone only for the woman who had the aspirin. What can we conclude?

Answer: Either the pill worked, or the results were due to chance (or to lurking variables). As long as the effect of lurking variables is controlled, the only remaining issue is chance.
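How much can chance alone produce? The sketch below assumes the pill has no effect at all and that each woman's headache clears on its own with a hypothetical probability of 0.5 (the notes give no such figure). It repeats the two-woman experiment many times and counts how often the "aspirin worked" outcome appears anyway. The function name `chance_only_trial` and its parameters are illustrative.

```python
import random

rng = random.Random(3)

def chance_only_trial(n_per_group, p_recover=0.5):
    """One simulated experiment in which the pill has NO effect: each woman's
    headache clears within two hours with the same hypothetical probability
    p_recover, whichever pill she receives. Returns the difference in the
    proportion recovered (aspirin group minus placebo group)."""
    aspirin = sum(rng.random() < p_recover for _ in range(n_per_group))
    placebo = sum(rng.random() < p_recover for _ in range(n_per_group))
    return (aspirin - placebo) / n_per_group

trials = 10_000
# How often does chance alone reproduce the observed outcome with one woman
# per group: the aspirin woman recovers and the placebo woman does not?
freq = sum(chance_only_trial(1) == 1 for _ in range(trials)) / trials
print(f"'aspirin worked' by chance alone in {freq:.1%} of trials")
```

With p_recover = 0.5 the exact answer is 0.5 × 0.5 = 0.25, so the printed value should be close to 25%: about one time in four, chance alone produces the outcome in the example. Calling the same function with a larger n_per_group shows that large chance differences between the groups become much rarer.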
It is possible that the one woman would have lost her headache even if she had been given a placebo as well. Here the issue is variability. Conceivably, the experiment could easily have gone differently: both women lost their headaches, neither lost her headache, or only the one taking the placebo lost her headache! The perceived effect depends on the sample that is chosen. This is the issue of variability.

How to reduce variability

Variability is reduced by replication. A far more compelling result would be obtained as follows: 200 women with high blood pressure were divided into 2 groups of 100 each. One group was given a placebo; the other group was given a new medication.

100 women - - - - - - - > placebo
100 women - - - - - - - > medication

Assume that the effect of the lurking variables was controlled (similar groups, blind experiment, etc.). After 6 weeks, the placebo group showed an average drop in diastolic blood pressure of 2, and the medication group showed an average drop of 10. Such a drastic drop would rarely be seen in a “control” group of size 100. This gives us confidence (not certainty) that the treatment, and not chance, caused the change in response.

Replication reduces the chance variation in results. When an observed result could rarely occur as the result of chance alone, we call it statistically significant.

Statistical significance: An effect so large that it would rarely occur by chance alone. Statistical significance is rarely achieved unless experiments are performed on many experimental units.

So the keys to experimental design are:
1. Randomization to reduce bias.
2. Replication to reduce variability.
3. Depending on the situation: matched pairs designs, blocking, dosage levels, all used to decrease the effect of lurking variables.

Sampling Designs

Recall that a sample is selected for analysis, and results obtained from the sample are used to characterize the population.
Most sampling in practice is done using samples of convenience, and most of these introduce bias. Examples include:

Favoritism: Choosing a sample that one believes will support a claim one wishes to substantiate.
Voluntary Response: People choosing to participate in response to a general appeal.

Probability sampling: The best way to achieve low or no bias is to use chance as the criterion for selecting people. Examples of probability sampling:

Simple random sample (SRS): A probability sample of size n chosen so that every set of n individuals in the population has an equal chance of being the sample selected. In particular, every person in the population has an equal chance of being selected.
Stratified sampling: The population is first partitioned into groups (strata) of similar individuals. Within each group, an SRS or other probability sample is selected, and these are combined to form the full sample.

Problems Associated with Sampling

Undercoverage: Results when part of the population is left out of the sampling process. Undercoverage may result from telephone surveys or website polling.
Nonresponse: Results when selected individuals fail to participate in the study. This usually biases the results toward motivated people who may have strong opinions.

Elementary Statistical Inference

Recall that when trying to estimate the true value of a parameter of a population, the corresponding statistic calculated on a sample is used to estimate the parameter.

Think: Suppose we had selected every conceivable sample of the same size and calculated the statistic on each sample. We would obtain a list of many values, all of which are “estimates” of the parameter. In reality we do not select every conceivable sample, but we should be aware that our one sample yields an estimate that is just one of many possible values.

Bias: A systematic tendency for the possible values of the statistic to overshoot (or undershoot) the true value.
Variability: The spread of the possible values.
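The "every conceivable sample" thought experiment can actually be carried out when the population is tiny. The sketch below assumes a hypothetical population of five numbers and uses the sample mean as the statistic; `itertools.combinations` lists every possible sample of a given size, drawn without replacement. The population values and the name `sampling_distribution` are illustrative.

```python
from itertools import combinations
from statistics import mean, pstdev

# A tiny hypothetical population, small enough to list every conceivable sample.
population = [3, 7, 8, 12, 15]
parameter = mean(population)          # population mean = 9

def sampling_distribution(pop, n):
    """Sample mean of every possible sample of size n (drawn without replacement)."""
    return [mean(s) for s in combinations(pop, n)]

for n in (2, 4):
    values = sampling_distribution(population, n)
    bias = mean(values) - parameter   # systematic over/undershoot
    spread = pstdev(values)           # variability of the possible values
    print(f"n={n}: {len(values)} samples, bias={bias:+.2f}, spread={spread:.2f}")
```

For both sample sizes the bias is exactly 0: averaged over every possible sample, the sample mean equals the population mean of 9. The spread is about 2.54 for the 10 samples of size 2 and about 1.04 for the 5 samples of size 4, so larger samples produce possible values that cluster more tightly around the parameter.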
If we are convinced that the sampling strategy introduces no bias, we still have to be aware that the value we get from sampling is subject to variability, and thus is likely to be “off” from the true value by an amount that depends on the size of the variability. In reality, only a single sample is chosen, so, keeping in mind that the statistic we calculate is likely to be “off” from the true value, it is customary to report the margin of error as well.

Example: Suppose I report the proportion of adult residents who support a woman’s right to have an abortion as 66.8% ± 3%. Then 66.8% is the value that I obtained from my sample, and 3% is the “margin of error,” which tells us that the true value is likely to be between 63.8% and 69.8%. Observe that the margin of error reflects the sampling variability: the smaller the sampling variability, the smaller the margin of error.
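The notes do not say how the 3% is computed. One common approach, shown here as a sketch under stated assumptions, is the large-sample approximation for a proportion from a simple random sample, z·√(p̂(1 − p̂)/n), with z = 1.96 for 95% confidence. The confidence level, the formula choice, the sample sizes, and the function name `margin_of_error` are all assumptions for illustration, not taken from the example.

```python
import math

def margin_of_error(p_hat, n, z=1.96):
    """Approximate margin of error for a sample proportion p_hat from an SRS
    of size n. z = 1.96 corresponds to 95% confidence (an assumption; the
    notes do not state a confidence level). Uses the large-sample normal
    approximation, so it is only reasonable when n is fairly large."""
    return z * math.sqrt(p_hat * (1 - p_hat) / n)

p_hat = 0.668
for n in (100, 1000, 4000):     # hypothetical sample sizes
    moe = margin_of_error(p_hat, n)
    print(f"n={n:5d}: {p_hat:.1%} ± {moe:.1%}  ->  "
          f"[{p_hat - moe:.1%}, {p_hat + moe:.1%}]")
```

Under these assumptions, a sample of 1,000 adults gives a margin of about 2.9 percentage points, close to the 3% in the example, and quadrupling the sample size to 4,000 halves it. This matches the point above: less sampling variability means a smaller margin of error.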