Unit 6 Notes

advertisement

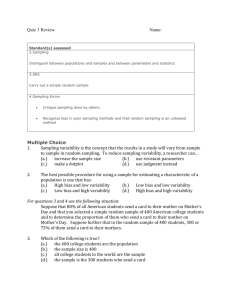

AP Stat Notes – Unit 6: Sampling Distributions The basis for statistical inference is “How often would this method give a correct answer if I used it very many times.” We can use random sampling that involves chance to help us answer this question. This is because the laws of probability answer the question “what would happen if we did this many times?” Think about random sampling as a random phenomenon like rolling dice or flipping a coin. How many times could I roll a pair of dice? Would I need to roll a pair of dice an infinite number of times to get a good idea of what the average sum of the dice would be? Wouldn’t the average sum after say 100 rolls give me a good estimate of the true average sum even though I have only looked at a small slice of the possible rolls? This is the same idea we use in sampling. We can take a random sample of say 100 people from a much larger population and they should give us a good estimate of the true value of the population, even though they only represent a small slice of the whole. Parameter – A number that describes a population. Statistic – A number that describes a sample. Measurement Mean Std Dev Proportion Parameter (or p) Statistic x s p(or p̂ ) A parameter is a fixed number. It is usually unknown because we cannot examine the entire population. A statistic is a number that will be known whenever we take a sample. However it is not fixed and can change from sample to sample. The big question then becomes, “How can I use a number taken from a small sample that will vary for each new sample I choose and use it to estimate a fixed number that represents an entire population?” If the statistic will change with each new sample, how could I possibly say it represents the entire population? This is the idea of sampling variability: the value of the statistic will vary from sample to sample, but if chosen correctly should still be a good representation of the entire population and therefore can be used to estimate the parameter of interest. The sampling distribution of a statistic is the distribution of values taken by the statistic in all possible samples of the same size from the same population. The bias of a statistic The bias of a statistic refers to the center of the sampling distribution. We would like the center of our sampling distribution to be located at the population parameter. A statistic is unbiased if the mean of its sampling distribution is equal to the true value of the parameter being estimated. An unbiased statistic will still sometimes fall above the parameter and sometimes below, but it will have no tendency to overestimate or underestimate the statistic. The variability of a statistic The variability of a statistic is described by the spread of its sampling distribution. This spread is determined by the sampling design and the size of the sample. Larger samples give smaller spread. We would like a statistic that is unbiased and has a little variability as possible. That is to say we want a statistic that will be centered at the parameter we are trying to estimate, and we want that statistic to be as close as possible to that center each time. We can think of our sampling distribution as throwing darts at the dartboard. We want our darts to be centered on the bull’s-eye(unbiased) and the all be close together(low variability). The four dart boards below represent each possible situation. Example 8.2, pg 451 Example 8.3, pg 453 x Sampling Distribution of ___ When the objective of a statistical investigation is to make an inference about the population mean , it is natural to consider the sample mean x as an estimate of . Let x denote the mean of the observations in a random sample of size n from a population having mean and standard deviation . The sampling distribution of x (the collection of all sample means from all possible samples of size n from this population) has a mean denoted by x and a standard deviation denoted by x . General Properties of the Sampling Distribution of x : Rule 1: x = . The mean of the sampling distribution is equal to the mean of the population. Rule 2: x = n . This rule is exact if the population is infinite, and is approximately correct if the population is finite and no more than 10% of the pop. is included in the sample. Rule 3: When the population distribution is normal, the sampling distribution of x is also normal for any sample size n. Rule 4: Central Limit Theorem – When n is sufficiently large, the sampling distribution of x is well approximated by a normal curve, even when the population distribution is not itself normal. The Central Limit Theorem can safely be applied if n exceeds 30. For smaller sample sizes it may still be applied only if the population is believed to be close to normal itself. We can see the application of the Central Limit Theorem below for two different shaped populations. In (a) the population is normal, so no matter what the sample size, the sampling distribution of x is also normal. In (b) the population is strongly skewed to the right. Therefore we need a big enough sample size before we will see a sampling distribution of x that is normal. The Sampling Distribution of a Sample Proportion The objective of some statistical investigations is to draw conclusions about the proportion of individuals or objects in a population that possess a specified property. When this is the case, we use the symbol to denote the proportion of successes in the population. The value of is a number between 0 and 1. Like most parameters, this value is unknown to an investigator. A sample from the population is taken, and the results of that sample are used to estimate the true value of . The statistic that provides a basis for making inferences about is p(or p̂ ), the sample proportion of successes: p = (# of successes in the sample) / n Example 8.7, pg 462 & 8.8 pg 463 General Properties of the Sampling Distribution of p( p̂ ): Rule 1: p . The mean of the sampling distribution of p is equal to the population proportion. Rule 2: p (1 ) n . This rule is exact if the population is infinite, and is approximately correct if the population is finite and no more than 10% of the pop. is included in the sample. Rule 3: When n is large and is not too near 0 or 1, the sampling distribution of p is approximately normal. The further is from 0.5, the larger n must be for a normal approximation to the sampling distribution of p to be accurate. A conservative rule or thumb is: n 5 and n (1 ) 5