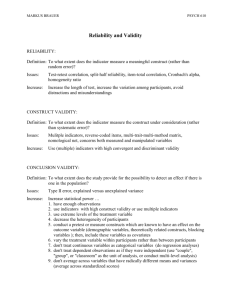

Step V: Validating measures
