Regression Analysis: t90 versus t50


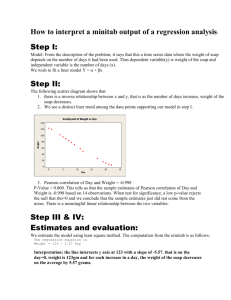

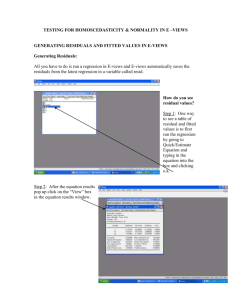

Page 17

Correlation and Regression

Correlation and regression are used to explore the relationship between two or more variables. The correlation coefficient r is a measure of the linear relationship between two paired variables x and y. For data, it is a statistic calculated using the formula

$$ r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2 \, \sum_{i=1}^{n} (y_i - \bar{y})^2}} $$

The correlation coefficient satisfies -1 ≤ r ≤ 1. If y is a linear function of x, then r = 1 if the slope is positive and r = -1 if it is negative. We emphasize that r is a measure of linear relationship, not functional relationship.

Example. Here is a small dataset:

| x | y |
|---|---|
| -3.0 | 0.00000 |
| -2.5 | 1.65831 |
| -2.0 | 2.23607 |
| -1.5 | 2.59808 |
| -1.0 | 2.82843 |
| -0.5 | 2.95804 |
| 0.0 | 3.00000 |
| 0.5 | 2.95804 |
| 1.0 | 2.82843 |
| 1.5 | 2.59808 |
| 2.0 | 2.23607 |
| 2.5 | 1.65831 |
| 3.0 | 0.00000 |

[Figure: Fitted Line Plot of y against x, with fitted line y = 2.120 - 0.0000 x; S = 1.09053, R-Sq = 0.0%, R-Sq(adj) = 0.0%; Mean of y = 2.11983.]

Correlations: x, y

Pearson correlation r of x and y = -0.000; P-Value = 1.000

You see the relationship of course: x² + y² = 9.

A more concrete interpretation of r will be given later when we discuss regression. For the moment, view high values of |r| as indicating that the points (x, y) lie nearly on a straight line, while low values indicate that no obvious line passes close to all the points.

Page 18

Astronomy Example. We use the data from Mukherjee, Feigelson, Babu, et al. in “Three Types of Gamma-Ray Bursts” (The Astrophysical Journal, 508, pp 314-327, 1998), in which there are 11 variables, including 2 measures of burst duration, t50 and t90 (the times in which 50% and 90% of the flux arrives). The data will be used to illustrate concepts in correlation and regression. One would expect, from the context of the example, that the variables t50 and t90 ought to be strongly related.
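As a quick check of the small dataset on page 17, the sketch below rebuilds the thirteen (x, y) pairs from the relationship x² + y² = 9 and computes the Pearson correlation directly from its definition. The helper name `pearson_r` is ours, not Minitab's, and the code uses only the Python standard library. It is meant only to illustrate that r can be essentially zero even when y is an exact function of x.

```python
import math

def pearson_r(xs, ys):
    """Sample Pearson correlation of paired values (hypothetical helper)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# x runs from -3.0 to 3.0 in steps of 0.5; y is the upper half of x^2 + y^2 = 9
xs = [-3.0 + 0.5 * i for i in range(13)]
ys = [math.sqrt(9.0 - x * x) for x in xs]

r = pearson_r(xs, ys)
mean_y = sum(ys) / len(ys)

# r is zero up to floating-point rounding; mean_y agrees with the
# "Mean of y" value reported beside the fitted line plot
print(f"r = {r:.3f}, mean of y = {mean_y:.5f}")
```

Because the points are symmetric about x = 0, the positive and negative cross-products cancel, which is why the fitted line is flat at the mean of y.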
Here is output from Minitab showing the value of r for t50 and t90:

Correlations: t50, t90

Pearson correlation of t50 and t90 = 0.868; P-Value = 0.000

Regression Analysis: t90 versus t50

The regression equation is t90 = 10.1 + 1.65 t50

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 10.106 | 1.066 | 9.48 | 0.000 |
| t50 | 1.64553 | 0.03333 | 49.37 | 0.000 |

S = 26.8058, R-Sq = 75.3%, R-Sq(adj) = 75.3%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 1751506 | 1751506 | 2437.55 | 0.000 |
| Residual Error | 800 | 574841 | 719 | | |
| Total | 801 | 2326347 | | | |

Page 19

Unusual Observations (partial list)

| Obs | t50 | t90 | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 2 | 69 | 208.576 | 123.002 | 2.031 | 85.574 | 3.20R |
| 5 | 306 | 430.016 | 514.242 | 9.768 | -84.226 | -3.37RX |
| 7 | 30 | 381.248 | 58.866 | 1.070 | 322.382 | 12.04R |
| 10 | 95 | 182.016 | 166.813 | 2.847 | 15.203 | 0.57 X |
| 20 | 204 | 292.736 | 345.530 | 6.375 | -52.794 | -2.03RX |
| 26 | 61 | 221.184 | 111.207 | 1.823 | 109.977 | 4.11R |
| 44 | 30 | 123.392 | 58.761 | 1.069 | 64.631 | 2.41R |
| 77 | 58 | 179.840 | 104.783 | 1.713 | 75.057 | 2.81R |
| 127 | 19 | 110.464 | 41.911 | 0.959 | 68.553 | 2.56R |
| 130 | 108 | 158.080 | 187.665 | 3.248 | -29.585 | -1.11 X |

R denotes an observation with a large standardized residual.
X denotes an observation whose X value gives it large influence.

Residuals vs Fits for t90

[Figure: Residuals Versus the Fitted Values (response is t90); standardized residual plotted against fitted value.]

The plot shows nonconstant variance and some very unusual standardized residuals. This suggests making a transformation of both x and y. We will discuss this later.

Transform by taking logs of both variables.
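The summary figures in the t90-versus-t50 output are tied together by a few standard identities for simple linear regression. The sketch below recomputes them from the printed sums of squares and coefficients. The variable names are ours, and the inputs are the rounded values shown in the output, so agreement is only up to rounding.

```python
import math

# Values copied from the Minitab output for t90 versus t50
ss_regression = 1751506.0
ss_error = 574841.0
ss_total = 2326347.0
df_regression, df_error = 1, 800
coef_t50, se_coef_t50 = 1.64553, 0.03333
r_t50_t90 = 0.868

ms_regression = ss_regression / df_regression
ms_error = ss_error / df_error        # printed (rounded) as 719
s = math.sqrt(ms_error)               # printed as S = 26.8058
r_sq = ss_regression / ss_total       # printed as R-Sq = 75.3%
f_stat = ms_regression / ms_error     # printed as F = 2437.55
t_stat = coef_t50 / se_coef_t50       # printed as T = 49.37

print(f"S = {s:.4f}")
print(f"R-Sq = {100 * r_sq:.1f}%  (r squared = {100 * r_t50_t90 ** 2:.1f}%)")
print(f"F = {f_stat:.2f}, T = {t_stat:.2f}, T squared = {t_stat ** 2:.1f}")
```

With a single predictor, R-Sq is the square of the Pearson correlation and F is the square of the T statistic for the slope, so the three tests in the output carry the same information.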
Correlations: log(t50), log(t90)

Pearson correlation of log(t50) and log(t90) = 0.975; P-Value = 0.000

Page 20

Regression Analysis: log(t90) versus log(t50)

The regression equation is log(t90) = 0.413 + 0.984 log(t50)

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 0.413395 | 0.008372 | 49.38 | 0.000 |
| log(t50) | 0.984235 | 0.007863 | 125.17 | 0.000 |

S = 0.206054, R-Sq = 95.1%, R-Sq(adj) = 95.1%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 665.19 | 665.19 | 15666.85 | 0.000 |
| Residual Error | 800 | 33.97 | 0.04 | | |
| Total | 801 | 699.15 | | | |

Unusual Observations (partial list)

| Obs | log(t50) | log(t90) | Fit | SE Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 7 | 1.47 | 2.58121 | 1.86195 | 0.01040 | 0.71925 | 3.50R |
| 12 | 0.83 | 1.70600 | 1.22772 | 0.00765 | 0.47828 | 2.32R |
| 47 | -1.85 | -1.46852 | -1.41125 | 0.02008 | -0.05727 | -0.28 X |
| 56 | -1.72 | -1.17393 | -1.28072 | 0.01911 | 0.10679 | 0.52 X |
| 60 | 0.70 | 1.52799 | 1.10066 | 0.00740 | 0.42733 | 2.08R |
| 95 | 0.76 | 1.79607 | 1.16183 | 0.00750 | 0.63425 | 3.08R |
| 125 | -0.89 | -0.04769 | -0.46532 | 0.01332 | 0.41763 | 2.03R |
| 141 | -0.11 | 0.82321 | 0.30056 | 0.00885 | 0.52265 | 2.54R |
| 156 | -1.06 | -0.10018 | -0.63037 | 0.01445 | 0.53019 | 2.58R |
| 168 | 0.20 | 1.50775 | 0.61430 | 0.00771 | 0.89345 | 4.34R |
| 175 | 0.97 | 2.23188 | 1.36569 | 0.00806 | 0.86619 | 4.21R |
| 176 | 0.20 | 1.26102 | 0.61430 | 0.00771 | 0.64673 | 3.14R |
| 210 | 1.02 | 2.08812 | 1.41832 | 0.00825 | 0.66980 | 3.25R |
| 215 | 0.44 | 1.38710 | 0.84611 | 0.00731 | 0.54099 | 2.63R |
| 221 | -0.72 | -0.71670 | -0.29201 | 0.01219 | -0.42469 | -2.06R |
| 255 | 0.17 | 1.10285 | 0.57866 | 0.00780 | 0.52419 | 2.55R |
| 272 | 1.45 | 2.26194 | 1.84404 | 0.01030 | | |

R denotes an observation with a large standardized residual.
X denotes an observation whose X value gives it large influence.

Page 21

‘Four in One Plot’ and Plot of Residuals vs. Fitted Values

[Figure: Residuals Versus the Fitted Values (response is log(t90)).]

[Figure: Residual Plots for log t90, four panels: Normal Probability Plot of the Residuals; Residuals Versus the Fitted Values; Histogram of the Residuals; Residuals Versus the Order of the Data.]

There are still some unusual observations, but the plots look much better.

Before leaving this example, we note the following relationship:

Correlations: log t90, FITS1

Pearson correlation r of log t90 and FITS1 = 0.975; P-Value = 0.000

If you look back to page 19, bottom, you will see that the correlation between log t90 and log t50 is also 0.975. That is, the correlation between the fitted (predicted) values and the observations y is the same as the correlation between the two variables x = log t50 and y = log t90. This is because the fitted values are a linear function of x with positive slope, and correlation is unchanged by such a transformation.

Brief Overview of Forthcoming Topics: We will first look at general linear regression model analysis in matrix terms. We will discuss some model assumptions and how they can be examined. Then we will present an example with five predictors and some techniques for model fitting. We will then discuss generalized linear models, including logistic and Poisson regression.
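The equality between the correlation of y with the fitted values and the correlation of y with x can be checked numerically. The sketch below is a minimal illustration on simulated data, not the gamma-ray burst sample. The helper names `pearson_r` and `least_squares`, the seed, and the simulated slope, intercept, and noise level are all assumptions chosen only to resemble the log-log fit loosely.

```python
import math
import random

def pearson_r(xs, ys):
    """Sample Pearson correlation of paired values (hypothetical helper)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def least_squares(xs, ys):
    """Return (intercept, slope) of the simple least-squares line."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

# Simulated data (an assumption for illustration, NOT the burst data)
random.seed(0)
xs = [random.uniform(-2.0, 3.0) for _ in range(200)]
ys = [0.4 + 1.0 * x + random.gauss(0.0, 0.2) for x in xs]

intercept, slope = least_squares(xs, ys)
fits = [intercept + slope * x for x in xs]

r_xy = pearson_r(xs, ys)        # correlation between x and y
r_fit_y = pearson_r(fits, ys)   # correlation between fitted values and y

# R-Sq computed from the sums of squares, for comparison with r squared
y_bar = sum(ys) / len(ys)
sse = sum((y - f) ** 2 for y, f in zip(ys, fits))
sst = sum((y - y_bar) ** 2 for y in ys)
r_sq = 1.0 - sse / sst

print(f"r(x, y) = {r_xy:.6f}, r(fits, y) = {r_fit_y:.6f}, R-Sq = {r_sq:.6f}")
```

If the fitted slope were negative, the correlation between the fitted values and y would equal the absolute value of r(x, y) instead, since the fitted values always move in the same direction as y on average.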