Notes 18


Statistics 510: Notes 18

Reading: Sections 6.3-6.5

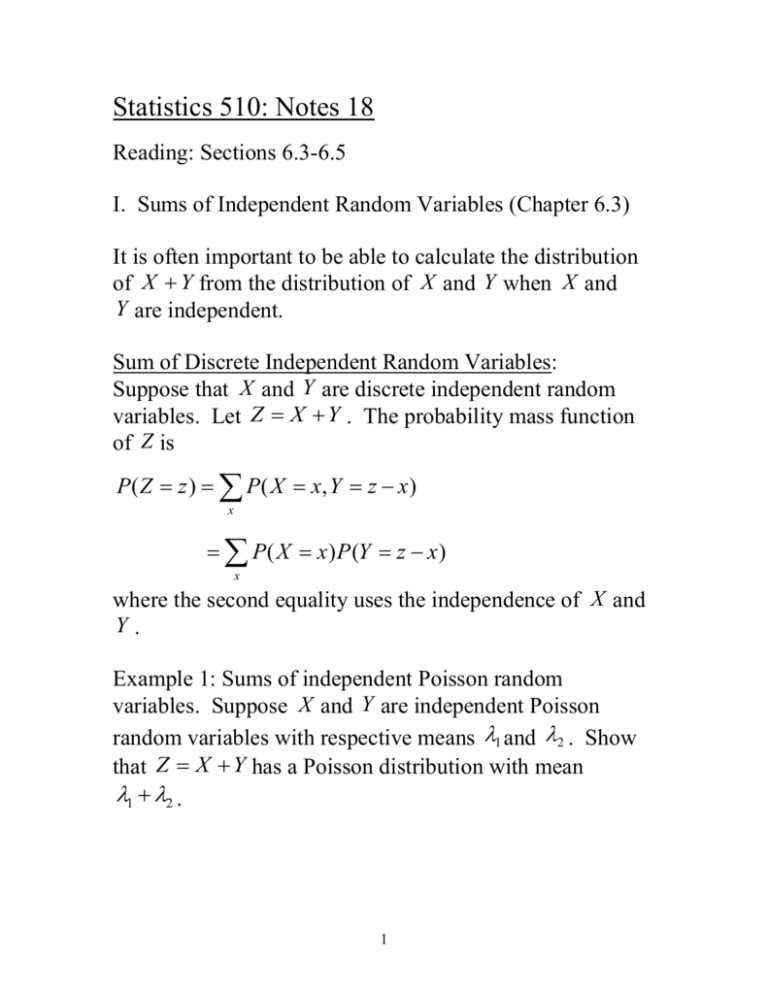

I. Sums of Independent Random Variables (Chapter 6.3)

It is often important to be able to calculate the distribution of $X + Y$ from the distributions of X and Y when X and Y are independent.

Sum of Discrete Independent Random Variables:

Suppose that X and Y are discrete independent random variables. Let $Z = X + Y$. The probability mass function of Z is

$$
P(Z = z) = \sum_x P(X = x,\; Y = z - x) = \sum_x P(X = x)\, P(Y = z - x)
$$

where the second equality uses the independence of X and Y.

Example 1: Sums of independent Poisson random variables. Suppose X and Y are independent Poisson random variables with respective means $\lambda_1$ and $\lambda_2$. Show that $Z = X + Y$ has a Poisson distribution with mean $\lambda_1 + \lambda_2$.
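The claim in Example 1 can be checked numerically before proving it. The following is a minimal sketch, not a proof: it evaluates the discrete convolution sum from above and compares it with the Poisson pmf whose mean is the sum of the two means. The helper names `poisson_pmf` and `sum_pmf` and the values 1.5 and 2.5 are illustrative assumptions, not part of the notes.

```python
import math

def poisson_pmf(k, lam):
    """P(N = k) for a Poisson random variable N with mean lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)

def sum_pmf(z, lam1, lam2):
    """P(X + Y = z) via the convolution sum over x = 0, ..., z."""
    return sum(poisson_pmf(x, lam1) * poisson_pmf(z - x, lam2)
               for x in range(z + 1))

# Illustrative means (assumed values, chosen only for the check).
lam1, lam2 = 1.5, 2.5

# Largest discrepancy between the convolution and Poisson(lam1 + lam2).
max_gap = max(abs(sum_pmf(z, lam1, lam2) - poisson_pmf(z, lam1 + lam2))
              for z in range(20))
print(max_gap)
```

The sum runs only over x = 0, ..., z because a Poisson random variable puts no mass on negative integers, so every other term of the convolution is zero.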


Sums of Continuous Independent Random Variables:

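Suppose that X and Y are continuous independent random variables. Let $Z = X + Y$. The CDF of Z is

$$
\begin{aligned}
F_Z(a) &= P(Z \le a) \\
&= P(X + Y \le a) \\
&= \iint_{x + y \le a} f_{XY}(x, y)\, dx\, dy \\
&= \iint_{x + y \le a} f_X(x)\, f_Y(y)\, dx\, dy \quad \text{(using X, Y independent)} \\
&= \int_{-\infty}^{\infty} \int_{-\infty}^{a - y} f_X(x)\, f_Y(y)\, dx\, dy \\
&= \int_{-\infty}^{\infty} \left( \int_{-\infty}^{a - y} f_X(x)\, dx \right) f_Y(y)\, dy \\
&= \int_{-\infty}^{\infty} F_X(a - y)\, f_Y(y)\, dy
\end{aligned}
$$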

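By differentiating the cdf, we obtain the pdf of $Z = X + Y$:

$$
\begin{aligned}
f_Z(a) &= \frac{d}{da} \int_{-\infty}^{\infty} F_X(a - y)\, f_Y(y)\, dy \\
&= \int_{-\infty}^{\infty} \frac{d}{da} F_X(a - y)\, f_Y(y)\, dy \\
&= \int_{-\infty}^{\infty} f_X(a - y)\, f_Y(y)\, dy
\end{aligned}
$$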

Example 2: Sum of independent uniform random variables. If X and Y are two independent random variables, both uniformly distributed on (0,1), calculate the pdf of $Z = X + Y$.
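As a numerical companion to Example 2, the convolution integral $\int f_X(a - y) f_Y(y)\, dy$ can be approximated with a midpoint Riemann sum. This is only a sketch under stated assumptions: the function names and the grid size `n` are illustrative choices, and the values are compared against the triangular density ($a$ on $[0,1]$, $2 - a$ on $(1,2)$) that the hand calculation should produce.

```python
def uniform_pdf(x):
    """Density of a Uniform(0, 1) random variable."""
    return 1.0 if 0.0 < x < 1.0 else 0.0

def conv_pdf(a, n=10_000):
    """Midpoint-rule approximation of the integral of f_X(a - y) f_Y(y) dy.

    f_Y vanishes outside (0, 1), so the integral is taken over (0, 1) only.
    """
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        y = (i + 0.5) * h              # midpoint of the i-th subinterval
        total += uniform_pdf(a - y) * uniform_pdf(y)
    return total * h

for a in (0.25, 0.5, 1.0, 1.5, 2.5):
    print(a, conv_pdf(a))
```

The integrand is nonzero only when both $0 < y < 1$ and $0 < a - y < 1$, which is the same case split needed when working the integral by hand.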


Sum of independent normal random variables:

Proposition 6.3.2: If $X_i$, $i = 1, \ldots, n$, are independent random variables that are normally distributed with respective means and variances $\mu_i, \sigma_i^2$, $i = 1, \ldots, n$, then $\sum_{i=1}^{n} X_i$ is normally distributed with mean $\sum_{i=1}^{n} \mu_i$ and variance $\sum_{i=1}^{n} \sigma_i^2$.

The book contains a proof of Proposition 6.3.2 that calculates the distribution of $X_1 + X_2$ using the formula for the sum of two independent random variables established above, and then applies induction. We will prove Proposition 6.3.2 in Chapter 7.7 using Moment Generating Functions.
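For the case n = 2, Proposition 6.3.2 can be sanity-checked by evaluating the convolution integral numerically and comparing it with the normal density that has the summed mean and summed variance. The sketch below assumes illustrative parameter values and a truncated integration range; the names `normal_pdf` and `conv_pdf` are hypothetical helpers, and a numerical agreement is not a substitute for the proof.

```python
import math

def normal_pdf(x, mu, var):
    """Density of a normal random variable with mean mu and variance var."""
    return math.exp(-(x - mu) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def conv_pdf(a, mu1, var1, mu2, var2, lo=-40.0, hi=40.0, n=8000):
    """Midpoint-rule approximation of the integral of f_X1(a - y) f_X2(y) dy.

    The range (lo, hi) truncates the real line; it is an assumed range,
    wide enough here that the neglected tails are negligible.
    """
    h = (hi - lo) / n
    total = 0.0
    for i in range(n):
        y = lo + (i + 0.5) * h
        total += normal_pdf(a - y, mu1, var1) * normal_pdf(y, mu2, var2)
    return total * h

# Illustrative parameters (assumed values).
mu1, var1 = 1.0, 4.0
mu2, var2 = -2.0, 9.0

max_gap = max(abs(conv_pdf(a, mu1, var1, mu2, var2)
                  - normal_pdf(a, mu1 + mu2, var1 + var2))
              for a in (-5.0, -1.0, 0.0, 3.0))
print(max_gap)
```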


Example 3: A club basketball team will play a 44-game

season. Twenty-six of these games are against class A

teams and 18 are against class B teams. Suppose that the

team will win each game against a class A team with probability

.4 and will win each game against a class B team with

probability .7. Assume also that the results of the different

games are independent. Approximate the probability that

the team will win 25 games or more.
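One way to set up the approximation in this example is to write the total number of wins as the sum of two independent binomial counts, one per class of opponent, and to approximate that sum by a normal distribution with the same mean and variance. The sketch below makes two assumptions that the notes do not state: it applies a continuity correction (using 24.5 rather than 25), and it computes the exact probability by discrete convolution purely for comparison. All variable names are illustrative.

```python
import math

def binom_pmf(k, n, p):
    """P(B = k) for a Binomial(n, p) random variable B."""
    return math.comb(n, k) * p ** k * (1.0 - p) ** (n - k)

def normal_cdf(z):
    """Standard normal cdf, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

n_a, p_a = 26, 0.4   # games against class A teams, win probability
n_b, p_b = 18, 0.7   # games against class B teams, win probability

# Mean and variance of the total number of wins (independent sums add).
mean = n_a * p_a + n_b * p_b
var = n_a * p_a * (1.0 - p_a) + n_b * p_b * (1.0 - p_b)

# Normal approximation to P(wins >= 25) with a continuity correction.
approx = 1.0 - normal_cdf((24.5 - mean) / math.sqrt(var))

# Exact value from the convolution of the two binomial pmfs.
exact = sum(binom_pmf(i, n_a, p_a) * binom_pmf(j, n_b, p_b)
            for i in range(n_a + 1)
            for j in range(n_b + 1)
            if i + j >= 25)

print(mean, var, approx, exact)
```

Each binomial count is itself approximately normal, so Proposition 6.3.2 is what justifies treating their sum as approximately normal with the added means and variances.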


II. Conditional Distributions (Chapters 6.4-6.5)

(1) The Discrete Case:

Suppose X and Y are discrete random variables. The conditional probability mass function of X given $Y = y_j$ is the conditional probability distribution of X given $Y = y_j$. The conditional probability mass function of X|Y is

$$
p_{X|Y}(x_i \mid y_j) = P(X = x_i \mid Y = y_j) = \frac{P(X = x_i,\; Y = y_j)}{P(Y = y_j)} = \frac{p_{X,Y}(x_i, y_j)}{p_Y(y_j)}
$$

(this assumes $P(Y = y_j) > 0$). This is just the conditional probability of the event $X = x_i$ given that $Y = y_j$.

If X and Y are independent random variables, then the

conditional probability mass function is the same as the

unconditional one. This follows because if X is

independent of Y, then

$$
\begin{aligned}
p_{X|Y}(x \mid y) &= P(X = x \mid Y = y) \\
&= \frac{P(X = x,\; Y = y)}{P(Y = y)} \\
&= \frac{P(X = x)\, P(Y = y)}{P(Y = y)} \\
&= P(X = x)
\end{aligned}
$$


Example 3: In Notes 17, we considered the situation in which a fair coin is tossed three times independently. Let X denote the number of heads on the first toss and Y denote the total number of heads.

The joint pmf is given in the following table:

| X \ Y | 0 | 1 | 2 | 3 |
|---|---|---|---|---|
| 0 | 1/8 | 2/8 | 1/8 | 0 |
| 1 | 0 | 1/8 | 2/8 | 1/8 |

What is the conditional probability mass function of X

given Y? Are X and Y independent?
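The definition $p_{X|Y}(x \mid y) = p_{X,Y}(x, y) / p_Y(y)$ can be applied mechanically to the joint pmf of the coin-toss example. The sketch below uses exact fractions; the dictionary layout and the variable names are illustrative choices rather than anything prescribed by the notes.

```python
from fractions import Fraction as F

# Joint pmf p(x, y): x = heads on the first toss, y = total number of heads.
joint = {
    (0, 0): F(1, 8), (0, 1): F(2, 8), (0, 2): F(1, 8), (0, 3): F(0),
    (1, 0): F(0),    (1, 1): F(1, 8), (1, 2): F(2, 8), (1, 3): F(1, 8),
}
xs, ys = (0, 1), (0, 1, 2, 3)

# Marginal pmfs: sum the joint pmf over the other variable.
p_x = {x: sum(joint[(x, y)] for y in ys) for x in xs}
p_y = {y: sum(joint[(x, y)] for x in xs) for y in ys}

# Conditional pmf of X given Y = y, defined wherever p_Y(y) > 0.
cond = {(x, y): joint[(x, y)] / p_y[y] for x in xs for y in ys if p_y[y] > 0}

# X and Y are independent only if the joint pmf factors at every (x, y).
independent = all(joint[(x, y)] == p_x[x] * p_y[y] for x in xs for y in ys)

for y in ys:
    print(y, [cond[(x, y)] for x in xs])
print(independent)
```

A single pair (x, y) at which the joint pmf differs from the product of the marginals is enough to rule out independence.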

(2) Continuous Case

If X and Y have a joint probability density function $f(x, y)$, then the conditional pdf of X, given that $Y = y$, is defined for all values of y such that $f_Y(y) > 0$, by

$$
f_{X|Y}(x \mid y) = \frac{f_{X,Y}(x, y)}{f_Y(y)} .
$$

To motivate this definition, multiply the left-hand side by $dx$ and the right-hand side by $(dx\, dy)/dy$ to obtain

$$
\begin{aligned}
f_{X|Y}(x \mid y)\, dx &= \frac{f_{X,Y}(x, y)\, dx\, dy}{f_Y(y)\, dy} \\
&\approx \frac{P\{x \le X \le x + dx,\; y \le Y \le y + dy\}}{P\{y \le Y \le y + dy\}} \\
&= P\{x \le X \le x + dx \mid y \le Y \le y + dy\}
\end{aligned}
$$

In other words, for small values of $dx$ and $dy$, $f_{X|Y}(x \mid y)\, dx$ represents the conditional probability that X is between $x$ and $x + dx$ given that Y is between $y$ and $y + dy$.

The use of conditional densities allows us to define

conditional probabilities of events associated with one

random variable when we are given the value of a second

random variable. That is, if X and Y are jointly continuous,

then for any set A,

$$
P\{X \in A \mid Y = y\} = \int_A f_{X|Y}(x \mid y)\, dx .
$$

In particular, by letting $A = (-\infty, a]$, we can define the conditional cdf of X given that $Y = y$ by

$$
F_{X|Y}(a \mid y) = P(X \le a \mid Y = y) = \int_{-\infty}^{a} f_{X|Y}(x \mid y)\, dx .
$$


Example 4: Suppose X and Y are two independent random variables, both uniformly distributed on (0,1). Let $T_1 = \min\{X, Y\}$, $T_2 = \max\{X, Y\}$. What is the conditional distribution of $T_2$ given that $T_1 = t$? Are $T_1$ and $T_2$ independent?
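A simulation can suggest what the answer to Example 4 should look like before the conditional density is derived. The sketch below is a rough Monte Carlo check, not a derivation: it approximates the event $T_1 = t$ by a narrow window around $t$, and the seed, sample size, window half-width, and the value t = 0.3 are all assumed for illustration. If the conditional distribution of $T_2$ is uniform on $(t, 1)$, the retained values should average about $(1 + t)/2$.

```python
import random

rng = random.Random(0)        # fixed seed so the run is reproducible
t, half_width = 0.3, 0.01     # condition on T1 falling within t +/- half_width

kept = []                     # values of T2 from pairs whose T1 is near t
for _ in range(200_000):
    x, y = rng.random(), rng.random()
    t1, t2 = min(x, y), max(x, y)
    if abs(t1 - t) < half_width:
        kept.append(t2)

cond_mean = sum(kept) / len(kept)
print(len(kept), min(kept), max(kept), cond_mean)
```

Every retained $T_2$ is at least as large as its $T_1$, so the range of $T_2$ moves with the value of $T_1$, which bears directly on the independence question.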
