LINEARLY INDEPENDENT VECTORS AND THEIR APPLICATIONS

BY

ROBERT JEFFREY TAYLOR

B.S. Georgia Southern University, 1997

A Project Submitted to the Faculty of the College of Graduate Studies at Georgia

Southern University in Partial Fulfillment of the Requirements of the Degree

Master of Science in Applied Mathematics

Statesboro, Georgia

2006

LINEARLY INDEPENDENT VECTORS AND THEIR APPLICATIONS

BY

ROBERT JEFFREY TAYLOR

________________________________________

Dr. Stephen Damelin, Chairperson

________________________________________

Dr. Grzegorz Michalski, Secondary Chairperson

_________________________________________

Dr. David Stone, Internal Examiner

________________________________________

Dr. Yan Wu, Internal Examiner

__________________________________________

Professor Victor Reiner, External Examiner

__________________________________________

Dr. Jimmy Solomon, Graduate Director

Table of Contents

Abstract

Acknowledgements

Chapter 1: Introduction

Chapter 2: Basic Definitions

Chapter 3: Properties

Chapter 4: k – Independence Theorems

Chapter 5: Applications

References

Abstract

This thesis studies linearly independent vectors and their applications. We provide an introduction to the problem and basic definitions, and we establish many new properties of sets of k – independent vectors. We also discuss various applications to combinatorial designs and graphs.

Acknowledgements

I would like to thank my family, friends, and coworkers for all of their encouragement

and support. I’d also like to thank Dr. Steven Damelin, Dr. Grzegorz Michalski, Dr. Yan

Wu, and Dr. David Stone for serving on my research committee. I’d especially like to

show gratitude to Dr. Damelin and Dr. Michalski for all of their guidance and supervision

throughout the last year. I’d also like to thank Professor Victor Reiner for reading this

thesis, and for many useful comments – in particular the topics in Chapter 5.


Chapter 1

Introduction

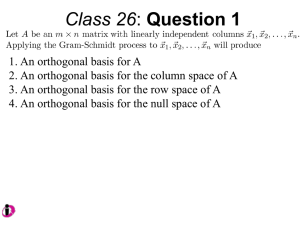

This project deals with the study of k – independent vectors and their applications. In Chapter 2, we will discuss basic definitions. In Chapter 3, we will look at basic properties of k – independence. In Chapter 4, we will delve further into the properties of k – independence using the results of two papers: Damelin, Michalski, Mullen, and Stone, and Damelin, Michalski, and Mullen. In Chapter 5, we will study applications of k – independence to combinatorial designs and graphs.

Chapter 2

Basic Definitions

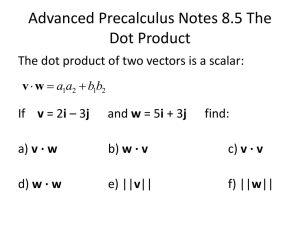

Throughout this paper, V will denote a vector space over a field F. We will also use the following notation: 0 is the null vector, $|X|$ is the cardinality of X, $V^* = V - \{0\}$, and $F^* = F - \{0\}$.

Let $X \subseteq V$ and let l be an integer with $0 \le l \le |X|$. We define $LC_l(X)$, the set of all l – linear combinations of elements from X, as follows: $LC_0(X) = \{0\}$, and for $l \ge 1$,

$$LC_l(X) = \left\{ \sum_{i=1}^{l} c_i x_i : c_i \in F^*,\ x_i \in X,\ \text{and } x_1, \dots, x_l \text{ are all different} \right\}. \qquad (1)$$

We shall also use the following symbols:

$$LC_{\le l}(X) = \bigcup_{0 \le j \le l} LC_j(X), \qquad LC_{< l}(X) = \bigcup_{0 \le j < l} LC_j(X),$$

as well as their "starred" versions:

$$LC^*_{\le l}(X) = \bigcup_{0 < j \le l} LC_j(X), \qquad LC^*_{< l}(X) = \bigcup_{0 < j < l} LC_j(X).$$

The set of all linear combinations of elements from X is defined by $LC(X) := LC_{\le |X|}(X)$. The set of all nontrivial linear combinations is defined as $LC^*(X) := LC^*_{\le |X|}(X)$.

Next, we will define linear dependence in terms of the notions introduced above. We begin by introducing conditions D1(X) and D2(X) as follows.

D1(X): There exists $x \in X$ such that $x \in LC(X - \{x\})$.

D2(X): $0 \in LC^*(X)$.
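The definitions above can be tried out on small examples. The following is a minimal sketch, assuming the field is $F_p$ for a prime p and that vectors are stored as tuples of integers; the function names `lc`, `lc_upto`, `d1` and `d2` are illustrative choices and do not come from the thesis.

```python
from itertools import combinations, product

def lc(X, l, p, n):
    """LC_l(X) over F_p: all sums c_1 x_1 + ... + c_l x_l with nonzero
    coefficients c_i and l distinct vectors x_i taken from X."""
    if l == 0:
        return {(0,) * n}
    out = set()
    for xs in combinations(X, l):
        for cs in product(range(1, p), repeat=l):
            out.add(tuple(sum(c * x[j] for c, x in zip(cs, xs)) % p
                          for j in range(n)))
    return out

def lc_upto(X, lo, p, n):
    """Union of LC_j(X) for lo <= j <= |X| (lo=0 gives LC, lo=1 gives LC*)."""
    result = set()
    for j in range(lo, len(X) + 1):
        result |= lc(X, j, p, n)
    return result

def d1(X, p, n):
    """D1(X): some x in X lies in LC(X - {x})."""
    return any(x in lc_upto([y for y in X if y != x], 0, p, n) for x in X)

def d2(X, p, n):
    """D2(X): the zero vector lies in LC*(X)."""
    return (0,) * n in lc_upto(X, 1, p, n)

X = [(1, 0), (0, 1), (1, 1)]     # a dependent set in F_3^2
Y = [(1, 0), (0, 1)]             # an independent set in F_3^2
print(d1(X, 3, 2), d2(X, 3, 2))  # both conditions hold for X
print(d1(Y, 3, 2), d2(Y, 3, 2))  # neither condition holds for Y
```

The enumeration is exponential in $|X|$, so it is only meant for checking the definitions on tiny sets, not as an efficient independence test.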

Observation 2.1

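For any $X \subseteq V$, D1(X) and D2(X) are equivalent.

Proof

D1(X) $\Rightarrow$ D2(X): Assume D1(X). Let $x_0 \in X$ be the witness x as in D1(X); i.e. $x_0 \in LC_l(X - \{x_0\})$, for some l with $0 \le l \le |X| - 1$.

Case 1: l = 0. Then $x_0 \in LC_0(X - \{x_0\}) = \{0\}$, so $0 = x_0 = 1 \cdot x_0 \in LC_1(X) \subseteq LC^*(X)$; i.e. D2(X) holds.

Case 2: $l \ge 1$. Then $x_0 \in LC_l(X - \{x_0\})$; i.e. $x_0 = \sum_{i=1}^{l} c_i x_i$, where $c_i \in F^*$ for $i = 1, 2, \dots, l$ and $x_1, x_2, \dots, x_l \in X - \{x_0\}$ are all different. In particular,

$$0 = \sum_{i=1}^{l} c_i x_i - x_0 \in LC_{l+1}(X) \subseteq LC^*(X);$$

i.e. D2(X) holds.

D2(X) $\Rightarrow$ D1(X): Assume D2(X); i.e. $0 \in LC_l(X)$ for some $l \ge 1$. Say,

$$0 = \sum_{i=1}^{l} c_i x_i \qquad (1)$$

where $c_i \in F^*$ for $i = 1, 2, \dots, l$ and $x_1, \dots, x_l \in X$ are all different. By solving (1) for $x_1$, we get

$$x_1 = \sum_{i=2}^{l} \left(-\frac{c_i}{c_1}\right) x_i,$$

which shows that $x_1 \in LC_{l-1}(X - \{x_1\}) \subseteq LC(X - \{x_1\})$; i.e. $x_1$ is the witness x in D1(X).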

□

Definition 2.2

$X \subseteq V$ is said to be linearly dependent, denoted as D(X), if either of the equivalent conditions D1(X) or D2(X) is satisfied. If X is not linearly dependent, then it is said to be linearly independent, which is denoted by I(X). Alternatively, X is linearly independent if it satisfies either of the equivalent conditions:

I1(X): $\forall x \in X$, $x \notin LC(X - \{x\})$

I2(X): $0 \notin LC^*(X)$

which are the negations of D1(X) and D2(X), respectively.

From now on, we assume the dimension of the space V is finite: dim(V) = n, for some n.

Observation 2.3

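$LC(X) = LC_{\le \min(|X|, n)}(X)$.

Proof

This is clear if $|X| \le n$. Let's assume then that $|X| > n$. We need to show that $LC_{\le |X|}(X) = LC_{\le n}(X)$. The inclusion $LC_{\le |X|}(X) \supseteq LC_{\le n}(X)$ is clear because $|X| > n$. The inclusion $LC_{\le |X|}(X) \subseteq LC_{\le n}(X)$ can be proven by mathematical induction on $l = |X| - n$.

Let $S_l$ be the following statement:

$$\forall X \subseteq V, \quad |X| = n + l \ \Rightarrow\ LC_{\le |X|}(X) \subseteq LC_{\le n}(X).$$

We need to verify that the following 2 conditions hold:

1) $S_0$

2) $S_l \Rightarrow S_{l+1}$

1) is clear: $S_0$ states that $\forall X$, $|X| = n \Rightarrow LC_{\le |X|}(X) \subseteq LC_{\le n}(X)$.

To verify 2), assume $S_l$ for some $l \ge 0$. We need to show that $S_{l+1}$ holds. Let X be such that $|X| = n + l + 1$. We need to show

(*) $LC_{\le |X|}(X) \subseteq LC_{\le n}(X)$.

Since $|X| > n$, D1(X) is true. Let $\tilde{x} \in X$ be the witness x in D1(X):

(**) $\tilde{x} \in LC(X - \{\tilde{x}\})$.

Since $|X - \{\tilde{x}\}| = n + l$, $S_l$ implies

(***) $LC_{\le |X - \{\tilde{x}\}|}(X - \{\tilde{x}\}) \subseteq LC_{\le n}(X - \{\tilde{x}\})$.

To prove (*), let $v \in LC_{\le |X|}(X)$; i.e. $v = \sum_{i=1}^{s} c_i x_i$, where $s \le |X|$, $c_i \in F^*$, and the $x_i \in X$ are all different for $i = 1, 2, \dots, s$. We need to show that $v \in LC_{\le n}(X)$.

Case 1: $x_i \ne \tilde{x}$ for all $i = 1, 2, \dots, s$. Then by (***)

$$v \in LC_{\le |X - \{\tilde{x}\}|}(X - \{\tilde{x}\}) \subseteq LC_{\le n}(X - \{\tilde{x}\}) \subseteq LC_{\le n}(X).$$

Case 2: $\tilde{x} = x_{i_0}$ for some $i_0 \in \{1, 2, \dots, s\}$. Let $r < |X|$, $d_1, \dots, d_r \in F^*$, and $y_1, \dots, y_r \in X - \{\tilde{x}\}$ be such that $\tilde{x} = \sum_{i=1}^{r} d_i y_i$. See (**). Then

$$v = \sum_{i=1}^{s} c_i x_i = \sum_{i \ne i_0} c_i x_i + c_{i_0} x_{i_0} = \sum_{i \ne i_0} c_i x_i + c_{i_0} \sum_{i=1}^{r} d_i y_i = \sum_{i \ne i_0} c_i x_i + \sum_{i=1}^{r} c_{i_0} d_i y_i.$$

After collecting like terms, v is a linear combination of elements of $X - \{\tilde{x}\}$. Then by (***) $v \in LC_{\le |X - \{\tilde{x}\}|}(X - \{\tilde{x}\}) \subseteq LC_{\le n}(X - \{\tilde{x}\}) \subseteq LC_{\le n}(X)$. This completes the proof.

□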

Now, we will generalize the notion of linear dependence (and therefore that of independence) by relativizing conditions D1 and D2 to a positive integer k, as follows.

D1(X, k): There exists $x \in X$ such that $x \in LC_{<k}(X - \{x\})$.

D2(X, k): $0 \in LC^*_{\le k}(X)$.

The next observation can be viewed as a generalization of Observation 2.1. Its proof follows that of Observation 2.1, with extra attention being paid to the number of vectors in the linear combination.

Observation 2.4

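For any $X \subseteq V$, D1(X, k) $\Leftrightarrow$ D2(X, k).

Proof

D1(X, k) $\Rightarrow$ D2(X, k): Assume D1(X, k). Let $x_0 \in X$ be as in D1(X, k); i.e. $x_0 \in LC_l(X - \{x_0\})$, for some l with $0 \le l < k$.

Case 1: l = 0. Then $x_0 \in LC_0(X - \{x_0\}) = \{0\}$, so $0 = x_0 = 1 \cdot x_0 \in LC_1(X) \subseteq LC^*_{\le k}(X)$; i.e. D2(X, k) holds.

Case 2: $1 \le l < k$. Then $x_0 \in LC_l(X - \{x_0\})$; i.e. $x_0 = \sum_{i=1}^{l} c_i x_i$, where $c_i \in F^*$ and $x_1, x_2, \dots, x_l \in X - \{x_0\}$ are all different. In particular,

$$0 = \sum_{i=1}^{l} c_i x_i - x_0 \in LC_{l+1}(X) \subseteq LC^*_{\le k}(X);$$

i.e. D2(X, k) holds.

D2(X, k) $\Rightarrow$ D1(X, k): Assume D2(X, k); i.e. $0 \in LC_l(X)$ for some l with $1 \le l \le k$. Say,

$$0 = \sum_{i=1}^{l} c_i x_i \qquad (2)$$

where $c_i \in F^*$, $x_i \in X$, and $x_1, \dots, x_l$ are all different. Solving (2) for $x_1$, we get

$$x_1 = \sum_{i=2}^{l} \left(-\frac{c_i}{c_1}\right) x_i,$$

which shows that $x_1 \in LC_{l-1}(X - \{x_1\}) \subseteq LC_{<k}(X - \{x_1\})$; i.e. $x_1$ is as in D1(X, k).

□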

Definition 2.5

$X \subseteq V$ is said to be k – dependent, denoted as D(X, k), if either of the equivalent conditions D1(X, k) or D2(X, k) is satisfied. If X is not k – dependent, then it is said to be k – independent, denoted as I(X, k).

Let I1(X, k) and I2(X, k) denote the negations of D1(X, k) and D2(X, k), respectively.

I1(X, k): $\forall x \in X$, $x \notin LC_{<k}(X - \{x\})$

I2(X, k): $0 \notin LC^*_{\le k}(X)$

In other words, X is k – independent if either of the equivalent conditions I1(X, k) or I2(X, k) is satisfied.
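As an illustration of Definition 2.5, here is a small sketch of both conditions over the two-element field $F_2$, where every nonzero coefficient equals 1 and a vector can be stored as an integer bitmask (so vector addition is XOR). The bitmask encoding and the names `i1` and `i2` are assumptions made for this example only.

```python
from itertools import combinations
from functools import reduce
from operator import xor

def i2(vectors, k):
    """I2(X, k): no sum of j distinct vectors of X, 1 <= j <= k, is zero."""
    vs = sorted(set(vectors))
    return all(reduce(xor, sub) != 0
               for j in range(1, min(k, len(vs)) + 1)
               for sub in combinations(vs, j))

def i1(vectors, k):
    """I1(X, k): no x in X is a sum of fewer than k other vectors of X."""
    vs = sorted(set(vectors))
    for x in vs:
        rest = [y for y in vs if y != x]
        for j in range(0, min(k - 1, len(rest)) + 1):
            if any(reduce(xor, sub, 0) == x for sub in combinations(rest, j)):
                return False
    return True

B = [0b100, 0b010, 0b001]      # standard basis of F_2^3
S = B + [0b111]                # basis plus the all-ones vector
print(i2(S, 3), i2(S, 4))      # True False
```

The set S is 3 – independent but not 4 – independent, because its four vectors sum to zero while no three of them do.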


Chapter 3

Properties of k - Independence

In Chapter 2, we defined k – independence. We will now explore some of the properties of k – independence.

First, we observe that if $k' \le k$, then k – independence implies k' – independence.

Observation 3.1

If X is k – independent, then X is k' – independent for every $k' \le k$.

Proof

Suppose $k' \le k$ and, to the contrary, X is k' – dependent. Then $0 \in LC^*_{\le k'}(X) \subseteq LC^*_{\le k}(X)$, which contradicts the k – independence of X.

□

We will now look more closely at the topic of linear dependence.

Observation 3.2

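Let $X \subseteq V$ with dim(V) = n. The following conditions are equivalent:

a) D(X)

b) D(X, n + 1)

c) $\exists k \ge n + 1$, D(X, k)

Proof

$a \Rightarrow b$

From D1(X), there is $x \in X$ with $x \in LC(X - \{x\})$. We need to show that $x \in LC_{<n+1}(X - \{x\})$. From Observation 2.3 applied to $X - \{x\}$,

$$LC(X - \{x\}) = LC_{\le \min(|X - \{x\}|, n)}(X - \{x\}) \subseteq LC_{\le n}(X - \{x\}) = LC_{<n+1}(X - \{x\}).$$

So D1(X, n + 1) holds.

$b \Rightarrow c$

The proof of this is clear.

$c \Rightarrow a$

Suppose $k \ge n + 1$ is such that D2(X, k); i.e. $0 \in LC^*_{\le k}(X) \subseteq LC^*(X)$. So D2(X) holds.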

□

By replacing the conditions of Observation 3.2 with their negations, we obtain the following.

Corollary 3.3

Let $X \subseteq V$ with dim(V) = n. The following conditions are equivalent:

a) I(X)

b) I(X, n + 1)

c) $\forall k \ge n + 1$, I(X, k)

Since k – independence coincides with linear independence for every $k \ge n + 1$, we can now restrict our investigation to $k \le n$.

Next, we show that a set is k – independent if and only if each of its subsets with at most k elements is linearly independent.

Observation 3.4

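The following statements are equivalent.

a) X is k – independent.

b) $\forall Y \subseteq X$ with $|Y| \le k$, Y is linearly independent.

c) $\forall Y \subseteq X$, Y is k – independent.

Proof

$a \Rightarrow b$

We prove the equivalent statement: if there is $Y \subseteq X$ with $|Y| \le k$ and D2(Y), then D2(X, k) holds. Let $Y \subseteq X$ with $|Y| \le k$ and D2(Y). Then $0 \in LC^*(Y) \subseteq LC^*_{\le k}(X)$. So D2(X, k) holds.

$b \Rightarrow c$

Let $Y \subseteq X$. We need to show I(Y, k); e.g. I2(Y, k). Suppose otherwise; i.e. $0 \in LC^*_{\le k}(Y)$. Say $0 \in LC_{|Z|}(Z) \subseteq LC^*(Z)$, where $Z \subseteq Y$ and $1 \le |Z| \le k$. In particular, we have D2(Z), which contradicts b.

$c \Rightarrow a$

The implication is clear.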

□

Note that by the previous observation and Corollary 3.3, in particular, we can conclude

that a set is linearly independent if and only if each of its subsets is linearly independent.

Now, we can introduce the notion of maximal k – independence.

Definition 3.5

$W \subseteq V$ is said to be maximal k – independent if W is k – independent and no proper superset of W in V is k – independent.

Remark 3.6

Suppose $W \subseteq V$ is k – independent. W is maximal k – independent if and only if

(*) $\forall v \in V - W$, $W \cup \{v\}$ is k – dependent.

Proof

Suppose W is maximal k – independent. Let $v \in V - W$. Then $W \cup \{v\}$ must be k – dependent, because otherwise it would be a k – independent proper superset of W, which would contradict the maximality of W.

Suppose (*), and suppose to the contrary that W is k – independent but not maximal; i.e. there is some k – independent $U \subseteq V$ which is a proper superset of W. Let $v \in U - W$. Then $W \cup \{v\}$, as a subset of U, is k – independent (by Observation 3.4), which contradicts (*).

□

From now on $F = F_q$ is a finite field, and V is a finite-dimensional vector space over F.

Now, we can take a look at maximal k – independent subsets.

Observation 3.7

Every k – independent subset of V is contained in a maximal k – independent subset of V.

Proof

Let $W \subseteq V$ be k – independent. We shall construct a maximal k – independent set $U \subseteq V$ such that $W \subseteq U$. If W is maximal k – independent, then let U = W. Otherwise, by Remark 3.6, there is $v_0 \in V - W$ such that $W \cup \{v_0\}$ is k – independent. If $W \cup \{v_0\}$ is maximal k – independent, then let $U = W \cup \{v_0\}$. Otherwise, by Remark 3.6, there is $v_1 \in V - (W \cup \{v_0\})$ such that $W \cup \{v_0, v_1\}$ is k – independent. If this set is maximal, then let $U = W \cup \{v_0, v_1\}$. Otherwise, by Remark 3.6, there is $v_2 \in V - (W \cup \{v_0, v_1\})$ such that $W \cup \{v_0, v_1, v_2\}$ is k – independent, etc. Since V is finite, this process must stop: in the end, we will have generated a sequence $v_0, v_1, \dots, v_s$ of vectors in V, for some $s < |V|$, such that $U = W \cup \{v_0, v_1, \dots, v_s\}$ is maximal k – independent.

□

Combining Observation 3.7 and Observation 3.4, we see that the k – independent subsets of V are precisely the subsets of the maximal k – independent subsets of V.

Next, we observe that a maximal linearly independent subset of a set W spans W.

Lemma 3.8

Suppose $C \subseteq W \subseteq V$, where C is a linearly independent subset of W and maximal among all such subsets of W. Then every element of W is a linear combination of elements of C; i.e. $W \subseteq LC(C)$.

Proof

Let $v \in W$. If $v \in C$, then $v = 1 \cdot v \in LC(C)$. Suppose $v \in W - C$. Then $C \cup \{v\}$ is linearly dependent by the maximality of C. So, by D1($C \cup \{v\}$), $C \cup \{v\}$ has an element, call it u, such that

(*) $u \in LC((C \cup \{v\}) - \{u\})$;

i.e.

(**) $u = \sum_{i=1}^{m} c_i x_i + cv$, for some $m \ge 0$, $c_1, \dots, c_m \in F^*$, $c \in F$, and distinct $x_i \in C - \{u\}$.

If u = v, then by (*) $v \in LC(C)$, and the proof is complete.

If $u \ne v$, then

(***) $u \in C$,

and the coefficient c in (**) is different from zero. (Otherwise, (**) implies that $u \in LC(C - \{u\})$; i.e. D1(C) holds, contradicting the assumption that C is linearly independent.) Solving (**) for v, we get

$$v = \frac{1}{c} u - \sum_{i=1}^{m} \frac{c_i}{c} x_i \in LC(C)$$

(cf. (***)).

□

We can generalize this Lemma for k – independence.

Lemma 3.9

Suppose $C \subseteq W \subseteq V$, where C is a k – independent subset of W and maximal among all such subsets of W. Then every element of W is a linear combination of fewer than k elements of C; i.e. $W \subseteq LC_{<k}(C)$.

Proof

Let $v \in W$. If $v \in C$, then $v = 1 \cdot v \in LC_{<k}(C)$. Suppose $v \in W - C$. Then $C \cup \{v\}$ is k – dependent by the maximality of C. So, by D1($C \cup \{v\}$, k), $C \cup \{v\}$ has an element, call it u, such that

(●) $u \in LC_{<k}((C \cup \{v\}) - \{u\})$;

i.e.

(●●) $u = \sum_{i=1}^{m} c_i x_i + cv$, where $0 \le m < k$, $c_1, \dots, c_m \in F^*$, $c \in F$, and the $x_i \in C - \{u\}$ are distinct.

If $c \ne 0$, then $m < k - 1$. If u = v, then by (●) $v \in LC_{<k}(C)$, and the proof is complete.

If $u \ne v$, then

(●●●) $u \in C$.

Since C is k – independent, it follows that $c \ne 0$. (Otherwise, (●●) implies that $u \in LC_{<k}(C - \{u\})$; i.e. D1(C, k) holds. This contradicts the k – independence of C.) Solving (●●) for v, we get

$$v = \frac{1}{c} u - \sum_{i=1}^{m} \frac{c_i}{c} x_i.$$

This expresses v using $m + 1 < k$ elements of C. In particular, $v \in LC_{<k}(C)$ (cf. (●●●)).

□

For a k – independent set to be maximal, every vector of V must be expressible as a linear combination of fewer than k elements of the set.

Observation 3.10

Suppose $W \subseteq V$ is k – independent. Then W is maximal k – independent if and only if every vector in V can be written as a linear combination of fewer than k elements of W; i.e.

(*) $V \subseteq LC_{<k}(W)$.

Proof

If W is maximal k – independent, then $V \subseteq LC_{<k}(W)$ by Lemma 3.9, applied with V in the role of W and W in the role of C. Now, suppose (*) holds and, to the contrary, W is not maximal k – independent; i.e. there is a k – independent set W' such that $W \subsetneq W' \subseteq V$. Let $v \in W' - W$. By (*), $v \in LC_{<k}(W) \subseteq LC_{<k}(W' - \{v\})$; i.e. D1(W', k) holds, which contradicts the k – independence of W'.

□

Observation 3.11

If X is a maximal k – independent subset of V, then X includes a basis (as a subset).

Proof

Let $B \subseteq X$ be such that B is linearly independent and maximal among all linearly independent subsets of X. We will show that B is a basis of V; i.e. we need to prove that $V \subseteq LC(B)$.

By Lemma 3.9 applied to C = X, W = V, we have $V \subseteq LC_{<k}(X) \subseteq LC(X)$. So now we need to show $LC(X) \subseteq LC(B)$.

By Lemma 3.8 applied to C = B, W = X, we have $X \subseteq LC(B)$, and $X \subseteq LC(B)$ implies $LC(X) \subseteq LC(B)$. So $V \subseteq LC(B)$, and B is a basis of V.

□

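k – independence is preserved under taking pre-images by linear transformations. Recall that $\varphi^{-1}(Y) = \{x \in V : \varphi(x) \in Y\}$.

Observation 3.12

Suppose $\varphi : V \to V'$ is a linear transformation and $Y \subseteq V'$. If Y is k – independent, then its pre-image under $\varphi$, $\varphi^{-1}(Y)$, is k – independent. Moreover, if $\varphi$ is one-to-one and Y is maximal k – independent, then $\varphi^{-1}(Y)$ is maximal k – independent.

Proof

First we show k – independence of $\varphi^{-1}(Y)$. Suppose, to the contrary, that $0 \in LC^*_{\le k}(\varphi^{-1}(Y))$, say $0_V = \sum_{i=1}^{r} c_i x_i$, where $r \le k$, $c_i \in F^*$, and $x_i \in \varphi^{-1}(Y)$ for $i = 1, \dots, r$. Then,

$$0_{V'} = \varphi(0_V) = \varphi\left(\sum_{i=1}^{r} c_i x_i\right) = \sum_{i=1}^{r} c_i \varphi(x_i) \in LC^*_{\le k}(Y),$$

which contradicts the k – independence of Y and completes the proof of the k – independence of $\varphi^{-1}(Y)$.

Now, suppose that $\varphi$ is one-to-one and Y is maximal k – independent. We will use Observation 3.10 to show that $\varphi^{-1}(Y)$ is maximal k – independent.

Let $v \notin \varphi^{-1}(Y)$. We need to show that $v \in LC_{<k}(\varphi^{-1}(Y))$. Since $\varphi(v) \notin Y$ (and Y is maximal k – independent), Observation 3.10 implies that $\varphi(v) \in LC_{<k}(Y)$. Say

(*) $\varphi(v) = \sum_{y \in U} c_y y$,

where $c_y \in F^*$, $U \subseteq Y$, and $|U| < k$. Applying $\varphi^{-1}$, the inverse of $\varphi$, to both sides of (*), we get

$$v = \varphi^{-1}(\varphi(v)) = \varphi^{-1}\left(\sum_{y \in U} c_y y\right) = \sum_{y \in U} c_y \varphi^{-1}(y);$$

in particular, $v \in LC_{<k}(\varphi^{-1}(Y))$.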

□

Corollary 3.13

Suppose $\varphi : V \to V'$ is an isomorphism and $X \subseteq V$. Then X is (maximal) k – independent if and only if $\varphi(X)$ is.

Proof

If $\varphi(X)$ is (maximal) k – independent, then $X = \varphi^{-1}(\varphi(X))$ is (maximal) k – independent by Observation 3.12. Note that the inverse of $\varphi$, call it $\psi$, is a linear transformation. So if X is (maximal) k – independent, then $\varphi(X) = \psi^{-1}(X)$ is (maximal) k – independent by Observation 3.12 applied to $\psi$.

□

Corollary 3.14

Every maximal k – independent subset has an isomorphic copy that includes the standard

basis.

Proof

Let W be a maximal k – independent subset of V. By Observation 3.11, $B' \subseteq W$, where B' is a basis. Let $\varphi : V \to V$ be an isomorphism such that $\varphi(B') = B$, where B is the standard basis (c.f. *****). By Corollary 3.13, $\varphi(W)$ is maximal k – independent, and clearly $B = \varphi(B') \subseteq \varphi(W)$.

□

Corollaries 3.13 and 3.14 show that maximal k – independent subsets of V which include the standard basis represent all maximal k – independent subsets of V. From now on, we will focus on sets of the form $W \cup B$, where B is the standard basis and $W \cup B$ is (maximal) k – independent.

Definition 3.15

We say that $U \subseteq V$ is a k – extension (of the standard basis) if $B \cap U = \emptyset$ and $B \cup U$ is k – independent. If in addition $B \cup U$ is maximal k – independent, then we say that U is a k – completion (of the standard basis).

Observation 3.16

Let $Y \subseteq V$ be linearly independent and $\{a_y : y \in Y\} \subseteq F$. If $\sum_{y \in Y} a_y y = 0$, then $\forall y \in Y$, $a_y = 0$.

Proof

Suppose otherwise; i.e. $\sum_{y \in Y} a_y y = 0$ and $a_y \ne 0$ for some $y \in Y$. But then $0 \in LC^*(Y)$; i.e. D2(Y) holds, which contradicts the linear independence of Y.

□

Observation 3.17

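Suppose W is linearly independent. Let $X_1, X_2 \subseteq W$ and

(*) $\{a_x : x \in X_1\}, \{b_x : x \in X_2\} \subseteq F^*$.

If $\sum_{x \in X_1} a_x x = \sum_{x \in X_2} b_x x$, then

(i) $X_1 = X_2$

(ii) $\forall x \in X_1$, $a_x = b_x$.

Proof

Suppose $\sum_{x \in X_1} a_x x = \sum_{x \in X_2} b_x x$. Then

$$0 = \sum_{x \in X_1} a_x x - \sum_{x \in X_2} b_x x = \sum_{x \in X_1 - X_2} a_x x + \sum_{x \in X_1 \cap X_2} (a_x - b_x) x - \sum_{x \in X_2 - X_1} b_x x.$$

By Observation 3.16,

(1) $\forall x \in X_1 - X_2$, $a_x = 0$

(2) $\forall x \in X_1 \cap X_2$, $a_x - b_x = 0$

(3) $\forall x \in X_2 - X_1$, $b_x = 0$

However, $a_x, b_x \in F^*$. So by (*), (1) and (3) imply that $X_1 - X_2 = \emptyset = X_2 - X_1$; i.e. (i) holds, and therefore (2) says (ii) holds.

□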

Corollary 3.18

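Suppose W is linearly independent and $\{a_x : x \in W\}, \{b_x : x \in W\} \subseteq F$. If $\sum_{x \in W} a_x x = \sum_{x \in W} b_x x$, then

(*) $\forall x \in W$, $a_x = b_x$.

Proof

Let $X_1 = \{x \in W : a_x \ne 0\}$ and $X_2 = \{x \in W : b_x \ne 0\}$. Then

$$\sum_{x \in X_1} a_x x = \sum_{x \in W} a_x x = \sum_{x \in W} b_x x = \sum_{x \in X_2} b_x x,$$

so by Observation 3.17,

(i) $X_1 = X_2$

(ii) $\forall x \in X_1$, $a_x = b_x$

In particular, (*) holds.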

□

Definition 3.19

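Suppose B is a basis and $v = \sum_{x \in B} a_x x$. We define the B – support of v, denoted by $\mathrm{supp}_B(v)$, and the B – norm of v, denoted by $\|v\|_B$, as follows:

$$\mathrm{supp}_B(v) := \{x \in B : a_x \ne 0\} \quad \text{and} \quad \|v\|_B := |\mathrm{supp}_B(v)|;$$

e.g. $\|0\|_B = |\mathrm{supp}_B(0)| = |\emptyset| = 0$.

Note that $\mathrm{supp}_B(v)$, and therefore $\|v\|_B$, is well defined; i.e. if

$$v = \sum_{x \in B} a_x x = \sum_{x \in B} b_x x,$$

then $\{x \in B : a_x \ne 0\} = \{x \in B : b_x \ne 0\}$ (see Corollary 3.18). Note also that for $i = 0, 1, \dots, n$, $LC_i(B) = \{v \in V : \|v\|_B = i\}$, and that V is a disjoint union of the sets $LC_i(B)$, $i = 0, 1, \dots, n$. If B is the standard basis, then we shall omit the subscript.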

If the basis is present in the set, then the following holds.

Observation 3.20

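Suppose $W \cap B = \emptyset$. Then $B \cup W$ is k – independent (i.e. W is a k – extension) if and only if for every nonempty $U \subseteq W$ we have

$$LC_{|U|}(U) \subseteq LC_{\ge k - |U| + 1}(B),$$

where $LC_{\ge j}(B)$ denotes the union of the sets $LC_i(B)$ with $i \ge j$, or equivalently,

$$\forall y \in LC_{|U|}(U), \quad \|y\| \ge k - |U| + 1.$$

Proof

Suppose $B \cup W$ is k – independent. Let $U \subseteq W$ be nonempty and $y \in LC_{|U|}(U)$, say

(*) $y = \sum_{u \in U} b_u u$,

where $\{b_u : u \in U\} \subseteq F^*$. We need to show that $\|y\| \ge k - |U| + 1$. Suppose, to the contrary,

(**) $y = \sum_{x \in X} a_x x$,

for some $X \subseteq B$ and $\{a_x : x \in X\} \subseteq F^*$, where

(***) $|X| < k - |U| + 1$.

Combining (*) and (**), we get $0 = \sum_{x \in X} a_x x - \sum_{u \in U} b_u u$. Since $U \subseteq W$ is nonempty and disjoint from $X \subseteq B$, this implies that $0 \in LC^*_{|U| + |X|}(B \cup W)$. From (***), we get $|U| + |X| < k + 1$. So $0 \in LC^*_{\le k}(B \cup W)$, which contradicts the k – independence of $B \cup W$.

Now, to prove the second part of the observation, let us assume that

(●) $\forall U \subseteq W$, $U \ne \emptyset \Rightarrow LC_{|U|}(U) \subseteq LC_{\ge k - |U| + 1}(B)$.

We will show I2($B \cup W$, k). Suppose otherwise; i.e. $0 \in LC^*_{\le k}(B \cup W)$, say

(●●) $0 = \sum_{e \in D} a_e e + \sum_{w \in U} a_w w$,

where $D \subseteq B$; $U \subseteq W$; $\{a_e : e \in D\} \subseteq F^*$, $\{a_w : w \in U\} \subseteq F^*$, and $|D \cup U| = |D| + |U| \le k$.

Let $y = \sum_{w \in U} a_w w = -\sum_{e \in D} a_e e$. So on one hand $y \in LC_{|U|}(U)$, but on the other hand $\|y\| = |D| \le k - |U|$. This contradicts (●), since by (●●) and the linear independence of D, U is nonempty.

□

Corollaries 3.21 and 3.22 follow from Observation 3.20 applied to $W = \{v\}$ and $W = \{v, w\}$, respectively.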

Corollary 3.21

A singleton, $\{v\}$, is a k – extension if and only if $\|v\| \ge k$; i.e. $v \in LC_{\ge k}(B)$.

Corollary 3.22

A doubleton, $\{v, w\}$, is a k – extension if and only if $v, w \in LC_{\ge k}(B)$ and $LC_2(\{v, w\}) \subseteq LC_{\ge k-1}(B)$.

Now we'll examine k – independence in various vector spaces of the form $F_q^n$ for various values of k, n, and q. We start with simple characterizations of k – independence in the cases k = 1, 2.

We will now investigate k = 1.

Observation 3.23

$W \subseteq V$ is 1 – independent if and only if $0 \notin W$. In particular, there is exactly one maximal 1 – independent subset of V, namely $V^* = V - \{0\}$.

Proof

W is 1 – independent if and only if $0 \notin LC^*_{\le 1}(W) = LC_1(W)$, and the last condition is equivalent to $0 \notin W$. Since $W \subseteq LC_1(W)$, one direction of the proof is obvious. The other direction follows from a basic property of vector spaces, namely cv = 0 if and only if c = 0 or v = 0.

□

We will now investigate k = 2. Since 2 – independence implies 1 – independence, by Observation 3.23, we can focus on subsets of $V^*$.

In the proof of the next observation, we will use the following notation. For $v \in V$, $\langle v \rangle$ denotes the linear subspace generated by v; i.e. $\langle v \rangle = \{cv : c \in F\}$, and $\langle v \rangle^* := \langle v \rangle - \{0\} = \{cv : c \in F^*\}$.

Observation 3.24

For any $W \subseteq V^*$ the following are equivalent.

1.) W is 2 – independent.

2.) No two elements of W come from the same 1 – dimensional subspace of V.

Proof

Assume (1). Suppose, to the contrary, that there are distinct $w_1, w_2 \in W \cap \langle v \rangle$, for some $v \in V^*$. Let $c_1, c_2 \in F^*$ be such that $w_i = c_i v$ for i = 1, 2. Then

$$0 = v - v = \frac{1}{c_1} w_1 - \frac{1}{c_2} w_2 \in LC_2(W),$$

which contradicts (1).

Assume (2). Suppose, to the contrary, W is 2 – dependent; i.e. $0 \in LC^*_{\le 2}(W)$. Since $0 \notin W$, we can say $0 = c_1 w_1 + c_2 w_2$ for some distinct $w_1, w_2 \in W$ and $c_1, c_2 \in F^*$. Then $w_1 = -\frac{c_2}{c_1} w_2 \in \langle w_2 \rangle$, which contradicts (2).

□

By Observations 3.23 and 3.24, it is clear that a subset of V is maximal 2 – independent if and only if it is a subset of $V^*$ and includes exactly one vector from each 1 – dimensional subspace. In particular, its cardinality must be equal to the number of such subspaces. This cardinality is given in the next observation.

Observation 3.25

There are $\frac{q^n - 1}{q - 1}$ 1 – dimensional subspaces of $V = F_q^n$.

Proof

Consider $P = \{\langle v \rangle^* : v \in V^*\}$. Clearly the number of 1 – dimensional subspaces of V equals the cardinality of P. Since P forms a partition of $V^*$ and $|\langle v \rangle^*| = q - 1$ for each $v \in V^*$, we have

$$q^n - 1 = |V^*| = \sum_{A \in P} |A| = |P| \cdot (q - 1).$$

□
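The count in Observation 3.25 can be checked by direct enumeration for small cases. The sketch below assumes q is prime (so that arithmetic modulo q is the field $F_q$) and picks one representative per 1 – dimensional subspace by scaling each nonzero vector so that its first nonzero coordinate is 1; the name `count_lines` is an illustrative choice.

```python
from itertools import product

def count_lines(q, n):
    """Number of 1-dimensional subspaces of F_q^n for a prime q, found by
    normalising every nonzero vector to have leading nonzero coordinate 1."""
    reps = set()
    for v in product(range(q), repeat=n):
        lead = next((c for c in v if c != 0), None)
        if lead is None:
            continue                      # skip the zero vector
        inv = pow(lead, -1, q)            # modular inverse (Python 3.8+)
        reps.add(tuple((inv * c) % q for c in v))
    return len(reps)

for q, n in [(2, 3), (3, 2), (3, 3), (5, 2)]:
    print(q, n, count_lines(q, n), (q ** n - 1) // (q - 1))
```

For each pair (q, n) the enumerated count and the closed formula should agree; by the discussion above, this number is also the cardinality of every maximal 2 – independent subset of $F_q^n$.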

Now, we will turn our focus to 3 – independence in various spaces of the form $F_q^n$, with $3 \le n$ and $q = 2, 3, \dots$ (recall that $|P| = \frac{q^n - 1}{q - 1}$).

We will first examine the 3 – independence of $Z_2^3$.

Observation 3.25

There is one 3 – completion in $Z_2^3$, and it has a cardinality of 1.

Proof

For 3 – independence to hold, the only allowable vector, v, that we could add to the basis is (1,1,1). $B \cup \{(1,1,1)\}$ is 3 – independent. Therefore, $\{(1,1,1)\}$ is the only 3 – completion of $Z_2^3$.

□

We will now examine the maximal 3 – independence of $Z_2^4$.

Observation 3.26

There are two 3 – completions in $Z_2^4$. The first 3 – completion is $\{(1,1,1,1)\}$. The second is $\{(1,1,1,0), (1,1,0,1), (1,0,1,1), (0,1,1,1)\}$.

Proof

From Corollary 3.21, (1,1,1,0), (1,1,0,1), (1,0,1,1), (0,1,1,1), and (1,1,1,1) are the only possible nonbasis elements of a 3 – completion. The vector (1,1,1,1) cannot appear together with any of the other 4 elements, because its sum with any one of them is a basis vector. So, if we let v = (1,1,1,1), we must exclude (1,1,1,0), (1,1,0,1), (1,0,1,1), and (0,1,1,1) from the 3 – completion. However, $B \cup \{(1,1,1,1)\}$ is 3 – independent. So, $\{(1,1,1,1)\}$ is a 3 – completion which has a cardinality of 1.

If we let v = (1,1,1,0), or any element from that level, we already know that we must exclude (1,1,1,1). However, no three or fewer of the vectors in $B \cup \{(1,1,1,0), (1,1,0,1), (1,0,1,1), (0,1,1,1)\}$ are linearly dependent. So, $B \cup \{(1,1,1,0), (1,1,0,1), (1,0,1,1), (0,1,1,1)\}$ is 3 – independent. So, $\{(1,1,1,0), (1,1,0,1), (1,0,1,1), (0,1,1,1)\}$ is a 3 – completion which has a cardinality of 4.

□
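The two observations on 3 – completions can be confirmed by exhaustive search. The following sketch assumes binary vectors are encoded as integer bitmasks (so addition is XOR) and that a k – completion is a set U disjoint from the standard basis B with $B \cup U$ maximal k – independent, as in Definition 3.15; the function names are illustrative.

```python
from itertools import combinations
from functools import reduce
from operator import xor

def is_k_independent(vs, k):
    """Over F_2: no j distinct vectors, 1 <= j <= k, sum (XOR) to zero."""
    return all(reduce(xor, sub) != 0
               for j in range(1, min(k, len(vs)) + 1)
               for sub in combinations(vs, j))

def completions(n, k):
    """All k-completions of the standard basis of F_2^n, by brute force."""
    basis = [1 << i for i in range(n)]
    others = [v for v in range(1, 2 ** n) if v not in basis]
    found = []
    for r in range(len(others) + 1):
        for U in combinations(others, r):
            S = basis + list(U)
            if not is_k_independent(S, k):
                continue
            outside = [v for v in range(2 ** n) if v not in S]
            # maximal: adding any further vector destroys k-independence
            if all(not is_k_independent(S + [v], k) for v in outside):
                found.append(set(U))
    return found

for U in completions(4, 3):
    print(sorted(format(v, "04b") for v in U))
```

For n = 4 and k = 3 the search should report exactly the two completions named in Observation 3.26: the all-ones vector alone, and the four vectors of weight 3. The search visits every subset of the nonbasis vectors, so it is only practical for very small n.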


Chapter 4

k – Independence Theorems

The purpose of this chapter is to summarize the work of two journal articles. The first

article is “The number of linearly independent binary vectors with applications to the

construction of linear codes, hypercubes, orthogonal arrays and (t, m, s) – nets” written

by S.B. Damelin, G. Michalski, G.L. Mullen, and D. Stone. The second article is “The

Cardinality of Sets of k – Independent Vector Spaces over Finite Fields” written by the

aforementioned Damelin, Michalski, and Mullen. For easier reading, we adopt the

notation from those papers.

§ 4.1

2 – independence

Definition 4.1

We will say that a nonempty set $A \subseteq V_n$ is k – independent if every nonempty subset of A that has at most k elements is linearly independent. The family of all k – independent subsets of $V_n$ will be denoted by $V_n(k)$. An earlier discussion from the first article by Damelin, Michalski, Mullen and Stone (2004) shows that $V_n(1) = \{A \subseteq V_n : 0 \notin A\}$, for $n \ge 1$, and $V_n(2) = V_n(1)$, for $n \ge 2$. Also, it is clear from Definition 4.1 that $V_n(k_1) \supseteq V_n(k_2)$ whenever $k_1 \le k_2 \le n$; i.e. for $n \ge 2$, we have

$$V_n(1) \supseteq V_n(2) \supseteq \dots \supseteq V_n(n).$$

Note that the least family in this hierarchy, $V_n(n)$, includes all linearly independent subsets of $V_n$.

We are interested in the maximum possible sizes of elements of $V_n(k)$, and to this end let us define

$$\mathrm{Ind}(n, k) := \max\{|A| : A \in V_n(k)\}.$$

We have that $\mathrm{Ind}(n, 1) = 2^n - 1$ if $n \ge 1$, $\mathrm{Ind}(n, 2) = \mathrm{Ind}(n, 1)$ if $n \ge 2$, and for every $n \ge 3$

$$2^n - 1 \ge \mathrm{Ind}(n, 3) \ge \dots \ge \mathrm{Ind}(n, n) \ge n. \qquad (*)$$

In Theorem 4.2 below we give formulae for $\mathrm{Ind}(n, 3)$ and for $\mathrm{Ind}(n, k)$, where $k = n - m$ for some m with $0 \le m \le \frac{n}{3}$. It turns out that roughly one third of the numbers in (*) are equal to n + 1. Moreover, two thirds of the time, namely when $n \not\equiv 2 \pmod 3$, the last number in (*) that is greater than n + 1 is equal to n + 2.

Our main result can be stated as follows.

Our main result can be stated as follows.

Theorem 4.2

The following formulae hold:

a) $\mathrm{Ind}(n, 3) = 2^{n-1}$, for $n \ge 3$.

b) $\mathrm{Ind}(n, n - m) = n + 1$, for $n \ge 3m + 2$, $m \ge 0$.

c) $\mathrm{Ind}(n, n - m) = n + 2$, for $n = 3m + i$, $i = 0, 1$, $m \ge 2$.
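For very small n, the values in Theorem 4.2 can be compared against a brute-force computation. The sketch below assumes binary vectors encoded as integer bitmasks and grows k – independent sets one vector at a time, using the fact that $S \cup \{v\}$ stays k – independent exactly when v is not a sum of fewer than k elements of S. The names `sums_of_fewer_than` and `ind` are illustrative, and the search is exponential, so it is only a sanity check.

```python
from itertools import combinations
from functools import reduce
from operator import xor

def sums_of_fewer_than(S, k):
    """LC_{<k}(S) over F_2: XOR-sums of at most k-1 distinct elements of S."""
    return {reduce(xor, c, 0)
            for j in range(min(k - 1, len(S)) + 1)
            for c in combinations(S, j)}

def ind(n, k):
    """Brute-force Ind(n, k): size of a largest k-independent subset of F_2^n."""
    best = 0

    def extend(S, start):
        nonlocal best
        best = max(best, len(S))
        if len(S) + (2 ** n - start) <= best:
            return                      # too few candidates left to improve
        blocked = sums_of_fewer_than(S, k)
        for v in range(start, 2 ** n):
            if v not in blocked:
                extend(S + [v], v + 1)

    extend([], 1)
    return best

for n in (3, 4):
    for k in range(3, n + 1):
        print(n, k, ind(n, k))
```

The printed values can be compared with parts a) and b) of the theorem: $2^{n-1}$ for k = 3, and n + 1 for k = n.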

Remark 4.3

We note that it is indeed easy to construct sets of vectors satisfying the lower bounds in Theorem 4.2. For the case b), n = 3m + 2, one can construct the required n + 1 vectors by simply using the n unit vectors of length n along with the all-ones vector of length n.

In the cases from c) where n = 3m and n = 3m + 1, we start with the set of n unit vectors of length n. In the n = 3m case, we add the two vectors (1,...,1,0,...,0) and (0,...,0,1,...,1), where we use 2m ones in each case, along with m zeros. The resulting set will then be (n − m)-independent. In the n = 3m + 1 case, we add the two vectors (1,...,1,0,...,0) and (0,...,0,1,...,1), where we now use 2m + 1 ones, and the rest zeros. In this case the set will also be (n − m)-independent.
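The constructions in Remark 4.3 are easy to test mechanically. The following sketch assumes binary vectors encoded as integer bitmasks; `remark_4_3_set` is a hypothetical helper that builds the set described above for a given n and returns it together with the claimed level of independence k = n − m.

```python
from itertools import combinations
from functools import reduce
from operator import xor

def is_k_independent(vs, k):
    """Over F_2: no j distinct vectors, 1 <= j <= k, sum (XOR) to zero."""
    return all(reduce(xor, sub) != 0
               for j in range(1, min(k, len(vs)) + 1)
               for sub in combinations(vs, j))

def remark_4_3_set(n):
    """Vectors from Remark 4.3 as bitmasks, and the claimed k = n - m."""
    m, i = divmod(n, 3)
    units = [1 << j for j in range(n)]
    if i == 2:                               # case b): n = 3m + 2
        return units + [(1 << n) - 1], n - m
    ones = 2 * m + i                         # case c): n = 3m or n = 3m + 1
    low = (1 << ones) - 1                    # ones packed at one end
    high = low << (n - ones)                 # ones packed at the other end
    return units + [low, high], n - m

for n in range(5, 11):
    vectors, k = remark_4_3_set(n)
    print(n, k, len(vectors), is_k_independent(vectors, k))
```

Each line reports n, the level k = n − m, the number of vectors (n + 1 or n + 2), and whether the set passes the k – independence check. This only confirms the lower bounds; it says nothing about the matching upper bounds in Theorem 4.2.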

§ 4.2

k – independence

A set of vectors is k-independent if all its subsets with no more than k elements are linearly independent. We obtain a result concerning the maximal possible cardinality $\mathrm{Ind}_q(n, k)$ of a k-independent set of vectors in the n-dimensional vector space $F_q^n$ over the finite field $F_q$ of order q. Namely, we give a necessary and sufficient condition for $\mathrm{Ind}_q(n, k) = n + 1$.

For q a prime power, let $F_q$ denote the finite field of order q, and let $F_q^n$ denote the n-dimensional vector space of all n-tuples over $F_q$. For an integer k with $1 \le k \le n$, we say that a set of vectors $A \subseteq F_q^n$ is k-independent if all its subsets with at most k elements are linearly independent. We are interested in the maximal possible cardinality, $\mathrm{Ind}_q(n, k)$, of a k-independent subset of $F_q^n$. It is not hard to see that we have

$$q^n - 1 = \mathrm{Ind}_q(n, 1) \ge \mathrm{Ind}_q(n, 2) \ge \dots \ge \mathrm{Ind}_q(n, n) \ge n + 1. \qquad (*)$$

Indeed, any set of nonzero vectors is 1-independent; (k+1)-independence implies k-independence; and finally, the (n+1)-element set consisting of the standard basis plus the "all-ones" vector is clearly n-independent.

The first inequality in (*) becomes an equality when q = 2, for over $F_2$, 2-independence is equivalent to 1-independence. The general formula for $\mathrm{Ind}_q(n, 2)$ was given before (see Observation 3.25 and its preceding paragraph). For completeness, we include it in the next observation.

Observation 4.4

Let q be a prime power, and $n > 1$ an integer. Then

$$\mathrm{Ind}_q(n, 2) = \frac{q^n - 1}{q - 1}. \qquad (**)$$

In Section 4.1, we investigated formulae for $\mathrm{Ind}_2(n, k)$ in two extreme cases: the well known cases when $k \le 3$ and the cases when $k \ge \frac{2n}{3}$. See Theorem 4.2. In this section, we generalize Theorem 4.2 (b). We present a simple condition on q, n and k which is both necessary and sufficient for $\mathrm{Ind}_q(n, k) = n + 1$ to hold. Here is our main result.

Theorem 4.5

Let q be a prime power, and let k and n be integers with $2 \le k \le n$. Then $\mathrm{Ind}_q(n, k) = n + 1$ if and only if

$$\frac{q}{q + 1}(n + 1) \le k.$$

In particular, in the case q = 2, Theorem 4.5 says that the inequality in Theorem 4.2(b) is not only sufficient, but also necessary.

Note also that with q and n fixed, our current result in particular evaluates $\mathrm{Ind}_q(n, k)$ for the $\left\lfloor \frac{n+1}{q+1} \right\rfloor$ largest values of k, where $\lfloor \cdot \rfloor$ denotes the floor, or largest-integer, function. In particular, when q = 2, our result evaluates $\mathrm{Ind}_q(n, k)$ for all values of k in the range

$$\frac{2n + 2}{3} \le k \le n.$$
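The counting claim in the last paragraph can be checked with a few lines of integer arithmetic. This sketch assumes the condition of Theorem 4.5 reads $\frac{q}{q+1}(n+1) \le k$, which is rewritten as $q(n+1) \le k(q+1)$ to avoid fractions; the function names are illustrative.

```python
def attains_n_plus_1(q, n, k):
    """Condition of Theorem 4.5, q(n+1)/(q+1) <= k, in integer arithmetic."""
    return q * (n + 1) <= k * (q + 1)

def count_k(q, n):
    """How many k with 2 <= k <= n satisfy the condition of Theorem 4.5."""
    return sum(attains_n_plus_1(q, n, k) for k in range(2, n + 1))

for q in (2, 3, 4, 5):
    for n in (5, 8, 11):
        print(q, n, count_k(q, n), (n + 1) // (q + 1))
```

In each row, the number of admissible k should coincide with $\lfloor (n+1)/(q+1) \rfloor$. For q = 2 the admissible range is exactly $\frac{2n+2}{3} \le k \le n$.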


Chapter 5

Applications

In this chapter, we study some applications of 2 – independence and k – independence.

§ 5.1

Applications of 2 – independence

A classic 1938 result of R. C. Bose in the theory of mutually orthogonal latin squares (MOLS) demonstrated an equivalence between complete sets of MOLS of a given order and affine planes of the same order (Bose, 1938). For further related results see Laywine and Mullen (2002). Bose's result has inspired much research on generalizations to other combinatorial objects with applications in areas as diverse as coding theory, combinatorial designs, numerical integration and random number generation. We refer the interested reader to Laywine and Mullen's survey (2002) and the references cited therein for a detailed account of this fascinating subject.

In this section, we will give an application of the results of Theorem 4.2 to the construction of hypercubes and orthogonal arrays, pseudo (t, m, s)-nets and linear codes.

Orthogonal Arrays and Hypercubes

A hypercube of dimension n and order b is an array containing $b^n$ cells, based upon b distinct symbols arranged so that each of the b symbols appears the same number of times, namely $b^n / b = b^{n-1}$ times. For $2 \le k \le n$, a set of k such hypercubes is said to be k-orthogonal if upon superpositioning of the k hypercubes, each of the $b^k$ distinct ordered k-tuples appears the same number of times, i.e. $b^n / b^k = b^{n-k}$ times. Finally, a set of $r \ge k$ such hypercubes is said to be k-orthogonal if any subset of k hypercubes is k-orthogonal. When k = 2 this reduces to the usual notion of pairwise or mutually orthogonal latin squares of order b. See Mullen (1998), Mullen and Schmid (1996), and Mullen and Whittle (1992) for further discussion related to sets of orthogonal hypercubes, and in particular, sets of k-orthogonal hypercubes.

Using our constructions for a set of k-independent binary vectors of length n, we can
build sets of k-orthogonal hypercubes of dimension n. Assume that $\mathrm{Ind}(n, k) = e$ and let
$a_1 x_1 + \cdots + a_n x_n$ denote a vector of length n in $B_n^k$, a set of k-independent vectors of
length n. One can then construct a binary hypercube C of dimension n by placing the
$F_2$ field element $a_1 b_1 + \cdots + a_n b_n$ in the cell of the cube C labeled by $(b_1, \ldots, b_n)$, where
each $b_i \in F_2$. Since each vector in $B_n^k$ has at least one nonzero coefficient $a_i = 1$, and
since the equation $x_i = b$ has exactly $2^{n-1}$ solutions in $F_2^n$, it is clear that each such vector
represents a binary hypercube of dimension n. Moreover, given k such vectors from
$B_n^k$, the corresponding hypercubes will be k-orthogonal. Since the k vectors are
k-independent, this follows from the fact that the $k \times n$ matrix obtained from the
coefficients of the k vectors will have rank k. Hence each element of $F_2^k$ will be obtained
exactly $2^{n-k}$ times, so that the k hypercubes of dimension n are indeed k-orthogonal.
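The construction just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `hypercube` and `are_orthogonal` and the four sample vectors are hypothetical and are not taken from Theorem 4.2. The sample set is 2-independent but not 3-independent, so every pair of cubes is 2-orthogonal while one particular triple fails to be 3-orthogonal.

```python
from collections import Counter
from itertools import combinations, product

def hypercube(a):
    """Map each cell label (b_1, ..., b_n) in F_2^n to a_1*b_1 + ... + a_n*b_n mod 2."""
    n = len(a)
    return {b: sum(ai * bi for ai, bi in zip(a, b)) % 2
            for b in product((0, 1), repeat=n)}

def are_orthogonal(cubes):
    """True if superimposing the cubes yields every ordered k-tuple equally often."""
    k = len(cubes)
    cells = list(cubes[0])
    counts = Counter(tuple(c[cell] for c in cubes) for cell in cells)
    expected = len(cells) // 2 ** k
    return len(counts) == 2 ** k and all(v == expected for v in counts.values())

# Hypothetical sample: any two of these vectors are independent over F_2,
# but the first, second and fourth sum to zero.
vectors = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 0)]
cubes = [hypercube(a) for a in vectors]

pairs_ok = all(are_orthogonal(list(p)) for p in combinations(cubes, 2))
triple_ok = are_orthogonal([cubes[0], cubes[1], cubes[3]])
print(pairs_ok, triple_ok)  # prints: True False
```

Each cube stores one symbol per cell of $F_2^3$, and each symbol appears $2^{n-1} = 4$ times, in line with the definition of a hypercube of order 2.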

We remind the reader that an orthogonal array of size N, s constraints, b levels, strength k
and index $\lambda$ is an $s \times N$ array A with entries from a set of b distinct elements with the
property that any $k \times N$ subarray of A contains all possible $k \times 1$ columns with the same
frequency $\lambda$. Such an array will be denoted by OA(N, s, b, k). Mullen gave the following
result as Theorem 13 in his 1998 paper. Let $b \ge 2$, $s \ge k$, and $t \ge 0$ be integers. Then there
exists an orthogonal array $OA(b^{t+k}, s, b, k)$ of index $b^t$ if and only if there exist s,
k-orthogonal hypercubes of dimension t + k and order b.

Hence if n = 3m + 2, $m \ge 0$, from Theorem 4.2 we can construct an
$OA(2^n, n+1, 2, n-m)$ of index $2^m$. Similarly if n = 3m + i, i = 0, 1, $m \ge 2$, we can
construct an $OA(2^n, n+2, 2, n-m)$ of index $2^m$.
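For the smallest case n = 2, m = 0, the resulting array can be written out and checked directly. The sketch below is illustrative only: the function names `orthogonal_array` and `strength_index` are hypothetical, and the vector set used is simply the three nonzero vectors of length 2, which are 2-independent.

```python
from collections import Counter
from itertools import combinations, product

def orthogonal_array(vectors):
    """One row per vector a, one column per point b of F_2^n; entry is a.b mod 2."""
    n = len(vectors[0])
    points = list(product((0, 1), repeat=n))
    return [[sum(ai * bi for ai, bi in zip(a, b)) % 2 for b in points]
            for a in vectors]

def strength_index(array, k):
    """Return the index lambda if every k-row subarray contains each k x 1 column
    exactly lambda times; return None otherwise."""
    N = len(array[0])
    lam = N // 2 ** k
    for rows in combinations(array, k):
        counts = Counter(zip(*rows))
        if len(counts) != 2 ** k or set(counts.values()) != {lam}:
            return None
    return lam

vectors = [(1, 0), (0, 1), (1, 1)]   # n = 2, so n + 1 = 3 constraints
A = orthogonal_array(vectors)
print(strength_index(A, 2))          # prints: 1
```

The array has 3 rows and $2^2 = 4$ columns, so it is an OA(4, 3, 2, 2) of index $2^0 = 1$, matching the parameters $OA(2^n, n+1, 2, n-m)$ with n = 2 and m = 0.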

Pseudo (t, m, s) - nets

There is a connection between sets of k-independent vectors and (t, m, s)-nets and
pseudo (t, m, s)-nets. For a fixed integer $s \ge 1$, an elementary interval in base $b \ge 2$
is an interval of the form

$$E = \prod_{i=1}^{s} \left[ a_i b^{-d_i},\ (a_i + 1) b^{-d_i} \right)$$

with integers $d_i \ge 0$ and integers $0 \le a_i < b^{d_i}$ for $1 \le i \le s$. Given an integer m with
$m \ge t \ge 0$, a (t, m, s)-net in base b is a point set of $b^m$ points in $[0,1)^s$ such that every
elementary interval E of volume $b^{t-m}$ contains exactly $b^t$ points.
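The net property can be tested by brute force for small parameters. The following sketch is an illustration, not part of the thesis: the function names are hypothetical, points are stored as integer numerators over $b^m$ to avoid floating-point issues, and the test point set is the standard two-dimensional Hammersley set in base 2, which is known to be a (0, m, 2)-net.

```python
from itertools import product

def radical_inverse_num(i, m):
    """Numerator over 2**m of the base-2 radical inverse of i (reverse its m bits)."""
    r = 0
    for _ in range(m):
        r = (r << 1) | (i & 1)
        i >>= 1
    return r

def is_net(points, t, m, s, b=2):
    """points: s-tuples of integer numerators, each representing x = num / b**m."""
    for d in product(range(m - t + 1), repeat=s):
        if sum(d) != m - t:          # only shapes of volume b**(t - m)
            continue
        counts = {}
        for p in points:
            # x lies in [a b^-d, (a+1) b^-d) exactly when a = num // b**(m - d)
            key = tuple(x // b ** (m - di) for x, di in zip(p, d))
            counts[key] = counts.get(key, 0) + 1
        if len(counts) != b ** (m - t) or any(c != b ** t for c in counts.values()):
            return False
    return True

m = 4
hammersley = [(i, radical_inverse_num(i, m)) for i in range(2 ** m)]
diagonal = [(i, i) for i in range(2 ** m)]
print(is_net(hammersley, 0, m, 2), is_net(diagonal, 0, m, 2))  # prints: True False
```

The diagonal point set fails because, for the shape $d_1 = d_2 = 2$, only the four boxes along the diagonal receive any points.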

It is well known, see for example Niederreiter (1987), that (t, m, s)-nets are useful in
numerical analysis, in particular in the approximation of multi-dimensional integrals.
Mullen and Whittle (1992) and Mullen (1998) stated that if k = 2, an orthogonal array
$OA(b^{t+2}, s, b, 2)$ of index $b^t$ is equivalent to a (t, t + 2, s)-net in base b. Lawrence (1995)
indicated that for $k \ge 3$ orthogonal arrays are, however, not equivalent to (t, t + k, s)-nets.
Orthogonal arrays are in fact equivalent to so-called pseudo nets, which are

structures with less uniformity in the distribution of the points than in a (t, m, s) -net. A

pseudo net in base b has the same definition as a (t, m, s) -net in base b except that only a

restricted subset of the elementary intervals is required to contain the proper share of

points.

More specifically, Lawrence (1995) defines a point set of $b^m$ points in $[0, 1)^s$ to be a
pseudo (t, m, s)-net in base b if every elementary interval of volume $b^{t-m}$ satisfying either

i.) all $d_i \in \{0, 1\}$, or
ii.) $d_i > 0$ for exactly one i, $1 \le i \le s$,

contains exactly $b^t$ points of the point set. In addition, a set of $b^m$ points in $[0, 1)^s$
is a weak pseudo (t, m, s)-net in base b if every elementary interval of volume $b^{t-m}$
satisfying i.) contains exactly $b^t$ points of the point set.

We have shown that given a set of $s \le \mathrm{Ind}(n, k)$ k-independent vectors of
length n = t + k, we can construct a set of s, k-orthogonal hypercubes of dimension t + k
and order 2. Such a collection of hypercubes is equivalent to an orthogonal array
$OA(2^{t+k}, s, 2, k)$ of index $2^t$ (Mullen, 1998). Lawrence (1995) discovered that the
existence of an $OA(2^{t+k}, s, 2, k)$ is equivalent to the existence of a pseudo (t, t + k, s)-net
in base 2. Lawrence’s conclusion is stated in the following theorem.

Theorem 5.1

If $s \le \mathrm{Ind}(n, k)$, where n = t + k, then each of the following equivalent objects can be constructed.

(1) A set of s, k-orthogonal hypercubes of dimension t + k and order 2.
(2) An orthogonal array $OA(2^{t+k}, s, 2, k)$ of index $2^t$.
(3) A pseudo (t, t + k, s)-net in base 2.
(4) A weak pseudo (t, t + k, s)-net in base 2.

Remark 5.2

Since our construction of sets of k-independent vectors deals only with the case b = 2, we

have stated Theorem 5.1 only for the b = 2 case. We note however that given a set of $s'$
k-independent vectors of length t + k over the finite field of b elements, where b is any
prime power, we can prove the existence of each of the above equivalent combinatorial
objects in which s is replaced by $s'$ and 2 is replaced by b.

Linear Codes

From Lidl and Niederreiter (1997), it is known that a linear code C with a parity check
matrix H has minimum distance $d_C \ge s + 1$ if and only if any s columns of H are linearly
independent. We can thus construct a binary linear code C with length Ind(n, k),
dimension Ind(n, k) - n and minimum distance $d_C \ge k + 1$. Hence from Theorem 4.2(c),
if n = 3m + i, i = 0, 1, $m \ge 2$, we can construct a binary linear code $C_n$ of length n + 2,
dimension 2, and minimum distance $d_{C_n} \ge n - m + 1$. We note in passing that while the
resulting code has a very small dimension, it has a large minimum distance.

As an illustration, if n = 6 = 3(2) so that m = 2, from Theorem 4.2, we know that Ind(6,4)

= 8. Moreover the following set of 8 vectors of length 6 is 4-independent.

(1, 0, 0, 0, 0, 0)
(0, 1, 0, 0, 0, 0)
(0, 0, 1, 0, 0, 0)
(0, 0, 0, 1, 0, 0)
(0, 0, 0, 0, 1, 0)
(0, 0, 0, 0, 0, 1)
(1, 1, 1, 1, 0, 0)
(0, 0, 1, 1, 1, 1)

From the discussion above we can thus construct a set of 8 binary hypercubes each of

dimension 6, which are 4-orthogonal, as well as an orthogonal array $OA(2^6, 8, 2, 4)$ of
index $2^2$. Moreover, we can also construct a binary linear code C with parameters
$[8, 2, d_C \ge 5]$.
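The claims of this illustration are small enough to verify exhaustively. The sketch below is an added check, not the author's method: the helper names are hypothetical, and the rank over $F_2$ is computed by Gaussian elimination on bitmask-encoded vectors.

```python
from itertools import combinations

# The 8 vectors of length 6 listed in the illustration.
VECTORS = [
    (1, 0, 0, 0, 0, 0), (0, 1, 0, 0, 0, 0), (0, 0, 1, 0, 0, 0),
    (0, 0, 0, 1, 0, 0), (0, 0, 0, 0, 1, 0), (0, 0, 0, 0, 0, 1),
    (1, 1, 1, 1, 0, 0), (0, 0, 1, 1, 1, 1),
]

def rank_gf2(vectors):
    """Rank over F_2 via Gaussian elimination on integers used as bit vectors."""
    rows = [int("".join(map(str, v)), 2) for v in vectors]
    rank = 0
    while rows:
        pivot = rows.pop()
        if pivot == 0:
            continue
        rank += 1
        low = pivot & -pivot                      # lowest set bit of the pivot
        rows = [r ^ pivot if r & low else r for r in rows]
    return rank

def is_k_independent(vectors, k):
    """True if every k-subset of the vectors is linearly independent over F_2."""
    return all(rank_gf2(c) == k for c in combinations(vectors, k))

def min_dependent_size(vectors):
    """Smallest size of a linearly dependent subset, or None if there is none."""
    for size in range(1, len(vectors) + 1):
        if any(rank_gf2(c) < size for c in combinations(vectors, size)):
            return size
    return None

print(is_k_independent(VECTORS, 4), min_dependent_size(VECTORS))  # prints: True 5
```

Taking the 8 vectors as the columns of a $6 \times 8$ parity check matrix, the smallest dependent set has size 5, which agrees with the stated minimum distance $d_C \ge 5$ of the [8, 2] code.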

§ 5.2

Applications of k – independence

We will now look at various applications of k – independence.

k – orthogonal Hypercubes

In Section 5.1, numerous applications of Theorem 4.2 were given related to the

construction of hypercubes and orthogonal arrays, pseudo (t, m, s)-nets, and linear codes.
We now present an application of our current results to the construction of sets of
orthogonal hypercubes. By a hypercube of dimension n and order b is meant a
$b \times \cdots \times b$ array consisting of $b^n$ cells, based upon b distinct symbols arranged so that each
of the b symbols appears the same number of times, namely $b^n / b = b^{n-1}$ times. For
$2 \le k \le n$, a set of k such hypercubes is said to be k-orthogonal if upon superpositioning of the k
hypercubes, each of the $b^k$ distinct ordered k-tuples appears the same number of times,
i.e. $b^n / b^k = b^{n-k}$ times. Finally a set of $r \ge k$ such hypercubes is said to be k-orthogonal if any
subset of k hypercubes is k-orthogonal. When n = k = 2 these ideas reduce to the usual
notion of mutually orthogonal latin squares of order b.

Given a set of k-independent vectors of length n over $F_q$, we can build sets of
k-orthogonal hypercubes of order q and dimension n. Let $a_1 x_1 + \cdots + a_n x_n$ denote a vector of
length n over $F_q$ in a k-independent set. One can then construct a hypercube of order q and
dimension n by placing the field element $a_1 b_1 + \cdots + a_n b_n$ in the cell of the hypercube
labeled by $(b_1, \ldots, b_n)$, where each $b_i \in F_q$. Since each coefficient vector $(a_1, \ldots, a_n)$ has at least
one nonzero entry, it is clear that the array represented by the vector is indeed a
hypercube of dimension n and order q.

Moreover, given k such vectors from a k-independent set, the corresponding set of k
hypercubes will be k-orthogonal. This follows from the fact that the k vectors are
k-independent over $F_q$, and hence the $k \times n$ matrix obtained from the coefficients of the k
vectors will have rank k. Hence each element of $F_q^k$ will be picked up exactly $q^{n-k}$ times,
so the k hypercubes are indeed k-orthogonal. This construction thus yields $\mathrm{Ind}_q(n, k)$,
k-orthogonal hypercubes of dimension n and order q.

Paul Garrett (2003) is the reference for the remaining part of the chapter.

Definition 5.2

A set $C \subseteq F_q^n$ is an (n, m, d) code if

● all codewords have n letters (since they are in $F_q^n$),
● $m = |C|$; i.e. there are m codewords,
● $d = d_C$ = minimum distance of the code
  $:= \min \{ d(v, w) := \mathrm{wt}(v - w) : v \neq w \text{ and } v, w \in C \}$, where wt(x) := number
  of nonzero coordinates of x.

The Goal of Coding Theory

Construct C with d large, m large, and n small. Usually, we phrase this as:
$\mathrm{rate}_q(C) := \frac{\log_q(m)}{n} \le 1$ should be large.

Now assume C is an $F_q$-linear code; i.e. C is an $F_q$-subspace of $F_q^n$. Then C is
called an [n, k, d] $F_q$-linear code if $k = \dim_{F_q} C$. In other words, an [n, k, d] code
C is an $(n, q^k, d)$-code and has $\mathrm{rate}_q(C) = k/n$.
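The parameters of Definition 5.2 can be computed directly for a small code. The sketch below is an added illustration: the function names are hypothetical, and the example code is the binary even-weight code of length 3, chosen only because it is small.

```python
import math
from itertools import combinations

def hamming_distance(v, w):
    """Number of coordinates in which v and w differ, i.e. wt(v - w)."""
    return sum(x != y for x, y in zip(v, w))

def code_parameters(C, q):
    """Return (n, m, d, rate) for a code C of equal-length tuples over q symbols."""
    C = list(C)
    n = len(C[0])
    m = len(C)
    d = min(hamming_distance(v, w) for v, w in combinations(C, 2))
    return n, m, d, math.log(m, q) / n

# Binary even-weight code of length 3: an F_2-linear code of dimension k = 2.
even_weight = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
n, m, d, rate = code_parameters(even_weight, 2)
print(n, m, d)
```

Here the code is a (3, 4, 2) code, equivalently a [3, 2, 2] $F_2$-linear code, and the computed rate agrees with k/n = 2/3 up to floating-point rounding.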

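An [n, k, d] $F_q$-linear code C can be specified by giving an $(n - k) \times n$ parity check
matrix

$$H = \begin{pmatrix} | & | & | & \\ v_1 & v_2 & v_3 & \cdots \\ | & | & | & \end{pmatrix}$$

with the property that $\mathrm{rowspace}(H) = C^{\perp} := \{ u \in F_q^n : u \cdot v = 0 \ \forall v \in C \}$.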

Remark 5.3

$d = d_C$ = minimum distance of C
= minimum number of linearly dependent columns in H
= minimum size of a set of linearly dependent columns of H.

Corollary 5.4

Define $\mathrm{Ind}_q(N, l)$ := maximum size of a set of vectors in $F_q^N$ which are linearly
independent l at a time. Then $\exists$ an [n, k, d] $F_q$-linear code $\iff \mathrm{Ind}_q(n - k, d - 1) \ge n$.
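As a small worked instance of Corollary 5.4, take q = 2, N = 3 and l = 2. This example is added for illustration and uses the standard binary Hamming code rather than a construction from this chapter.

```latex
% Columns of H: all 7 nonzero vectors of F_2^3.
H = \begin{pmatrix}
1 & 0 & 0 & 1 & 1 & 0 & 1 \\
0 & 1 & 0 & 1 & 0 & 1 & 1 \\
0 & 0 & 1 & 0 & 1 & 1 & 1
\end{pmatrix}

% Any two distinct nonzero binary vectors are linearly independent,
% so the 7 columns are independent 2 at a time, while the columns
% (1,0,0), (0,1,0), (1,1,0) are dependent. With n - k = 3 and d - 1 = 2:
\mathrm{Ind}_2(3, 2) \ge 7
\iff \exists \text{ a } [7, 4, 3] \ F_2\text{-linear code}.
```

Since $F_2^3$ contains only 7 nonzero vectors, in this case $\mathrm{Ind}_2(3, 2) = 7$ exactly.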

Gilbert – Varshamov bound

This bound asserts the existence of a linear code with certain parameters, assuming that a
certain inequality is met. This bound applies only to linear codes.

Consider a linear code with alphabet $F_q$, block size n, dimension k, and minimum
distance d. That is, a generating matrix would be $k \times n$: we are looking at [n, k, d] codes.

Theorem 5.5

If

$$q^{n-k} - 1 > (q-1)\binom{n-1}{1} + \cdots + (q-1)^{d-3}\binom{n-1}{d-3} + (q-1)^{d-2}\binom{n-1}{d-2},$$

then an [n, k, d] code over alphabet $F_q$ exists. That is, the block size is n, the dimension
is k, and the minimum distance is d. Specializing to the case q = 2 gives a simpler looking assertion:

Corollary 5.6

If

$$2^{n-k} - 1 > \binom{n-1}{1} + \binom{n-1}{2} + \cdots + \binom{n-1}{d-3} + \binom{n-1}{d-2},$$

then a binary [n, k, d] code exists.
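The inequality of Theorem 5.5 is easy to evaluate numerically. The sketch below is an added illustration with a hypothetical function name. It also shows that the condition is sufficient but not necessary: the [8, 2, 5] binary code constructed in Section 5.1 exists even though the inequality fails for those parameters.

```python
from math import comb

def gv_guarantees(n, k, d, q=2):
    """True if q^(n-k) - 1 > sum_{j=1}^{d-2} (q-1)^j * C(n-1, j)."""
    rhs = sum((q - 1) ** j * comb(n - 1, j) for j in range(1, d - 1))
    return q ** (n - k) - 1 > rhs

print(gv_guarantees(7, 4, 3))       # 7 > 6, prints: True
print(gv_guarantees(8, 2, 5))       # 63 > 63 fails, prints: False
print(gv_guarantees(4, 2, 3, q=3))  # 8 > 6, prints: True
```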

Plotkin

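If C is an $F_2$ code (not necessarily linear) which is (n, m, d) with n < 2d, then

$$m \le \frac{2d}{2d - n}.$$

In particular if C is $F_2$-linear, say [n, k, d] with n < 2d where $m = 2^k$, then

$$2^k \le \frac{2d}{2d - n}.$$

Suppose $\mathrm{Ind}_2(N, l) = i$. Then, by Corollary 5.4, $\exists$ an $F_2$-linear code C which is [n, k, d]
with n = i, k = i - N and d = l + 1. Hence if $i \le 2l + 1$, then Plotkin says

$$2^{i-N} \le \frac{2(l+1)}{2(l+1) - i}$$

$$\Rightarrow\ (2(l+1) - i)\, 2^{i-N} \le 2(l+1)$$

$$\Rightarrow\ \log_2 (2(l+1) - i) + i - N \le \log_2 (2(l+1))$$

$$\Rightarrow\ \text{an upper bound of } i.$$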
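The resulting inequality can be checked numerically for given N, l and i. The sketch below is an added illustration with a hypothetical function name; it multiplies both sides by $2^N$ so that only integer arithmetic is needed.

```python
def plotkin_allows(N, l, i):
    """Check (2(l+1) - i) * 2^(i-N) <= 2(l+1), valid only in the range i <= 2l + 1."""
    if i > 2 * l + 1:
        raise ValueError("Plotkin's hypothesis n < 2d requires i <= 2l + 1")
    # Both sides multiplied by 2^N to stay within the integers.
    return (2 * (l + 1) - i) * 2 ** i <= 2 * (l + 1) * 2 ** N

print(plotkin_allows(3, 3, 4))  # 64 <= 64, prints: True
print(plotkin_allows(3, 3, 5))  # 96 <= 64 fails, prints: False
print(plotkin_allows(6, 4, 8))  # 512 <= 640, prints: True
```

For N = l = 3 the inequality rules out i = 5, and since any subset of an l-independent set is again l-independent, this gives $\mathrm{Ind}_2(3, 3) \le 4$. For N = 6, l = 4 the value i = 8 from Section 5.1 passes the test, as it must.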

Singleton bound

If $\exists$ an (n, m, d) $F_q$ code C, then $m \le q^{n-(d-1)}$.
So if $\exists$ an [n, k, d] $F_q$-linear code C, then $q^k \le q^{n-(d-1)}$; i.e. $k \le n - (d - 1)$.

Suppose $\mathrm{Ind}_q(N, l) = i$; i.e. $\exists$ an $F_q$-linear code which is [n, k, d] with n = i,
k = i - N and d = l + 1. Hence, we must have $i - N \le i - l$; i.e. $N \ge l$. So Singleton
says nothing about the $\mathrm{Ind}_q(N, l)$ problem.

Girth

If one has a graph G = (V, E) with v = |V| vertices, e = |E| edges, and girth g = the
minimum number of edges in a cycle (closed path) of G, then we can now obtain a set of
vectors in $F_q^{v-1}$ (for any field $F_q$) which are linearly independent taken g - 1 at a time
in the following way.

Examples of Girth

[Figure: a graph on the vertices A, B, C, D, E, F.]
This graph has a girth of g = 4.

[Figure: a graph on the vertices A, B, C, D, E.]
This graph has a girth of g = 3.

So, in general we orient the edges of G arbitrarily.

[Figure: a graph on the vertices 1, ..., 6 with oriented edges labeled a, ..., g.]

The following matrix has e = |E| columns and v - 1 = |V| - 1 rows.

[Matrix: row labels 1, ..., 6; column labels a, ..., g; entries 0 and ±1.]

Row 6 is the signed vertex – edge incidence matrix.

Hence $\forall q$, $\mathrm{Ind}_q(v - 1, g - 1) \ge e$. Thus, constructions of dense graphs with large girth
will give lower bounds of $\mathrm{Ind}_q(N, l)$ $\forall q$.
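The bound can be checked on a concrete graph. The sketch below is an added illustration, not the graph drawn above: it uses the complete bipartite graph $K_{3,3}$ (v = 6, e = 9, g = 4), the helper names are hypothetical, and the rank is computed by Gaussian elimination modulo a prime p, so only prime fields are covered.

```python
from itertools import combinations

def rank_mod_p(vectors, p):
    """Rank over F_p (p prime) of the given vectors, by Gaussian elimination."""
    rows = [[x % p for x in vec] for vec in vectors]
    rank = 0
    width = len(rows[0]) if rows else 0
    for col in range(width):
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col]), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        inv = pow(rows[rank][col], -1, p)          # modular inverse (Python 3.8+)
        rows[rank] = [(x * inv) % p for x in rows[rank]]
        for r in range(len(rows)):
            if r != rank and rows[r][col]:
                f = rows[r][col]
                rows[r] = [(x - f * y) % p for x, y in zip(rows[r], rows[rank])]
        rank += 1
    return rank

def incidence_columns(num_vertices, edges):
    """One vector per oriented edge (u, w): +1 at u, -1 at w, last vertex row dropped."""
    cols = []
    for u, w in edges:
        col = [0] * num_vertices
        col[u] += 1
        col[w] -= 1
        cols.append(tuple(col[:-1]))
    return cols

# K_{3,3}: vertices 0, 1, 2 on one side, 3, 4, 5 on the other; every edge oriented u -> w.
edges = [(u, w) for u in range(3) for w in range(3, 6)]
cols = incidence_columns(6, edges)

any_three_independent = all(
    rank_mod_p(c, p) == 3 for p in (2, 3, 5) for c in combinations(cols, 3)
)
four_cycle = [cols[0], cols[1], cols[3], cols[4]]   # edges 0-3, 0-4, 1-3, 1-4
print(any_three_independent, rank_mod_p(four_cycle, 3))  # prints: True 3
```

Any g - 1 = 3 of the 9 columns are independent, while the 4 columns of a 4-cycle have rank 3, which is consistent with $\mathrm{Ind}_q(5, 3) \ge 9$ for q = 2, 3, 5.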


References

Bose, R.C. (1938). On the application of the properties of Galois fields to the problem

of hyper – Greco latin squares. Sankhya, 3, 323 – 338.

Damelin, S.B., Michalski, G. & Mullen, G. The cardinality of sets of k –
independent vectors over finite fields. To appear in Monatsh. Math.

Damelin, S.B., Michalski, G., Mullen, G.L. & Stone, D. (2004). The number of

linearly independent binary vectors with applications to the construction of

hypercubes and orthogonal arrays, pseudo (t, m, s) – nets and linear codes.

Monatsh. Math., 141, 277 – 288.

Garrett, P. (2003). The Mathematics of Coding Theory. Upper Saddle River, NJ:

Prentice Hall.

Lawrence, K.M. (1995). Combinatorial bounds and constructions in the theory of

uniform point distributions in unit cubes, connections with orthogonal arrays and

a poset generalization of a related problem in coding theory. Unpublished Ph.D.

Thesis, University of Wisconsin.

Laywine, C.F. & Mullen, G.L. (2002). A hierarchy of complete orthogonal

structures. Ars Combinatoria, 63, 75 – 88.

Lidl, R. & Niederreiter, H. (1997). Finite fields (2nd ed.). Encyclopedia of Mathematics
and its Applications, Vol. 20. Cambridge University Press.

Mullen, G.L. (1998). Orthogonal hypercubes and related designs. Journal of

Statistical Planning and Inference, 73, 177 – 188.


Mullen, G.L. & Schmid, W.C. (1996). An equivalence between (t, m, s) – nets and

strongly orthogonal hypercubes. J. Combinatorial Theory, Ser. A., 76, 164 – 174.

Mullen, G.L. & Whittle, G. (1992). Point sets with uniformity properties and

orthogonal hypercubes. Monatsh. Math., 113, 265 – 273.

Niederreiter, H. (1987). Point sets and sequences with small discrepancy. Monatsh.

Math., 104, 273 – 337.