Text S1: Maximum-likelihood estimation of model parameters

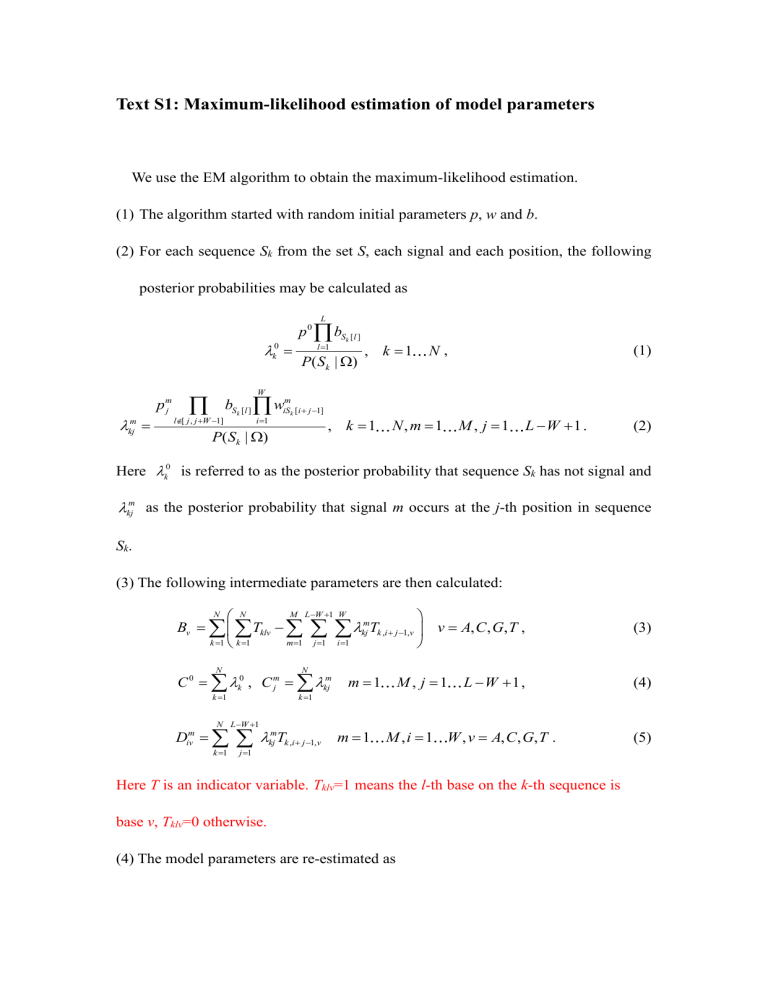

We use the expectation-maximization (EM) algorithm to obtain maximum-likelihood estimates of the model parameters.

(1) The algorithm starts with random initial values of the parameters $p$, $w$ and $b$.

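(2) For each sequence $S_k$ from the set $S$, each signal $m$ and each position $j$, the following posterior probabilities are calculated:

$$\gamma^k_0 = \frac{p_0 \prod_{l=1}^{L} b_{S_k[l]}}{P(S_k \mid p, w, b)}, \quad k = 1, \dots, N, \tag{1}$$

$$\gamma^m_{kj} = \frac{p^m_j \prod_{l \notin [j,\, j+W-1]} b_{S_k[l]} \prod_{i=1}^{W} w^m_{i,\, S_k[j+i-1]}}{P(S_k \mid p, w, b)}, \quad k = 1, \dots, N, \; m = 1, \dots, M, \; j = 1, \dots, L-W+1. \tag{2}$$

Here $S_k[l]$ denotes the $l$-th base of sequence $S_k$, $\gamma^k_0$ is the posterior probability that sequence $S_k$ contains no signal, and $\gamma^m_{kj}$ is the posterior probability that signal $m$ occurs at the $j$-th position in sequence $S_k$.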

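(3) The following intermediate parameters are then calculated from the posterior probabilities $\gamma^k_0$ and $\gamma^m_{kj}$:

$$B_v = \sum_{k=1}^{N} \sum_{l=1}^{L} T_{klv} - \sum_{k=1}^{N} \sum_{m=1}^{M} \sum_{j=1}^{L-W+1} \sum_{i=1}^{W} \gamma^m_{kj} \, T_{k,\, j+i-1,\, v}, \quad v \in \{\mathrm{A}, \mathrm{C}, \mathrm{G}, \mathrm{T}\}, \tag{3}$$

$$C_0 = \sum_{k=1}^{N} \gamma^k_0, \qquad C^m_j = \sum_{k=1}^{N} \gamma^m_{kj}, \quad m = 1, \dots, M, \; j = 1, \dots, L-W+1, \tag{4}$$

$$D^m_{iv} = \sum_{k=1}^{N} \sum_{j=1}^{L-W+1} \gamma^m_{kj} \, T_{k,\, j+i-1,\, v}, \quad m = 1, \dots, M, \; i = 1, \dots, W, \; v \in \{\mathrm{A}, \mathrm{C}, \mathrm{G}, \mathrm{T}\}. \tag{5}$$

Here $T$ is an indicator variable: $T_{klv} = 1$ if the $l$-th base of the $k$-th sequence is base $v$, and $T_{klv} = 0$ otherwise.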

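(4) The model parameters are re-estimated as

$$b_v = \frac{B_v}{\sum_{v'} B_{v'}}, \quad v \in \{\mathrm{A}, \mathrm{C}, \mathrm{G}, \mathrm{T}\}, \tag{6}$$

$$p_0 = \frac{C_0}{C_0 + \sum_{m=1}^{M} \sum_{j=1}^{L-W+1} C^m_j}, \tag{7}$$

$$p^m_j = \frac{C^m_j}{C_0 + \sum_{m'=1}^{M} \sum_{j'=1}^{L-W+1} C^{m'}_{j'}}, \quad m = 1, \dots, M, \; j = 1, \dots, L-W+1, \tag{8}$$

$$w^m_{iv} = \frac{D^m_{iv}}{\sum_{v'} D^m_{iv'}}, \quad m = 1, \dots, M, \; i = 1, \dots, W, \; v \in \{\mathrm{A}, \mathrm{C}, \mathrm{G}, \mathrm{T}\}. \tag{9}$$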

(5) Steps (2) to (4) are repeated until the parameters converge.
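Steps (1) to (5) can be sketched in a few dozen lines of Python. This is only an illustrative sketch under stated assumptions, not the implementation used for the paper: it assumes all sequences have the same length $L$ over the alphabet A, C, G, T, that each sequence contains at most one signal occurrence, so that $P(S_k \mid p, w, b)$ is the sum of the numerators of all posterior probabilities for that sequence, and it stops when the log-likelihood gain is small, used here as a stand-in for parameter convergence. The function names (`init_params`, `em_step`, `run_em`) and the toy sequences are hypothetical.

```python
import math
import random

BASES = "ACGT"

def random_dist(n, rng):
    """Return n strictly positive random numbers that sum to 1."""
    x = [rng.random() + 0.1 for _ in range(n)]
    total = sum(x)
    return [v / total for v in x]

def init_params(M, L, W, rng):
    """Step (1): random p0, p[m][j], w[m][i][v] and b[v]."""
    J = L - W + 1  # number of admissible start positions
    flat = random_dist(1 + M * J, rng)
    p = [flat[1 + m * J: 1 + (m + 1) * J] for m in range(M)]
    w = [[dict(zip(BASES, random_dist(4, rng))) for _ in range(W)]
         for _ in range(M)]
    b = dict(zip(BASES, random_dist(4, rng)))
    return flat[0], p, w, b

def em_step(seqs, p0, p, w, b):
    """Steps (2)-(4) once. Returns the updated parameters and the
    log-likelihood of the parameters that were passed in."""
    M, W, J = len(w), len(w[0]), len(p[0])
    B = {v: 0.0 for v in BASES}
    C0, C = 0.0, [[0.0] * J for _ in range(M)]
    D = [[{v: 0.0 for v in BASES} for _ in range(W)] for _ in range(M)]
    loglik = 0.0
    for s in seqs:
        # Numerators of the two posterior probabilities. Plain products can
        # underflow for long sequences; log-space arithmetic would be safer.
        num0 = p0 * math.prod(b[c] for c in s)
        num = [[p[m][j]
                * math.prod(b[c] for c in s[:j] + s[j + W:])
                * math.prod(w[m][i][s[j + i]] for i in range(W))
                for j in range(J)] for m in range(M)]
        z = num0 + sum(map(sum, num))  # assumed form of P(S_k | p, w, b)
        loglik += math.log(z)
        C0 += num0 / z                      # posterior of "no signal"
        for c in s:
            B[c] += 1.0                     # total base counts
        for m in range(M):
            for j in range(J):
                g = num[m][j] / z           # posterior of signal m at j
                C[m][j] += g
                for i in range(W):
                    D[m][i][s[j + i]] += g  # expected signal counts
                    B[s[j + i]] -= g        # remove them from background
    B = {v: max(x, 0.0) for v, x in B.items()}  # guard against round-off
    b_sum = sum(B.values())
    c_sum = C0 + sum(map(sum, C))
    b_new = {v: B[v] / b_sum for v in BASES}
    p_new = [[c / c_sum for c in row] for row in C]
    w_new = [[{v: col[v] / sum(col.values()) for v in BASES}
              for col in D[m]] for m in range(M)]
    return (C0 / c_sum, p_new, w_new, b_new), loglik

def run_em(seqs, M, W, rng, max_iter=200, tol=1e-8):
    """Step (5): iterate until the log-likelihood gain drops below tol."""
    params = init_params(M, len(seqs[0]), W, rng)
    prev = -math.inf
    for _ in range(max_iter):
        params, loglik = em_step(seqs, *params)
        if loglik - prev < tol:
            break
        prev = loglik
    return params, loglik

# Toy data (made up for illustration): four sequences of length 10.
toy_seqs = ["ACGTACGTTT", "TTACGTAGGA", "GGGACGTACC", "CATACGTGTA"]
toy_params, toy_loglik = run_em(toy_seqs, M=1, W=4, rng=random.Random(0))
```

Because the re-estimation step maximizes the expected complete-data log-likelihood exactly, the log-likelihood returned by successive calls to `em_step` should never decrease, which is a convenient sanity check for any implementation.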

The EM algorithm is guaranteed to converge, but it may converge to a local maximum, especially when the likelihood landscape is complex. This problem can be mitigated by repeating the estimation many times with different random initial parameter values. The final estimate is the one with the highest likelihood among all runs.
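The restart strategy can be sketched as a small wrapper that keeps the run with the highest log-likelihood. The sketch below is an assumption-laden illustration: `best_of_restarts` is a hypothetical helper, and `toy_run` is a stand-in for a full EM run (a simple hill climb on a made-up objective with two maxima), used only so that the example is self-contained.

```python
import math
import random

def best_of_restarts(run_once, n_restarts, seed=0):
    """Call run_once(rng) n_restarts times, each with its own random
    generator, and keep the result with the highest log-likelihood."""
    best_params, best_ll = None, -math.inf
    for r in range(n_restarts):
        params, ll = run_once(random.Random(seed + r))
        if ll > best_ll:
            best_params, best_ll = params, ll
    return best_params, best_ll

def toy_log_likelihood(x):
    """Made-up objective: local maximum near x = -2, global near x = 2."""
    return math.log(0.3 * math.exp(-(x + 2) ** 2)
                    + 0.7 * math.exp(-(x - 2) ** 2))

def toy_run(rng, step=0.01, max_steps=2000):
    """Stand-in for one EM run: climb uphill from a random start and
    return (parameter, log-likelihood) at the maximum that was reached."""
    x = rng.uniform(-4.0, 4.0)
    for _ in range(max_steps):
        here = toy_log_likelihood(x)
        if toy_log_likelihood(x + step) > here:
            x += step
        elif toy_log_likelihood(x - step) > here:
            x -= step
        else:
            break  # neither neighbor is better: a (possibly local) maximum
    return x, toy_log_likelihood(x)

best_x, best_ll = best_of_restarts(toy_run, n_restarts=20)
```

A single `toy_run` that starts in the left basin stops at the lower maximum, whereas the best of many restarts is very likely to find the higher one. The number of restarts is a trade-off between computing time and the chance of missing the global maximum.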