Zoology 439 L


Simple Statistical Analyses

There are very few simple yes-or-no questions in biology. Most biological questions are answered by gathering quantitative data (rainfall rates, potassium influx rates, the area of an animal’s home range, etc.) and making INFERENCES from this information. When you begin working with quantitative data you come in contact with at least three sources of potential error:

1) BIOLOGICAL VARIABILITY (no two individual animals are exactly alike);

2) RANDOM EVENTS (or chance occurrences); and

3) ERRORS IN MEASUREMENT (misreading instruments, mistakes in note taking, etc.).

How do you determine whether your quantitative measurements accurately describe the “real” biological situation you are studying? The basic theme of this laboratory is the use of several simple statistical tests, first, to extract worthwhile information about a POPULATION when you only have data from a small portion (a SAMPLE) of the entire population; and second, to determine whether you can say with some degree of confidence that two samples come from different (or similar) populations. This question is encountered very frequently in many branches of science.

A POPULATION is considered here to be some set of items that are all similar and that reside within a definable boundary. It’s up to the investigator to decide what “population” she is going to work with. Examples of defined populations include all residents of Kāne‘ohe between the ages of 30 and 60; all pineapple plants in a 5-acre plot; the heights of all trees on a particular site; the monthly rainfall values at UH over the last 10 years; etc. Note that a population may be composed either of individuals or of measurements on individuals. Since it is usually impractical, or even impossible, to measure all of the items in a population (i.e., to take a CENSUS), you generally try to describe the characteristics of a population by taking measurements of a portion of it. That portion is your SAMPLE. The methods used to obtain unbiased samples will be dealt with extensively below; for the moment you need not worry about how you get your sample, but as you are sampling in this exercise, think about the possible sources of bias. Once the sample is obtained, you will estimate the values of PARAMETERS that describe the population and assign some CONFIDENCE LEVEL to these estimates. Then you will compare your population estimates to determine whether they are different.

II.

Making the Measurements

In the lab you will be provided with three bags containing Koa Haole (Leucaena leucocephala (Lam.) de Wit) seedpods. (See http://www.hear.org/starr/hiplants/images/thumbnails/html/leucaena_leucocephala.htm for images of this plant.) Two of the bags of seedpods were collected from one tree, while the third bag of seedpods came from a different tree. The goal of today’s exercise is to determine which two bags came from the same tree and which bag came from a different tree.

To do this you will make measurements and observations of the seedpods and analyze the data you collect. BUT there are far too many seedpods in each bag for you to measure them all (i.e., to do a census), so you will have to work with only some of the seedpods from each bag. Which ones? Remember, for the time being you are ignoring the manner in which the seedpods were collected from the trees, but this is certainly another potential source of bias. In other words, the bags of seedpods are themselves samples from the two trees: one bag of seedpods from one tree and two bags from the other tree.

A second goal of today’s exercise is to determine how you can obtain an unbiased sample of seedpods to measure from the population of seedpods in each bag.

Each bag will be coded with a letter, and you will collect and analyze samples from all three bags. Your first chore will be to determine how to get an unbiased sample from each bag. This is not an easy task. Your group should discuss this and try to

1) think about possible sources of bias in getting the sample, and

2) devise ways to avoid or reduce each of these sources of bias.
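One commonly used way to reduce selection bias (for example, unconsciously favoring large or conspicuous pods) is simple random sampling: give every pod in the bag a number and let a random-number generator pick which numbers to measure. The Python sketch below assumes you have already counted and numbered the pods; the function name `choose_pods` and the numbers in the example are hypothetical, and this is only one possible protocol, not necessarily the one your TA will approve.

```python
import random
from typing import Optional


def choose_pods(n_pods_in_bag: int, sample_size: int, seed: Optional[int] = None) -> list:
    """Pick `sample_size` distinct pod numbers from 1..n_pods_in_bag.

    Every pod has the same chance of being chosen (simple random sampling
    without replacement). Passing a seed makes the draw reproducible, so it
    can be recorded in your lab notebook.
    """
    rng = random.Random(seed)
    return sorted(rng.sample(range(1, n_pods_in_bag + 1), sample_size))


# Hypothetical example: a bag holding 180 numbered pods, 30 to be measured.
print(choose_pods(180, 30, seed=439))
```

Numbering every pod is itself laborious, which is one reason the choice of sampling protocol is worth discussing with your group.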

Your next chore will be to determine what the sample size should be. Obviously the total number of seedpods in the bag is too large for you to be able to measure them all (also remember that the contents of each bag are themselves a sample of the tree). On the other hand, too small a sample may not give you a good estimate of the population parameters. Can you devise an objective mechanism or procedure to tell you what an appropriate sample size should be? What information would you need to know before you can answer that question?
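One standard approach (an assumption here, not a procedure given in this handout) is to measure a small pilot sample first, use its standard deviation s as a rough estimate of the population value, decide how close (plus or minus E) you want your estimate of the mean to be, and then solve n ≈ (z·s/E)², with z ≈ 1.96 for roughly 95% confidence. The function name and the pilot numbers below are hypothetical. Because the formula uses z rather than t, it tends to give a slightly too small n when samples are very small.

```python
import math


def sample_size_for_mean(pilot_sd: float, margin: float, z: float = 1.96) -> int:
    """Approximate n needed so the sample mean is within +/- margin of the
    population mean about 95% of the time (z = 1.96), given a pilot SD.

    Uses n = (z * s / E) ** 2, rounded up to the next whole pod.
    """
    return math.ceil((z * pilot_sd / margin) ** 2)


# Hypothetical pilot: pod lengths with s = 2.0 cm; we want the mean within 0.5 cm.
print(sample_size_for_mean(2.0, 0.5))   # 62
print(sample_size_for_mean(2.0, 0.25))  # 246
```

Notice that halving the margin roughly quadruples the required sample size.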

Before you begin, check with the TA or teacher to go over your sampling protocol.

There are many traits you could determine for the seedpods, including chemical and physical factors. For the purposes of this exercise you will limit the traits measured to two: one continuous (seedpod length) and one discrete (number of seeds per pod). Continuous variables are those for which any value is possible along a spectrum, e.g., lengths, weights, hemoglobin levels, etc. Discrete variables are traits that can be counted, e.g., the number of bristles on a fly’s foreleg, the number of eggs laid by a frog, etc. [However, think about this: if you calculate a mean density, say the number of coral heads per square meter, is that continuous or discrete?]

Measure the length of each pod in metric units (millimeters or centimeters) and also record the number of seeds in that pod. Record all pertinent data in your lab notebook.

III.

Sample Population Statistics

It is always important to get a “feel” for your data before you spend much time (and money) on more complex analyses. Simply looking at the data (as in a list of measurements) isn’t likely to be much help, so graphic visualizations are used. A very basic type of graphic visualization is the FREQUENCY HISTOGRAM. To make a frequency histogram you need to group the measurement data into discrete intervals. This is more straightforward for discrete variables (such as number of seeds per pod) but may require some thinking for continuous variables (such as pod length). The choice of interval is up to you, but there is usually some optimum number of intervals that maximizes the visual information available. This, of course, may be different in different circumstances. But remember that if you want to make comparisons between data sets, you may want to use the same X axis (the same intervals) for all of them. Tally your data values into the interval categories to get the number of measurements (pods) in each category. These counts are then plotted as a histogram, with the intervals along the horizontal (X) axis and the frequency along the vertical (Y) axis.
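The tallying step can be sketched in a few lines of Python. This is an illustrative sketch: the pod lengths are made-up numbers, and the function names, starting value, and interval width are assumptions you would replace with your own choices. Each interval includes its lower limit and excludes its upper limit, and using the same `start` and `width` for every bag gives all your histograms the same X axis.

```python
def frequency_table(values, start, width, n_bins):
    """Count how many values fall in each interval [start + k*width, start + (k+1)*width)."""
    counts = [0] * n_bins
    for v in values:
        k = int((v - start) // width)
        if not 0 <= k < n_bins:
            raise ValueError(f"{v} lies outside the chosen intervals; widen them")
        counts[k] += 1
    return counts


def print_histogram(counts, start, width):
    """Print a sideways text histogram: one row per interval, one '#' per pod."""
    for k, count in enumerate(counts):
        low = start + k * width
        print(f"{low:5.1f} to {low + width:5.1f} cm | {'#' * count}")


# Hypothetical pod lengths (cm) from one bag
lengths = [12.1, 13.4, 13.9, 14.2, 14.8, 15.0, 15.3, 16.7, 17.2, 18.9]
counts = frequency_table(lengths, start=12.0, width=2.0, n_bins=4)
print_histogram(counts, start=12.0, width=2.0)
```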

For many biological samples, the resulting frequency distribution is “bell-shaped”. This bell-shaped curve is informally referred to as a NORMAL DISTRIBUTION. (Strictly, a normal distribution is defined by a particular mathematical function that is completely determined by two parameters, which we will discuss below.) Why should most measurements on biological material be distributed in such a manner, with most of the measurements clustering about an “average” value, but with a few extreme values on either side? The answer lies partly in the genetic variability that is inherent in all biological material. No two individuals are genetically exactly alike. While closely related individuals have many of the same genes in common, slight genetic differences do exist. Thus, if the fur color of rabbits has a genetic basis, then a good camouflage color like brown will be the most common color in the population. However, a few rabbits will carry genes for lighter or darker coat color, especially if fur color is under the control of several genes. But you also see normal distributions in situations where genetics (or even biology) plays no part, so there is more (much more) to this tendency for values to be distributed this way.

One of the nice things about the normal distribution is that it is symmetrical, and any particular normal distribution can be completely specified (or described) if you know just two parameters: the central value, the MEAN (or average); and the distance from the mean to the point of inflection of the curve, the STANDARD DEVIATION. You are very familiar with the concept of a mean or an average. The standard deviation is basically a measure of how broadly the values are scattered around the mean.
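Because these two parameters pin the curve down completely, you can compute, for example, what fraction of any normal population lies within a given number of standard deviations of its mean. The Python sketch below relies on the standard relationship between the normal curve and the error function, using the built-in `math.erf`; the function names are our own, not from any statistics package.

```python
import math


def normal_density(x, mean, sd):
    """Height of the normal curve with the given mean and standard deviation at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2 * math.pi))


def fraction_within(k):
    """Fraction of any normal population lying within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))


for k in (1, 1.96, 2, 3):
    print(f"within {k} SD: {fraction_within(k):.3f}")
```

About 68% of values lie within one standard deviation of the mean and about 95% within 1.96 standard deviations, whatever the particular mean and standard deviation are.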

Statistical analyses that assume a normal distribution (parametric statistics) rely heavily on these two parameters: the mean and the standard deviation (or its square, the variance). The majority of today’s exercise will be devoted to the methods of calculating these statistics and seeing what can be done with them. But you must always remember that parametric statistics should only be used when the distribution of the data is known to be normal. (See Ch. 6 in Sokal & Rohlf, or http://mathworld.wolfram.com/NormalDistribution.html and http://www-stat.stanford.edu/~naras/jsm/NormalDensity/NormalDensity.html, for a more extensive discussion of the normal distribution.)

IV.

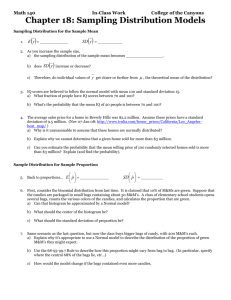

Calculation of Sample Mean and Standard Deviation

If the distribution of sample values is normal, the sample mean and sample standard deviation completely describe it. They may be calculated from the sample measurements by following the procedures given below.

1. The mean

$$\bar{X} = \frac{\sum_{i=1}^{n} X_i}{n}$$

where

$\bar{X}$ is the symbol for the mean,

$X_i$ is the $i$th individual measurement,

$n$ is the total number of measurements (the sample size), and

$\sum$ is a symbol that means “the sum of all measurements from i = 1 to i = n”.

2. The standard deviation

$$s = \sqrt{\frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n-1}}$$

or an algebraically equivalent formula that is more convenient when using calculators,

$$s = \sqrt{\frac{\sum_{i=1}^{n} X_i^2 - \left(\sum_{i=1}^{n} X_i\right)^2 / n}{n-1}}$$

where s is the symbol for the standard deviation and the rest of the notation is the same as given for the mean.
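The two formulas for s can be checked against each other in a short Python sketch. The function names and the eight example values are illustrative assumptions; the standard-library function `statistics.stdev`, which also divides by n - 1, is used only as a cross-check.

```python
import math
import statistics


def sample_mean(xs):
    """X-bar: the sum of all measurements divided by n."""
    return sum(xs) / len(xs)


def sd_definitional(xs):
    """s from the sum of squared deviations from the mean, divided by n - 1."""
    n = len(xs)
    m = sample_mean(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / (n - 1))


def sd_calculator(xs):
    """s from the calculator form: (sum of X^2 - (sum of X)^2 / n) / (n - 1)."""
    n = len(xs)
    sum_x = sum(xs)
    sum_x2 = sum(x * x for x in xs)
    return math.sqrt((sum_x2 - sum_x ** 2 / n) / (n - 1))


data = [2, 4, 4, 4, 5, 5, 7, 9]  # illustrative values only
print(sample_mean(data))                 # 5.0
print(round(sd_definitional(data), 4))   # 2.1381
print(round(sd_calculator(data), 4))     # 2.1381
print(round(statistics.stdev(data), 4))  # 2.1381
```

With real measurements the calculator form can lose precision when the values are large and very similar, because it subtracts two big, nearly equal numbers; the definitional form avoids that problem.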

Many pocket calculators are programmed to calculate the mean and standard deviation directly from your data, but you should study these formulae to get an understanding of the meaning of these statistics. These basic descriptive sample statistics are also easily determined in spreadsheet applications such as MS Excel®. Other statistics (e.g., mode, median, range, etc.) also have their uses, but you will use them less in this lab. Because many applications make calculating statistical parameters so quick and easy, it is important to really know what you are doing before hitting the fx (function) key.

V.

Comparing Two Populations

In almost all cases where you compare data obtained from two samples, they will be different, even if they are from the same population. Imagine you flip a coin 10 times and keep a record of the results (e.g., H T T H T, etc.). It’s unlikely (and more and more unlikely as you flip the coin more and more times) that you will get the same sequence of heads and tails in two sets of trials. BUT what about the number of heads? If it is an honest coin, you expect that on average you will get about 50% heads in a sample of appropriate size, and the proportion of heads gets closer and closer to exactly 50% as the sample size grows without limit. But in two sets of 10 coin tosses, are you always going to get 5 heads and 5 tails, even with an honest coin and no bias in the tossing? There are statistical tests to help you determine whether two samples that are somewhat different may in fact have come from the same population (in this example, the population of heads and tails available from this honest coin; in this lab, two samples of seedpods). An assumption of the tests you will use in this exercise is that the underlying distributions are normal. These parametric tests are based on asking the question

“What are the chances that two unbiased samples taken from the same population will differ by the amount that my two samples differ?”
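A quick simulation makes the coin example concrete. This Python sketch (the seed, function name, and number of repetitions are arbitrary choices) tosses a simulated fair coin in two sets of 10, repeats that 1000 times, and counts how often the two sets give different numbers of heads. Worked out exactly, two sets of 10 tosses agree in their number of heads only about 18% of the time (C(20,10)/2^20 ≈ 0.176), so roughly 82% of pairs should differ even though every toss comes from the same honest coin.

```python
import random


def heads_in(n_tosses, rng):
    """Number of heads in n_tosses flips of a fair (simulated) coin."""
    return sum(rng.random() < 0.5 for _ in range(n_tosses))


rng = random.Random(2024)
pairs = [(heads_in(10, rng), heads_in(10, rng)) for _ in range(1000)]
differ = sum(a != b for a, b in pairs)
print(f"{differ} of 1000 pairs of 10-toss samples gave different numbers of heads")
print("example pairs:", pairs[:5])
```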

Note that there are actually two populations under consideration here: the real-world population that you took your samples from, and an ideal population with certain known features. The tables you will use (or can find in any statistics book) are based (at least theoretically) on drawing many samples of a given size (n) from this ideal population and determining how much they differ. That is, in theory, the table-maker takes thousands of samples of size 2, thousands of size 3, thousands of size 4, and so on, and gets the distribution of the statistics (say the mean and standard deviation) for each set of samples. Since the table-maker knows that the samples were drawn from the same population, the differences she finds are those expected when two (or more) samples are drawn from what is really the same source population. As you would expect, most of the pairwise differences are small (e.g., for most pairs of means the differences are not very big), but out of 1000 samples you would be sure to find a couple of means that were quite different by chance alone. The tables you will use are constructed so that, for two samples of a specific size (n) drawn from the same population, you can look up the probability that they would differ by as much as your two real-world samples do. (So far you are dealing with pairwise tests, but all of this can be generalized to more than two samples.)

So to enter the table you need three things: some statistic (a single number) that compares your two samples (in this exercise you will use one ratio and one difference); the sample size, or more precisely the degrees of freedom (in our case df = n - 1); and the level of probability that will make you happy. What does this happiness depend on? Think back to the ideal population and the thousands of samples (or pairs of samples, each with its comparative statistic). As you saw, most sample statistics will be similar (so the ratio will be close to 1 if you are looking at ratios, or the difference will be close to zero if you are looking at differences), but in a few cases the numbers will be pretty big. In 1000 pairs of samples from the same population there will be a few with a really big difference just by chance. With 1000 sample pairs you can count how many exceed some cutoff value; the proportion that does is what you will come to call the significance level (though significant to whom or what is never really clear). You might find, for example, that only 100 of the 1000 pairs have a difference (or ratio) greater than some value. Then you can say: “In only 10% (100 out of 1000) of pairs of samples drawn from the same population will the difference (or ratio) be bigger than this.” Of course you can do this for 5%, 1%, 0.01%, or whatever. (Don’t worry about the poor table-maker; all this is done by computer algorithms today.)
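The table-maker’s procedure can be imitated directly. The Python sketch below is an illustration under simple assumptions: the ideal population is taken to be normal with mean 0 and standard deviation 1, the statistic is the absolute difference between two sample means, and the function name and settings are hypothetical. It draws many pairs of samples of size n from that one population and reports the cutoff that only about 5% of pairs exceed by chance. Published tables rest on exact mathematical results rather than on a simulation, but the idea is the same.

```python
import random


def null_cutoff(sample_size, n_pairs=5000, level=0.95, seed=1):
    """Imitate the table-maker: draw n_pairs pairs of samples from ONE normal
    population (mean 0, SD 1) and return the difference between sample means
    that only a fraction (1 - level) of the pairs exceed by chance alone."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_pairs):
        a = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
        b = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
        diffs.append(abs(sum(a) / sample_size - sum(b) / sample_size))
    diffs.sort()
    return diffs[int(level * n_pairs)]


for n in (5, 10, 30):
    print(f"n = {n:2d}: only about 5% of same-population pairs differ by more than {null_cutoff(n):.2f}")
```

The cutoff shrinks as n grows (for this ideal population it is close to 1.96 × √(2/n)), which is why the tables are organized by sample size, or degrees of freedom.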


So the end result of all this is that you can decide on some chance of being wrong that you are willing to accept, and then set that value as your level for rejecting the null hypothesis (that there is really no difference). The mistake this level controls is concluding that the difference (or ratio) you found is real when it is actually due to chance alone; for your edification, this rejection of a true null hypothesis is called a type I error. In this exercise you will perform two statistical tests to measure the difference between the population estimates obtained from two samples. These will allow you to state objectively (with a certain degree of confidence, say 95% sure) whether you think your two samples are from one population or two.

The first test indicates whether the amounts of variability in your two samples (as measured by the standard deviation, or actually the variance) are similar. If they are not (i.e., there is a different amount of variability in the two samples, so the shapes of the two distributions differ), then you are reasonably sure that you have samples from two populations (Why?) and need not carry out the second part of the test.

The sample standard deviations are compared by (arbitrarily) assigning the larger standard deviation to s1 and the smaller to s2. The F value (which is therefore always equal to or greater than 1.0) is calculated as shown below. Note that here you are actually using the variance, which is defined as the standard deviation squared:

$$F = \frac{s_1^2}{s_2^2}$$

The next thing you need to know is the degrees of freedom for each sample. These are calculated as

$$df_1 = n_1 - 1$$

$$df_2 = n_2 - 1$$

where df1 belongs to the sample with the larger variance (s1) and df2 to the sample with the smaller variance. You use these values (which are really correction factors that compensate for the fact that you are using samples rather than the whole population) in Table 2 to obtain the listed F value.

Remember that if the two variances are equal, the ratio is 1. If they are very similar, the ratio is not very far from 1. If the F value (the ratio of the two variances) that you calculated is greater than the value listed in Table 2, you are 95% confident that the two samples are from different populations (if you use the 95% table, of course). If this is found to be the case with your comparison, you need not make any further tests and can state that you are 95% confident your samples came from two different populations. Think about what this means: if your samples have normal distributions and their standard deviations are so different that you get a large F value (for your sample sizes), you may state, with a one in twenty chance of being wrong, that the two samples come from different populations. If the 95% confidence level is not high enough for you, there are F tables available for 99% and even 99.9% confidence!
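The F-test steps can be sketched in Python as follows. This is a sketch under stated assumptions: the function names are hypothetical, the variances come from the standard-library `statistics.variance` (which divides by n - 1, as in the formula for s), and the critical value is not computed here; you still look it up in Table 2 (or an online F table) using df1 and df2 and pass it in. The sample values are made-up numbers for illustration only.

```python
import statistics


def f_ratio(sample_a, sample_b):
    """Return (F, df1, df2) with the larger variance on top, so F >= 1.

    df1 belongs to the sample with the larger variance, df2 to the other.
    """
    var_a = statistics.variance(sample_a)
    var_b = statistics.variance(sample_b)
    if var_a >= var_b:
        return var_a / var_b, len(sample_a) - 1, len(sample_b) - 1
    return var_b / var_a, len(sample_b) - 1, len(sample_a) - 1


def variances_differ(f_value, f_critical):
    """True if the calculated F exceeds the tabled critical F."""
    return f_value > f_critical


bag_a = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical pod lengths
bag_b = [4, 5, 5, 6]
F, df1, df2 = f_ratio(bag_a, bag_b)
print(f"F = {F:.2f} with df1 = {df1}, df2 = {df2}")
# Look up the critical F for (df1, df2) in your table, then call
# variances_differ(F, critical_value_from_your_table).
```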

A calculated F value that is less than the value taken from the F table indicates that there is no evidence, based on the shapes of the distributions, that the samples are from different populations. Note: a small F value does NOT mean the samples ARE from the same population, only that the shapes of the frequency distributions of the samples do not permit you to feel confident that they are different. Look carefully at the F table, noting how the values change with sample size and with the selected confidence level. Tables of this statistic can be found in statistics books or online; the site http://www.stat.lsu.edu/EXSTWeb/statlab/Tables/TABLES98-F-pg2.html gives an easy-to-use version. Sometimes the available tables do not list the degrees of freedom that you want. If you look at the values in the table, you can tell right away whether using a df that is close to yours will make a big difference or not. If you do this, choose the more conservative value (the larger critical value, which is the one listed for the smaller df). If you want a better approximation, you can interpolate (http://www.acted.co.uk/forums/showthread.php?t=657).
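Both options can be sketched in Python. The function names are hypothetical, and the two tabled values in the example (two-tailed 5% critical t values of 2.086 for df = 20 and 2.042 for df = 30, as printed in common t tables) stand in for whatever entries bracket your df in the table you are using; the same logic applies to F tables. Straight-line interpolation between entries is the simplest choice; some texts recommend interpolating on 1/df instead, which is usually a little more accurate.

```python
def conservative_value(table, df):
    """Critical value for the largest listed df that does not exceed your df.

    Critical values shrink as df grows, so this errs on the side of a larger,
    harder-to-exceed cutoff.
    """
    listed = [d for d in table if d <= df]
    if not listed:
        raise ValueError("your df is smaller than every df in the table")
    return table[max(listed)]


def interpolated_value(table, df):
    """Straight-line interpolation between the two listed df values that bracket df."""
    if df in table:
        return table[df]
    lower = max(d for d in table if d < df)
    upper = min(d for d in table if d > df)
    fraction = (df - lower) / (upper - lower)
    return table[lower] + fraction * (table[upper] - table[lower])


t_table_5pct = {20: 2.086, 30: 2.042}  # excerpt; check against your own table
print(conservative_value(t_table_5pct, 24))            # 2.086
print(round(interpolated_value(t_table_5pct, 24), 4))  # 2.0684
```

For df = 24 the exact value is about 2.064, so here the interpolated value is within about 0.005 of it, while the conservative value is somewhat larger, as intended.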

When the F-test does not suggest that the two samples are from different populations, you then test the difference between the means. To test whether two sample means are estimates of the same population mean, you perform a t-test. The calculation is

$$t = \frac{\bar{Y}_1 - \bar{Y}_2}{s_p \sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}}$$

where $\bar{Y}_1$ is the mean of sample 1 (ditto for sample 2), $n_1$ is the size of sample 1 (ditto for sample 2), and $s_p$ (the pooled standard deviation) is

$$s_p = \sqrt{\frac{df_1 \, s_1^2 + df_2 \, s_2^2}{df_1 + df_2}}$$

where $s_1^2$ is the square of the standard deviation of sample 1 (ditto for sample 2) and is referred to as the variance of the values.
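The t calculation translates line for line into Python. In this sketch the function name is hypothetical, `statistics.variance` supplies each s² (dividing by n - 1), and the example numbers are toy values; as with the F-test, you still compare the result with a critical value from the t table for df1 + df2 degrees of freedom.

```python
import math
import statistics


def pooled_t(sample_1, sample_2):
    """Return (t, df) for the pooled-variance two-sample t-test described above."""
    n1, n2 = len(sample_1), len(sample_2)
    df1, df2 = n1 - 1, n2 - 1
    s1_sq = statistics.variance(sample_1)  # s1 squared
    s2_sq = statistics.variance(sample_2)  # s2 squared
    s_p = math.sqrt((df1 * s1_sq + df2 * s2_sq) / (df1 + df2))
    difference = statistics.mean(sample_1) - statistics.mean(sample_2)
    t = difference / (s_p * math.sqrt(1 / n1 + 1 / n2))
    return t, df1 + df2


t, df = pooled_t([1, 2, 3], [4, 5, 6])  # toy numbers
print(f"t = {t:.3f} with {df} degrees of freedom")
# Compare abs(t) with the tabled critical value for df degrees of freedom.
```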

Note that the t value is essentially the difference between your two sample means, scaled by the variability in the samples and the sample sizes. Look in the t table under the degrees of freedom given by df1 + df2. If the t value that you calculated (ignoring its sign, since either mean could be the larger) is greater than the value listed in Table 1 (i.e., there is a big difference between the two means), you can be 95% confident (assuming you are looking in the 95% section) that the means of the two samples are significantly different. If your calculated t value is smaller than the value from the t table, you have no evidence that the samples come from different populations. Tables of critical values of the t statistic can also be found easily online, e.g., http://davidmlane.com/hyperstat/t_table.html.

You could still be wrong. If your two samples really did come from the same population, then at the 95% confidence level you will wrongly conclude that they differ about 1 time out of every 20 tries, on average. This is called a type I error: you incorrectly reject the null hypothesis (of no difference) when in fact the two samples did come from the same population. A type II error is the opposite: you accept the null hypothesis when in fact the samples come from different populations. How will selection of a different confidence level affect the chances of a type I or a type II error? THINK about this.
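You can watch the 1-in-20 rate appear in a simulation. This Python sketch assumes both samples are drawn from the same normal population (an arbitrary mean of 15 and SD of 2, so every “significant” result is a type I error), uses samples of 10 (df = 18), and takes 2.101 as the two-tailed 95% critical t for 18 df from a standard t table; the function names, seed, and number of trials are arbitrary choices. It does not settle the THINK question for you, but rerunning it with the 99% critical value for 18 df (2.878) shows one side of the trade-off.

```python
import math
import random

T_CRITICAL_DF18 = 2.101  # two-tailed 5% critical t for df = 18 (standard t table)


def mean_and_var(xs):
    """Sample mean and variance (dividing by n - 1)."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)


def pooled_t_stat(a, b):
    """Pooled-variance t statistic for two samples."""
    (m1, v1), (m2, v2) = mean_and_var(a), mean_and_var(b)
    df1, df2 = len(a) - 1, len(b) - 1
    s_p = math.sqrt((df1 * v1 + df2 * v2) / (df1 + df2))
    return (m1 - m2) / (s_p * math.sqrt(1 / len(a) + 1 / len(b)))


def type_one_error_rate(t_critical, n=10, trials=4000, seed=7):
    """Fraction of same-population sample pairs that the t-test calls 'different'."""
    rng = random.Random(seed)
    false_alarms = 0
    for _ in range(trials):
        a = [rng.gauss(15.0, 2.0) for _ in range(n)]
        b = [rng.gauss(15.0, 2.0) for _ in range(n)]  # same population as a
        if abs(pooled_t_stat(a, b)) > t_critical:
            false_alarms += 1
    return false_alarms / trials


print(f"type I error rate: {type_one_error_rate(T_CRITICAL_DF18):.3f}")
```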


VI.

The chi-square test

If you are working with counts (discrete measures) rather than continuous measurements (e.g., length, weight, etc.), you can use the Chi-square test to test for deviations from some EXPECTED result. For example, modern genetic theory tells us that the sex ratio of most animal populations should be 50% males and 50% females. In a sample of finite size (such as the students in this class), a departure from this 50:50 ratio is not surprising. If the sample is big enough and the departure from 50:50 is great enough, then you might suspect that something is skewing the sex ratio in the population you sampled. A significant departure from this 50:50 ratio (your expected result) indicates that you need to look into the reasons behind such a large deviation from the expected result. Possible explanations for a differing sex ratio include higher mortality in one sex, sex reversal, or an anomalous genetic system.
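The Chi-square statistic sums (observed - expected)² / expected over all categories. The Python sketch below (function name hypothetical) builds the expected counts from the expected proportions, here 50:50, and returns the statistic together with its degrees of freedom (number of categories minus 1). The class counts in the example are invented purely for illustration; 3.84 is the standard tabled 5% critical value for 1 df.

```python
def chi_square(observed, expected_proportions):
    """Chi-square goodness-of-fit statistic and its degrees of freedom.

    observed: counts in each category, e.g. [females, males]
    expected_proportions: the expected share of each category, summing to 1
    """
    total = sum(observed)
    expected = [p * total for p in expected_proportions]
    statistic = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return statistic, len(observed) - 1


# Invented example: a class of 30 with 18 women and 12 men, expected 50:50
stat, df = chi_square([18, 12], [0.5, 0.5])
print(f"chi-square = {stat:.2f}, df = {df}")  # chi-square = 1.20, df = 1
print("significant at 5%?", stat > 3.84)      # False
```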

VII.

Assignments

There will be three bags of seedpods, labeled A, B, C. Two bags will contain seedpods from the same population (a single tree in this case), and the other will have pods from a different population (tree).

1. Divide up into groups of two to three persons each.

2. Take one sample from each of the three bags.

There are two questions you must ask before you begin (these two questions will be central to the rest of your semester’s work in this course!):

a) How big a sample will you need to take to have some assurance that your data will be sufficient? You have seen how the sample size (actually the degrees of freedom) affects the outcome of your statistical tests, so how big a sample will you need in order to test your data?

b) How will you obtain your sample? That is, assuming you will not use all the seedpods in the bag, how will you choose which seedpods you will measure?

Do not begin sampling or measuring until you have worked out your sample size and your sampling technique!!

3. Measure the length of each pod and also record the number of seeds per pod, for each of the three samples.

4. Plot the frequency histogram for each sample.

5. Compare the three samples with each other, pair by pair, using the F-test.

6. Where appropriate do the t-test.

Which of the three samples is from a different population? How do you know? Are there any biological clues that also aid in your decision?

7. You may be given a data set on which you can try out the Chi-square test. If so, calculate χ² values for that data set.

8. From the current ecological literature, obtain data suitable for χ² analysis where the author hasn’t done such an analysis. Perform the analysis and discuss your results.

9. Hirth (Ecological Monographs 33:83-112, 1963) studied two species of lizards inhabiting a beach in Costa Rica. Each month for four months he captured and sexed all the juveniles and adults of each species. The data for one species are listed below.

The procedure for using the Chi-square test for the June adult sample is as follows:

$$\chi^2 = \sum \frac{(\text{observed} - \text{expected})^2}{\text{expected}}$$

The expected value for both males and females is

$$\frac{38 + 58}{2} = 48$$

Therefore

$$\chi^2 = \frac{(38 - 48)^2}{48} + \frac{(58 - 48)^2}{48} = 4.17$$

Compare this value with the one given in a chi-square table (e.g., http://people.richland.edu/james/lecture/m170/tbl-chi.html) for 1 degree of freedom. The value 4.17 is larger than 3.84, so you can be 95% confident that the June sample deviates significantly from the expected 50:50 sex ratio. (The degrees of freedom for the χ² test are calculated as the number of groups minus 1. You had two groups, male and female, so your df is 2 - 1 = 1.)
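The June arithmetic can be checked with a few lines of Python. The counts 38 and 58 and the critical value 3.84 come from the text above; the variable names are our own. The same lines can be reused for each month and for the totals once you substitute the other counts from the data for this exercise.

```python
observed = [38, 58]                # June adult counts from the text
expected_each = sum(observed) / 2  # 50:50 expectation: 48 per sex
chi_sq = sum((o - expected_each) ** 2 / expected_each for o in observed)
df = len(observed) - 1             # two groups, so 1 degree of freedom
critical_5pct = 3.84               # chi-square table, df = 1, 5% level

print(f"chi-square = {chi_sq:.2f} with df = {df}")                     # chi-square = 4.17 with df = 1
print("deviates from 50:50 at the 5% level:", chi_sq > critical_5pct)  # True
```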

Calculate the χ² value for each of the months and also for the total, for the juvenile and adult data separately. Which are significantly different? Does the statistical test tell you why they are different? Another example of the use of the Chi-square test is shown in Krebs on pages 375-379.
