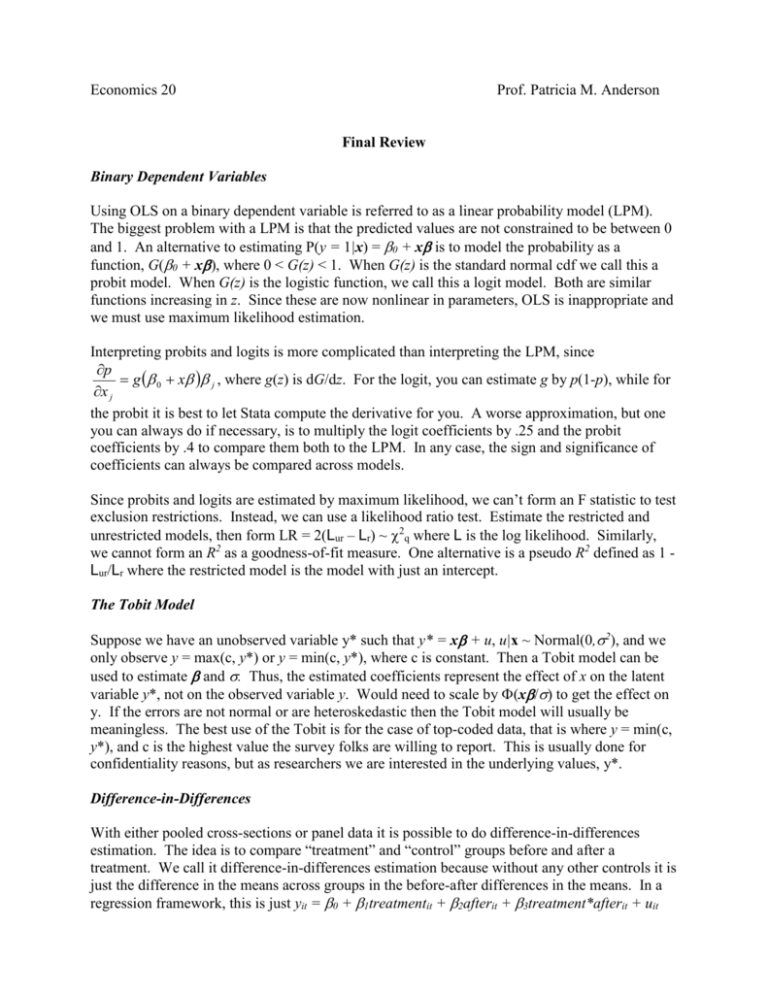

Economics 20, Prof. Patricia M. Anderson: Final Review

Binary Dependent Variables

Using OLS on a binary dependent variable is referred to as a linear probability model (LPM). The biggest problem with an LPM is that the predicted values are not constrained to be between 0 and 1. An alternative to estimating $P(y = 1|x) = \beta_0 + x\beta$ is to model the probability as a function $G(\beta_0 + x\beta)$, where $0 < G(z) < 1$. When $G(z)$ is the standard normal cdf, we call this a probit model. When $G(z)$ is the logistic function, we call this a logit model. Both are similar functions, increasing in $z$. Since these models are nonlinear in parameters, OLS is inappropriate and we must use maximum likelihood estimation.

Interpreting probits and logits is more complicated than interpreting the LPM, since $\partial p/\partial x_j = g(\beta_0 + x\beta)\beta_j$, where $g(z) = dG/dz$. For the logit, you can estimate $g$ by $p(1-p)$, while for the probit it is best to let Stata compute the derivative for you. A cruder approximation, but one you can always do if necessary, is to multiply the logit coefficients by .25 and the probit coefficients by .4 (the values of $g$ at $z = 0$) to compare them both to the LPM. In any case, the sign and significance of coefficients can always be compared across models.

Since probits and logits are estimated by maximum likelihood, we can't form an F statistic to test exclusion restrictions. Instead, we can use a likelihood ratio test. Estimate the restricted and unrestricted models, then form $LR = 2(L_{ur} - L_r) \sim \chi^2_q$, where $L$ is the log likelihood and $q$ is the number of restrictions. Similarly, we cannot form an $R^2$ as a goodness-of-fit measure. One alternative is a pseudo $R^2$ defined as $1 - L_{ur}/L_r$, where the restricted model is the model with just an intercept.

The Tobit Model

Suppose we have an unobserved variable $y^*$ such that $y^* = x\beta + u$, $u|x \sim \text{Normal}(0, \sigma^2)$, and we only observe $y = \max(c, y^*)$ or $y = \min(c, y^*)$, where $c$ is constant. Then a Tobit model can be used to estimate $\beta$ and $\sigma$. Note that the estimated coefficients represent the effect of x on the latent variable $y^*$, not on the observed variable $y$.
To get the effect on the observed $y$, the Tobit coefficients would need to be scaled by $\Phi(x\beta/\sigma)$ (for censoring at zero). If the errors are not normal or are heteroskedastic, then the Tobit estimates are generally inconsistent and so usually meaningless. The best use of the Tobit is for the case of top-coded data, that is, where $y = \min(c, y^*)$ and $c$ is the highest value the survey folks are willing to report. This is usually done for confidentiality reasons, but as researchers we are interested in the underlying values, $y^*$.

Difference-in-Differences

With either pooled cross-sections or panel data it is possible to do difference-in-differences estimation. The idea is to compare "treatment" and "control" groups before and after a treatment. We call it difference-in-differences estimation because, without any other controls, it is just the difference across groups in the before-after differences in the means. In a regression framework, this is just

$y_{it} = \beta_0 + \beta_1 treatment_{it} + \beta_2 after_{it} + \beta_3 treatment_{it} \cdot after_{it} + u_{it}$,

where $\beta_3$ is the difference-in-differences in the group means. Why? Think about which dummy variables will be 1 and which will be 0 for each group. This implies the following means for each group, along with the differences and the difference-in-differences (bottom right corner):

| | Before | After | Difference |
|---|---|---|---|
| Treatment | $\beta_0 + \beta_1$ | $\beta_0 + \beta_1 + \beta_2 + \beta_3$ | $\beta_2 + \beta_3$ |
| Control | $\beta_0$ | $\beta_0 + \beta_2$ | $\beta_2$ |
| Difference | $\beta_1$ | $\beta_1 + \beta_3$ | $\beta_3$ |

This method can be expanded to triple differences, the idea being that you have an entire "control experiment" to difference from this experiment. In this case you need to add a dummy for being in the true experiment, interacted with all of the above. The triple difference is then the coefficient on the interaction treatment*after*true experiment.

In all cases, additional x variables can be included in the regression to control for differences across groups. Only if there is true random assignment to groups will it be unnecessary to control for other x's.
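The difference-in-differences calculation can be sketched in a few lines of Python. This is only an illustration: the outcome values and the helper name `diff_in_diff` are hypothetical, and with no other controls the regression coefficients are simply recovered from the four cell means.

```python
from statistics import mean

# Hypothetical outcomes, keyed by the (treatment, after) dummies.
data = {
    (0, 0): [10.0, 12.0],  # control group, before
    (0, 1): [13.0, 15.0],  # control group, after
    (1, 0): [20.0, 22.0],  # treatment group, before
    (1, 1): [27.0, 29.0],  # treatment group, after
}

def diff_in_diff(cells):
    """Recover (b0, b1, b2, b3) of the dummy-variable regression from cell means."""
    m = {key: mean(values) for key, values in cells.items()}
    b0 = m[(0, 0)]                      # control mean, before
    b1 = m[(1, 0)] - m[(0, 0)]          # group difference, before
    b2 = m[(0, 1)] - m[(0, 0)]          # before-after change for the control group
    # Change for the treatment group minus change for the control group.
    b3 = (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])
    return b0, b1, b2, b3

b0, b1, b2, b3 = diff_in_diff(data)
print("difference-in-differences:", b3)
print("treatment mean after:", b0 + b1 + b2 + b3)
```

Here the treatment group changes by 7 and the control group by 3, so the difference-in-differences is 4, and the four coefficients add back up to the treatment group's after-period mean.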
Unobserved Fixed Effects

With true panel data the time dimension is achieved by following the same units over time, as opposed to pooled cross sections, which use a new random sample in each period. True panel data allows us to address the issue of unobserved fixed effects. Consider a model with the error term $v_{it} = a_i + u_{it}$, which is a composite of the usual error term $u_{it}$ and a time-constant component $a_i$. If this unobserved fixed effect, $a_i$, is correlated with the x's, then OLS suffers from omitted variable bias. If this omitted variable is truly fixed over time, then we can use panel data to difference it out and obtain consistent estimates.

First-differences estimation involves differencing adjacent periods and using OLS on the differenced data. Fixed effects estimation uses time-demeaning: for each individual, we subtract the mean over time from the value in each period. This method can be thought of as including a separate intercept for each individual. When there are just 2 periods, first-differences and fixed effects will result in the same estimated coefficients, but if T > 2 the estimates will differ. Both methods are consistent.

If $a_i$ is not correlated with the x's, then OLS is unbiased. However, the composite error terms will be serially correlated, implying that the usual standard errors are wrong. The random effects estimator is a feasible GLS method for obtaining the correct standard errors. Essentially it involves quasi-demeaning, so that you end up with a sort of weighted average of OLS and fixed effects. A Hausman test can be used to test whether fixed effects and random effects estimates are different. If they are, then we must reject the null that $a_i$ is not correlated with the x's.

An alternative to random effects is to simply adjust the standard errors to take into account alternative forms of serial correlation (and heteroskedasticity). That is, we allow for the fact that the observations are clustered, and thus may have correlated errors within the cluster.
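As a rough illustration of the two-period case, the sketch below builds a tiny made-up panel in which the fixed effect is correlated with x, then compares pooled OLS, first differences, and fixed effects. All numbers and helper names are hypothetical, and for simplicity the differenced and demeaned regressions are run without an intercept (that is, with no period dummy).

```python
# Hypothetical two-period panel: y_it = 2*x_it + a_i + u_it,
# where the fixed effect a_i is positively correlated with x.
fixed = [1.0, 2.0, 3.0, 4.0]       # a_i, unobserved in practice
x1 = [1.0, 2.0, 3.0, 4.0]          # regressor in period 1
x2 = [2.0, 4.0, 4.0, 7.0]          # regressor in period 2
u1 = [0.1, -0.1, 0.05, -0.05]      # idiosyncratic errors, period 1
u2 = [-0.1, 0.1, -0.05, 0.05]      # idiosyncratic errors, period 2
y1 = [2 * x + a + u for x, a, u in zip(x1, fixed, u1)]
y2 = [2 * x + a + u for x, a, u in zip(x2, fixed, u2)]

def slope_no_intercept(x, y):
    """OLS slope for a regression through the origin."""
    return sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)

def slope_with_intercept(x, y):
    """OLS slope for a simple regression that includes an intercept."""
    xbar, ybar = sum(x) / len(x), sum(y) / len(y)
    return slope_no_intercept([xi - xbar for xi in x], [yi - ybar for yi in y])

# Pooled OLS ignores a_i, so it picks up the correlation between a_i and x.
pooled = slope_with_intercept(x1 + x2, y1 + y2)

# First differences: a_i drops out of y_i2 - y_i1.
dx = [later - earlier for earlier, later in zip(x1, x2)]
dy = [later - earlier for earlier, later in zip(y1, y2)]
fd = slope_no_intercept(dx, dy)

# Fixed effects: subtract each unit's mean over time, then pool the demeaned data.
x_dm = [v - (p + q) / 2 for p, q in zip(x1, x2) for v in (p, q)]
y_dm = [v - (p + q) / 2 for p, q in zip(y1, y2) for v in (p, q)]
fe = slope_no_intercept(x_dm, y_dm)

print("pooled OLS:", round(pooled, 4))
print("first differences:", round(fd, 4))
print("fixed effects:", round(fe, 4))
```

In this made-up data the true slope is 2. First differences and fixed effects give the same estimate, close to 2, while pooled OLS is pushed upward by the omitted fixed effect.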
Instrumental Variables

Whenever x is endogenous (because of omitted variables, measurement error, etc.), OLS is biased. In this case, instrumental variables (IV) estimation can be used to obtain consistent estimates. A valid instrument must be strongly correlated with x, but be completely uncorrelated with the error term.

IV is also referred to as two-stage least squares (2SLS) because the IV estimates can be obtained in the following manner. First, regress x on the instrument and all of the other exogenous x's from the model and obtain the predicted values. This first stage regression also allows you to test whether your instrument is correlated with x: it must be significant in this regression. Now run the original model, substituting the predicted value of x for x. While this method gives exactly the same coefficients as IV, the standard errors are incorrect, because the second-stage residuals are computed using the predicted x rather than the actual x, so it is preferable not to do IV by hand. The method can be extended to multiple endogenous variables, but it is necessary to have at least one instrument for each endogenous variable.

Testing for Endogeneity and Overidentifying Restrictions

A version of a Hausman test can be used to test whether x is really endogenous. The idea is that OLS and IV are both consistent if x is not endogenous, so the results can be compared. To do this test, save the residuals from the first stage regression, and include them in the original structural model (leave the potentially endogenous x in). If the coefficient on this residual is significantly different from zero, you can reject the null that x is exogenous. Note that the coefficients on the other variables will be identical to the IV coefficients, so this is just another way to think of 2SLS. Hence, if the coefficient on the residual is zero, IV and OLS are the same.
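The two-stage procedure can be illustrated with a small simulation. This is a minimal sketch under assumed values: the data-generating process, the true slope of 2, and the helper names are all hypothetical, and there is one endogenous x, one instrument z, and no other exogenous variables. It computes coefficients only, since standard errors from a by-hand second stage should not be trusted.

```python
import random

random.seed(0)
n = 5000

# Hypothetical data: z is the instrument, v makes x endogenous.
z = [random.gauss(0, 1) for _ in range(n)]
v = [random.gauss(0, 1) for _ in range(n)]
e = [random.gauss(0, 1) for _ in range(n)]
x = [zi + vi for zi, vi in zip(z, v)]          # x is correlated with z and with v
u = [0.8 * vi + ei for vi, ei in zip(v, e)]    # error shares v with x
y = [1 + 2 * xi + ui for xi, ui in zip(x, u)]  # true slope is 2

def mean(a):
    return sum(a) / len(a)

def cov(a, b):
    """Sample covariance (the common divisor cancels in every ratio below)."""
    abar, bbar = mean(a), mean(b)
    return sum((ai - abar) * (bi - bbar) for ai, bi in zip(a, b)) / len(a)

def ols(xv, yv):
    """Simple regression of yv on xv; returns (intercept, slope)."""
    slope = cov(xv, yv) / cov(xv, xv)
    return mean(yv) - slope * mean(xv), slope

# OLS is inconsistent here because x is correlated with u.
_, b_ols = ols(x, y)

# Stage 1: regress x on z and form the predicted values.
g0, g1 = ols(z, x)
x_hat = [g0 + g1 * zi for zi in z]

# Stage 2: regress y on the predicted x.
_, b_2sls = ols(x_hat, y)

# The IV estimator written directly as a ratio of covariances.
b_iv = cov(z, y) / cov(z, x)

print("OLS:", round(b_ols, 3))
print("2SLS by hand:", round(b_2sls, 3))
print("IV ratio:", round(b_iv, 3))
```

The by-hand 2SLS slope and the covariance-ratio IV slope agree up to rounding error, and both are near the assumed true value, while the OLS slope is biased upward.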
While we can use the first stage regression to test if our instrument is correlated with x, and we can use the Hausman test to see if x is truly endogenous, in general we cannot test whether our instrument is uncorrelated with the error. However, if we have more than one instrument, we say the model is overidentified and we can test whether some of the instruments are correlated with the error. To do this, we use IV to estimate the structural model, saving the residuals. Then we regress the residuals on all of the exogenous variables. The LM statistic is $nR^2 \sim \chi^2_q$, where $q$ is the number of extra instruments.

Don't confuse this overidentifying test with the special form of the Breusch-Pagan test for IV models. When testing for heteroskedasticity after IV, you need to regress the squared residuals on all of the exogenous variables. Similarly, testing for serial correlation after IV is a bit different. You need to save the residuals from the IV estimation and then use IV again on the model with the lagged residual included. If you have serial correlation and plan to quasi-difference to fix it, you need to use IV on the quasi-differenced model, where the instrument is also quasi-differenced.

Simultaneous Equations

Simultaneous equations models (SEM) are really just another reason why x might be endogenous, requiring the use of IV. However, it can be a bit complicated to think about identification when you have SEM. Suppose you have the following structural equations: $y_1 = \alpha_1 y_2 + \beta_1 z_1 + u_1$ and $y_2 = \alpha_2 y_1 + \beta_2 z_2 + u_2$, where the z's are exogenous. The reduced form equations express the endogenous variables in terms of all of the exogenous variables and are $y_1 = \pi_{11} z_1 + \pi_{12} z_2 + v_1$ and $y_2 = \pi_{21} z_1 + \pi_{22} z_2 + v_2$, where $v_1$ and $v_2$ are the reduced form errors. While we can always estimate these reduced form equations, we can only estimate a structural equation if it is identified. To identify the first equation, there must be variables in $z_2$ that are not in $z_1$.
Similarly, to identify the second equation of a simultaneous equations system, there must be variables in $z_1$ that are not in $z_2$. Estimation of an identified equation is by IV, where all of the exogenous variables in the system are the instruments.

Sample Selection Correction

If a sample is truncated in a nonrandom way, then OLS suffers from selection bias. It's as if there is an omitted variable for how the observation was selected into the sample. Consistent estimates can be obtained by including $\lambda$, the inverse Mills ratio, as a sample selection correction term. After estimating a probit of whether y is observed on variables z, these estimates are used to form $\hat{\lambda}$. Then you can regress y on x and $\hat{\lambda}$ to get consistent estimates. For this to be identified, x must be a subset of z. This is typically referred to as a Heckman selection correction model, or sometimes a Heckit.

Unbiasedness of OLS for Time Series Data

Time series data has a temporal ordering, so we no longer have just a random sample. Since it is not a random sample, we need to change the assumptions for unbiasedness. We still need to assume that the model is linear in parameters, and that there is no perfect collinearity or constant x. Now the zero conditional mean assumption is stronger: $E(u_t|X) = 0$, $t = 1, 2, \ldots, n$. That is, the error term in any given period is uncorrelated with all of the x's in all time periods. In this case we say that the x's are strictly exogenous. With weakly dependent series, contemporaneous exogeneity will be sufficient, meaning that the error term in any given period is uncorrelated with all of the x's in that time period.

Variance of OLS for Time Series Data

Similarly, we need a stronger assumption of homoskedasticity: $Var(u_t|X) = Var(u_t) = \sigma^2$. This implies that the error variance is both independent of the x's and constant over time. We also need to assume that there is no serial correlation in the errors: $Corr(u_t, u_s|X) = 0$ for $t \neq s$. Under these Gauss-Markov assumptions for time series data, OLS is BLUE.
Finite Distributed Lag Models

Since time series data has a temporal ordering, we can consider using lags of x in our model. A finite distributed lag model of order q will include q lags of x. The coefficient on the contemporaneous x is referred to as the impact propensity and reflects the immediate change in y. The long-run propensity (LRP), which reflects the long-run change in y after a permanent change in x, is calculated as the sum of the coefficients on x and all its lags.

Trends and Seasonality

Since we are usually interested in a causal interpretation of the effect of x on y, with time series data it is often necessary to control for general trends and seasonality. If two unrelated series are both trending, we may falsely conclude that they are related, a case of spurious regression. To avoid this problem, we can include a trend term. Similarly, if we think there are seasonal effects, we can include season dummies. For monthly data these may be month dummies, for quarterly data they will likely be quarter dummies, etc.

We can obtain the same coefficients by first detrending and/or deseasonalizing the series. To do this, simply regress each series on the trend and/or season dummies, saving the residuals. The residuals are the detrended (and/or deseasonalized) series. While the coefficients will be the same, the $R^2$ will be much lower. This may be useful if what you really want to know is how much of y is being explained by just x, not the trend. The idea here is that we are interested in how the movements around the trend are related.

Serially Correlated Errors

Testing for AR(1) serial correlation in the errors is straightforward. We want to test the null that $\rho = 0$ in $u_t = \rho u_{t-1} + e_t$, $t = 2, \ldots, n$. We can use the residuals as estimates of the errors, so just regress the residuals on the lagged residuals. However, this test assumes that the x's are all strictly exogenous. An alternative, then, is to regress the residuals on the lagged residuals and all of the x's.
Regressing the residuals on the lagged residuals and all of the x's is equivalent to just adding the lagged residual to the original model. Higher order serial correlation can be tested for in a similar manner. Just include more lags of the residual and test for their joint significance. (The LM version of this exclusion restriction test is referred to as a Breusch-Godfrey test. That Breusch gets around!)

Correcting for AR(1) serial correlation involves quasi-differencing to transform the error term. For the model $y_t = \beta_0 + \beta_1 x_t + u_t$, if we multiply the equation for time $t-1$ by $\rho$ and subtract it from the equation for time $t$, we obtain $y_t - \rho y_{t-1} = (1 - \rho)\beta_0 + \beta_1(x_t - \rho x_{t-1}) + e_t$, since $e_t = u_t - \rho u_{t-1}$. This is a feasible GLS estimation method, since we must use an estimate of $\rho$ from regressing the residuals on the lagged residuals. Depending on how one treats the first observation, this feasible GLS estimation is referred to as Cochrane-Orcutt or Prais-Winsten estimation. Each of these can be implemented iteratively.

In addition to feasible GLS, it is also possible to just adjust the standard errors for arbitrary forms of serial correlation. This is similar to making standard errors robust to arbitrary forms of heteroskedasticity, rather than doing feasible GLS (i.e. WLS) for a known form of heteroskedasticity. In this case, we refer to the serial correlation robust standard errors as Newey-West standard errors.

Random Walks

An autoregressive process of order one, an AR(1), is characterized as one where $y_t = \rho y_{t-1} + e_t$, $t = 1, 2, \ldots$, with $e_t$ being an iid sequence with mean 0 and variance $\sigma_e^2$. For this process to be weakly dependent (and hence suitable for analysis and inference with OLS) it must be the case that $|\rho| < 1$. If $\rho = 1$, the series is not weakly dependent: the expected value of $y_t$ given $y_0$ is always $y_0$, no matter how large t is, so the effect of the initial value never dies out. We call this a random walk, and say that it is highly persistent. This is also referred to as a unit root process. It is possible for there to be a trend as well, which is referred to as a random walk with drift.
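One way to see the difference between a weakly dependent AR(1) and a random walk is to track the variance of $y_t$ given a fixed $y_0$, which follows the recursion $Var(y_t) = \rho^2 Var(y_{t-1}) + \sigma_e^2$. The sketch below is illustrative only: the function name `variance_path` and the parameter values are assumptions, not part of the course material.

```python
def variance_path(rho, sigma2, periods):
    """Var(y_t | y_0) for t = 1..periods under y_t = rho * y_{t-1} + e_t."""
    path = []
    var = 0.0  # y_0 is treated as fixed, so its variance is zero
    for _ in range(periods):
        var = rho ** 2 * var + sigma2
        path.append(var)
    return path

random_walk = variance_path(1.0, 1.0, 100)  # rho = 1: variance grows as t * sigma2
stable_ar1 = variance_path(0.5, 1.0, 100)   # |rho| < 1: variance settles down

print("random walk, t = 100:", random_walk[-1])
print("AR(1) with rho = 0.5, t = 100:", stable_ar1[-1])
print("limit sigma2 / (1 - rho^2):", 1.0 / (1 - 0.5 ** 2))
```

With $\rho = 1$ the variance keeps growing linearly in t, whereas with $|\rho| < 1$ it converges to $\sigma_e^2/(1 - \rho^2)$.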
We also refer to a highly persistent series as being integrated of order one, or I(1). To transform such a series into a weakly dependent process, referred to as being integrated of order zero, or I(0), we can first difference it.

To test for a unit root, that is, to see if we have a random walk, we need to do a Dickey-Fuller test. Regress $\Delta y_t$ on $y_{t-1}$ and use the special Dickey-Fuller critical values to determine if the t statistic on the lag is big enough to reject the null of a unit root. It is also possible to do an augmented Dickey-Fuller test, in which we add lags of $\Delta y_t$ in order to allow for more dynamics. We can also include a trend term, if we think we have a unit root with drift. When including a trend, we need a different set of special Dickey-Fuller critical values.

Cointegration

If both x and y follow a random walk, a regression of y on x will suffer from the spurious regression problem. That is, the t statistic on x will often be significant, even if there is no real relationship. However, it may still be possible to discover an interesting relationship between two I(1) processes. Suppose that there is a $\beta$ such that $y_t - \beta x_t$ is an I(0) process. Then we say that y and x are cointegrated, with cointegration parameter $\beta$.

If we know what $\beta$ is, say from theory, then we just calculate $s_t = y_t - \beta x_t$ and do a Dickey-Fuller test on s. If we reject a unit root, then y and x are cointegrated. Otherwise, we regress y on x and save the residuals. We then regress $\Delta\hat{u}_t$ on $\hat{u}_{t-1}$ and compare the t statistic on the lagged residual to the special critical values for the cointegration test. If there is a trend, it can be included in the original regression of y on x, and a different set of critical values is used.
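The residual-based steps for the case where $\beta$ is unknown can be sketched as follows. This is a rough illustration on simulated data: the data-generating process (x a random walk, y equal to 2x plus stationary noise), the seed, and the helper `ols` are all assumptions. The sketch stops at the t statistic, which would still have to be compared against the special cointegration critical values, and those are not reproduced here.

```python
import math
import random

random.seed(1)
n = 500

# Hypothetical cointegrated pair: x is a random walk, y = 2*x + I(0) noise.
x = []
level = 0.0
for _ in range(n):
    level += random.gauss(0, 1)
    x.append(level)
y = [2.0 * xi + random.gauss(0, 1) for xi in x]

def ols(xv, yv):
    """Simple regression with intercept: (intercept, slope, residuals, slope t stat)."""
    m = len(xv)
    xbar, ybar = sum(xv) / m, sum(yv) / m
    sxx = sum((xi - xbar) ** 2 for xi in xv)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(xv, yv)) / sxx
    intercept = ybar - slope * xbar
    resid = [yi - intercept - slope * xi for xi, yi in zip(xv, yv)]
    s2 = sum(r * r for r in resid) / (m - 2)   # estimated error variance
    t_stat = slope / math.sqrt(s2 / sxx)       # usual OLS t statistic
    return intercept, slope, resid, t_stat

# Step 1: regress y on x and save the residuals.
_, beta_hat, u_hat, _ = ols(x, y)

# Step 2: regress the change in the residual on the lagged residual.
du = [u_hat[t] - u_hat[t - 1] for t in range(1, n)]
u_lag = u_hat[:-1]
_, theta_hat, _, t_stat = ols(u_lag, du)

print("estimated cointegration parameter:", round(beta_hat, 3))
print("coefficient on lagged residual:", round(theta_hat, 3))
print("t statistic to compare with the special critical values:", round(t_stat, 2))
```

Because the simulated residuals are stationary by construction, the t statistic on the lagged residual comes out strongly negative, which is the pattern that leads to rejecting a unit root in the residuals.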