4 Regressions with two explanatory variables.

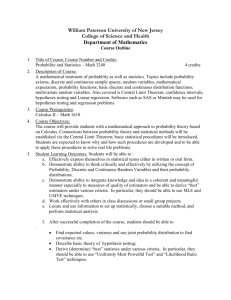

advertisement

4 Regressions with two explanatory variables.

This chapter contains the following sections:

(4.1) Introduction.

(4.2) The gross versus the partial effect of an explanatory variable.

(4.3) $R^2$ and adjusted $R^2$ ($\bar{R}^2$).

(4.4) Hypothesis testing in multiple regression.

4.1 Introduction

In the preceding sections we have studied in some detail regressions containing only one explanatory variable. In econometrics, with its multitude of dependencies, the simple regression can only be a showcase. As such it is important and powerful, since the methods applied to this case carry over to multiple regression with only small and obvious modifications. However, some important new problems arise, which we point out in this chapter.

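As usual we start with the linear form:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \varepsilon_i \quad (i = 1, 2, \ldots, N), \tag{4.1.1}$$

where we again assume that the explanatory variables $X_{i1}$ and $X_{i2}$ are deterministic and also that the random disturbances $\varepsilon_i$ have the standard properties:

$$E(\varepsilon_i) = 0 \tag{4.1.2}$$

$$\mathrm{Var}(\varepsilon_i) = \sigma^2 \tag{4.1.3}$$

$$\mathrm{Cov}(\varepsilon_i, \varepsilon_j) = 0, \quad i \neq j \tag{4.1.4}$$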

The coefficient $\beta_0$ is the intercept, and $\beta_1$ is the slope coefficient of $X_1$, showing the effect on $Y$ of a unit change in $X_1$, holding $X_2$ constant or controlling for $X_2$. Another phrase frequently used is that $\beta_1$ is the partial effect on $Y$ of $X_1$, holding $X_2$ fixed. The interpretation of $\beta_2$ is similar, except that $X_1$ and $X_2$ change roles.

Since the random disturbances are homoskedastic (i.e. they have the same variance), the scene is set for least squares regression. For arbitrary values $\hat\beta_0$, $\hat\beta_1$ and $\hat\beta_2$ of the structural parameters, the sum of squared residuals is given by:

$$Q(\hat\beta_0, \hat\beta_1, \hat\beta_2) = \sum_{i=1}^{N} e_i^2 = \sum_{i=1}^{N} \bigl(Y_i - (\hat\beta_0 + \hat\beta_1 X_{i1} + \hat\beta_2 X_{i2})\bigr)^2 \tag{4.1.5}$$

Minimizing $Q(\hat\beta_0, \hat\beta_1, \hat\beta_2)$ with respect to $\hat\beta_0$, $\hat\beta_1$ and $\hat\beta_2$ gives the OLS estimators of $\beta_0$, $\beta_1$ and $\beta_2$. This is a simple optimization problem, and we can state the OLS estimators directly:

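$$\hat\beta_0 = \bar{Y} - \hat\beta_1 \bar{X}_1 - \hat\beta_2 \bar{X}_2 \tag{4.1.6}$$

$$\hat\beta_1 = \frac{(\sum x_{i1} Y_i)(\sum x_{i2}^2) - (\sum x_{i2} Y_i)(\sum x_{i1} x_{i2})}{D} \tag{4.1.7}$$

$$\hat\beta_2 = \frac{(\sum x_{i2} Y_i)(\sum x_{i1}^2) - (\sum x_{i1} Y_i)(\sum x_{i1} x_{i2})}{D} \tag{4.1.8}$$

$$D = (\sum x_{i1}^2)(\sum x_{i2}^2) - (\sum x_{i1} x_{i2})^2 \tag{4.1.9}$$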

In these formulas we have used the common notation:

$$x_{i1} = X_{i1} - \bar{X}_1, \qquad x_{i2} = X_{i2} - \bar{X}_2 \tag{4.1.10}$$

We have also used the useful formulas:

$$\sum x_{i1} Y_i = \sum x_{i1} y_i \tag{4.1.11}$$

$$\sum x_{i2} Y_i = \sum x_{i2} y_i \tag{4.1.12}$$

where $y_i = Y_i - \bar{Y}$. You should check for yourself that these formulas hold.
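The closed-form estimators stated above can be tried out numerically. The following is a minimal sketch in plain Python, assuming a small made-up data set generated without noise from $Y = 1 + 2X_1 + 3X_2$; the function name `ols_two_regressors` and the data are illustrative assumptions, not part of the text.

```python
def ols_two_regressors(Y, X1, X2):
    """Return (b0, b1, b2) from the closed-form two-regressor OLS formulas."""
    n = len(Y)
    Ybar, X1bar, X2bar = sum(Y) / n, sum(X1) / n, sum(X2) / n
    # Deviations from the means (the lower-case x in the text).
    x1 = [v - X1bar for v in X1]
    x2 = [v - X2bar for v in X2]
    # Sums of squares and cross products.
    S11 = sum(a * a for a in x1)
    S22 = sum(b * b for b in x2)
    S12 = sum(a * b for a, b in zip(x1, x2))
    S01 = sum(a * y for a, y in zip(x1, Y))
    S02 = sum(b * y for b, y in zip(x2, Y))
    D = S11 * S22 - S12 ** 2
    if D == 0:
        raise ValueError("X1 and X2 are perfectly collinear (D = 0)")
    b1 = (S01 * S22 - S02 * S12) / D
    b2 = (S02 * S11 - S01 * S12) / D
    b0 = Ybar - b1 * X1bar - b2 * X2bar
    return b0, b1, b2


# Hypothetical noise-free data generated from Y = 1 + 2*X1 + 3*X2,
# so the estimates should recover (1, 2, 3) up to rounding error.
X1 = [1.0, 2.0, 3.0, 4.0, 5.0]
X2 = [2.0, 1.0, 4.0, 3.0, 6.0]
Y = [1 + 2 * a + 3 * b for a, b in zip(X1, X2)]
b0, b1, b2 = ols_two_regressors(Y, X1, X2)
print(b0, b1, b2)
```

Because the data contain no disturbance term, the residuals are zero and the formulas return the generating coefficients; with real data the estimates would of course differ from the true parameters.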

You should also check for yourself that the OLS estimators $\hat\beta_0$, $\hat\beta_1$ and $\hat\beta_2$ are unbiased, i.e.

$$E(\hat\beta_0) = \beta_0 \tag{4.1.13}$$

$$E(\hat\beta_1) = \beta_1 \tag{4.1.14}$$

$$E(\hat\beta_2) = \beta_2 \tag{4.1.15}$$

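The variances and covariances of these OLS estimators are readily deduced. Writing $S_{11} = \sum x_{i1}^2$, $S_{22} = \sum x_{i2}^2$ and $S_{12} = \sum x_{i1} x_{i2}$, so that $D = S_{11} S_{22} - S_{12}^2$, we have:

$$\mathrm{Var}(\hat\beta_0) = \frac{\sigma^2}{N} + \bar{X}_1^2 \mathrm{Var}(\hat\beta_1) + \bar{X}_2^2 \mathrm{Var}(\hat\beta_2) + 2 \bar{X}_1 \bar{X}_2 \mathrm{Cov}(\hat\beta_1, \hat\beta_2) \tag{4.1.16}$$

$$\mathrm{Var}(\hat\beta_1) = \frac{\sigma^2 S_{22}}{S_{11} S_{22} - S_{12}^2} \tag{4.1.17}$$

$$\mathrm{Var}(\hat\beta_2) = \frac{\sigma^2 S_{11}}{S_{11} S_{22} - S_{12}^2} \tag{4.1.18}$$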

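Using the sample correlation coefficient between $X_1$ and $X_2$:

$$r_{12} = \frac{S_{12}}{\sqrt{S_{11} S_{22}}}, \qquad -1 \le r_{12} \le 1, \tag{4.1.19}$$

the formulas for $\mathrm{Var}(\hat\beta_1)$ and $\mathrm{Var}(\hat\beta_2)$ can be rewritten:

$$\mathrm{Var}(\hat\beta_1) = \frac{\sigma^2}{S_{11}(1 - r_{12}^2)} \tag{4.1.20}$$

$$\mathrm{Var}(\hat\beta_2) = \frac{\sigma^2}{S_{22}(1 - r_{12}^2)} \tag{4.1.21}$$

Similar calculations show that:

$$\mathrm{Cov}(\hat\beta_1, \hat\beta_2) = \frac{-r_{12}^2 \sigma^2}{S_{12}(1 - r_{12}^2)} \tag{4.1.22}$$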
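To see how the correlation between the regressors drives these variances, the two equivalent forms (4.1.17) and (4.1.20) can be compared numerically. This is a sketch under assumed values: $S_{11}$, $S_{22}$ and $\sigma^2$ are hypothetical numbers held fixed while $S_{12}$ grows, and the covariance is computed as $-\sigma^2 S_{12}/D$, which is algebraically the same quantity as (4.1.22) but also defined when $S_{12} = 0$.

```python
import math


def slope_moments(S11, S22, S12, sigma2):
    """Var(b1), Var(b2), Cov(b1, b2) and r12 for the two-regressor model."""
    D = S11 * S22 - S12 ** 2
    r12 = S12 / math.sqrt(S11 * S22)
    var_b1 = sigma2 * S22 / D
    var_b2 = sigma2 * S11 / D
    cov_b12 = -sigma2 * S12 / D  # same quantity as (4.1.22), valid also for S12 = 0
    return var_b1, var_b2, cov_b12, r12


# Hypothetical values: S11, S22 and sigma2 are fixed while S12 (hence r12) grows.
S11, S22, sigma2 = 10.0, 14.8, 1.0
results = []
for S12 in (0.0, 5.0, 10.0):
    var_b1, var_b2, cov_b12, r12 = slope_moments(S11, S22, S12, sigma2)
    # Correlation-based form of Var(b1), as in (4.1.20).
    var_b1_alt = sigma2 / (S11 * (1 - r12 ** 2))
    results.append((r12, var_b1, var_b1_alt, cov_b12))
    print(f"r12={r12:.3f}  Var(b1)={var_b1:.4f}  alt={var_b1_alt:.4f}  Cov={cov_b12:.4f}")
```

The printed variances increase with $r_{12}$: the more strongly the two explanatory variables are correlated, the less precisely each slope can be estimated.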

Evidently, many results derived for the simple regression extend immediately to multiple regression. But some new points arise of which we have to be aware.

4.2 The gross versus the partial effect of an explanatory variable

To gain insight into this problem, it is enough to start with the multiple regression (4.1.1). Hence, we specify:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \varepsilon_i \quad (i = 1, 2, \ldots, N) \tag{4.2.1}$$

To facilitate the discussion we assume, for the time being, that the explanatory variables $X_{i1}$ and $X_{i2}$ are also random variables.

In this situation the above regression is by no means the only regression we might think of. For instance, we might also consider:

$$Y_i = \alpha_0 + \alpha_1 X_{i1} + u_{1i} \tag{4.2.2}$$

or

$$Y_i = \delta_0 + \delta_1 X_{i2} + u_{2i} \tag{4.2.3}$$

where $u_{1i}$ and $u_{2i}$ are the random disturbances in the regressions of $Y$ on $X_1$ and of $Y$ on $X_2$, respectively.

The coefficients on $X_1$ in the regressions (4.2.1) and (4.2.2), $\beta_1$ and $\alpha_1$, both show the impact of $X_1$ on $Y$, but obviously $\beta_1$ and $\alpha_1$ can be quite different. In (4.2.1) $\beta_1$ shows the effect of $X_1$ when we control for $X_2$. Hence, $\beta_1$ shows the partial or net effect of $X_1$ on $Y$. In contrast, $\alpha_1$ shows the effect of $X_1$ when we do not control for $X_2$. Intuitively, when $X_2$ is excluded from the regression, $\alpha_1$ will absorb some of the effect of $X_2$ on $Y$, since there will normally be a correlation between $X_1$ and $X_2$. Therefore, we call $\alpha_1$ the gross effect of $X_1$. Of course, similar arguments apply to the comparison of $\beta_2$ and $\delta_1$ in the regressions (4.2.1) and (4.2.3).

As econometricians we often wish to evaluate the effect of an explanatory variable on the dependent variable. Since the gross and partial impacts can be quite different, we have to be cautious and not too bombastic when we interpret the regression parameters. At the same time it is evident that this is a serious problem in any statistical application in the social sciences.

A comprehensive Danish investigation studied the relation between mortality and jogging. It compared the mortality of two groups, one consisting of regular joggers and the other of people who did not jog. The researchers found that the mortality rate in the group of regular joggers was considerably lower than in the group of non-joggers. This result may be reasonable and expected, but was the picture that simple? A closer study showed that the joggers were better educated, smoked less, and almost nobody in this group had weight problems. Could these factors help to explain the lower mortality rate for the joggers? A further study of this sample showed that although these factors had systematic influences on the mortality rate, the jogging activity still reduced the mortality rate.

In econometrics, or in the social sciences in general, similar considerations relate to almost any applied work. Therefore, we should like to shed some specific light on the relation between the gross and partial effects. Intuitively, we understand that the root of this problem is the correlation between the explanatory variables, in our model the correlation between $X_{i1}$ and $X_{i2}$. So when $X_{i2}$ is excluded from the regression, some of the influence of $X_{i2}$ on $Y_i$ is captured by $X_{i1}$.

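To be concrete, let us assume that

$$X_{i2} = \gamma_0 + \gamma_1 X_{i1} + v_i \tag{4.2.4}$$

where $v_i$ denotes the disturbance term in this regression. ((4.2.4) shows why it is convenient to assume that $X_1$ and $X_2$ are random variables in this illustration.)

Using (4.2.4) to substitute for $X_{i2}$ in (4.2.1) we obtain:

$$Y_i = (\beta_0 + \beta_2 \gamma_0) + (\beta_1 + \beta_2 \gamma_1) X_{i1} + (\varepsilon_i + \beta_2 v_i) \tag{4.2.5}$$

Comparing this equation with (4.2.2) we obtain:

$$u_{1i} = \varepsilon_i + \beta_2 v_i \tag{4.2.6}$$

$$\alpha_0 = \beta_0 + \beta_2 \gamma_0 \tag{4.2.7}$$

$$\alpha_1 = \beta_1 + \beta_2 \gamma_1 \tag{4.2.8}$$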

We observe immediately that if there is no linear relation between $X_{i1}$ and $X_{i2}$ (i.e. $\gamma_1 = 0$), then the gross effect $\alpha_1$ coincides with the partial (net) effect $\beta_1$, since in this case $\alpha_1 = \beta_1$ (see (4.2.8)).

The intercept term $\alpha_0$ will be a mixture of the intercepts $\beta_0$ and $\gamma_0$ and the partial effect of $X_{i2}$, namely $\beta_2$.

Equations (4.2.7)-(4.2.8) show the relations between the structural parameters. We still have to show that the OLS estimators satisfy these relations. They do! Let us verify this fact for $\alpha_1$ (see (4.2.8)). We know from (4.1.7) and (4.1.8) that:

$$\hat\beta_1 = \frac{S_{01} S_{22} - S_{02} S_{12}}{S_{11} S_{22} - S_{12}^2} \tag{4.2.9}$$

$$\hat\beta_2 = \frac{S_{02} S_{11} - S_{01} S_{12}}{S_{11} S_{22} - S_{12}^2} \tag{4.2.10}$$

where $S_{01} = \sum_{i=1}^{N} x_{i1} Y_i$ and $S_{02} = \sum_{i=1}^{N} x_{i2} Y_i$.

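From the results for the simple regression we know that:

$$\hat\alpha_0 = \bar{Y} - \hat\alpha_1 \bar{X}_1 \tag{4.2.11}$$

$$\hat\alpha_1 = \frac{S_{01}}{S_{11}} \tag{4.2.12}$$

where $\hat\alpha_0$ and $\hat\alpha_1$ are obtained by regressing $Y_i$ on $X_{i1}$. Similarly, by regressing $X_{i2}$ on $X_{i1}$ as in (4.2.4) we obtain:

$$\hat\gamma_0 = \bar{X}_2 - \hat\gamma_1 \bar{X}_1 \tag{4.2.13}$$

$$\hat\gamma_1 = \frac{S_{12}}{S_{11}} \tag{4.2.14}$$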

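Piecing the various equations together we obtain:

$$\hat\beta_1 + \hat\beta_2 \hat\gamma_1 = \frac{(S_{01} S_{22} - S_{02} S_{12}) + (S_{02} S_{11} - S_{01} S_{12})(S_{12}/S_{11})}{S_{11} S_{22} - S_{12}^2} = \frac{S_{01}(S_{11} S_{22} - S_{12}^2)/S_{11}}{S_{11} S_{22} - S_{12}^2} = \frac{S_{01}}{S_{11}} = \hat\alpha_1 \tag{4.2.15}$$

Thus, we have confirmed that:

$$\hat\alpha_1 = \hat\beta_1 + \hat\beta_2 \hat\gamma_1 \tag{4.2.16}$$

so that the OLS estimators satisfy (4.2.8). In a similar way we can show that:

$$\hat\alpha_0 = \hat\beta_0 + \hat\beta_2 \hat\gamma_0 \tag{4.2.17}$$

verifying (4.2.7).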
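The relation between the gross effect, the partial effects and the auxiliary regression of $X_2$ on $X_1$ is an exact algebraic identity for the OLS estimates, so it can be checked on any sample. The sketch below does this in plain Python; the data are invented for illustration and the helper names `simple_ols` and `two_regressor_ols` are assumptions, not notation from the text.

```python
def simple_ols(y, x):
    """Intercept and slope from regressing y on a single regressor x."""
    n = len(y)
    ybar, xbar = sum(y) / n, sum(x) / n
    sxx = sum((a - xbar) ** 2 for a in x)
    sxy = sum((a - xbar) * b for a, b in zip(x, y))
    slope = sxy / sxx
    return ybar - slope * xbar, slope


def two_regressor_ols(y, x1, x2):
    """(b0, b1, b2) from the closed-form formulas of section 4.1."""
    n = len(y)
    ybar, m1, m2 = sum(y) / n, sum(x1) / n, sum(x2) / n
    d1 = [a - m1 for a in x1]
    d2 = [b - m2 for b in x2]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s01 = sum(a * v for a, v in zip(d1, y))
    s02 = sum(b * v for b, v in zip(d2, y))
    d = s11 * s22 - s12 ** 2
    b1 = (s01 * s22 - s02 * s12) / d
    b2 = (s02 * s11 - s01 * s12) / d
    return ybar - b1 * m1 - b2 * m2, b1, b2


# Hypothetical sample (not from the text).
X1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
X2 = [2.0, 1.0, 4.0, 3.0, 6.0, 4.0]
Y = [3.1, 3.9, 8.2, 7.8, 12.1, 10.4]

a0, a1 = simple_ols(Y, X1)                 # gross effect: Y on X1 only
g0, g1 = simple_ols(X2, X1)                # auxiliary regression: X2 on X1
b0, b1, b2 = two_regressor_ols(Y, X1, X2)  # partial effects

print("gross slope a1:", a1, " b1 + b2*g1:", b1 + b2 * g1)
print("intercept a0:  ", a0, " b0 + b2*g0:", b0 + b2 * g0)
```

Each printed pair should agree up to floating-point rounding, whatever data are used, as long as $X_1$ and $X_2$ are not perfectly collinear.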

We also observe that $S_{12} = 0$ implies that $\hat\gamma_1 = 0$ (see (4.2.14)). By (4.2.16), in this case we have $\hat\alpha_1 = \hat\beta_1$. Therefore, if $X_{i1}$ and $X_{i2}$ are uncorrelated, then the gross and partial effects of $X_{i1}$ coincide.

The specification issue treated in this section is important and interesting, but at the same time challenging, since it is capable of eroding any econometric specification. Many textbooks treat it under the heading “Omitted variable bias”. In my opinion treating this as a bias problem is not the proper approach. To substantiate this view, we take (4.1.1) as the starting point, but now assume that $X_{i1}$ and $X_{i2}$ are random. Skipping the details, we simply assume that the conditional expectation of $Y_i$ given $X_{i1}$ and $X_{i2}$ can be written:

$$E[Y_i \mid X_{i1}, X_{i2}] = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} \tag{4.2.18}$$

If our model is incomplete in that $X_{i2}$ has not been included in the specification, then evidently the conditional expectation $E[Y_i \mid X_{i1}]$ can be written:

$$E[Y_i \mid X_{i1}] = \beta_0 + \beta_1 X_{i1} + \beta_2 E[X_{i2} \mid X_{i1}] \tag{4.2.19}$$

In general $E[X_{i2} \mid X_{i1}]$ can be an arbitrary function of $X_{i1}$. However, if we stick to the linearity assumption by assuming:

$$E[X_{i2} \mid X_{i1}] = \gamma_0 + \gamma_1 X_{i1} \tag{4.2.20}$$

equation (4.2.19) will lead to the regression function:

$$E[Y_i \mid X_{i1}] = \alpha_0 + \alpha_1 X_{i1} \tag{4.2.21}$$

where $\alpha_0$ and $\alpha_1$ are given by (4.2.7) and (4.2.8).

The point of this lesson is that (4.2.18) and (4.2.21) are simply two different regression functions, each perfectly valid in its own right. To say that $\alpha_1$ is in any respect biased is simply a misuse of language.

4.3 $R^2$ and the adjusted $R^2$ ($\bar{R}^2$)

In section (2.3) we defined the coefficient of determination $R^2$. We recall:

$$R^2 = \frac{\text{Explained sum of squares}}{\text{Total sum of squares}} = 1 - \frac{\text{Sum of squared residuals}}{\text{Total sum of squares}} \tag{4.3.1}$$

where the explained sum of squares is $ESS = \sum_{i=1}^{N} (\hat{Y}_i - \bar{Y})^2$, the total sum of squares is $TSS = \sum_{i=1}^{N} (Y_i - \bar{Y})^2$, and the sum of squared residuals is $SSR = \sum_{i=1}^{N} e_i^2$.

Since $R^2$ never decreases when a new variable is added to a regression, an increase in $R^2$ does not imply that adding a new variable actually improves the fit of the model. In this sense $R^2$ gives an inflated estimate of how well the regression fits the data. One way to correct for this is to deflate $R^2$ by a certain factor. The outcome is the so-called adjusted $R^2$, denoted $\bar{R}^2$.

$\bar{R}^2$ is a modified version of $R^2$ that does not necessarily increase when a new variable is added to the regression equation. $\bar{R}^2$ is defined by:

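$$\bar{R}^2 = 1 - \frac{\hat\sigma^2}{\widehat{\mathrm{Var}}(Y)} = 1 - \frac{\sum e_i^2 / (N-k-1)}{\sum (Y_i - \bar{Y})^2 / (N-1)} = 1 - \frac{(N-1)}{(N-k-1)} \frac{SSR}{TSS} \tag{4.3.2}$$

where $N$ is the number of observations and $k$ is the number of explanatory variables.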

There are a few things to be noted about $\bar{R}^2$. First, the ratio $(N-1)/(N-k-1)$ is always larger than 1, so that $\bar{R}^2$ is always less than $R^2$ (when $k \ge 1$ and $R^2 < 1$). Secondly, adding a new variable has two opposite effects on $\bar{R}^2$. On the one hand, the SSR falls, which increases $\bar{R}^2$. On the other hand, the factor $(N-1)/(N-k-1)$ increases. Whether $\bar{R}^2$ increases or decreases depends on which of the effects is the stronger. Thirdly, an increase in $\bar{R}^2$ does not necessarily mean that the coefficient of the added variable is statistically significant. To find out if an added variable is statistically significant, we have to perform a statistical test, for example a t-test. Finally, a high $\bar{R}^2$ does not necessarily mean that we have specified the most appropriate set of explanatory variables. Specifying econometric models is difficult. We face observability and data problems at every turn, but, in general, we ought to remember that the specified model should have a sound basis in economic theory.
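The two opposite effects of adding a variable on the adjusted $R^2$ can be illustrated with a few lines of Python. The numbers below (TSS, the two SSR values and $N$) are hypothetical and chosen only so that the extra variable reduces the SSR very little; the function names are assumptions for this sketch.

```python
def r_squared(ssr, tss):
    """Ordinary coefficient of determination: 1 - SSR/TSS."""
    return 1 - ssr / tss


def adjusted_r_squared(ssr, tss, n, k):
    """Adjusted R-squared with n observations and k explanatory variables."""
    return 1 - (n - 1) / (n - k - 1) * ssr / tss


# Hypothetical sums of squares for two nested regressions on N = 20 observations.
tss, n = 100.0, 20
ssr_k1 = 40.0   # regression with k = 1 explanatory variable
ssr_k2 = 39.5   # one more variable added: SSR falls only slightly

r2_k1, r2_k2 = r_squared(ssr_k1, tss), r_squared(ssr_k2, tss)
adj_k1 = adjusted_r_squared(ssr_k1, tss, n, 1)
adj_k2 = adjusted_r_squared(ssr_k2, tss, n, 2)

print("R2:         ", r2_k1, "->", r2_k2)
print("adjusted R2:", adj_k1, "->", adj_k2)
```

In this made-up case the ordinary $R^2$ rises while the adjusted $R^2$ falls, because the small drop in SSR does not outweigh the increase in the factor $(N-1)/(N-k-1)$.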

4.4 Hypothesis testing in multiple regression

We have seen above that adding a second explanatory variable to a regression did not demand any new principle as regards estimation. The OLS estimators could be derived by an immediate extension of the “one explanatory variable” case. Much the same can be said about hypothesis testing. We can therefore just as well start with a multiple regression containing $k$ explanatory variables. Hence we specify:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \cdots + \beta_k X_{ik} + \varepsilon_i \tag{4.4.1}$$

where, as usual, $\varepsilon_i$ denotes the random disturbances.

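Suppose we wish to test a simple hypothesis on one of the slope coefficients, for example $\beta_2$. Hence, suppose we wish to test:

$$H_0: \beta_2 = \beta_2^0 \quad \text{against one of} \quad H_A^1: \beta_2 > \beta_2^0, \quad H_A^2: \beta_2 < \beta_2^0, \quad H_A^3: \beta_2 \neq \beta_2^0 \tag{4.4.2}$$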

In chapter 3.1 we showed in detail the relevant procedures for testing $H_0$ against these alternatives in the simple regression. Similar procedures can be applied in this case. We start with the test statistic

$$T = \frac{\hat\beta_2 - \beta_2^0}{\widehat{\mathrm{Std}}(\hat\beta_2)}, \tag{4.4.3}$$

which is t-distributed with $(N-k-1)$ degrees of freedom when $H_0$ is true. In (4.4.3) $\hat\beta_2$ denotes the OLS estimator and $\widehat{\mathrm{Std}}(\hat\beta_2)$ is an estimator of the standard deviation of $\hat\beta_2$.

In the general case (4.4.1) we only have to remember that in order to get an unbiased estimator of $\sigma^2$ (the variance of the disturbances) we have to divide the sum of squared residuals by $(N-k-1)$. Note that in the simple regression $k = 1$, so that $(N-k-1)$ reduces to $(N-2)$. By similar arguments we deduce that the test statistic $T$ given by (4.4.3) has a t-distribution with $(N-k-1)$ degrees of freedom when the null hypothesis is true. With this modification we can follow the procedures described in chapter (3.1). Hence, we can apply the simple t-tests, but we have to choose the appropriate number of degrees of freedom in the t-distribution; remember that fact!
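As an illustration of the degrees-of-freedom correction, the following sketch computes the t-statistic for a hypothesis on $\beta_2$ in the two-regressor case ($k = 2$), estimating $\sigma^2$ by SSR$/(N-k-1)$ and using the variance formula (4.1.18). The data set is invented, the function name `t_stat_beta2` is an assumption, and the critical value is simply the tabulated two-sided 5% value of the t-distribution with 3 degrees of freedom.

```python
import math


def t_stat_beta2(Y, X1, X2, beta2_null):
    """t-statistic for H0: beta2 = beta2_null in a two-regressor OLS fit."""
    n, k = len(Y), 2
    ybar, m1, m2 = sum(Y) / n, sum(X1) / n, sum(X2) / n
    d1 = [a - m1 for a in X1]
    d2 = [b - m2 for b in X2]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s01 = sum(a * y for a, y in zip(d1, Y))
    s02 = sum(b * y for b, y in zip(d2, Y))
    d = s11 * s22 - s12 ** 2
    b1 = (s01 * s22 - s02 * s12) / d
    b2 = (s02 * s11 - s01 * s12) / d
    b0 = ybar - b1 * m1 - b2 * m2
    # Residuals and the unbiased variance estimator: divide by N - k - 1.
    resid = [y - (b0 + b1 * a + b2 * b) for y, a, b in zip(Y, X1, X2)]
    df = n - k - 1
    sigma2_hat = sum(e * e for e in resid) / df
    std_b2 = math.sqrt(sigma2_hat * s11 / d)  # estimated version of (4.1.18)
    return (b2 - beta2_null) / std_b2, df, b2, std_b2


# Hypothetical sample (not from the text): N = 6, k = 2, so df = 3.
X1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
X2 = [2.0, 1.0, 4.0, 3.0, 6.0, 4.0]
Y = [3.1, 3.9, 8.2, 7.8, 12.1, 10.4]

t_value, df, b2_hat, std_b2 = t_stat_beta2(Y, X1, X2, beta2_null=0.0)
T_CRIT = 3.182  # tabulated two-sided 5% critical value of t with 3 degrees of freedom
print("T =", t_value, "with", df, "degrees of freedom")
print("reject H0" if abs(t_value) >= T_CRIT else "do not reject H0")
```

With more explanatory variables the same logic applies; only the variance formula for the estimator and the value of $k$ in the degrees of freedom change.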

The t-tests are not restricted to testing simple hypotheses on the intercept or the various slope parameters; t-tests can also be used to test hypotheses involving linear combinations of the regression coefficients. For instance, suppose we wish to test the null hypothesis:

$$H_0: \beta_1 = \beta_2 \quad \text{against} \quad H_A^3: \beta_1 \neq \beta_2 \tag{4.4.4}$$

We realize that this can be done with an ordinary t-test. The point is that these hypotheses are equivalent to the hypotheses:

$$\tilde{H}_0: \beta_1 - \beta_2 = 0 \quad \text{against} \quad \tilde{H}_A: \beta_1 - \beta_2 \neq 0 \tag{4.4.5}$$

so that if we reject $\tilde{H}_0$ we should also reject $H_0$, etc.

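In order to test $H_0$ against $H_A^3$, we use the test statistic:

$$T = \frac{(\hat\beta_1 - \hat\beta_2) - (\beta_1 - \beta_2)}{\widehat{\mathrm{Std}}(\hat\beta_1 - \hat\beta_2)} = \frac{\hat\beta_1 - \hat\beta_2}{\widehat{\mathrm{Std}}(\hat\beta_1 - \hat\beta_2)} \tag{4.4.6}$$

where the second equality holds under $H_0$. When $H_0$ is true, $T$ will have a t-distribution with $(N-k-1)$ degrees of freedom. Note that $\mathrm{Std}(\hat\beta_1 - \hat\beta_2)$ can be estimated by the formulas:

$$\widehat{\mathrm{Std}}(\hat\beta_1 - \hat\beta_2) = \sqrt{\widehat{\mathrm{Var}}(\hat\beta_1 - \hat\beta_2)} \tag{4.4.7}$$

where

$$\widehat{\mathrm{Var}}(\hat\beta_1 - \hat\beta_2) = \widehat{\mathrm{Var}}(\hat\beta_1) + \widehat{\mathrm{Var}}(\hat\beta_2) - 2\,\widehat{\mathrm{Cov}}(\hat\beta_1, \hat\beta_2) \tag{4.4.8}$$

When the estimates $\hat\beta_1$ and $\hat\beta_2$ and $\widehat{\mathrm{Std}}(\hat\beta_1 - \hat\beta_2)$ are available, we can easily compute the value of the test statistic $T$. After that we continue as with the usual t-tests.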
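The test of equal slopes can be sketched in the same way for the two-regressor model. The code below builds the estimated variance of the difference from the estimated variances and covariance of the two slope estimators (section 4.1 formulas with $\sigma^2$ replaced by its estimate). The sample is hypothetical and the function name `t_stat_equal_slopes` is an assumption for this illustration.

```python
import math


def t_stat_equal_slopes(Y, X1, X2):
    """t-statistic for H0: beta1 = beta2 in a two-regressor OLS fit."""
    n, k = len(Y), 2
    ybar, m1, m2 = sum(Y) / n, sum(X1) / n, sum(X2) / n
    d1 = [a - m1 for a in X1]
    d2 = [b - m2 for b in X2]
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s01 = sum(a * y for a, y in zip(d1, Y))
    s02 = sum(b * y for b, y in zip(d2, Y))
    d = s11 * s22 - s12 ** 2
    b1 = (s01 * s22 - s02 * s12) / d
    b2 = (s02 * s11 - s01 * s12) / d
    b0 = ybar - b1 * m1 - b2 * m2
    resid = [y - (b0 + b1 * a + b2 * b) for y, a, b in zip(Y, X1, X2)]
    df = n - k - 1
    sigma2_hat = sum(e * e for e in resid) / df
    # Estimated variances and covariance of the slope estimators.
    var_b1 = sigma2_hat * s22 / d
    var_b2 = sigma2_hat * s11 / d
    cov_b12 = -sigma2_hat * s12 / d
    # Variance of the difference: Var(b1) + Var(b2) - 2 Cov(b1, b2).
    var_diff = var_b1 + var_b2 - 2 * cov_b12
    closed_form = sigma2_hat * (s11 + s22 + 2 * s12) / d  # same quantity, simplified
    return (b1 - b2) / math.sqrt(var_diff), df, var_diff, closed_form


# Hypothetical sample (not from the text).
X1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
X2 = [2.0, 1.0, 4.0, 3.0, 6.0, 4.0]
Y = [3.1, 3.9, 8.2, 7.8, 12.1, 10.4]

t_value, df, var_diff, closed_form = t_stat_equal_slopes(Y, X1, X2)
print("T =", t_value, "with", df, "degrees of freedom")
```

The value of `t_value` is then compared with the critical value of the t-distribution with `df` degrees of freedom, exactly as for a test on a single coefficient.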

Although the t-tests are not restricted to the simplest situations, we will quickly face test situations that these tests cannot handle. As an example we consider a model from labor market economics: suppose that wages $Y_i$ depend on workers' education $X_{i1}$ and experience $X_{i2}$. In order to investigate the dependence of $Y_i$ on $X_{i1}$ and $X_{i2}$, we specify the regression:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \beta_3 X_{i2}^2 + \varepsilon_i \quad (i = 1, 2, \ldots, N) \tag{4.4.9}$$

where $\varepsilon_i$ denotes the usual disturbance term.

Note that the presence of the quadratic term $X_{i2}^2$ does not create problems for estimating the regression coefficients. It is hardly even a small hitch. We only have to define the new variable:

$$X_{i3} = X_{i2}^2 \tag{4.4.10}$$

The regression (4.4.9) becomes:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \beta_3 X_{i3} + \varepsilon_i \quad (i = 1, 2, \ldots, N) \tag{4.4.11}$$

Suppose now that we are uncertain whether workers' experience $X_{i2}$ has any effect on the wages $Y_i$. In order to settle this issue we have to test a joint null hypothesis, namely:

$$H_0: \beta_2 = 0 \text{ and } \beta_3 = 0 \quad \text{versus} \quad H_A: \beta_2 \neq 0 \text{ and/or } \beta_3 \neq 0 \tag{4.4.12}$$

In this case the null hypothesis restricts the values of two of the coefficients, so as a matter of terminology we can say that the null hypothesis in (4.4.12) imposes two restrictions on the multiple regression model, namely $\beta_2 = \beta_3 = 0$. In general, a joint hypothesis is a hypothesis which imposes two or more restrictions on the regression coefficients.

It might be tempting to think that we could test the joint hypothesis (4.4.12) by using the usual t-statistics to test the restrictions one at a time. But this testing procedure will be very unreliable, since the overall significance level of such a sequence of tests differs from the level chosen for the individual tests. Luckily, there exist test procedures which manage to handle joint hypotheses on the regression coefficients.

So, how can we proceed to test the joint hypothesis (4.4.12)? If the null hypothesis is true, the regression (4.4.11) becomes:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \varepsilon_i \quad (i = 1, 2, \ldots, N) \tag{4.4.13}$$

Obviously, we have to investigate two regressions, the one given by (4.4.11) and the other given by (4.4.13). Since there are no restrictions on (4.4.11) it is called the unrestricted form, while (4.4.13) is called the restricted form of the regression. It is very natural to base a test of the joint null hypothesis (4.4.12) on the sums of squared residuals resulting from these two regressions. If $(SSR)_R$ denotes the sum of squared residuals obtained from (4.4.13) and $(SSR)_U$ denotes that obtained from (4.4.11), we will be doubtful about the truth of $H_0$ if $(SSR)_R$ is considerably greater than $(SSR)_U$. If $(SSR)_R$ is only slightly larger than $(SSR)_U$ there is no reason to be doubtful about $H_0$.

Since $(SSR)_R$ stems from the restricted regression (4.4.13), we obviously have:

$$(SSR)_R \ge (SSR)_U \tag{4.4.14}$$

In order to test joint hypotheses on the regression coefficients the standard approach is to use a so-called F-test. In our present example this test is very intuitive. In the general case it is based on the test statistic:

$$F = \frac{((SSR)_R - (SSR)_U)/r}{(SSR)_U/(N-k-1)} \tag{4.4.15}$$

where $r$ denotes the number of restrictions and $k$ denotes the number of explanatory variables in the unrestricted regression. In our example above, $r = 2$ and $k = 3$.

If $H_0$ is true, then $F$ has a so-called Fisher distribution (F-distribution) with $(r, N-k-1)$ degrees of freedom: the numerator has $r$ degrees of freedom and the denominator $(N-k-1)$. From (4.4.14) it is obvious that the test statistic $F$ is concentrated on the positive axis. Small values of $F$ indicate that $H_0$ is compatible with the sample data.

The general approach to testing a joint null hypothesis $H_0$ against an alternative $H_A$ proceeds as usual:

(i) Choose a suitable test statistic.

(ii) Choose a level of significance ($\alpha$).

(iii) When $H_0$ is true, the test statistic $F$ will have a Fisher distribution with $r$ and $(N-k-1)$ degrees of freedom.

(iv) The critical value $F_\alpha^c$ in this distribution is determined from the equation:

$$P\{F(r, N-k-1) \ge F_\alpha^c\} = \alpha \tag{4.4.16}$$

(v) When the regressions have been performed, we compute the value of the test statistic, $\hat{F}$.

(vi) Decision rule: (a) Reject $H_0$ if $\hat{F} \ge F_\alpha^c$. (b) Do not reject $H_0$ if $\hat{F} < F_\alpha^c$.

Fig. (4.4.1)

$$\hat{F} \in [F_\alpha^c, \infty) \;\Rightarrow\; \text{reject } H_0 \tag{4.4.17}$$

$$\hat{F} \in [0, F_\alpha^c) \;\Rightarrow\; \text{do not reject } H_0 \tag{4.4.18}$$

Applying this test to our null hypothesis (4.4.12) gave the following results:

(4.4.19)

Yi 0.078 0.118 X i1 0.054 X i 2 0.001X i 3 ei

$(SSR)_U = 18.6085$

(4.4.20)

Yi 0.673 0.107 X i1 ei

$(SSR)_R = 19.5033$

$N = 100, \quad k = 3, \quad N - k - 1 = 96, \quad r = 2$

$$\hat{F} = \frac{(19.5033 - 18.6085)/2}{18.6085/96} = 2.308 \tag{4.4.21}$$

The critical value $F_{0.05}^c$ is determined by:

$$P\{F(2, 96) \ge F_{0.05}^c\} = 0.05 \tag{4.4.22}$$

Tables of the F-distribution show that $F_{0.05}^c \approx 3.1$. Since $\hat{F} = 2.308 < 3.1 \approx F_{0.05}^c$, there is no reason to reject $H_0$. Workers' experience does not seem to have an impact on workers' wages in this sample. We can also compute the P-value for this test in the same way as we have learned above. We obtain:

$$P\text{-value} = P\{F(2, 96) \ge 2.308\} = 0.105 \tag{4.4.23}$$
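The numbers in this example can be reproduced with a short Python sketch. The F-statistic follows the restricted/unrestricted SSR formula directly. For the tail probability and the critical value the sketch relies on a closed form that holds only when the numerator has exactly 2 degrees of freedom, $P\{F(2, m) \ge f\} = (1 + 2f/m)^{-m/2}$; this shortcut is not taken from the text, and for other values of $r$ one would use tables or a statistics library. All function names are assumptions.

```python
def f_statistic(ssr_r, ssr_u, r, n, k):
    """F-statistic from restricted and unrestricted sums of squared residuals."""
    return ((ssr_r - ssr_u) / r) / (ssr_u / (n - k - 1))


def f_tail_prob_r2(f, df2):
    """P{F(2, df2) >= f}; closed form valid only for 2 numerator degrees of freedom."""
    return (1 + 2 * f / df2) ** (-df2 / 2)


def f_critical_r2(alpha, df2):
    """Critical value solving P{F(2, df2) >= c} = alpha (numerator df = 2 only)."""
    return (df2 / 2) * (alpha ** (-2 / df2) - 1)


# Values reported in the example: N = 100, k = 3, r = 2.
ssr_u, ssr_r = 18.6085, 19.5033
n, k, r = 100, 3, 2

f_hat = f_statistic(ssr_r, ssr_u, r, n, k)
p_value = f_tail_prob_r2(f_hat, n - k - 1)
f_crit = f_critical_r2(0.05, n - k - 1)

print("F-statistic:      ", round(f_hat, 3))
print("5% critical value:", round(f_crit, 2))
print("P-value:          ", round(p_value, 3))
print("reject H0" if f_hat >= f_crit else "do not reject H0")
```

The computed statistic lies below the 5% critical value, in line with the conclusion that the null hypothesis is not rejected in this sample.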

A joint hypothesis which is often of interest is whether the explanatory variables have any impact at all on the dependent variable. Thus, referring to (4.4.1), we wish to test the null hypothesis:

$$H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0 \quad \text{against} \quad H_A: \text{at least one } \beta_j \neq 0, \; j = 1, 2, \ldots, k \tag{4.4.24}$$

$(SSR)_U$ is computed from the unrestricted regression (4.4.1), while $(SSR)_R$ is computed from the restricted regression:

$$Y_i = \beta_0 + \varepsilon_i \quad (i = 1, 2, \ldots, N) \tag{4.4.25}$$

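We observe immediately that in the restricted model $\beta_0$ is estimated by:

$$\hat\beta_0 = \bar{Y} \quad (\text{implying } \hat{Y}_i = \bar{Y}) \tag{4.4.26}$$

so that:

$$(SSR)_R = \sum (Y_i - \hat{Y}_i)^2 = \sum (Y_i - \bar{Y})^2 = TSS \tag{4.4.27}$$

The numerator in the test statistic $F$ is then based on:

$$(SSR)_R - (SSR)_U = \sum (\hat{Y}_i - \bar{Y})^2 = ESS \tag{4.4.28}$$

where $\hat{Y}_i$ now denotes the fitted values from the unrestricted regression (4.4.1).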

Hence, with $r = k$ restrictions, the test statistic $F$ reduces to:

$$F = \frac{\sum (\hat{Y}_i - \bar{Y})^2 / k}{(SSR)_U/(N-k-1)} = \frac{ESS/k}{(SSR)_U/(N-k-1)} = \frac{R^2/k}{(1-R^2)/(N-k-1)} \tag{4.4.29}$$

(using the definitions in section (4.3)).
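The equivalence between the sums-of-squares form and the $R^2$ form of the overall F-statistic can be confirmed numerically. The sketch below uses made-up values for TSS, $(SSR)_U$, $N$ and $k$; the function names are assumptions for this illustration.

```python
def overall_f_from_sums(ess, ssr_u, n, k):
    """Overall F-statistic written with ESS and the unrestricted SSR."""
    return (ess / k) / (ssr_u / (n - k - 1))


def overall_f_from_r2(r2, n, k):
    """The same statistic written in terms of R-squared."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


# Hypothetical values: TSS = 50, unrestricted SSR = 20, N = 30, k = 3.
tss, ssr_u, n, k = 50.0, 20.0, 30, 3
ess = tss - ssr_u   # TSS = ESS + SSR
r2 = ess / tss

f_sums = overall_f_from_sums(ess, ssr_u, n, k)
f_r2 = overall_f_from_r2(r2, n, k)
print(f_sums, f_r2)
```

Both expressions give the same value because dividing the numerator and the denominator of the first form by TSS turns ESS into $R^2$ and $(SSR)_U$ into $1 - R^2$.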
