Summary Spector

advertisement

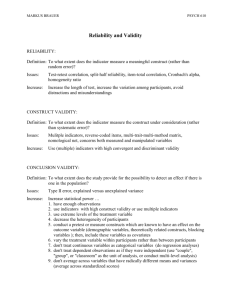

Summated rating scale construction (Spector) – A summary

Chapter 1: Introduction

Characteristics of a summated rating scale:
1. It contains multiple items (the items are summed).
2. Each individual item measures something that has an underlying, quantitative measurement continuum (e.g. attitude).
3. Each item has no right answer.
4. Each item is a statement on which respondents give a rating.

Why use multiple-item scales?
Reliability: single items do not produce responses that are consistent over time. Multiple items improve reliability by allowing random errors of measurement to average out (minimizing the impact of any one item).
Precision: single items (e.g. yes/no questions) are imprecise because they restrict measurement to only two levels.
Scope: many measured characteristics are broad in scope and not easily assessed with only one question. A variety of questions enlarges the scope of what is measured.

Reliability: does the scale measure something consistently?
Test-retest reliability: a scale yields consistent measurement over time.
Internal consistency reliability: multiple items, designed to measure the same construct, will intercorrelate with one another.

Validity: does the scale measure its intended construct?

Steps of scale construction:
1. Define the construct: what is the construct of interest? Give a clear and precise definition of what the scale is intended to measure.
2. Design the scale: write the initial item pool.
3. Pilot test: a small number of respondents are asked to critique the scale. Based on their feedback, the scale is revised.
4. Administration and item analysis: a sample completes the scale. Coefficient alpha is calculated and reliability is initially established.
5. Validate and norm the scale.

Chapter 2: Theory of summated rating scales

Classical test theory (CTT): Observed score = True score + Random Error (O = T + E). The larger the error component, the lower the reliability.
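The relation between error and reliability can be illustrated with a small simulation. This is only a sketch under stated assumptions, not something taken from the book: true scores and errors are drawn from normal distributions, reliability is estimated as the correlation between two administrations that share the same true score, and the function names and error sizes are hypothetical.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def simulated_reliability(error_sd, n_respondents=5000, seed=0):
    """Correlate two administrations with the same T but independent E."""
    rng = random.Random(seed)
    # Assumption: true scores are standard normal (mean 0, SD 1).
    true_scores = [rng.gauss(0, 1) for _ in range(n_respondents)]
    # O = T + E, with a fresh random error for each administration.
    first = [t + rng.gauss(0, error_sd) for t in true_scores]
    second = [t + rng.gauss(0, error_sd) for t in true_scores]
    return pearson(first, second)


small_error = simulated_reliability(error_sd=0.5)
large_error = simulated_reliability(error_sd=2.0)
print(round(small_error, 2), round(large_error, 2))
```

With the larger error standard deviation, the correlation between the two administrations should come out clearly lower, which is the pattern the text describes.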
With multiple items combined into an estimate of the true score, errors tend to average out, leaving a more accurate and consistent (reliable) measurement from one occasion to the next.

Extension of CTT: O = T + E + B, where B is bias. Examples of bias:
Social desirability.
Response sets: tendencies for people to respond to items systematically (e.g. the acquiescence response set, a tendency to agree with all items regardless of content).

Chapter 3: Defining the construct

A clear construct definition is necessary for developing good items and for deriving hypotheses for validation purposes.

Inductive development: the construct is clearly defined first, and this definition guides the subsequent scale development. Validation takes a confirmatory approach. This is the preferred approach for test construction.
Deductive development: items are administered to subjects and analyses are used to uncover the constructs within the items. Validation takes an exploratory approach, and the results then have to be interpreted.

How to define the construct: review the literature and use already existing scales as a starting point for scale development.

Homogeneity and dimensionality of constructs: the content of complex constructs can only be adequately covered by a scale with multiple subscales.

Summated rating scale: multiple items are combined and analyzed together instead of separately.

Chapter 4: Designing the scale

Three parts in designing:
1. Number and nature of response choices. Nature: agreement, evaluation or frequency. Number: generally between 5 and 9.
2. Writing the item stems.
3. Instructions to respondents: giving directions, describing the construct, or explaining the response choices (what is meant by ‘quite often’ and ‘seldom’).

Unipolar scales: the scale varies from zero to a high positive value (e.g. frequency of occurrence).
Bipolar scales: the scale has both positive and negative values, with the zero point in the middle (e.g. attitudes).

When both positively and negatively worded items are used, reverse the scores of the negatively worded items: R = (H + L) - I, where H is the largest possible response value, L is the lowest, I is the response to the item and R is the reversed score.

Rules for writing good items:
1. Each item should express one and only one idea.
2. Use both positively and negatively worded items. In this way, bias from response tendencies (e.g. acquiescence, the tendency to agree or disagree with all items) can be reduced. Acquiescence can produce extremely high or low scores, because such respondents tend to agree (or disagree) with all items when the items are all worded in the same direction.
3. Avoid colloquialisms, expressions and jargon.
4. Consider the reading level of the respondents (and thus also the complexity of the items).
5. Avoid the use of negatives to reverse the wording of an item. People can misread (miss) negatives and score the item on the wrong side of the scale.

Chapter 5: Conducting the item analysis

Goal: producing a tentative version of the scale, suitable for validation. Administer the scale to a sample of respondents (100-200) for the item analysis.

Item analysis

Purpose: to find items that form an internally consistent scale and to eliminate those items that do not.
Internal consistency: a measurable property of items that implies that they measure the same underlying construct. Do the items intercorrelate?
Item-remainder coefficient: how well does an individual item relate to the other items? It is the correlation of each item with the sum of the other items. Negatively worded items must be reverse-scored first. The items with the highest item-remainder coefficients are the ones that are retained. Items can be selected either by keeping a fixed number of items (those with the largest coefficients) or by requiring a minimum coefficient for each item.
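The reverse-scoring rule R = (H + L) - I and the item-remainder coefficient can be sketched in a few lines of Python. This is a minimal illustration, not the book's procedure: the response data are made up, the fourth item is assumed to be the negatively worded one, and the function names are hypothetical.

```python
def reverse_score(response, lowest, highest):
    """Reverse a response on a scale from lowest to highest: R = (H + L) - I."""
    return (highest + lowest) - response


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def item_remainder_coefficients(responses):
    """Correlate each item with the sum of all the other items.

    responses: one list of item scores per respondent, with negatively
    worded items already reverse-scored.
    """
    n_items = len(responses[0])
    coefficients = []
    for i in range(n_items):
        item = [row[i] for row in responses]
        remainder = [sum(row) - row[i] for row in responses]
        coefficients.append(pearson(item, remainder))
    return coefficients


# Hypothetical data: 6 respondents, 4 items on a 1-to-5 scale.
# Assumption: the fourth item is negatively worded.
raw = [
    [5, 4, 5, 1],
    [4, 4, 4, 2],
    [2, 3, 2, 4],
    [1, 2, 1, 5],
    [3, 3, 4, 3],
    [4, 5, 4, 2],
]
scored = [row[:3] + [reverse_score(row[3], 1, 5)] for row in raw]
coefficients = item_remainder_coefficients(scored)
print([round(c, 2) for c in coefficients])
```

Skipping the reverse-scoring step in this example would leave the fourth item negatively related to the rest, which is the kind of scoring error a negative item-remainder coefficient points to.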
The more items there are, the lower the coefficients can be and still yield an internally consistent scale.

Coefficient alpha (Cronbach): measures the internal consistency reliability of a scale. It is a function of the number of items and the magnitude of their intercorrelations. Coefficient alpha can be raised by increasing the number of items or by raising their intercorrelation. Even items with low intercorrelations can produce a relatively high coefficient alpha if there are enough items.

If all items represent an underlying construct, then the intercorrelations among them represent the opposite of error. An item that does not correlate with the others is expected to consist of error. Error can be averaged out if there are a lot of items.

Nunnally: Cronbach’s alpha should be at least 0.70 for a scale to demonstrate internal consistency. Alpha is not a correlation. It should be positive when the scale has been scored and calculated correctly.

Coefficient alpha compares the variance of the total scale score (the sum of the items) with the variances of the individual items: alpha = (k / (k - 1)) × (1 - (sum of the item variances) / (variance of the total score)), where k is the number of items. When items are uncorrelated, the variance of the total score equals the sum of the variances of all items, so alpha is zero.

Use both coefficient alpha and item-remainder coefficients for choosing items for a scale (see table 5.1, page 33). When an item-remainder coefficient is negative, a scoring error may have been made, and the previous steps should be re-examined. The item might also be poorly written or inappropriate for the respondents, or the conceptualization of the construct might have weaknesses.

External criteria for item selection

Items can also be selected or deleted based on their relations with criteria external to the scale.
Bias: items are deleted when they relate to a bias variable (the variable of interest here). The scale is administered to a sample, and the bias variable is measured on the same people. Each item is then correlated with the bias variable.
Only items with small or no relations to the bias variable are selected.
Social desirability (SD): people tend to agree with favorable items about themselves and disagree with unfavorable items. Each item is correlated with the scores on an SD measure. Items with a significant correlation are deleted, so that the scale is developed without SD bias.

After the item selection, a tentative version of the scale is ready. The item analysis needs to be repeated on a second sample to further establish reliability and validity.

When the scale needs more work

When an acceptable level of internal consistency is not achieved, the cause might lie in the items or in the construct definition. See the rules for writing good items. The construct might also contain too many elements, or be too broadly or vaguely defined.

Spearman-Brown formula: a formula for estimating the number of additional items needed to achieve a given level of internal consistency (coefficient alpha). It assumes that added or deleted items are of the same quality as the initial or retained items. The formula works in both directions: it can also calculate the coefficient alpha that will be reached when the number of items is increased or decreased by a certain factor: alpha_new = (k × alpha) / (1 + (k - 1) × alpha), where k is the factor by which the number of items is multiplied. When the formula is used for decreasing the number of items, take care that coefficient alpha does not become too low.

Example (table 5.2, page 38): an initial item pool of 15 items is reduced by the item analysis to 5 items with a coefficient alpha of 0.60. The table indicates that the number of items needs to be doubled (to 10) to achieve 0.75 and tripled (to 15) to achieve 0.82. Assuming that newly written items are of the same quality, so that again only a third of them are retained after the analysis, you need to write another 15 items (of which 5 are retained) to reach 0.75, or another 30 items (of which 10 are retained) to reach 0.82.
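The coefficient alpha and Spearman-Brown calculations discussed in this chapter can be sketched as follows. This is a hedged illustration using the standard textbook forms of both formulas, not code from the book; the function names are hypothetical. The last lines recompute the example of 5 items with an alpha of 0.60.

```python
from statistics import variance


def cronbach_alpha(responses):
    """alpha = (k / (k - 1)) * (1 - sum of item variances / total variance).

    responses: one list of item scores per respondent (items already
    reverse-scored where needed).
    """
    k = len(responses[0])
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def spearman_brown(alpha, factor):
    """Predicted alpha when the number of items is multiplied by `factor`."""
    return (factor * alpha) / (1 + (factor - 1) * alpha)


def lengthening_factor(alpha, target):
    """Factor by which the item count must change to reach `target` alpha."""
    return (target * (1 - alpha)) / (alpha * (1 - target))


# The example from the text: 5 items with alpha = 0.60.
print(round(spearman_brown(0.60, 2), 2))  # doubling to 10 items
print(round(spearman_brown(0.60, 3), 2))  # tripling to 15 items
print(round(lengthening_factor(0.60, 0.75), 2))
```

A factor below 1 in `spearman_brown` estimates the alpha of a shortened scale, under the same assumption that the removed items are of the same quality as the retained ones.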
Multidimensional scales

Many constructs are broad and contain multiple dimensions. Developing such a scale is much the same as developing a unidimensional scale, only now subscales are used. It is best when each item is part of only one subscale. During construct development it is important to determine where constructs overlap and where they are distinct. Where scales share item content, one should be careful in interpreting their intercorrelations.

Chapter 6: Validation

If a scale is internally consistent, it is reliable: it certainly measures something. But does it measure the intended construct? Hypotheses are developed about the causes, effects, and correlates of the construct, and the scale is used to test these hypotheses. Empirical support for the hypotheses implies validity of the scale. When a sufficient amount of data supporting validity has been collected, the scale is (tentatively) declared to be construct valid.

Empirical validation evidence provides support for theoretical predictions about how the construct of interest will relate to other constructs. It demonstrates the potential utility of the construct. Validation takes place after the item analysis has been conducted and the items have been chosen.

Techniques for studying validity

Three approaches for establishing validity:
1. Criterion-related validity: involves the testing of hypotheses about how the scale will relate to other variables.
2. Discriminant and convergent validity: investigating the comparative strengths or patterns of relations among several variables.
3. Factor analysis: used to explore the dimensionality of a scale.

Criterion-related validity

Scores on the scale of interest are compared with scores on other variables: the criteria. It begins with the generation of hypotheses about relations between the construct of interest and other constructs.
The scale is validated against these hypotheses, which are either generated from existing theory or from one's own theoretical work.

1. Concurrent validity: all data are collected simultaneously, i.e. data from a sample of respondents on the scale of interest and on criteria hypothesized to relate to the scale of interest. The hypotheses are that the scale of interest will correlate with one or more criteria. Findings that the scale of interest relates significantly to the hypothesized variables are taken as support for validity. In this type of validity study, the scale of interest is often embedded in a questionnaire that contains measures of several variables.
2. Predictive validity: the same as concurrent validity, except that data for the scale of interest are collected before the criterion variables. It demonstrates how well a scale can predict a future variable, for example respondents dropping out of school, as predicted by their attitudes or personality.
3. Known-groups validity: based on hypotheses that certain groups of respondents will score higher on a scale than others. The criterion is categorical instead of continuous. Means on the scale of interest are compared among respondents at each level of the categorical variable, and the hypotheses specify which groups score higher. E.g. for a scale of job complexity, it is hypothesized that corporate executives will score higher than data-entry clerks. If they do not, something is wrong and the scale is not valid.

Two critical features for all criterion-related validity studies:
1. The underlying theory from which the hypotheses are drawn must be solid. This also applies to a concurrent validity study: if something goes wrong, you cannot easily discover whether the problem lies with the theory or with the scale.
2. There must be a good measurement of the criterion in order to conduct a good validity test of a scale.
If you don’t find the expected relations with criteria, this might just as well be caused by criterion invalidity as by scale invalidity.

Convergent and discriminant validity

Convergent validity: different measures of the same construct should relate strongly with each other. It is indicated by comparing scores on a scale with an alternative measure of the same construct. The two measures should correlate strongly.
Discriminant validity: measures of different constructs should relate only modestly with each other.

Multitrait-multimethod matrix (MTMM), page 51: a way of simultaneously exploring convergent and discriminant validities. At least two constructs are measured, and each is measured with at least two separate methods. The correlations between subscales measuring the same trait across methods are the convergent validities. Convergent validity is indicated by the values on the validity diagonal, which should be statistically significant and relatively large in magnitude. For each subscale, this value should be larger than the other values in its row or column. So, two measures of the same construct should correlate more strongly with each other than they do with measures of any other construct.

Use of factor analysis for scale validation

Unidimensional scales: factor analysis can be used to explore possible subdimensions within the group of selected items.
Multidimensional scales: factor analysis can be used to verify that the items empirically form the intended subscales.

Basic aspects of factor analysis

Factor analysis reduces a number of items to a smaller number of underlying groups of items, called factors. These factors can be indicators of separate constructs or of different aspects of a single, rather heterogeneous construct. Factors are derived from analyzing the patterns of covariation (or correlation) among items. Groups of items that interrelate more strongly with each other than with other groups tend to form factors. Results are a function of the items entered.
Subscales with more items tend to produce stronger factors; subscales with few items tend to produce weak factors. Items that intercorrelate relatively highly are assumed to reflect the same construct (convergent validity). Items that intercorrelate relatively weakly are assumed to reflect different constructs.

Exploratory factor analysis

Useful for determining the number of separate components that might exist for a group of items. The goal is to explore the dimensionality of the scale itself. Two questions must be addressed with a factor analysis:
1. The number of factors that represent the items.
2. The interpretation of the factors.

Steps in the factor analysis

Principal components are derived, with one factor or component derived for each item analyzed. Each of these initial factors is associated with an eigenvalue, which reflects the relative proportion of variance accounted for by that factor. The sum of all eigenvalues will equal the number of items (since there is one eigenvalue for each item). If all items correlated perfectly, they would produce a single factor with an eigenvalue equal to the number of items. The other eigenvalues would then equal zero.

After it is determined how many factors exist, an orthogonal rotation procedure is applied to the factors. This results in a loading matrix that indicates how strongly each item relates to each factor. A loading matrix contains factor loadings, which are the correlations of each original variable with each factor. Every variable (rows in the matrix) has a loading for every factor (columns in the matrix). A variable ‘loads’ on a factor if the factor loading is larger than about 0.30 to 0.35 (so the correlation between item and factor is at least 0.30).

Dooley, page 91: Factor analysis identifies how many different constructs (or factors) are being measured by a test’s items and the extent to which each item in the test is related to (loaded on) each factor.
Factor analysis uses the correlations among all items of a test to identify groups of items that correlate more highly among themselves than with items outside the group. Each group of items defines a common factor.

Confirmatory factor analysis

Confirmatory factor analysis (CFA) allows the testing of a hypothesized structure: the items of the subscales are tested to see whether they support the intended subscale structure. With exploratory factor analysis, the ‘best fitting factor structure’ is fit to the data. With confirmatory factor analysis, the structure is hypothesized in advance, and the data are fit to this structure.

Conducting the CFA: specify in advance the number of factors, the factor(s) on which each item will load, and whether or not the factors are intercorrelated. Loadings are again presented in a similar loading matrix. Each element is either set to zero (so the item does not load on that factor) or ‘freed’ so that its loading can be estimated by the analysis. Another matrix represents the correlations among factors. The CFA yields estimates of the factor loadings and interfactor correlations.

A CFA that fits well indicates that the subscale structure may explain the data, but it does not mean that it actually does. Support for the subscales in an instrument is not very strong evidence that they reflect their intended constructs. Additional evidence is needed.

Validation strategy

Test validation requires a strategy of collecting as many different types of evidence as possible. The evidence becomes strong if it is tied to convergent validities based on very different operationalizations (measurements). Accumulation of validation evidence shows that a scale is working as expected. At this point you can say that the test demonstrates construct validity (supported, but never proven: new theory can always bring different findings that make the initial explanation incorrect).
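The eigenvalue properties mentioned under the steps in the factor analysis can be checked numerically. The sketch below is only an illustration with made-up data and assumes NumPy is available; it computes the eigenvalues of the item correlation matrix and does not perform rotation or estimate loadings. The function name is hypothetical.

```python
import numpy as np


def correlation_eigenvalues(data):
    """Eigenvalues of the item correlation matrix, largest first.

    data: 2-D array-like with one row per respondent, one column per item.
    """
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    # eigvalsh is meant for symmetric matrices such as a correlation matrix.
    return np.sort(np.linalg.eigvalsh(corr))[::-1]


# Hypothetical responses: 6 respondents, 4 items.
responses = [
    [5, 4, 5, 5],
    [4, 4, 4, 4],
    [2, 3, 2, 2],
    [1, 2, 1, 1],
    [3, 3, 4, 3],
    [4, 5, 4, 4],
]
eigenvalues = correlation_eigenvalues(responses)
print(eigenvalues.round(2), eigenvalues.sum().round(2))

# Three perfectly correlated items: each column is a linear function of the first.
base = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
perfect = np.column_stack([base, 2 * base + 1, 3 * base])
perfect_eigenvalues = correlation_eigenvalues(perfect)
print(perfect_eigenvalues.round(2))
```

In the first case the eigenvalues should sum to the number of items. In the second, the first eigenvalue should equal the number of items and the rest should be zero, up to floating-point error.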
Chapter 7: Reliability and norms

Reliability

Internal consistency reliability is an indicator of how well the individual items of a scale measure an underlying construct. Coefficient alpha is most often used to measure internal consistency. It is good to replicate the coefficient alpha in subsequent samples. Estimates of reliability across different samples will expand the generalizability of the scale to more subject groups.

Test-retest reliability reflects measurement consistency over time. How well does a scale correlate with itself across repeated administrations to the same respondents? The longer the time period, the lower the test-retest reliability is expected to be. Calculation: administer the test twice and identify each respondent by a code. Match the two administrations by code and calculate the correlation coefficient between them.

Norms

In order to interpret the meaning of scores, you need to know something about the distribution of scores in various populations. The normative approach uses the distribution of scores as the frame of reference for interpreting the meaning of a score: the score of an individual is compared to the distribution of all scores.

Most scales are developed and normed on limited populations. To compile norms, one would collect data with the instrument on as many respondents as possible. Reliability and validity studies will provide data that can be added to the norms of the instrument. One would then calculate the mean and standard deviation across all respondents and describe the shape of the distribution.

Subpopulations should be studied as well. The availability of normative data on different groups (males and females, race) increases the likelihood that meaningful comparisons can be made. If you want to determine overall norms for the population, make sure that the sample is representative of the population of interest.
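The test-retest calculation and the basic norm statistics described in this chapter can be sketched as follows. This is a minimal illustration with hypothetical respondent codes and scores. The z-score used to place an individual score in the norm distribution is a common convention and is an assumption here, not a step taken from the summary.

```python
from statistics import mean, stdev


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def retest_reliability(first, second):
    """Match two administrations by respondent code and correlate the scores.

    first, second: dicts mapping respondent code -> total scale score.
    Respondents who appear in only one administration are dropped.
    """
    codes = sorted(set(first) & set(second))
    return pearson([first[c] for c in codes], [second[c] for c in codes])


def norms(scores):
    """Mean and standard deviation of the normative sample."""
    return mean(scores), stdev(scores)


def z_score(score, norm_mean, norm_sd):
    """Position of one score relative to the norm distribution (assumed convention)."""
    return (score - norm_mean) / norm_sd


# Hypothetical data: respondent codes and total scores at two points in time.
time1 = {"r01": 10, "r02": 20, "r03": 30}
time2 = {"r01": 12, "r02": 22, "r03": 32, "r04": 5}  # r04 missed time 1
reliability = retest_reliability(time1, time2)
norm_mean, norm_sd = norms(list(time1.values()))
print(round(reliability, 2), norm_mean, norm_sd, z_score(30, norm_mean, norm_sd))
```

Norms for subpopulations could be compiled with the same functions by passing only the scores of the group of interest.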