Multiple Choice Exams: Not Just for Recall

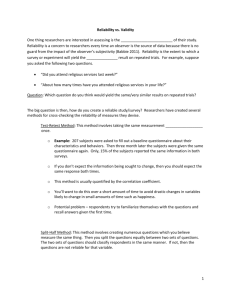

Using Multiple Choice Tests for Assessment Purposes: Designing Multiple Choice Tests to Reflect and Foster Learning Outcomes
Terri Flateby, Ph.D.
tlflateby@gmail.com

Overview of Assessment Process
• Select or develop measurable learning outcomes (course or program)
• Select or develop measures consistent with the outcomes
• Measure learning outcomes
• Analyze learning results
• Make adjustments in curriculum, instructional strategies, or activities to address weaknesses
• Re-evaluate learning outcomes

Purposes of Classroom Achievement Tests
• Measure Individual Student’s Learning
• Evaluate Class Performance
• Evaluate Test and Improve Learning
• Support Course and Program Outcomes

Why Use Multiple-Choice Tests to Measure Achievement of Learning Outcomes?
• Efficient
  – More content coverage in less time
  – Faster to evaluate
  – Methods to evaluate test items
• In some cases, can provide a proxy to Constructed Response measures

Above All
Testing and Assessment should Promote Learning

To Promote Learning, Tests Must Be:
• Valid: Tests should be an Accurate Indicator of Content and Level of Learning (Content validity)
• Reliable: Tests Should Produce Consistent Results

Validity
• Tests must measure what you want your students to know and be able to do with the content (reach the cognitive demands of the outcomes).
• Tests must be consistent with instruction and assignments, which should foster the cognitive demands.
Process of Ensuring Validity
• Table of Item Specifications, also called Test Blue Print – useful for classroom tests and guiding assessment
• Review item performance after administering test

Test Blue Print Reflects the Important Content and Cognitive Demands

| Content/components of outcomes | Knowledge | Comprehension | Application | Analysis and above |
|---|---|---|---|---|
| 1 | | | | |
| 2 | | | | |
| 3 | | | | |
| 4 | | | | |

Bloom’s Taxonomy of Educational Objectives (use to develop tests and outcomes)
• Evaluation
• Synthesis
• Analysis
• Application
• Comprehension
• Knowledge

Develop Tests to Reflect Outcomes at Program or Course Levels
• Create summative test
• Develop sets of items to embed in courses indicating progress toward outcomes (formative)
• Develop course level tests that reflect program level objectives/outcomes

Institutional Outcome/Objective
• Students will demonstrate the critical thinking skills of analysis and evaluation in the general education curriculum and in the major.

Course Outcome
• Students will analyze and interpret multiple choice tests and their results.

Constructing the Test Blue Print
1. List important course content or topics and link to outcomes.
2. Identify cognitive levels expected in outcomes.
3. Determine number of items for entire test and each cell based on: emphasis, time, and importance.

Base Test Blueprint on:
• Actual Instruction
• Classroom Activities
• Assignments
• Curriculum at the Program Level

Questions
• Validity
• How to use Test Blueprint

Reliability: Repeatable or Consistent Results
• If a test is administered one day and an equivalent test is administered another day, the scores should remain similar from one day to another.
• This is typically based upon the correlation of the two sets of scores, yet this approach is unrealistic in the classroom setting.

Internal Consistency Approach: KR-20

Guidelines to Increase Reliability*
• Develop longer tests with well-constructed items.
• Make sure items are positive discriminators; students who perform well on tests generally answer individual questions correctly.
• Develop items of moderate difficulty; extremely easy or difficult questions do not add to reliability estimations.
*Guide for Writing and Improving Achievement Tests

Multiple Choice Items
• Refer to handout Guidelines for Developing Effective Items

Resources
• In Guide for Improving Classroom Achievement Tests, T.L. Flateby
• Assessment of Student Achievement, 2008, N.E. Gronlund; Allyn and Bacon
• Developing and Validating Multiple-Choice Test Items, 2004, Thomas Haladyna; Lawrence Erlbaum Associates
• Additional articles and booklets are available at http://fod.msu.edu/OIR/Assessment/multiple-choice.asp

Questions
• How to ensure Reliability and Validity

Evaluate Test Results
1. KR-20: An outcome of .70 or higher.
2. Item discriminators should be positive.
3. Difficulty Index; P-Value.
4. Analysis of Distracters.

Item Analysis
• Refer to 8 Item handout

Use Results for Assessment Purposes
• Analyze performance on each item according to the outcome evaluated.
• Determine reasons for poor testing performance.
  – Faulty Item
  – Lack of Student Understanding
• Make adjustments to remedy these problems.

Questions
• Contact Terri Flateby at tlflateby@gmail.com, 813.545.5027, or http://teresaflateby.com
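
Worked sketch: Evaluate Test Results

The first three checks under "Evaluate Test Results" (KR-20, item discrimination, difficulty index) can be illustrated with a small Python sketch. Nothing here comes from the handouts: the function names and the 0/1 response matrix are hypothetical, KR-20 is computed with the population variance of total scores, and discrimination uses a simple upper-group minus lower-group index with an assumed 27% cut. Other conventions (sample variance, point-biserial correlation) are also in common use and give somewhat different numbers.

```python
from statistics import pvariance


def item_difficulty(responses):
    """Difficulty index (p-value): proportion of students answering each item correctly."""
    n_students = len(responses)
    n_items = len(responses[0])
    return [sum(row[i] for row in responses) / n_students for i in range(n_items)]


def kr20(responses):
    """KR-20 = k/(k-1) * (1 - sum(p*q) / variance of total scores).

    Assumption: population variance is used. Raises ZeroDivisionError
    if every student has the same total score.
    """
    k = len(responses[0])
    p = item_difficulty(responses)
    totals = [sum(row) for row in responses]
    sum_pq = sum(pi * (1 - pi) for pi in p)
    return (k / (k - 1)) * (1 - sum_pq / pvariance(totals))


def discrimination_index(responses, fraction=0.27):
    """Upper-group minus lower-group proportion correct, per item.

    Assumption: groups are the top and bottom `fraction` of students
    ranked by total score (at least one student per group).
    """
    ranked = sorted(responses, key=sum, reverse=True)
    g = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:g], ranked[-g:]
    n_items = len(responses[0])
    return [
        (sum(r[i] for r in upper) - sum(r[i] for r in lower)) / g
        for i in range(n_items)
    ]


# Hypothetical data: 6 students (rows) x 4 items (columns), 1 = correct.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

print("KR-20:", round(kr20(responses), 2))
print("Difficulty (p-values):", item_difficulty(responses))
print("Discrimination:", discrimination_index(responses))
```

For this toy data, KR-20 comes out at about .71, just above the .70 guideline, and every item discriminates positively. With real classes, an item with a negative index or a p-value near 0 or 1 would be the first candidate for the "faulty item versus lack of student understanding" review.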