here - Wright State University

advertisement

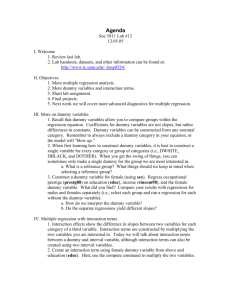

QUESTIONS of the MOMENT...

"How does one estimate categorical variables in theoretical model tests using structural equation analysis?"

(The APA citation for this paper is Ping, R.A. (2010). "How does one estimate categorical variables in theoretical model tests using structural equation analysis?" [on-line paper]. http://www.wright.edu/~robert.ping/categorical3.doc)

(An earlier version of this paper is Ping, R.A. (2008). "How does one estimate categorical variables in theoretical model tests using structural equation analysis?" [on-line paper]. http://www.wright.edu/~robert.ping/categorical1.doc)

In structural equation analysis software (e.g., LISREL, EQS, Amos, etc.), the term "categorical variable" usually means an ordinal variable (e.g., an attitude measured by Likert scales), rather than a nominal or "truly categorical" variable (e.g., Marital Status, with categories such as Single, Married, Divorced, etc.), and there is no provision for truly categorical variables.

In regression, a (truly) categorical variable is estimated using "dummy" variables. (For example, while the cases in Marital Status might have the values 1 for Single, 2 for Divorced, 3 for Married, etc., a new variable, Dummy_Single, is created with cases that have the value 1 if Marital Status = Single, and 0 otherwise. Dummy_Married is similar, with cases equal to 1 if Marital Status = Married and 0 otherwise, etc.) The same approach might be used in structural equation modeling (SEM). However, nominal variables present difficulties in SEM that are not encountered in dummy variable regression. For example, dummy variables violate several important assumptions in SEM: dummy variables are not continuous, and they do not have normal distributions.
While ordinal variables from (multi-point) rating scales (e.g., Likert scales), which are neither continuous nor normally distributed, are routinely analyzed in theoretical model (hypothesis) tests using SEM, dummy variables with only two values are very non-normal, and the covariances that are customarily analyzed in SEM are formally incorrect for them. Point-biserial, tetrachoric, polychoric, etc. correlations are more appropriate.

In addition, Maximum Likelihood (ML) structural coefficient estimates are preferred in theoretical model tests, and this estimator also assumes multivariate normality. While ML structural coefficients are believed to be robust to departures from normality (see the citations in Chapter VI. RECENT APPROACHES TO ESTIMATING INTERACTIONS AND QUADRATICS), it is believed that standard errors are not robust to departures from normality (Bollen 1995). (There is some evidence to the contrary (e.g., Ping 1995, 1996), and EQS, for example, provides Maximum Likelihood Robust estimates of structural coefficients and standard errors that appear to be robust to departures from normality (see Chou, Bentler and Satorra 1991).)

In "interesting" models (ones with more than a few latent variables) there could be several (truly) categorical variables, each with several categories. Because most SEM software requires at least 1 case per estimated parameter (10 are preferred) (some authors prefer a stricter criterion from regression: multiple cases per covariance matrix entry), the number of dummy variables can empirically overwhelm the model unless the sample is large and the number of dummy variables is comparatively small. For example, in a model that adds 2 categorical variables, each with 2 categories, to 2 latent variables, 11 additional parameters are required for the additional correlations and/or structural coefficients due to the dummy variables (the loadings and measurement error variances of dummies are assumed to be 1 and 0 respectively).
This would require up to 110 additional cases (11 parameters at the preferred 10 cases each) to safely estimate these parameters (5 times more additional cases would be required for the stricter regression criterion involving the asymptotic "correctness" of the covariance matrix). Thus, in addition to samples larger than the customary number of cases used in survey-data model tests (e.g., 200-300 cases), "managing" the total number of categories is required (more on this later).

With dummy variables, the term "estimates" (e.g., of association and significance) truly may apply. While there is no hard and fast rule, significance thresholds in theoretical model testing with dummy variables and SEM probably should be conservative (i.e., stricter than the customary |t| > 2).

The result of a categorical model estimation is typically a subset of significant dummy variables (e.g., Single and Divorced). However, an estimate of the significance of the categorical variable from which the dummy variables were formed (e.g., Marital Status) is not available in the SEM output. Thus, the aggregate effect (e.g., the overall significance) of the dummy variables comprising Marital Status, for example, should be determined to gage hypothesis disconfirmation (an EXCEL spreadsheet to expedite this task is available).

Finally, estimating all the dummy variables jointly does not work in popular SEM software such as LISREL, EQS, AMOS, etc., and an SEM "workaround" is taking longer than anticipated. However, there is a "mixed SEM" estimation procedure for latent variables (LV's) and truly categorical variables that could be used until an "all-SEM" approach is found. It produces "proper" error-disattenuated structural coefficients just like (all) SEM does. They are Least Squares estimates rather than Maximum Likelihood estimates, but the approach might be preferable to omitting an important categorical variable(s), or analyzing subsets. (For more on the alternatives, see below at the "**" in the left margin.)
Using this mixed SEM approach, the steps for estimating the (total) effect of Marital Status, for example, on Y (i.e., "H1: Marital Status affects/changes/etc. Y") in a survey-data model¹ would be to create a survey with an exhaustive list of categories for Marital Status (the number of categories might be reduced later). A large number of cases then should be obtained if possible.

When the responses are available, the exhaustive list of categories for Marital Status should be reduced, if possible (i.e., categories with few cases should be dropped or combined with other categories).

Next, dummy variables for Marital Status should be created, with a dummy variable for each category such as Single, Married, Divorced, etc. Specifically, a dummy variable such as Dummy_Single should be created, with cases that have the value 1 if Marital Status = Single, and 0 otherwise. Dummy_Married would be similar, with cases equal to 1 if Marital Status = Married, and 0 otherwise. Dummy_Divorced, etc. also would be similar. There should be 1 dummy variable for each category in the (truly) categorical variable Marital Status, and the sum of the cases that are coded 1 in each categorical variable should equal the number of cases. (For example, the sum of the cases that are coded 1 across the dummy variables for Marital Status should equal the number of cases, the sum of the cases that are coded 1 across the dummy variables for any next categorical variable should equal the number of cases, etc.) In total, there would be 1 different dummy variable for each category in each of the (truly categorical) variables.

¹ It turns out that the suggested approach also might be appropriate for an experiment, but that is another story.

Then, a least squares regression version of the structural equation containing (all) the dummy variables should be estimated to roughly gage the strength of any ordinal effects.
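The category reduction and dummy coding steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the responses are made-up data, the helper names `collapse_sparse` and `make_dummies` are hypothetical, and the cutoff of 2 cases for a "sparse" category is arbitrary (the text gives no specific cutoff).

```python
from collections import Counter


def collapse_sparse(categories, min_cases, other_label):
    """Relabel every category that has fewer than min_cases cases as other_label."""
    counts = Counter(categories)
    return [c if counts[c] >= min_cases else other_label for c in categories]


def make_dummies(categories):
    """Return one 0/1 dummy variable per category, keyed as 'Dummy_<category>'."""
    levels = sorted(set(categories))
    return {
        f"Dummy_{level}": [1 if c == level else 0 for c in categories]
        for level in levels
    }


# Hypothetical Marital Status responses (illustrative data only).
marital_status = [
    "Single", "Married", "Divorced", "Married", "Single",
    "Widowed", "Married", "Single", "Separated", "Married",
]

# Assumed cutoff: categories with fewer than 2 cases are combined.
reduced = collapse_sparse(marital_status, min_cases=2, other_label="Not_Married")
dummies = make_dummies(reduced)

# Check from the text: the cases coded 1 across the dummies of one
# categorical variable should sum to the number of cases.
total_ones = sum(sum(column) for column in dummies.values())
assert total_ones == len(marital_status)

for name, column in dummies.items():
    print(name, column)
```

With these made-up responses, the Divorced, Separated and Widowed categories each have a single case, so they are combined into one Not_Married category before the dummies are created.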
For example, in the regression version of the structural equation

$$Y = b_1 X + b_2 Z + b_3\,\text{Dummy\_Single} + b_4\,\text{Dummy\_Married} + b_5\,\text{Dummy\_Divorced} + b_6\,\text{Dummy\_Separated} + b_7\,\text{Dummy\_Widowed}, \qquad (1)$$

the latent variables Y, X and Z would be "specified" using summed, preferably averaged, indicators, and the regression should use the "no origin" option (i.e., regression through the origin). If the regression coefficient for each of the dummy variables is nonsignificant, it is unlikely that Marital Status is significant.

However, assuming at least 1 dummy variable was significant, the reliability, validity and internal consistency of the LV's in the hypothesized model should be gaged. (The dummy variables will have to be assumed to be reliable and valid, and they are trivially internally consistent.) In particular, the single-construct measurement model (MM) for each LV should fit the data. (This step is required later for unbiased estimation.)

Next, a full MM that omits the dummy variables should be estimated to gage external consistency. Assuming this "no dummies" MM fits the data, full measurement models that omit the dummy variables one at a time should be estimated to further gage external consistency. For example, Dummy_Single should be omitted from the (full) MM for Equation 1. Then, a second full MM containing Dummy_Single but omitting Dummy_Married should be estimated. This should be repeated with each dummy variable. (Experience suggests that in real-world data the parameter estimates in these MM's will vary trivially, which suggests that the dummy variables do not materially affect external consistency.)

Then, assuming the LV's are reliable, valid and consistent, the LV's should be averaged (i.e., the indicators of each LV should be averaged) and their error-attenuated covariance matrix (CM) should be obtained using SPSS, SAS, etc. Next, this matrix is adjusted for measurement errors using a procedure suggested by Ping (1996b) (and the "Latent Variable Regression" EXCEL spreadsheet that is available on this web site).
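The preliminary least squares regression for Equation 1 (through the origin, with all the dummy variables) can be illustrated with a minimal sketch. It is not the procedure of any particular statistics package: the function names are hypothetical, the data are made up, only one LV (X) and two dummies are used to keep it short, and Y is constructed without noise so the known coefficients are recovered. One reason a through-the-origin fit suits this setup is that a full set of dummies for a categorical variable sums to 1 for every case, so an intercept would be perfectly collinear with them. The sketch estimates coefficients only; standard errors and significance are not computed.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def ols_through_origin(rows, y):
    """Least squares coefficients with no intercept: solve (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# Made-up cases: (averaged X, Dummy_Single, Dummy_Married).
rows = [
    (1.0, 1, 0), (2.0, 1, 0), (3.0, 0, 1),
    (4.0, 0, 1), (2.0, 0, 1), (5.0, 1, 0),
]
# Y built as 0.5*X + 1.0*Dummy_Single + 2.0*Dummy_Married (no noise).
y = [0.5 * x + 1.0 * s + 2.0 * m for x, s, m in rows]

coefs = ols_through_origin(rows, y)
print([round(b, 6) for b in coefs])
```

In practice this step would be run in SPSS, SAS, etc. with the regression-through-the-origin option, as the text describes; the sketch only shows what that option computes.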
For consistent LV's, the resulting Error-Adjusted (Err-Adj) CM then is used to estimate Equation 1 without omitting dummy variables. Specifically, the error-attenuated/error-unadjusted (err-unadj) CM for all the variables in Equation 1 is adjusted for measurement error using the measurement model loadings and measurement error variances from the "no dummies" MM for Equation 1. The resulting Err-Adj CM then is used as input to least squares regression. This procedure was judged to be unbiased and consistent in the Ping (1996b) article, and while it is not as elegant as SEM, it does produce "proper" unbiased and consistent structural coefficients in a model containing LV's and (truly) categorical variables just like SEM should (but so far doesn't).

Specifically, the parameter estimates from the "no dummies" MM are input to the "Latent Variable Regression" EXCEL spreadsheet that produces the Err-Adj CM using calculations such as

$$\mathrm{Var}(\xi_X) = \frac{\mathrm{Var}(X) - \theta_X}{\Lambda_X^2}$$

and

$$\mathrm{Cov}(\xi_X, \xi_Z) = \frac{\mathrm{Cov}(X, Z)}{\Lambda_X \Lambda_Z},$$

where $\mathrm{Var}(\xi_X)$ is the desired error-adjusted variance of X (that is input to regression), $\mathrm{Var}(X)$ is the error-attenuated variance of X (from SAS, SPSS, etc.), $\Lambda_X = \mathrm{avg}(\lambda_{X1} + \lambda_{X2} + \dots + \lambda_{Xn})$, avg = average, $\theta_X = \mathrm{avg}(\mathrm{Var}(\varepsilon_{X1}) + \mathrm{Var}(\varepsilon_{X2}) + \dots + \mathrm{Var}(\varepsilon_{Xn}))$ (the $\lambda$'s and $\mathrm{Var}(\varepsilon_X)$'s are the measurement model loadings and measurement error variances from the "no dummies" MM, 1 and 0 respectively for the dummy variables, and n = the number of indicators of the latent variable X), $\mathrm{Cov}(\xi_X, \xi_Z)$ is the desired error-adjusted covariance of X and Z, and $\mathrm{Cov}(X, Z)$ is the error-attenuated covariance of X and Z from SPSS, SAS, etc.²

The resulting Err-Adj CM (on the EXCEL spreadsheet) is then input to regression, with the "regression-through-the-origin" option (the no-origin option) (see the spreadsheet for details).
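The error-adjustment calculations above can be sketched as follows. This is not the "Latent Variable Regression" spreadsheet itself, only an illustration of the two formulas: the function names are hypothetical, all numbers are made up, and theta_X is passed in as an already-computed value rather than derived from the indicator error variances.

```python
def average_loading(loadings):
    """Lambda_X: the average of the measurement model loadings of X."""
    return sum(loadings) / len(loadings)


def adjusted_variance(var_x, theta_x, lambda_x):
    """Var(xi_X) = (Var(X) - theta_X) / Lambda_X**2."""
    return (var_x - theta_x) / lambda_x ** 2


def adjusted_covariance(cov_xz, lambda_x, lambda_z):
    """Cov(xi_X, xi_Z) = Cov(X, Z) / (Lambda_X * Lambda_Z)."""
    return cov_xz / (lambda_x * lambda_z)


# Hypothetical "no dummies" measurement model estimates.
lam_x = average_loading([0.8, 0.9, 1.0])   # three indicators of X
lam_z = average_loading([1.0, 0.8])        # two indicators of Z
theta_x = 0.19                             # assumed error variance term for X

# Dummy variables are specified with a loading of 1 and an error variance of 0.
lam_dummy, theta_dummy = 1.0, 0.0

# Hypothetical error-attenuated (err-unadj) entries from SPSS, SAS, etc.
var_x, cov_xz, var_dummy, cov_x_dummy = 1.0, 0.405, 0.21, 0.09

var_xi_x = adjusted_variance(var_x, theta_x, lam_x)
cov_xi_xz = adjusted_covariance(cov_xz, lam_x, lam_z)
var_xi_dummy = adjusted_variance(var_dummy, theta_dummy, lam_dummy)
cov_xi_x_dummy = adjusted_covariance(cov_x_dummy, lam_x, lam_dummy)

print(round(var_xi_x, 4), round(cov_xi_xz, 4),
      round(var_xi_dummy, 4), round(cov_xi_x_dummy, 4))
```

Because a dummy has a loading of 1 and an error variance of 0, its variance is unchanged by the adjustment, and its covariance with an LV is divided only by that LV's average loading.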
Because the coefficient standard errors (SE's) (i.e., the SE's of $b_1, b_2, \dots, b_7$ in Equation 1) produced by the Err-Adj CM are incorrect (they assume variables that are measured without error; e.g., Warren, White and Fuller 1974; see Myers 1986 for additional citations), they must also be corrected for measurement error. A common correction is to adjust the SE from regression using the err-unadj CM by changes in the standard error, $\mathrm{RMSE} = [\sum_i (y_i - \hat{y}_i)^2]^{1/2}$, where $y_i$ and $\hat{y}_i$ are observed and estimated y's respectively, from using the Err-Adj CM (see Hanushek and Jackson 1977). Thus the correct SE's for the Err-Adj CM structural coefficients would involve the SE from regression using the err-unadj CM, and a ratio of the standard error from err-unadj CM regression and the standard error from Err-Adj CM regression, or

$$SE_A = SE_U \cdot \frac{RMSE_U}{RMSE_A},$$

where $SE_A$ is the Err-Adj CM regression standard error, $SE_U$ is the SE produced by err-unadj CM regression, $RMSE_U$ is the standard error produced by err-unadj CM regression, and $RMSE_A$ is the standard error produced by Err-Adj CM regression.³

Then, the structural coefficients of the dummies for each of the categorical variables could be aggregated to adequately test any hypotheses (e.g., "H1: Marital Status affects/changes/etc. Y") (an EXCEL spreadsheet is available to expedite this process). Specifically, if there are multiple categorical variables, the dummy variable effects would be aggregated separately for each categorical variable (e.g., the dummy variable effects of Marital Status would be aggregated, then the dummy variable effects of categorical variable 2 would be aggregated, ignoring the dummy variable effects of Marital Status, etc.).

DISCUSSION

² These equations make the classical factor analysis assumptions that the measurement errors are independent of each other, and the ξ's are independent of the measurement errors. The indicators for X and Z must be consistent in the Anderson and Gerbing (1988) sense.
³ The correction was judged to be unbiased and consistent in Ping (2001).

* Several comments may be of interest. There is a categorical variable that could be estimated directly in SEM: a single dichotomous variable (e.g., gender). In this case the model could be estimated using SEM in the usual way (i.e., ignoring the suggested procedure) with the categorical variable specified using a loading of 1 and a measurement error variance of zero. For emphasis, ML estimates (ML Robust estimates if possible) should be obtained. In addition, because of the difficulties introduced by a dichotomous, non-normal variable, the significance threshold for the dichotomous variable probably should be conservative (e.g., t-values probably should be at least 10% higher than usual).

** Because the above procedure is a departure from the usual LV model estimation, it may be useful to discuss the justification for the departure in detail. First, in theoretical model testing, theory is always more important than methodology. Stated differently, theory ought not be altered to suit methodology. Thus, plausible categorical variables should not be ignored simply because they cannot be tested with LISREL, AMOS, etc. Method is important, however: it must adequately test the proposed theory, and thus the proposed methodological approach should be shown to be adequate.

The options besides the suggested procedure are to analyze subsets of data for each dummy variable,⁴ or to omit the categorical variable(s). Not only does omitting a plausible categorical variable to enable analysis with LISREL, AMOS, etc. in the "usual" manner force theory to accommodate method, omitting a focal categorical variable can reduce the "interestingness" of the model. And omitting a plausible antecedent of Y in, for example, Equation 1 courts biased model estimation results because of the "missing variable problem" (see James 1980).⁵ ⁶

Analyzing subsets of data for each dummy variable, however, is almost always an inadequate test.
First, sequentially testing the dummy variables invites one or more spurious significances (significances that occur by chance because so many tests are performed). In addition, splitting the data into subsets reduces statistical power, increasing the likelihood of falsely disconfirming one or more dummy variables, and thus the hypothesized categorical variable. Further, analyzing subsets provides no means to aggregate the dummy variable estimates, and thus it cannot adequately test a categorical hypothesis such as "Marital Status affects Y."

⁴ For example, the data set would be split into a subset of respondents who were single, and another subset of those who were not. Then, the model would be estimated using these subsets, and structural coefficients would be compared between the subsets for significant differences. This would be repeated for respondents who are married, etc.

⁵ Omitting an important antecedent, in this case a categorical variable, that may be correlated with other model antecedents creates the "missing variables problem." This can bias structural coefficients because model antecedents are now correlated with the structural error term(s), a violation of assumptions (structural errors contain the variance of omitted variables). A reviewer also might question the "importance" of the categorical variable(s) with logic such as: "Because it usually does not explain much variance in Y, any 'missing (categorical) variable problem' is likely comparatively small, so why not just omit the categorical variable and use SEM?" This logic misses the point of theory testing: What are the theoretically justified antecedents of Y, no matter how "important" they are? Besides, the categorical variable could be more "important" in the next study.

⁶ However, while disqualifying all the alternatives may make the proposed procedure attractive, it does not make it adequate.
A formal investigation of any bias and inefficiency (e.g., using artificial data sets) would suggest adequacy. Absent that, comparisons with comparatively "trustworthy" estimates should provide at least hints of adequacy. Thus, to suggest the structural coefficient estimates for the LV's are adequate, the proposed procedure results could be compared to several SEM models with 1 dummy missing. These models should be estimated using ML and LS, and the results should be argued to be "interpretationally equivalent."⁷ Because the dummy results will not be equivalent, the step c) (err-unadj CM) regression results should be compared to the Err-Adj CM results and argued to be "interpretationally equivalent."

In addition to expecting SEM, reviewers expect ML. However, comparing "1 dummy missing" estimates using (ML) SEM and (LS) Err-Adj CM should hint that the (LS) Err-Adj CM estimates for all the dummies might be interpretationally equivalent to SEM estimates were they available.⁸

Parenthetically, it may not be necessary to burden a first draft of a mixed categorical model with these arguments; reviewers may already be aware of the estimation difficulties. I would simply mention that SEM doesn't work with the usual approach for categoricals (dummy variables), and that the procedure used in the paper was judged to have fewer drawbacks than the alternatives: omitting the categorical variable, or estimating multiple subsets of cases.

Because the above procedure has not been formally investigated for any bias or inefficiency, the estimation results of the aggregated effects of each categorical variable probably should be interpreted using a stricter criterion for significance (e.g., t-values probably should be at least 10% higher than usual). (Err-Adj CM regression is known to be unbiased and efficient.)

"Managing" the number of categories is typically required in order to minimize the reduction of the asymptotic correctness of the Err-Adj CM caused by the addition of the dummy variables to the model.
Specifically, categories with a few cases should be dropped or combined with other categories if possible. For example, if there were few Divorced, Separated and Widowed respondents, those dummies could be replaced by Dummy_Not_Married (i.e., Dummy_Not_Married = 1 if the respondent was Divorced, Separated or Widowed, zero otherwise). The drawback to combining categories is that the Divorced, Separated and Widowed categories probably should be combined in subsequent studies.

Similarly, categories might be combined or ignored to suit an hypothesis. For example, if the study were interested only in married respondents, the Divorced, Separated and Widowed dummies could be replaced by Dummy_Not_Married (i.e., Dummy_Not_Married = 1 if the respondent was Divorced, Separated or Widowed, zero otherwise). Afterward, Dummy_Married could be estimated directly using SEM (see "*" in the left margin above).

⁷ Interpretationally equivalent estimates have the same algebraic sign, and are either both significant or both not significant.

⁸ This appears to beg the question, why insist on ML? ML estimates are preferred in survey-data theory tests because they are more likely to be observed in future studies.

Aggregation is recommended even if it does not seem to be required (e.g., the study may be interested only in single respondents). In fact, it functions as an overall "F-like" test of the dummies. Specifically, if the aggregation is not significant, any significant dummies probably should be ignored.

For emphasis, nearly every SEM study in the Social Sciences has categorical variables in the "Demographics" section of the study questionnaire. Anecdotally, applied researchers routinely analyze these data "post-hoc" (after the study is completed) for "finer grained" views of study results by gender, title, marital status, VALS psychographic category, etc. The suggested procedure above would enable such analyses in theory tests using SEM.
Such post-hoc "probing" is within the logic of science as long as the results are clearly presented as having been found after the study was completed (and thus provisional, and in need of disconfirmation in a future study). For example, Marital Status was actually found to be a predictor of exiting propensity in a reanalysis of a study's data. After an argument supporting a Marital Status hypothesis was created, an (as yet unpublished) new study was conducted to disconfirm this, and several other, new hypotheses. Stated differently, the demographic analysis triggered a new line of thought that resulted in new theory (several new hypotheses).

Interested readers are encouraged to read the papers "But what about Categorical (Nominal) Variables in Latent Variable Models?", "Latent Variable Regression: A Technique for Estimating Interaction and Quadratic Coefficients" and "A Suggested Standard Error for Interaction Coefficients in Latent Variable Regression" on this web site for more details about estimating categoricals in SEM, and Err-Adj CM regression and its adjusted standard error, respectively. The additional steps for estimating categoricals with several endogenous variables are available by e-mail.

SUMMARY

In summary, the steps for estimating an hypothesized effect of Marital Status, for example, on Y (i.e., "H1: Marital Status affects/changes/etc. Y") in a survey-data model would be to:

a) Create a survey in the usual way. However, a large number of cases should be obtained, if possible, to permit the addition of the dummy variables. When the responses are available, the number of categories should be reduced, if possible, to improve the asymptotic correctness of the model parameter estimates.

b) Create dummy variables with 1 dummy variable for each category in the (truly) categorical variable. (The sum of the cases that are coded 1 in each categorical variable should equal the number of cases.)
c) Average the LV's and estimate a least squares regression version of the structural equation containing (all) the dummy variables, using regression through the origin, to roughly gage the strength of any ordinal effects. (If no dummy variables are significant, it is unlikely that the hypothesized categorical variable is significant.)

d) If at least 1 dummy variable was significant, gage the reliability, validity and internal consistency of the LV's in the hypothesized model as usual.

e) Estimate a full MM that omits the dummy variables to gage the external consistency of the LV's. If this "no dummies" MM fits the data, estimate full measurement models that omit the dummy variables one at a time to further gage external consistency, and to determine if the dummy variables materially affect external consistency.

f) Average the LV's and obtain their error-unadjusted covariance matrix (err-unadj CM) using SPSS, SAS, etc.

g) Input the parameter estimates from the "no dummies" MM to the "Latent Variable Regression" EXCEL spreadsheet (on this web site) to produce an Error-Adjusted covariance matrix (Err-Adj CM).

h) Input this Err-Adj CM to regression, with the "regression-through-the-origin" option (the no-origin option).

i) Correct the coefficient standard errors (SE's) from the Err-Adj CM regression using the EXCEL spreadsheet.

j) Aggregate the structural coefficients of the dummies for each of the categorical variables to adequately test any hypotheses (e.g., "H1: Marital Status affects/changes/etc. Y").

k) Interpret dummy and aggregation results using a stricter criterion for significance (e.g., |t| > 2.2).

REFERENCES

Bollen, Kenneth A. (1995), "Structural Equation Models that are Nonlinear in Latent Variables: A Least Squares Estimator," Sociological Methodology, 25, 223-251.

Chou, C. P., P. M. Bentler, and A. Satorra (1991), "Scaled Test Statistics and Robust Standard Errors for Non-Normal Data in Covariance Structure Analysis: A Monte Carlo Study," British Journal of Mathematical and Statistical Psychology, 44, 347-357.

Hanushek, Eric A. and John E. Jackson (1977), Statistical Methods for Social Scientists, New York: Academic Press.

James, Lawrence R. (1980), "The Unmeasured Variables Problem in Path Analysis," Journal of Applied Psychology, 65 (4), 415-421.

Myers, Raymond H. (1986), Classical and Modern Regression with Applications, Boston: Duxbury Press, 211.

Ping, R. A. (1995), "A Parsimonious Estimating Technique for Interaction and Quadratic Latent Variables," The Journal of Marketing Research, 32 (August), 336-347.

Ping, R. A. (1996a), "Latent Variable Interaction and Quadratic Effect Estimation: A Two-Step Technique Using Structural Equation Analysis," Psychological Bulletin, 119 (January), 166-175.

Ping, R. A. (1996b), "Latent Variable Regression: A Technique for Estimating Interaction and Quadratic Coefficients," Multivariate Behavioral Research, 31 (1), 95-120.

Warren, Richard D., Joan K. White and Wayne A. Fuller (1974), "An Errors-In-Variables Analysis of Managerial Role Performance," Journal of the American Statistical Association, 69 (328) December, 886-893.