Chapter 3-9. Random Error and Statistics


<< This chapter uses too many examples out of Rothman (2002), done that way to quickly prepare a lecture while teaching out of the Rothman text. It needs to be updated with more of my own examples. >>

In the last chapter, we discussed systematic error, which resulted from bias.

In this chapter, we discuss random error, which is addressed using statistics.

Random error can be reduced to zero by making our sample size infinitely large, and to an acceptable degree by using a practical sample size that is sufficiently large.

We account for random error with statistics. That is, we use statistics to assess variability in the data, in an effort to distinguish chance findings from replicable findings. The use of the word “chance” refers to sampling variability, where variability in the sample results simply from the process of taking a sample.

The claim that random error can be reduced to zero by making our sample infinitely large can be demonstrated by showing the confidence interval around an effect estimate becomes smaller as the sample size increases.

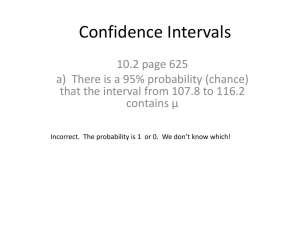

Confidence Intervals

When an estimate is presented as a single value, such as an odds ratio, we refer to it as a point estimate of the population odds ratio. When we compute a confidence interval, we form an interval estimate of the value.

A confidence interval is called an interval estimate, which is an interval (lower bound , upper bound) that covers, or straddles, the true population effect with some stated level of confidence.

The interpretation of a 95% confidence interval for the odds ratio is (van Belle et al, 2004, p.86):

The probability is 0.95, or 95%, that the interval (lower bound , upper bound) straddles the population odds ratio.

_____________________

Source: Stoddard GJ. Biostatistics and Epidemiology Using Stata: A Course Manual [unpublished manuscript] University of Utah School of Medicine, 2010.

Chapter 3-9 (revised 16 May 2010) p. 1

Example:

Sulkowski (2000, Table 3) reports the following incidence proportion and confidence interval, expressed as percents:

incidence proportion (%): 26/211 = 0.123, or 12.3%

95% CI: 8.2 – 17.5

We can verify this calculation in Stata using the confidence interval calculator for a binomial variable. (When working with a dichotomous variable, we are usually interested in the number of events, or successes, for a given sample size, which is a proportion. In probability theory, a variable that represents the number of events for a given sample size is called a binomial random variable. Therefore, Stata refers to the incidence proportion CI as a “binomial” CI.)

Statistics

Summaries, tables & tests

Summary and descriptive statistics

Binomial CI calculator

Sample size: 211

Successes: 26

Confidence level: 95

Binomial confidence interval: Exact

OK

cii 211 26

-- Binomial Exact --

Variable | Obs Mean Std. Err. [95% Conf. Interval]

-------------+---------------------------------------------------------------

| 211 .1232227 .0226281 .0820953 .1753174
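As a cross-check outside Stata, the following is a minimal Python sketch of an exact binomial interval, assuming that "exact" here means the Clopper-Pearson construction. The function names `binom_cdf` and `exact_ci` are hypothetical helpers written for this illustration, and the limits are found by simple bisection rather than by whatever algorithm Stata uses internally.

```python
import math

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p), by direct summation."""
    return sum(math.comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

def exact_ci(k, n, level=0.95, tol=1e-10):
    """Clopper-Pearson limits (assumed construction), found by bisection."""
    alpha = 1 - level

    def solve(f, target):
        # f must be increasing in p on (0, 1); find p with f(p) = target
        lo, hi = 0.0, 1.0
        while hi - lo > tol:
            mid = (lo + hi) / 2
            if f(mid) < target:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    # Lower limit: the p at which P(X >= k) equals alpha/2
    lower = 0.0 if k == 0 else solve(lambda p: 1 - binom_cdf(k - 1, n, p), alpha / 2)
    # Upper limit: the p at which P(X <= k) equals alpha/2 (negated so it increases in p)
    upper = 1.0 if k == n else solve(lambda p: -binom_cdf(k, n, p), -alpha / 2)
    return lower, upper

lower, upper = exact_ci(26, 211)
print(f"proportion = {26 / 211:.7f}, 95% CI ({lower:.7f} , {upper:.7f})")
```

Under that assumption, the printed limits should agree with the cii output above to the digits shown.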

Repeating the confidence interval definition,

The probability is 0.95, or 95%, that the interval (lower bound , upper bound) straddles the population proportion.

The statistical formula for this confidence interval was derived in such a way to be consistent with the long-run average. We can verify this using a Monte Carlo simulation in Stata by taking 10,000 samples of size N=211 from a dichotomous population with mean, or proportion, equal to 0.1232227, and seeing what the 2.5th and 97.5th percentiles are (the inner 95% of the sample proportions).


* set mem is not needed in recent Stata versions
clear
set mem 10m
set obs 10000
set seed 999
gen v1 = .
gen prop = .
* each pass draws one sample of size 211 and stores its proportion in row `x'
forvalues x = 1/10000 {
    quietly replace v1 = uniform() in 1/211
    quietly recode v1 0/.1232227=1 .1232227/1=0 in 1/211
    quietly sum v1 in 1/211 , meanonly
    quietly replace prop = r(mean) in `x'
}
sum prop
scalar rmean = r(mean)
* 2.5th and 97.5th percentiles of the 10,000 sample proportions
centile prop , centile(2.5 97.5)
display "Proportion = " rmean " , 95% CI (" r(c_1) " , " r(c_2) ")"

The result from this Monte Carlo simulation is,

Proportion = .1236654 , 95% CI (.08056872 , .17061612)

which agrees to roughly two decimal places with the formula approach,

cii 211 26

-- Binomial Exact --

Variable | Obs Mean Std. Err. [95% Conf. Interval]

-------------+---------------------------------------------------------------

| 211 .1232227 .0226281 .0820953 .1753174
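The same Monte Carlo idea can be sketched in Python for readers who want to rerun it outside Stata. This is an illustration only: the seed is arbitrary, the random number generator differs from Stata's, and the percentiles are taken as simple order statistics, so the numbers will not match the Stata run digit for digit.

```python
import random

random.seed(999)  # arbitrary seed, used only so the run is reproducible
n, p, reps = 211, 0.1232227, 10000

props = []
for _ in range(reps):
    # one sample of size n from a dichotomous population with proportion p
    events = sum(1 for i in range(n) if random.random() < p)
    props.append(events / n)

props.sort()
mean_prop = sum(props) / reps
lo = props[int(0.025 * reps)]        # approximate 2.5th percentile
hi = props[int(0.975 * reps) - 1]    # approximate 97.5th percentile
print(f"Proportion = {mean_prop:.4f} , inner 95% ({lo:.4f} , {hi:.4f})")
```

Because each sample proportion is a multiple of 1/211, the simulated percentiles can only take discrete values, which is one reason they do not reproduce the exact limits more closely.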

Now that we have verified the formula and simulation approaches agree, we can do a shortcut Monte Carlo simulation by applying the formula to increasingly larger sample sizes to verify the assertion:

Random error can be reduced to zero by making our sample size infinitely large, and to an acceptable degree by using a practical sample size that is sufficiently large.


We will take increasing sample sizes in increments of 100, from a dichotomous population with mean 0.2, compute the proportion and 95% CI for each sample, and then plot the results.

clear
set mem 10m
set obs 10000
set seed 999
gen v1 = .
gen prop = .
gen lowerci = .
gen upperci = .
gen n = .
local i=0
forvalues x = 100(100)10000 {
    local i=`i'+1
    quietly replace v1 = uniform() in 1/`x'
    quietly recode v1 0/.2=1 .2/1=0 in 1/`x'
    * in Stata 14 and later this command is written: ci means v1 in 1/`x'
    quietly ci v1 in 1/`x'
    quietly replace prop = r(mean) in `i'
    quietly replace lowerci = r(lb) in `i'
    quietly replace upperci = r(ub) in `i'
    quietly replace n = r(N) in `i'
}
drop if n==.
*
#delimit ;
twoway (line prop n)(line lowerci n)(line upperci n)
    , yline(.15(.01).25) xlabel(0(1000)10000)
    ylabel(.05(.05).3) xtitle(Sample Size)
    ytitle(Proportion)
    ;
#delimit cr

[Figure: line plot of prop, lowerci, and upperci (y-axis: Proportion) against Sample Size (x-axis: 0 to 10,000)]

This plot illustrates the increasing precision (tighter CIs) of the effect estimate (proportion) as the sample size increases, demonstrating that the random error is being reduced.
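The narrowing can also be seen directly from the formula. The sketch below uses the large-sample normal approximation, half-width = z × sqrt(p(1 − p)/n), which is a simplification and not necessarily the exact calculation the ci command performs; the function name `half_width` is a hypothetical helper.

```python
import math

def half_width(n, p=0.2, z=1.96):
    """Approximate half-width of a 95% CI for a proportion (normal approximation)."""
    return z * math.sqrt(p * (1 - p) / n)

for n in (100, 1000, 10000):
    print(f"n = {n:>6}: 95% CI is roughly 0.2 +/- {half_width(n):.4f}")
```

Since the half-width is proportional to 1/sqrt(n), a 100-fold increase in sample size narrows the interval by a factor of 10. The interval shrinks toward zero width only as n grows without bound.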


Testing Significance With a Confidence Interval

One of the reasons confidence intervals are preferred by many over the p value, and why many journals discourage reporting a p value when the confidence interval is already reported, is that the confidence interval can be used for hypothesis testing.

We will see why, using the risk difference for our example. To do this we need the fact that the chi-square test for a 2 × 2 table is identical to the z test for comparing two proportions (see box).

Equivalence of Chi-Square Test for 2 × 2 Table and the Two-Proportions Z Test (Altman, 1991, pp 257-258).

Given a 2 × 2 table,

          Group 1          Group 2
             a                b
             c                d
        a+c = $n_1$      b+d = $n_2$      N = $n_1 + n_2$

We have $p_1 = a/(a+c)$, $p_2 = b/(b+d)$, and the pooled proportion is $p = (a+b)/N$.

Then, the z test for comparing two proportions is given by

$$z = \frac{p_1 - p_2}{\sqrt{p(1-p)\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}}$$

Substituting, this is equivalent to

$$z = \frac{\dfrac{a}{a+c} - \dfrac{b}{b+d}}{\sqrt{\dfrac{a+b}{N}\cdot\dfrac{c+d}{N}\left(\dfrac{1}{a+c} + \dfrac{1}{b+d}\right)}}$$

which, after some manipulation, gives

$$z^2 = \frac{N(ad - bc)^2}{(a+b)(c+d)(a+c)(b+d)} = \chi^2$$

Thus, the chi-square with 1 degree of freedom (the 2 × 2 table case) is identically the square of the z test (the square of the standard normal distribution).
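The identity in the box can be checked numerically. The following Python sketch computes the two-proportions z statistic and the chi-square statistic separately for one table and compares them. The counts are those of the csi example used later in this chapter (12, 5, 47, 45); the function names are hypothetical helpers.

```python
import math

def z_two_proportions(a, b, c, d):
    """z test for two proportions, columns (a, c) and (b, d), pooled variance."""
    n1, n2 = a + c, b + d
    p1, p2 = a / n1, b / n2
    p = (a + b) / (n1 + n2)
    return (p1 - p2) / math.sqrt(p * (1 - p) * (1 / n1 + 1 / n2))

def chi2_2x2(a, b, c, d):
    """Chi-square statistic for a 2 x 2 table, without continuity correction."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))

a, b, c, d = 12, 5, 47, 45
z = z_two_proportions(a, b, c, d)
chi2 = chi2_2x2(a, b, c, d)
print(f"z = {z:.4f}, z^2 = {z * z:.4f}, chi-square = {chi2:.4f}")
```

The two printed squares should coincide up to floating-point rounding, and both round to the chi2(1) = 2.20 reported by csi for this table.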


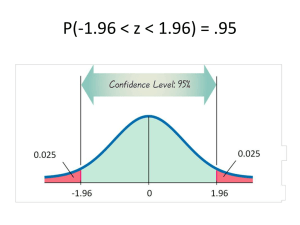

The standard normal distribution has 95% of its values between $\pm 1.96$, and thus so does the square root of the chi-square test statistic for a 2 × 2 table.

Any estimate $\hat{\theta}$ that is asymptotically normal (its distribution looks like the standard normal distribution when the sample size is sufficiently large) has a confidence interval of the form:

$$\hat{\theta} \pm z_{1-\alpha/2}\, SE(\hat{\theta})$$

The risk difference, which is the numerator of the z test, is asymptotically normal.

Therefore, its confidence interval can be calculated as

$$RD \pm z_{1-\alpha/2}\, SE_{RD}$$

We can get the SE directly from the chi-square test statistic using the identity

$$\chi = z = \frac{p_1 - p_2}{\sqrt{p(1-p)\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}} = \frac{RD}{SE_{RD}}$$

where $\chi$ denotes the square root of the chi-square test statistic. This is equivalent to

$$SE_{RD}\,\chi = RD \quad \text{and} \quad SE_{RD} = RD/\chi$$

The CI for RD is then given by

$$RD \pm z_{1-\alpha/2}\, RD/\chi$$

This is the formula used by Stata when you specify the test based (tb) confidence interval option on the cs command.

For the relative (or multiplicative) effect estimates, the CI is not symmetric around the point estimate, as will be seen in the next chapter.


Illustrating with the St. John’s Wort data (Rothman, 2002, p.124),

Statistics

Epidemiology and related

Tables for epidemiologists

Cohort study risk ratio, etc. calculator

12 5

47 45

Test based confidence intervals

OK

csi 12 5 47 45, tb

| Exposed Unexposed | Total

-----------------+------------------------+------------

Cases | 12 5 | 17

Noncases | 47 45 | 92

-----------------+------------------------+------------

Total | 59 50 | 109

| |

Risk | .2033898 .1 | .1559633

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Risk difference | .1033898 | -.0339333 .2407129 (tb)

Risk ratio | 2.033898 | .7921441 5.222209 (tb)

Attr. frac. ex. | .5083333 | -.2623966 .8085102 (tb)

Attr. frac. pop | .3588235 |

+-------------------------------------------------

chi2(1) = 2.20 Pr>chi2 = 0.1382

The value of $z_{1-\alpha/2}$ for a 95% CI is 1.96. Verifying the lower and upper bound calculations using Stata,

$$RD \pm z_{1-\alpha/2}\, RD/\chi$$

display .1033898-1.96*.1033898/sqrt(2.20)
display .1033898+1.96*.1033898/sqrt(2.20)

-.03323276
.24001236

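These values agree with the csi output (-.0339333 , .2407129) to about two decimal places. We cannot expect better than two decimal places of accuracy, since we used only two decimal places for our value of the chi-square test statistic. In addition, the limits printed by csi are reproduced to the digits shown only when the chi-square statistic is scaled by (N − 1)/N.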

Test based confidence intervals have the nice property that they always agree with the p value.

Thus, when the confidence interval does not cover the null value, RD = 0, then p < 0.05. When it does cover RD = 0, we will have p > 0.05.

Other forms of the confidence interval, such as the exact CI, are not guaranteed to have this property.


To illustrate, the following 2 x 2 table just barely achieves significance with the chi-square statistic. Asking for a test-based CI,

csi 4 4 5175 1417 , tb

| Exposed Unexposed | Total

-----------------+------------------------+------------

Cases | 4 4 | 8

Noncases | 5175 1417 | 6592

-----------------+------------------------+------------

Total | 5179 1421 | 6600

| |

Risk | .0007723 .0028149 | .0012121

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Risk difference | -.0020426 | -.004085 -1.61e-07 (tb)

Risk ratio | .2743773 | .0752906 .9998983 (tb)

Prev. frac. ex. | .7256227 | .0001017 .9247094 (tb)

Prev. frac. pop | .5693939 |

+-------------------------------------------------

chi2(1) = 3.84 Pr>chi2 = 0.0500

Notice the upper confidence limit is -1.61e-07, or -0.000000161, which just touches 0, matching the p value of 0.0500 to its four decimal places of accuracy.

The exact CI does not match Fisher’s exact test as well, but is very close. Using a 2 x 2 table that gives a Fisher’s exact p value of 0.0500,

csi 4 4 5175 1235 , exact

| Exposed Unexposed | Total

-----------------+------------------------+------------

Cases | 4 4 | 8

Noncases | 5175 1235 | 6410

-----------------+------------------------+------------

Total | 5179 1239 | 6418

| |

Risk | .0007723 .0032284 | .0012465

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Risk difference | -.0024561 | -.0057041 .000792

Risk ratio | .2392354 | .0599152 .9552421

Prev. frac. ex. | .7607646 | .0447579 .9400848

Prev. frac. pop | .6138984 |

+-------------------------------------------------

1-sided Fisher's exact P = 0.0500

2-sided Fisher's exact P = 0.0500

The other two available CIs in Stata, Woolf and Cornfield, are approximate methods designed to be more centered around the effect estimate when the null hypothesis is false, rather than to be in agreement with the significance test. In other words, they were designed for estimation, rather than significance testing. (The test based CIs are designed to be centered around the effect estimate when the null hypothesis is true.)


csi 4 4 5175 1417 , woolf

| Exposed Unexposed | Total

-----------------+------------------------+------------

Cases | 4 4 | 8

Noncases | 5175 1417 | 6592

-----------------+------------------------+------------

Total | 5179 1421 | 6600

| |

Risk | .0007723 .0028149 | .0012121

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Risk difference | -.0020426 | -.0048993 .0008141

Risk ratio | .2743773 | .0687065 1.095717

Prev. frac. ex. | .7256227 | -.0957173 .9312935

Prev. frac. pop | .5693939 |

+-------------------------------------------------

chi2(1) = 3.84 Pr>chi2 = 0.0500

The Cornfield method is only for the odds ratio, using the cc command.

For the record, the above example did not meet the minimum expected frequency rule, so the chi-square test was actually not appropriate (discussed in the next chapter).

Exercise (confidence intervals for the odds ratio)

Use the following data from a case-control study.

            Exposed    Unexposed
Cases          12           8
Controls       50          86

In this example, the chi-square test is appropriate since the minimum expected frequency rule is met (no cell of the 2 × 2 table has an expected frequency less than 5).

First, asking for an exact confidence interval,

Statistics

Epidemiology and related

Tables for epidemiologists

Case control odds ratio calculator

12 8

50 86

Exact confidence intervals

OK

cci 12 8 50 86

or

cci 12 8 50 86 , exact


Proportion

| Exposed Unexposed | Total Exposed

-----------------+------------------------+------------------------

Cases | 12 8 | 20 0.6000

Controls | 50 86 | 136 0.3676

-----------------+------------------------+------------------------

Total | 62 94 | 156 0.3974

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Odds ratio | 2.58 | .8937471 7.760924 (exact)

Attr. frac. ex. | .6124031 | -.1188848 .8711494 (exact)

Attr. frac. pop | .3674419 |

+-------------------------------------------------

1-sided Fisher's exact P = 0.0423

2-sided Fisher's exact P = 0.0541

Second, asking for the Cornfield approximation confidence interval,

Statistics

Epidemiology and related

Tables for epidemiologists

Case control odds ratio calculator

12 8

50 86

Cornfield approximation

OK

cci 12 8 50 86, cornfield

Proportion

| Exposed Unexposed | Total Exposed

-----------------+------------------------+------------------------

Cases | 12 8 | 20 0.6000

Controls | 50 86 | 136 0.3676

-----------------+------------------------+------------------------

Total | 62 94 | 156 0.3974

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Odds ratio | 2.58 | 1.010385 6.575707 (Cornfield)

Attr. frac. ex. | .6124031 | .010278 .8479251 (Cornfield)

Attr. frac. pop | .3674419 |

+-------------------------------------------------

chi2(1) = 3.93 Pr>chi2 = 0.0474


Third, asking for the Woolf approximation confidence interval,

Statistics

Epidemiology and related

Tables for epidemiologists

Case control odds ratio calculator

12 8

50 86

Woolf approximation

OK

cci 12 8 50 86, woolf

Proportion

| Exposed Unexposed | Total Exposed

-----------------+------------------------+------------------------

Cases | 12 8 | 20 0.6000

Controls | 50 86 | 136 0.3676

-----------------+------------------------+------------------------

Total | 62 94 | 156 0.3974

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Odds ratio | 2.58 | .9877601 6.738883 (Woolf)

Attr. frac. ex. | .6124031 | -.0123915 .8516075 (Woolf)

Attr. frac. pop | .3674419 |

+-------------------------------------------------

chi2(1) = 3.93 Pr>chi2 = 0.0474

Fourth, asking for the test-based confidence interval,

Statistics

Epidemiology and related

Tables for epidemiologists

Case control odds ratio calculator

12 8

50 86

Test-based confidence intervals

OK

cci 12 8 50 86, tb

Proportion

| Exposed Unexposed | Total Exposed

-----------------+------------------------+------------------------

Cases | 12 8 | 20 0.6000

Controls | 50 86 | 136 0.3676

-----------------+------------------------+------------------------

Total | 62 94 | 156 0.3974

| |

| Point estimate | [95% Conf. Interval]

|------------------------+------------------------

Odds ratio | 2.58 | 1.007834 6.604657 (tb)

Attr. frac. ex. | .6124031 | .0077734 .8485917 (tb)

Attr. frac. pop | .3674419 |

+-------------------------------------------------

chi2(1) = 3.93 Pr>chi2 = 0.0474


Summarizing the four approaches

Four approaches to compute the 95% CI for odds ratio = 2.58

CI method     Fisher’s exact test*   Chi-square test*   95% CI lower bound   95% CI upper bound
Exact         p = 0.054              p = 0.047          0.894                7.761
Cornfield     p = 0.054              p = 0.047          1.010                6.576
Woolf         p = 0.054              p = 0.047          0.988                6.739
Test-based    p = 0.054              p = 0.047          1.008                6.605

* CI method does not affect p value, only confidence interval.

Notice the Cornfield estimate is the tightest CI. Also, notice the exact and Woolf intervals include 1, so they do not agree with the conclusion from the chi-square test p value (p < 0.05).

A good practice is to not use the default Exact method when the chi-square test p value is reported, if the conclusions differ, since the reader would question your reported numbers.

Just to see what confidence interval approach logistic regression uses, we will first input the data into Stata using the input/expand method,

            Exposed    Unexposed
Cases          12           8
Controls       50          86

clear
input case expos count
1 1 12
1 0 8
0 1 50
0 0 86
end
expand count
drop count

Fitting a logistic regression,

Statistics

Binary outcomes

Logistic regression (reporting odds ratios)

Model tab: Dependent variable: case

Independent variables: expos

OK

logistic case expos


Logistic regression Number of obs = 156

LR chi2(1) = 3.84

Prob > chi2 = 0.0501

Log likelihood = -57.822612 Pseudo R2 = 0.0321

------------------------------------------------------------------------------

case | Odds Ratio Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

expos | 2.58 1.263834 1.93 0.053 .9877608 6.738878

------------------------------------------------------------------------------

Copying the table from above,

Four approaches to compute the 95% CI for odds ratio = 2.58

CI method     Fisher’s exact test*   Chi-square test*   95% CI lower bound   95% CI upper bound
Exact         p = 0.054              p = 0.047          0.894                7.761
Cornfield     p = 0.054              p = 0.047          1.010                6.576
Woolf         p = 0.054              p = 0.047          0.988                6.739
Test-based    p = 0.054              p = 0.047          1.008                6.605

* CI method does not affect p value, only confidence interval.

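We see that the logistic regression confidence interval, (0.988 , 6.739), matches the Woolf interval, indicating that logistic regression uses the Woolf method to compute confidence intervals.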
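The agreement can be reproduced by hand. The sketch below computes the Woolf interval in Python from its usual definition, exp(ln(OR) ± z × SE), with SE = sqrt(1/a + 1/b + 1/c + 1/d) on the log odds ratio scale. The function name `woolf_or_ci` is a hypothetical helper, and multiplying the odds ratio by the log-scale standard error to recover the Std. Err. column is an assumption that happens to match the output above.

```python
import math
from statistics import NormalDist

def woolf_or_ci(a, b, c, d, level=0.95):
    """Woolf (logit) CI for the odds ratio of the table [[a, b], [c, d]]."""
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, se_log_or, lower, upper

odds_ratio, se_log_or, lower, upper = woolf_or_ci(12, 8, 50, 86)
print(f"OR = {odds_ratio:.2f}, 95% CI ({lower:.4f} , {upper:.4f})")
print(f"OR x SE(ln OR) = {odds_ratio * se_log_or:.6f}")
```

The limits should match both the Woolf line of the cci output and the logistic regression line, and the last printed value should match the logistic Std. Err. of 1.263834.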

References

Altman DG. (1991). Practical Statistics for Medical Research. New York, Chapman & Hall/CRC.

Rothman KJ. (2002). Epidemiology: An Introduction. Oxford, Oxford University Press.

Sulkowski MS, Thomas DL, Chaisson RE, Moore RD. (2000). Hepatotoxicity associated with antiretroviral therapy in adults infected with human immunodeficiency virus and the role of hepatitis C or B virus infection. JAMA 283(1):74-80.

van Belle G, Fisher LD, Heagerty PJ, Lumley T. (2004). Biostatistics: A Methodology for the Health Sciences, 2nd ed. Hoboken, NJ, John Wiley & Sons.
