Chapter 5-4. Linear Regression Adjusted Means

advertisement

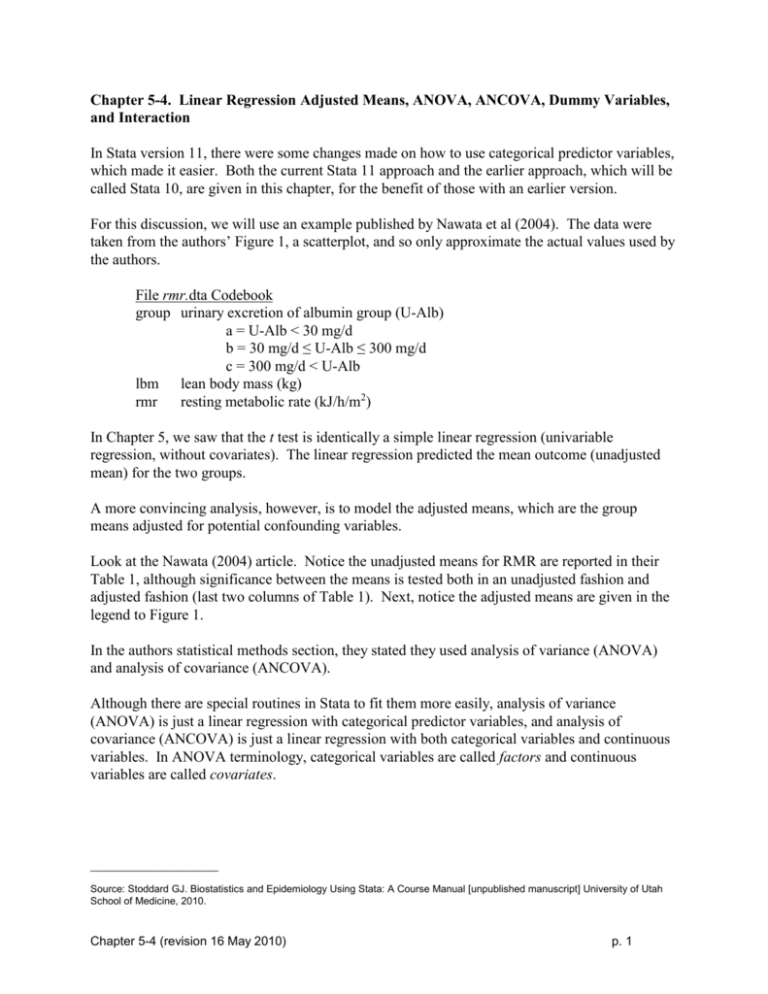

Chapter 5-4. Linear Regression Adjusted Means, ANOVA, ANCOVA, Dummy Variables, and Interaction In Stata version 11, there were some changes made on how to use categorical predictor variables, which made it easier. Both the current Stata 11 approach and the earlier approach, which will be called Stata 10, are given in this chapter, for the benefit of those with an earlier version. For this discussion, we will use an example published by Nawata et al (2004). The data were taken from the authors’ Figure 1, a scatterplot, and so only approximate the actual values used by the authors. File rmr.dta Codebook group urinary excretion of albumin group (U-Alb) a = U-Alb < 30 mg/d b = 30 mg/d ≤ U-Alb ≤ 300 mg/d c = 300 mg/d < U-Alb lbm lean body mass (kg) rmr resting metabolic rate (kJ/h/m2) In Chapter 5, we saw that the t test is identically a simple linear regression (univariable regression, without covariates). The linear regression predicted the mean outcome (unadjusted mean) for the two groups. A more convincing analysis, however, is to model the adjusted means, which are the group means adjusted for potential confounding variables. Look at the Nawata (2004) article. Notice the unadjusted means for RMR are reported in their Table 1, although significance between the means is tested both in an unadjusted fashion and adjusted fashion (last two columns of Table 1). Next, notice the adjusted means are given in the legend to Figure 1. In the authors statistical methods section, they stated they used analysis of variance (ANOVA) and analysis of covariance (ANCOVA). Although there are special routines in Stata to fit them more easily, analysis of variance (ANOVA) is just a linear regression with categorical predictor variables, and analysis of covariance (ANCOVA) is just a linear regression with both categorical variables and continuous variables. In ANOVA terminology, categorical variables are called factors and continuous variables are called covariates. _________________ Source: Stoddard GJ. Biostatistics and Epidemiology Using Stata: A Course Manual [unpublished manuscript] University of Utah School of Medicine, 2010. Chapter 5-4 (revision 16 May 2010) p. 1 Opening the rmr dataset in Stata, File Open Find the directory where you copied the course CD Change to the subdirectory datasets & do-files Single click on rmr.dta Open use "C:\Documents and Settings\u0032770.SRVR\Desktop\ Biostats & Epi With Stata\datasets & do-files\rmr.dta", clear * which must be all on one line, or use: cd "C:\Documents and Settings\u0032770.SRVR\Desktop\" cd "Biostats & Epi With Stata\datasets & do-files" use rmr, clear Click on the data browser icon, and we discover the group variable is alphabetic (called a string variable, for “string of characters”). Stata displays string variables with a red font. Since arithmetic cannot be done on letters, we first need to convert the string variable group into a numeric variable, Data Create or change variables Other variable transformation commands Encode value labels from string variable Main tab: String variable: group Create a numeric variable: groupx OK encode group, generate(groupx) To see what this did, Statistics Summaries, tables & tests Tables Twoway tables with measures of association Row variable: group Group variables: groupx OK tabulate group groupx Chapter 5-4 (revision 16 May 2010) p. 2 | groupx group | a b c | Total -----------+---------------------------------+---------a | 10 0 0 | 10 b | 0 10 0 | 10 c | 0 0 12 | 12 -----------+---------------------------------+---------Total | 10 10 12 | 32 It looks like nothing happened. Actually the variable groupx has values 1, 2, and 3. It is just that value labels were assigned that matched the original variable. Actual value 1 2 3 Label a b c To see this crosstabulation without the value labels, we use, Statistics Summaries, tables & tests Tables Twoway tables with measures of association Row variable: group Group variables: groupx Check Suppress value labels OK tabulate group groupx, nolabel | groupx group | 1 2 3 | Total -----------+---------------------------------+---------a | 10 0 0 | 10 b | 0 10 0 | 10 c | 0 0 12 | 12 -----------+---------------------------------+---------Total | 10 10 12 | 32 This is still a nominal scaled variable, but that is okay for the ANOVA type commands in Stata, which create indicator variables “behind the scenes”. To test the hypothesis that the three unadjusted means are equal for rmr, similar to what Nawata did for his Table 1, we can compute a one-way ANOVA, where one-way implies one predictor variable, which is groupx. Chapter 5-4 (revision 16 May 2010) p. 3 Statistics Linear models and related ANOVA/MANOVA One-way ANOVA Response variable: rmr Factor variable: group <- string variable OK for oneway Output: produce summary table OK oneway rmr group, tabulate | Summary of rmr group | Mean Std. Dev. Freq. ------------+-----------------------------------a | 136.08333 16.940851 12 b | 132.7 19.032428 10 c | 166.5 19.019129 12 ------------+-----------------------------------Total | 145.82353 23.604661 34 Analysis of Variance Source SS df MS F Prob > F -----------------------------------------------------------------------Between groups 7990.92451 2 3995.46225 11.91 0.0001 Within groups 10396.0167 31 335.355376 -----------------------------------------------------------------------Total 18386.9412 33 557.180036 Bartlett's test for equal variances: chi2(2) = 0.1787 Prob>chi2 = 0.915 The p value from the Analysis of Variance table, “Prob > F = 0.0001”, is what Nawata reported in Table 1 on the RMR row, P Value < 0.0001. (Nawata’s data were slightly different.) This was a test of the hypothesis that the three group means are the same: Ho : 1 2 3 To get Nawata’s Adjusted P Value (for LBM), the last column of Table 1, we use an ANCOVA. Stata 10: Linear models and related ANOVA/MANOVA Analysis of variance and covariance Dependent variable: rmr Model: group lbm Model variables: Categorical except the following continuous variables: lbm OK anova rmr group lbm, continuous(lbm) Chapter 5-4 (revision 16 May 2010) // Stata version 10 p. 4 which gives an error message: . anova rmr group no observations r(2000); lbm, continuous(lbm) partial Stata 11: Linear models and related ANOVA/MANOVA Analysis of variance and covariance Dependent variable: rmr Model: group lbm Model variables: Categorical except the following continuous variables: lbm OK anova rmr group lbm // Stata version 11 which gives an error message: . anova rmr group lbm group: may not use factor variable operators on string variables r(109); When you see the error message “no observations”, it usually means that you tried to use a string variable where a numeric variable was required. (We used “group” instead of “groupx”, which the oneway command allows but the anova command does not.) Stata 10: Going back to change this, Linear models and related ANOVA/MANOVA Analysis of variance and covariance Dependent variable: rmr Model: groupx lbm Model variables: Categorical except the following continuous variables: lbm OK anova rmr groupx lbm, continuous(lbm) // Stata version 10 Chapter 5-4 (revision 16 May 2010) p. 5 Number of obs = 34 Root MSE = 17.8777 R-squared = Adj R-squared = 0.4785 0.4264 Source | Partial SS df MS F Prob > F -----------+---------------------------------------------------Model | 8798.55426 3 2932.85142 9.18 0.0002 | groupx | 7734.4293 2 3867.21465 12.10 0.0001 lbm | 807.629752 1 807.629752 2.53 0.1224 | Residual | 9588.38691 30 319.612897 -----------+---------------------------------------------------Total | 18386.9412 33 557.180036 Stata 11: Going back to change this, we use our numeric variable for group, groupx, and we put a “c.” in front of our continuous variable, where the “c.” informs Stata it is a continuous variable. Linear models and related ANOVA/MANOVA Analysis of variance and covariance Dependent variable: rmr Model: groupx c.lbm OK anova rmr groupx c.lbm // Stata version 11 Number of obs = Root MSE 34 R-squared = 17.8777 = 0.4785 Adj R-squared = 0.4264 Source | Partial SS df MS F Prob > F -----------+---------------------------------------------------Model | 8798.55426 3 2932.85142 9.18 0.0002 | groupx | 7734.4293 2 3867.21465 12.10 0.0001 lbm | 807.629752 1 807.629752 2.53 0.1224 | Residual | 9588.38691 30 319.612897 -----------+---------------------------------------------------Total | 18386.9412 33 557.180036 We use the p value for the groupx row, which is “p=0.0001”, in approximate agreement with Nawata’s p=0.0008 (differing only because the datasets differ slightly). In this model, we tested the null hypothesis that the three group means are the same: Ho : 1 2 3 versus H A : 1 2 3 after adjustment for LBM (after controlling for LBM). In other words, we actually tested Ho : adjusted 1 adjusted 2 adjusted 3 versus H A : adjusted 1 adjusted 2 adjusted 3 Chapter 5-4 (revision 16 May 2010) p. 6 Since p=.0001 < 0.05, we reject H0 and accept HA , concluding that at least two of the group’s differ in their mean value. Aside: Review of Hypothesis Testing Recall, that the p value is the probability of obtaining the result that we did in our sample if the null hypothesis H0 is true. If H0 is true, then we observed at least two means that were different from each other just due to sampling variation. It can be attributed to sampling variation, since under H0, no differences in means existed in the population we sampled from, so the sample means differ simply because “they just happen to come out that way” by the process of taking a sample (by chance). H0, then, can be viewed as the mathematical expression consistent with sampling variation, which is what we want to eliminate as an explanation for the observed effect. If this probability is small, p < 0.05, we conclude that sampling variation was too unlikely to be an explanation for the sample result. We then accept the opposite, the alternative hypothesis HA, that there is a difference somewhere among the means in the population we sampled from. We have to always keep in mind the dysjunctive syllogism of research (Ch 5-2, p.1) when accepting HA, however, recognizing that the p value cannot rule out bias or confounding by other confounders as alternative explanations for the observed effect. Chapter 5-4 (revision 16 May 2010) p. 7 The same results could be derived using a linear regression model. Stata 10: We can get the anova procedure to show us the regression model that matches the ANOVA, using the regress option. Linear models and related ANOVA/MANOVA Analysis of variance and covariance Model tab: Dependent variable: rmr Model: groupx lbm Model variables: Categorical except the following continuous variables: lbm Reporting tab: Display anova and regression table OK anova rmr groupx lbm, continuous(lbm) regress anova // Stata 10 Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr Coef. Std. Err. t P>|t| [95% Conf. Interval] -----------------------------------------------------------------------------_cons 142.1363 16.17229 8.79 0.000 109.1081 175.1645 groupx 1 -29.91667 7.305324 -4.10 0.000 -44.83613 -14.9972 2 -33.32727 7.660557 -4.35 0.000 -48.97222 -17.68233 3 (dropped) lbm .5454562 .3431357 1.59 0.122 -.1553203 1.246233 -----------------------------------------------------------------------------Number of obs = 34 Root MSE = 17.8777 R-squared = Adj R-squared = 0.4785 0.4264 Source | Partial SS df MS F Prob > F -----------+---------------------------------------------------Model | 8798.55426 3 2932.85142 9.18 0.0002 | groupx | 7734.4293 2 3867.21465 12.10 0.0001 lbm | 807.629752 1 807.629752 2.53 0.1224 | Residual | 9588.38691 30 319.612897 -----------+---------------------------------------------------Total | 18386.9412 33 557.180036 Notice it reports that group 3 was dropped. What was actually dropped was the indicator variable (or dummy variable) for group 3. Group 3 became the referent and was absorbed into the intercept term (_cons). The anova command creates indicator variables for the categorical variables, uses them in the calculation, but does not add them to the variables in the data browser. Chapter 5-4 (revision 16 May 2010) p. 8 Stata 11: We can follow the anova procedure with a regress command to show us the regression model that matches the ANOVA, anova rmr groupx c.lbm // Stata version 11 regress . anova rmr groupx c.lbm // Stata version 11 Number of obs = 34 Root MSE = 17.8777 R-squared = Adj R-squared = 0.4785 0.4264 Source | Partial SS df MS F Prob > F -----------+---------------------------------------------------Model | 8798.55426 3 2932.85142 9.18 0.0002 | groupx | 7734.4293 2 3867.21465 12.10 0.0001 lbm | 807.629752 1 807.629752 2.53 0.1224 | Residual | 9588.38691 30 319.612897 -----------+---------------------------------------------------Total | 18386.9412 33 557.180036 . regress Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------groupx | 2 | -3.410606 7.654802 -0.45 0.659 -19.0438 12.22258 3 | 29.91667 7.305324 4.10 0.000 14.9972 44.83613 | lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 112.2196 15.87451 7.07 0.000 79.79954 144.6397 ------------------------------------------------------------------------------ Notice it reports that group 1 was dropped. What was actually dropped was the indicator variable (or dummy variable) for group 1. Group 1 became the referent and was absorbed into the intercept term (_cons). The anova command creates indicator variables for the categorical variables, uses them in the calculation, but does not add them to the variables in the data browser. Notice that the anova command provides a single p value to test the equality of the three groups, whereas the regression model uses two p values (one for each of two group indicators). We will see how to combine these into a single p value below. Chapter 5-4 (revision 16 May 2010) p. 9 When modeling a categorical predictor variable, one less indicator than the number of categories is used in the model (see box). ______________________________________________________________________________ Why One Indicator Variable Must Be Left Out Regression models use a data matrix which includes a column of 1’s for the constant term. _cons group1 group2 group3 group 1 1 0 0 1 1 0 1 0 2 1 0 0 1 3 The combination of group1, group2, and group3 indicator variables predict the constant variable exactly. This is, we have perfect colinearity, where Constant = group1 + group2 + group3 With all three indicator variables in the model, it is impossible for the constant term to have an “independent” contribution to the outcome when holding constant all of the indicator terms—the constant term is completely “dependent” upon the indicator terms. Leaving one indicator variable out resolves this problem. That indicator becomes part of the constant (the constant being the combined referent group for all predictor variables in the model). ______________________________________________________________________________ Let’s create the indicator variables for group and verify this is what the anova command did. Data Create or change variables Other variable creation commands Create indicator variables Variable to tabulate: groupx New variables stub: group_ OK tabulate groupx, generate(group_) Notice in the Variables window that three new variables were added: group_1, group_2, and group_3. We can verify these new variables are indicator variables by looking at them in the data browser, or by listing them: Chapter 5-4 (revision 16 May 2010) p. 10 Data Describe data List data Main tab: Variables: groupx group_1-group_3 Options tab: Display numeric codes rather than label values OK list groupx group_1-group_3, nolabel +--------------------------------------+ | groupx group_1 group_2 group_3 | |--------------------------------------| 1. | 1 1 0 0 | 2. | 1 1 0 0 | 3. | 1 1 0 0 | ... 10. | 1 1 0 0 | |--------------------------------------| 11. | 2 0 1 0 | 12. | 2 0 1 0 | 13. | 2 0 1 0 | ... 20. | 2 0 1 0 | |--------------------------------------| 21. | 3 0 0 1 | 22. | 3 0 0 1 | 23. | 3 0 0 1 | ... 32. | 3 0 0 1 | +--------------------------------------+ You can use the space bar (display next page) or enter key (display next line) to scroll through the output. Chapter 5-4 (revision 16 May 2010) p. 11 Stata 10: Now, to duplicate the above model, with the indicator for group 3 left out: Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr Coef. Std. Err. t P>|t| [95% Conf. Interval] -----------------------------------------------------------------------------_cons 142.1363 16.17229 8.79 0.000 109.1081 175.1645 groupx 1 -29.91667 7.305324 -4.10 0.000 -44.83613 -14.9972 2 -33.32727 7.660557 -4.35 0.000 -48.97222 -17.68233 3 (dropped) lbm .5454562 .3431357 1.59 0.122 -.1553203 1.246233 ----------------------------------------------------------------------------- we use, Statistics Linear models and related Linear regression Dependent variable: rmr Independent variables: group_1 group_2 lbm OK regress rmr group_1 group_2 lbm // Stata version 10 Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------group_1 | -29.91667 7.305324 -4.10 0.000 -44.83613 -14.9972 group_2 | -33.32727 7.660557 -4.35 0.000 -48.97222 -17.68233 lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 142.1363 16.17229 8.79 0.000 109.1081 175.1645 ------------------------------------------------------------------------------ Chapter 5-4 (revision 16 May 2010) p. 12 Stata 11: Now, to duplicate the above model, with the indicator for group 3 left out: Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------groupx | 2 | -3.410606 7.654802 -0.45 0.659 -19.0438 12.22258 3 | 29.91667 7.305324 4.10 0.000 14.9972 44.83613 | lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 112.2196 15.87451 7.07 0.000 79.79954 144.6397 ------------------------------------------------------------------------------ we use, Statistics Linear models and related Linear regression Dependent variable: rmr Independent variables: group_2 group_3 lbm OK regress rmr group_2 group_3 lbm // Stata version 11 Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------group_2 | -3.410606 7.654802 -0.45 0.659 -19.0438 12.22258 group_3 | 29.91667 7.305324 4.10 0.000 14.9972 44.83613 lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 112.2196 15.87451 7.07 0.000 79.79954 144.6397 ------------------------------------------------------------------------------ If we used this regression approach, what p value would we use to report in Table 1 (similar to Nawata’s single p value)? Chapter 5-4 (revision 16 May 2010) p. 13 The anova command provided such a single p value (p=0.0001). Number of obs = 34 Root MSE = 17.8777 R-squared = Adj R-squared = 0.4785 0.4264 Source | Partial SS df MS F Prob > F -----------+---------------------------------------------------Model | 8798.55426 3 2932.85142 9.18 0.0002 | groupx | 7734.4293 2 3867.21465 12.10 0.0001 lbm | 807.629752 1 807.629752 2.53 0.1224 | Residual | 9588.38691 30 319.612897 -----------+---------------------------------------------------Total | 18386.9412 33 557.180036 We can get this from a post-estimation approach, after running the linear regression command as well. To test the hypothesis, Ho : adjusted 1 adjusted 2 adjusted 3 we need to constuct a command that looks like: Stata 10: test (_cons+igroup1)=(_cons+igroup2)=_cons Statistics Postestimation Tests Test linear hypotheses Specification 1: tests these coefficients: _cons+group_1 = _cons+group_2 = _cons OK test _cons+group_1 = _cons+group_2 = _cons // Stata Version 10 ( 1) ( 2) group_1 - group_2 = 0 group_1 = 0 F( 2, 30) = Prob > F = Chapter 5-4 (revision 16 May 2010) 12.10 0.0001 p. 14 Stata 11: test (_cons+igroup2)=(_cons+igroup3)=_cons Statistics Postestimation Tests Test linear hypotheses Specification 1: tests these coefficients: _cons+group_2 = _cons+group_3 = _cons OK test _cons+group_2 = _cons+group_3 = _cons // Stata Version 11 . test (_cons+group_2 = _cons+group_3 = _cons) ( 1) ( 2) group_2 - group_3 = 0 group_2 = 0 F( 2, 30) = Prob > F = 12.10 0.0001 We see that the F statistic and p value match those in the above ANOVA model output. Chapter 5-4 (revision 16 May 2010) p. 15 Reporting Adjusted Group Means with Confidence Intervals Reporting adjusted group means is rather clever, because you are able to show how groups differ when controlling for potential confounders. Exercise: Look at the article by Kalmijn et al (2002), which is another article that reports adjusted group means. 1) Notice in their Statistical Analysis Section, they described the use of dummy variable coding in their linear regression model as: “…In addition, subjects who smoked >0-20 pack-years and >20 pack-years were compared to never smokers by including two dummy variables in the regression model. Confounders that were taken into account were age (continuous), sex, education (four dummy categories), body mass index, total cholesterol level, and systolic blood pressure…” 2) Notice in their Table 2 that they are reporting adjusted mean scores. To get the adjusted group means following a regression, we might try: Stata 10: Statistics Postestimation Adjusted means and proportions Main tab: Compute and display predictions for each level of variables: groupx Options tab: Prediction: linear prediction confidence or prediction intervals OK adjust, by(groupx) xb ci Stata 11: The adjust command is no longer listed on the menu, but can be run as a command, adjust, by(groupx) xb ci Chapter 5-4 (revision 16 May 2010) p. 16 ------------------------------------------------Dependent variable: rmr Command: regress Variables left as is: lbm, group_1, group_2 ---------------------------------------------------------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 136.083 [125.543 146.623] b | 132.7 [121.154 144.246] c | 166.5 [155.96 177.04] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] Notice, however, that these means are identical to the means from the above one-way ANOVA, which did not adjust for lbm, since lbm was not in that one-way ANOVA model. | Summary of rmr group | Mean Std. Dev. Freq. ------------+-----------------------------------a | 136.08333 16.940851 12 b | 132.7 19.032428 10 c | 166.5 19.019129 12 ------------+-----------------------------------Total | 145.82353 23.604661 34 Analysis of Variance Source SS df MS F Prob > F -----------------------------------------------------------------------Between groups 7990.92451 2 3995.46225 11.91 0.0001 Within groups 10396.0167 31 335.355376 -----------------------------------------------------------------------Total 18386.9412 33 557.180036 Bartlett's test for equal variances: Chapter 5-4 (revision 16 May 2010) chi2(2) = 0.1787 Prob>chi2 = 0.915 p. 17 To get the adjusted mean, we need to hold the covariates constant at some value. Usually, researchers choose the covariate’s overall mean value. Stata 10: Statistics Postestimation Adjusted means and proportions Main tab: Compute and display predictions for each level of variables: groupx Variables to be set to there overall mean value: lbm Options tab: Prediction: linear prediction confidence or prediction intervals OK adjust lbm, by(groupx) xb ci // Stata version 10 Stata 11: Just running the command (not available on the menu), adjust lbm, by(groupx) xb ci // Stata version 10 -------------------------------------------------------------------------------Dependent variable: rmr Command: regress Variables left as is: group_1, group_2 Covariate set to mean: lbm = 44.088235 ----------------------------------------------------------------------------------------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 136.268 [125.725 146.81] b | 132.857 [121.31 144.405] c | 166.184 [155.637 176.732] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] These adjusted means are slightly different from those computed above: ---------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 136.083 [125.543 146.623] b | 132.7 [121.154 144.246] c | 166.5 [155.96 177.04] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] Chapter 5-4 (revision 16 May 2010) p. 18 The adjusted mean is simply the predicted value from the regression equation. Copying the regression equation from above, -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------group_1 | -29.91667 7.305324 -4.10 0.000 -44.83613 -14.9972 group_2 | -33.32727 7.660557 -4.35 0.000 -48.97222 -17.68233 lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 142.1363 16.17229 8.79 0.000 109.1081 175.1645 ------------------------------------------------------------------------------ and computing the adjusted group mean for group2, display 142.1363 -29.91667*0 -33.32727*1 + 0.5454562*44.088235 132.85723 which agrees with the adjusted group mean computed above. -------------------------------------------------------------------------------Dependent variable: rmr Command: regress Variables left as is: group_1, group_2 Covariate set to mean: lbm = 44.088235 ----------------------------------------------------------------------------------------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 136.268 [125.725 146.81] b | 132.857 [121.31 144.405] c | 166.184 [155.637 176.732] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] What did this model look like? To see how well the model fitted the data, we can overlay the fitted lines onto the original data. First we need a variable containing the predicted values: Statistics Postestimation Predictions, residuals, etc. New variable name: rmr_hat Produce: Fitted values (xb) OK predict rmr_hat, xb Chapter 5-4 (revision 16 May 2010) p. 19 We now want to overlay three scatterplots (one for each group) and three prediction line graphs (one for each group). We could use the graphics menu, which would require setting the the overlaying of six plots. Alternatively, we can copy the following into the do-file editor, and run it (also in Ch 5-4.do) 100 120 140 RMR 160 180 200 sort lbm // always sort on x variable for line graphs #delimit ; twoway (scatter rmr lbm if groupx==1 , msymbol(square) mfcolor(green) mlcolor(green) msize(large)) (scatter rmr lbm if groupx==2 , msymbol(circle) mfcolor(blue) mlcolor(blue) msize(large)) (scatter rmr lbm if groupx==3 , msymbol(triangle) mfcolor(red) mlcolor(red) msize(large)) (line rmr_hat lbm if groupx==1 , clpattern(solid) clwidth(thick) clcolor(green)) (line rmr_hat lbm if groupx==2 , clpattern(solid) clwidth(thick) clcolor(blue)) (line rmr_hat lbm if groupx==3 , clpattern(solid) clwidth(thick) clcolor(red)) , legend(off) ytitle(RMR) xtitle(LBM) ; #delimit cr 30 40 50 LBM 60 70 Notice, the regression procedure assumes all lines are parallel, just shifted vertically for each group, unless interaction terms are added to the model. Chapter 5-4 (revision 16 May 2010) p. 20 Look at Figure 1 in the Nawata article. Our model did not fit the data well at all, compared to what Nawata noticed about these data. (The red line should be slanting downward.) These data are said to interact. That is, the best fitting lines for each group are not parallel. It would be better to let each group have its own slope, as well as its own intercept. We need to first create some interaction variables. Data Create or change variables Create new variable (Version 10): New variable name : lbmXgroup1 (Version 11): Variable name : lbmXgroup1 (Version 10): Contents of new variable: lbm*group_1 (Version 11): Contents of variable: lbm*group_1 OK generate lbmXgroup1 = lbm*group_1 and modifying the command for group 2, generate lbmXgroup2 = lbm*group_2 Fitting a new model with these interaction terms added, Statistics Linear models and related Linear regression Dependent variable: rmr Independent variables: igroup1 igroup2 lbm lbmXgroup1 lbmXgroup2 OK regress rmr group_1 group_2 lbm lbmXgroup1 lbmXgroup2 Source | SS df MS -------------+-----------------------------Model | 10820.5192 5 2164.10384 Residual | 7566.42198 28 270.229356 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 5, 28) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 8.01 0.0001 0.5885 0.5150 16.439 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------group_1 | -122.4433 34.5581 -3.54 0.001 -193.2324 -51.65425 group_2 | -100.1493 39.63594 -2.53 0.017 -181.3399 -18.95877 lbm | -.8470919 .6166423 -1.37 0.180 -2.110226 .4160425 lbmXgroup1 | 2.085718 .7644016 2.73 0.011 .5199118 3.651523 lbmXgroup2 | 1.498063 .8819738 1.70 0.100 -.3085782 3.304704 _cons | 204.3368 27.94916 7.31 0.000 147.0855 261.588 ------------------------------------------------------------------------------ Chapter 5-4 (revision 16 May 2010) p. 21 The interaction term for group 1 is significant, and so should be kept in the model. For group 2, it is approaching significant, so it could be kept in or not. Keeping this second term in would not improve the fit by much. Keeping both interaction terms in, let’s see what happens graphically. First saving the new predicted values, Statistics Postestimation Predictions, residuals, etc. New variable name: rmr_hat2 Produce: Fitted values (xb) OK predict rmr_hat2, xb and repeating graph, using the new predicted values sort lbm // always sort on x variable for line graphs #delimit ; twoway (scatter rmr lbm if groupx==1 , msymbol(square) mfcolor(green) mlcolor(green) msize(large)) (scatter rmr lbm if groupx==2 , msymbol(circle) mfcolor(blue) mlcolor(blue) msize(large)) (scatter rmr lbm if groupx==3 , msymbol(triangle) mfcolor(red) mlcolor(red) msize(large)) (line rmr_hat2 lbm if groupx==1 , clpattern(solid) clwidth(thick) clcolor(green)) (line rmr_hat2 lbm if groupx==2 , clpattern(solid) clwidth(thick) clcolor(blue)) (line rmr_hat2 lbm if groupx==3 , clpattern(solid) clwidth(thick) clcolor(red)) , legend(off) ytitle(RMR) xtitle(LBM) ; #delimit cr Chapter 5-4 (revision 16 May 2010) p. 22 100 120 140 RMR 160 180 200 This looks much more like Nawata’s model, except for the one outlying blue point added to the dataset for illustration. 30 40 50 LBM 60 70 Clearly, now, the adjusted mean difference depends very much on what value we hold lbm constant at, since the groups differ more at low values of LBM than at high values. Stata 10: Statistics Postestimation Adjusted means and proportions Compute and display predictions for each level of variables: groupx Variables to be set to there overall mean value: lbm OK adjust lbm, by(groupx) xb ci Stata 11: adjust lbm, by(groupx) xb ci Chapter 5-4 (revision 16 May 2010) p. 23 -------------------------------------------------------------------------------Dependent variable: rmr Command: regress Variables left as is: group_1, group_2, lbmXgroup1, lbmXgroup2 Covariate set to mean: lbm = 44.088235 ----------------------------------------------------------------------------------------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 135.797 [126.067 145.527] b | 132.456 [121.801 143.11] c | 166.99 [157.242 176.738] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] Compared to the earlier model, without the interaction terms, -------------------------------------------------------------------------------Dependent variable: rmr Command: regress Variables left as is: group_1, group_2 Covariate set to mean: lbm = 44.088235 ----------------------------------------------------------------------------------------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 136.268 [125.725 146.81] b | 132.857 [121.31 144.405] c | 166.184 [155.637 176.732] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] it did not change much. This is because regression lines always passes through the group means (X-variable mean and Yvariable mean) and so the slope pivots around it. At the mean, then, the slope is of no consequence. If you wanted to know the adjusted mean values for a point far away from the mean however, it would have a big effect. Stata 10: Statistics Postestimation Adjusted means and proportions Compute and display predictions for each level of variables: groupx Variables to be set to there overall mean value: <leave blank this time> Variables to be set to a specified value: Variable: lbm Value: 57 OK adjust lbm=57, by(groupx) xb ci Chapter 5-4 (revision 16 May 2010) p. 24 Stata 11: adjust lbm=57, by(groupx) xb ci -------------------------------------------------------------------------------Dependent variable: rmr Command: regress Variables left as is: group_1, group_2, lbmXgroup1, lbmXgroup2 Covariate set to value: lbm = 57 ----------------------------------------------------------------------------------------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 124.859 [105.505 144.214] b | 121.518 [101.735 141.302] c | 156.053 [137.69 174.415] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] Compared to the earlier model, without the interaction terms, -------------------------------------------------------------------------------Dependent variable: rmr Command: regress Variables left as is: group_1, group_2 Covariate set to mean: lbm = 44.088235 ----------------------------------------------------------------------------------------------------------------------------groupx | xb lb ub ----------+----------------------------------a | 136.268 [125.725 146.81] b | 132.857 [121.31 144.405] c | 166.184 [155.637 176.732] ---------------------------------------------Key: xb = Linear Prediction [lb , ub] = [95% Confidence Interval] is very different. Stata’s New Categorical Variable Facility With Version 11, Stata has a much easier way to work with categorical variables. You simply put an “i.” in front of each categorical variable, and Stata will create indicator variables behind the scenes to use in the regression model. The command, regress rmr group_2 group_3 lbm can now be specified as, regress rmr i.groupx lbm Chapter 5-4 (revision 16 May 2010) // Stata version 11 p. 25 Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------groupx | 2 | -3.410606 7.654802 -0.45 0.659 -19.0438 12.22258 3 | 29.91667 7.305324 4.10 0.000 14.9972 44.83613 | lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 112.2196 15.87451 7.07 0.000 79.79954 144.6397 ------------------------------------------------------------------------------ By default, it uses the first category of groupx as the referent group. To specify the second category as the referent group, we can use, “ib2.”, which the “b” stands for “base level”, which is another name for referent category, regress rmr ib2.groupx lbm // Stata version 11 Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------groupx | 1 | 3.410606 7.654802 0.45 0.659 -12.22258 19.0438 3 | 33.32727 7.660557 4.35 0.000 17.68233 48.97222 | lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 108.809 16.05747 6.78 0.000 76.01528 141.6028 ------------------------------------------------------------------------------ o We see that category 2 of groupx was left out this time, which turns it into the referent category. To specify the last category as the referent group, we can use, “ib(last).”, regress rmr ib(last).groupx lbm Chapter 5-4 (revision 16 May 2010) // Stata version 11 p. 26 Source | SS df MS -------------+-----------------------------Model | 8798.55426 3 2932.85142 Residual | 9588.38691 30 319.612897 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 3, 30) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 9.18 0.0002 0.4785 0.4264 17.878 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------groupx | 1 | -29.91667 7.305324 -4.10 0.000 -44.83613 -14.9972 2 | -33.32727 7.660557 -4.35 0.000 -48.97222 -17.68233 | lbm | .5454562 .3431357 1.59 0.122 -.1553203 1.246233 _cons | 142.1363 16.17229 8.79 0.000 109.1081 175.1645 ------------------------------------------------------------------------------ This left group 3, the last category, out of the model to serve as the referent category. To get the interaction terms, we use the “#” operator. To get the model specified by this command, regress rmr group_1 group_2 lbm lbmXgroup1 lbmXgroup2 we use, regress rmr ib(last).groupx lbm i.groupx#c.lbm // Stata version 11 Source | SS df MS -------------+-----------------------------Model | 10820.5192 5 2164.10384 Residual | 7566.42198 28 270.229356 -------------+-----------------------------Total | 18386.9412 33 557.180036 Number of obs F( 5, 28) Prob > F R-squared Adj R-squared Root MSE = = = = = = 34 8.01 0.0001 0.5885 0.5150 16.439 -----------------------------------------------------------------------------rmr | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------groupx | 1 | -122.4433 34.5581 -3.54 0.001 -193.2324 -51.65425 2 | -100.1493 39.63594 -2.53 0.017 -181.3399 -18.95877 | lbm | -.8470919 .6166423 -1.37 0.180 -2.110226 .4160425 | groupx#c.lbm | 1 | 2.085718 .7644016 2.73 0.011 .5199118 3.651523 2 | 1.498063 .8819738 1.70 0.100 -.3085782 3.304704 | _cons | 204.3368 27.94916 7.31 0.000 147.0855 261.588 ------------------------------------------------------------------------------ Chapter 5-4 (revision 16 May 2010) p. 27 How to Interpret the Interaction Term The file Ch5-4.dta contains the following practice data. This is just for illustration, so we will ignore that the sample size is too small for three predictor variables. 1. 2. 3. 4. 5. 6. 7. 8. +---------------------------+ | group x y xgroup | |---------------------------| | 0 10 200 0 | | 0 12 201 0 | | 0 14 202 0 | | 0 17 203 0 | |---------------------------| | 1 9 320 9 | | 1 15 350 15 | | 1 20 400 20 | | 1 24 475 24 | +---------------------------+ where group = group variable (0 or 1) x = a continuous predictor variable y = a continuous outcome variable xgroup = x by group interaction, created by multiplying x by group. We will see why this strange interaction variable produces something meaningful, and learn how to interpret this interaction term. Chapter 5-4 (revision 16 May 2010) p. 28 The regression model without the interaction term looks like: 0 50 100 150 200 250 300 350 400 450 500 Y -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------x | 8.356792 2.016724 4.14 0.009 3.172639 13.54095 group | 153.412 19.17877 8.00 0.000 104.1114 202.7126 _cons | 90.7725 29.48489 3.08 0.028 14.97919 166.5658 ------------------------------------------------------------------------------ 10 15 20 25 X With just these two “main effect” terms (main effects as opposed to interaction terms), the model is constrained to fit the data as best it can with the limitation that the slopes are equal (lines parallel) and all the group variable can do is shift the line up or down (change the intercept). -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------x | 8.356792 2.016724 4.14 0.009 3.172639 13.54095 group | 153.412 19.17877 8.00 0.000 104.1114 202.7126 _cons | 90.7725 29.48489 3.08 0.028 14.97919 166.5658 ------------------------------------------------------------------------------ Y = a + b1(X) + b2(group) = 90.77 + 8.36 (X) + 153.41(group) from the regression table Y = a + b1(X) + b2(group) = 90.77 + 8.36 (X) + 153.41 (0) = 90.77 + 8.36 (X) Y = a + b1(X) + b2(group) = 90.77 + 8.36 (X) + 153.41 (1) = 90.77 + 8.36 (X) + 153.41 for group 0 for group 1 (shifted up by 153.41) while for either group the slope for X remains 8.36. Chapter 5-4 (revision 16 May 2010) p. 29 With the interaction term, the model looks like: 0 50 100 150 200 250 300 350 400 450 500 Y -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------x | .4299065 3.16022 0.14 0.898 -8.34427 9.204083 group | 19.77166 49.9967 0.40 0.713 -119.0414 158.5847 xgroup | 9.609776 3.479546 2.76 0.051 -.0509926 19.27054 _cons | 195.8037 42.66296 4.59 0.010 77.35236 314.2551 ------------------------------------------------------------------------------ 10 15 20 25 X With both the two “main effect” terms and the interaction term, the model is permitted to have different slopes. Now the distance between the two lines, or the group difference, depends on the covariate X. -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------x | .4299065 3.16022 0.14 0.898 -8.34427 9.204083 group | 19.77166 49.9967 0.40 0.713 -119.0414 158.5847 xgroup | 9.609776 3.479546 2.76 0.051 -.0509926 19.27054 _cons | 195.8037 42.66296 4.59 0.010 77.35236 314.2551 ------------------------------------------------------------------------------ Y = a + b1(X) + b2(group) + b3(X group) = 195.8 + 0.4(X) +19.8(group) + 9.6 (X group) = 195.8 + 0.4 (X) +19.8 (0) + 9.6 (0) = 195.8 + 0.4 (X) for group 0 Y = a + b1(X) + b2(group) + b3(X group) = 195.8 + 0.4 (X) +19.8 (group) + 9.6(X group) = 195.8 + 0.4 (X) +19.8 (1) + 9.6 (X 1) = 195.8 + 0.4 (X) +19.8+ 9.6 (X) = 195.8 + (0.4+9.6)(X) +19.8 for group 1 Chapter 5-4 (revision 16 May 2010) p. 30 For the reference group, group 1, a simple regression line is fitted. For group 1, the line is shifted up or down by the correct amount at the point X=0 (it extrapolated in this case) and then adds an increment, or decrement, to the slope for the covariate X. Thus the interaction term is how much needs to be added or substracted from the reference group slope to modify it to be the correct slope for group 1. Notice in the main effects model, the covariate X is significant. -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------x | 8.356792 2.016724 4.14 0.009 3.172639 13.54095 group | 153.412 19.17877 8.00 0.000 104.1114 202.7126 _cons | 90.7725 29.48489 3.08 0.028 14.97919 166.5658 ------------------------------------------------------------------------------ However, in the model with the interaction term, the covariate X is no longer significant. -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------x | .4299065 3.16022 0.14 0.898 -8.34427 9.204083 group | 19.77166 49.9967 0.40 0.713 -119.0414 158.5847 xgroup | 9.609776 3.479546 2.76 0.051 -.0509926 19.27054 _cons | 195.8037 42.66296 4.59 0.010 77.35236 314.2551 ------------------------------------------------------------------------------ Should we drop the X variable from the model, then, because it is not significant? Let’s see what happens if we do. 0 50 100 150 200 250 300 350 400 450 500 Y -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------group | 14.0754 24.49134 0.57 0.590 -48.88159 77.03239 xgroup | 10.03968 1.305393 7.69 0.001 6.684064 13.3953 _cons | 201.5 7.326498 27.50 0.000 182.6666 220.3334 ------------------------------------------------------------------------------ 10 15 20 25 X Chapter 5-4 (revision 16 May 2010) p. 31 -----------------------------------------------------------------------------y | Coef. Std. Err. t P>|t| [95% Conf. Interval] -------------+---------------------------------------------------------------group | 14.0754 24.49134 0.57 0.590 -48.88159 77.03239 xgroup | 10.03968 1.305393 7.69 0.001 6.684064 13.3953 _cons | 201.5 7.326498 27.50 0.000 182.6666 220.3334 ------------------------------------------------------------------------------ Y = a + b1(group) + b2(X group) = 201.5 +14.1(group) + 10.0(X group) = 201.5 +14.1(0) + 10.0(X 0) = 201.5 for group 0 Y = a + b1(group) + b2(X group) = 201.5 +14.1(group) + 10.0(X group) = 201.5 +14.1(1) + 10.0(X 1) = 201.5 +14.1 + 10.0(X) for group 1 The model seems to do something reasonable for group 1, providing a correct single slope value. However, it forces group 0 to just be a constant. That isn’t too bad for this example, since the slope of X was so close to 0 for group 0 anyway. In a dataset where the slope of the referent group is not close to 0, however, this will provide a terrible fit. Chapter 5-4 (revision 16 May 2010) p. 32 This example illustrates the following rule: Rule About Interaction Terms When an interaction term is included in the model, all of the main effects for the variables that are multiplied to produce the interaction term must be included in the model as well, whether significant or not. If a greater than 2-way interaction term is included, all of the lower-order interaction terms as well as the main effects must remain in the model. (If a three-way interaction is included, then, the model must contain: A , B , C , A*B , A*C , B*C , A*B*C. A formal description of why this rule is needed in found in Chapter 5-8, p.21. How to Report Models With Interaction Terms Interaction terms are difficult to discuss and would confuse nearly any reader if shown as a line in a table that shows all of the coefficients for the multivariable model. What researchers do, then, is state in the text that a significant interaction was found, along with a p value, but never discuss the coefficient associated with the interaction term. Instead, the researcher will go on to show a stratified analysis, stratifying by one of the variables composing the interaction term. This approach is particularly useful, since an interaction means the effect is different for the different levels of the covariate in the group x covariate interaction. Showing these individual subgroups, or strata, is the most informative way to present an interaction. Chapter 5-4 (revision 16 May 2010) p. 33 Exercise. Look at the Kalmijn et al (2002) paper. Under the heading Alcohol Consumption in the Results section (p.939), they state: “There was a significant interaction between sex and alcohol consumption in relation to speed (p = 0.008), indicating that, for women, the association between alcohol consumption and pscyhomotor speed was positive and linear (p-trend < 0.001), whereas for men it was absent (table 4).” Then, in Table 4, they show the results stratified by gender. They actually used alcohol as a ordinal scale variable in the model, rather than 5 indicator variables, to obtain the p-trend. So they did not use interaction terms like we have done in this chapter. The concept of reporting an interaction is the same, however. Linear trend tests are covered in Chapter 5-26 “Trend Tests.” References Chatterjee S, Hadi AS, Price B. (2000). Regression Analysis by Example. 3rd ed. New York, John Wiley and Sons. Hamilton LC. (2003). Statistics With Stata. Updated for Version 7. Belmont CA, Wadsworth Group/Thomson Learning. Kalmijn S, van Boxtel MPJ, Verschuren MWM, et al. (2002). Cigarette smoking and alcohol consumption in relation to cognitive performance in middle age. Am J Epidemiol 156(10):936-944. Nawata K, Sohmiya M, Kawaguchi M, et al. (2004). Increased resting metabolic rate in patients with type 2 diabetes mellitus accompanied by advanced diabetic nephropathy. Metabolism 53(11) Nov: 1395-1398. Chapter 5-4 (revision 16 May 2010) p. 34