Velocity Distributions

advertisement

VELOCITY DISTRIBUTIONS IN “NATURAL” STREAM CHANNELS

÷A SECOND LOOK÷

JOHN L. KELLIHER

UNIVERSITY OF NEW HAMPSHIRE, FLUVIAL HYDROLOGY

5/10/00

INTRODUCTION

Because so much of quantitative fluvial hydrology entails calculations involving

the velocity of a given flow, it is of great interest to gain an ability to estimate what that

velocity will be at any particular time in any specified reach. Velocity is used in the

calculation of discharge through a single cross-section or a reach or river, as well as in

the estimation of the total discharge for the entire basin. It is integral for the estimation

of Manning’s “n”, Chezy’s “C”, energy slope, and other friction factors that make up the

resistance of a given system to a flow. It is also used to estimate stream power, which is

related to bed form development, and mass transport issues such as entrainment and

deposition. Yet another use would be in the estimation of the energy coefficient (). For

these reasons and others, we would like to be able to estimate the mean velocity of a

flow.

Historically, this has been accomplished through several methods. Widely

utilized are the velocity profile method first described by Prandtl (1926) and later by Von

Karman (1930) (Dingman, 1984). The USGS currently uses the six-tenths method for

shallow flows (Y < 0.75 m), whereby the average velocity is assumed to exist at a depth

of 0.6 Y, where Y is the depth of the flow at the site of measurement. For deeper flows

(Y > 0.75 m) the USGS uses the Two-Tenths and Eight-Tenths Method described by

Dingman (1994). Velocity can also be estimated using the Surface Velocity or Float

Method. It can be back calculated through dilution gaging, flumes, and weirs, and slopearea measurements discussed in Dingman (1994). Each of these methods involves some

degree of inaccuracy due to errors involved in the measurement or observation of

velocity. These methods can also be dangerous at high flows due to increased velocity,

turbulence, and debris. Miscalculations can also result from erroneous assumptions about

the resistance of the reach or that of constant discharge through the reach if velocity is

back solved through Manning’s equation or Chezy’s equation.

The main thrust of this research is to estimate a probability distribution for

velocity measurements of natural stream channels. If we know which probability

distribution best describes a sample population, we can use the quantile function, f(x), to

obtain quantile estimates of the sample population from which we will obtain our mean

velocity. Central to this project are L-moments, which are a statistical method of

examining data sets that exhibit large skew, as is commonly found in hydrologic data.

This process will allow us to estimate the probability distribution from which a sample of

velocity is drawn, without having to “get our feet wet” so to speak. If we know the

probability distribution of a sample population, we gain many insights into the data, and

begin to be able to make certain inferences about the data. These insights include

information about the shape, location, scale, and dispersion of the distribution.

STATISTICAL BACKGROUND

Hosking and Wallis (1997) have described probability distributions as the relative

frequency that each sample of a given magnitude occurs in a sample population. In our

example, these samples are velocity measurements. Therefore, x .50 is equivalent to the

median velocity; 50% of the measurements will be greater than x.50, 50% will be less.

Another way that we can quantify probability distributions is through the use of nonexceedence probabilities:

F (xi) = Pr [X xi],

[Eqn. 1]

or the probability that X is exceeded by xi. Here F(xi) is the cumulative distribution

function (cdf) of X and is a monotonically increasing function for 0 F(xi) 1. Thus, if

we know F(xi), we also know every possible value of X. We can also estimate the values

of xi through the use of the probability density function (pdf) of X. The pdf can be shown

to be the derivative of the cdf and can also be plotted as a curve. The area under this

curve and bounded by the X-axis is equal to one, and we can then find the probability that

A xi B by subtracting F(A) from F(B), that is, through an application of the

fundamental theorem of calculus (Walpole, 1982).

Since we most often do not know every possible value of X, we are forced to

estimate these values for a sample population. This is often accomplished through the

use of quantile estimators. For example, the median quantile estimator of a sample

population, x-hat.50 is the value of xi that is described by:

Pr{X < xi} .50 Pr{X xi}.

[Eqn. 2]

By accurately describing the quantiles of the probability distribution, we are

describing how X is distributed, and we can begin to understand some important things

about X. For instance, x-hat.50, or the median value of X, illustrates the central tendency

of the distribution. We can also express the dispersion of the distribution as x-hat.75

minus x-hat.25 as described by Dingman (1994).

The Method of Moments has been traditionally used to describe probability

distributions. This method involves the determination of the sum of the differences

between xi and the mean value of X, exponentiated to various powers. Hosking and

Wallis (1997) describe this as bringing the quantile function to higher powers. For

example, the variance of a distribution (the second moment of the distribution) is:

N

2

(x

i 1

i

)2

N

,

[Eqn. 3]

We can clearly see that the difference between xi and the mean () is brought to

the second power. Similarly, the third and fourth order moments (skew and kurtosis) are

brought to the third and fourth powers, respectively. Therefore extreme values of x i will

yield even more extreme estimates of the higher order moments.

This leads us to a critical problem in using the Method of Moments to evaluate

hydrologic data. Because hydrologic data are often highly skewed, with many extreme

values, the Method of Moments often renders inaccurate estimates of the higher order

statistics. Since probability distributions of a random sample can be estimated by plotting

the coefficient of skewness against the coefficient of kurtosis on a moment-ratio diagram,

these deviations from the true values of skew and kurtosis can lead to invalid estimates of

the probability distribution.

Hosking (1990) and Hosking and Wallis (1997) have suggested a solution to this

problem. They propose to describe a probability distribution of a sample population

through the use of L-moments. L-moments differ from traditional moments in that they

do not involve the exponentiation of the deviation from the mean that was so problematic

in the use of hydrologic data with the Method of Moments. L-moments instead use linear

combinations of the probability-weighted moments (see Greenwood et al. (1979) and

Hosking and Wallis (1997) for a complete description). This means that inferences that

are based on the L-statistics (L-mean, L-variance, L-skewness, and L-kurtosis) have less

bias and should also be more reliable estimators of the shape of the curve that is the

probability distribution of a given sample.

The use of L-moments for the determination of probability distributions of

hydrologic data is becoming more common, because of their robust behaviors with

regard to the highly skewed data often encountered in hydrologic samples. Vogel and

Fennesey (1993) investigated stream discharge in Massachusetts using L-moments, and

found that L-moments could be used to discern from which probability distribution the

streamflow data arose from. In their paper, they compare L-moments with the

traditionally used Method of Moments and concluded that the Method of Moments

provides little information about a sample’s probability distribution. Vogel et al. (1993b)

showed L-moments could be used to estimate stream discharge in Australia. They found

that the continent receives annual precipitation in a bimodal manner. They divided their

total population into two subsets based on this bimodal hetereogeneity. Their analyses

showed that drainage basins that received the bulk of their annual precipitation in the

winter could be modeled by a Generalized Extreme Value (GEV) distribution, and that

basins that obtained the bulk of their precipitation in the summer were better

approximated with a Generalized Pareto distribution (GPA). Other efforts have been

made by Hosking and Wallis (1987a) and by Hosking (1990) to describe how the

scientific community could benefit through the use of L-moments and that L-moments

should be expressly used when analyzing hydrologic data in particular.

Ben-Zvi and Azmon (1997) used L-moment theory to describe extreme

discharges in 68 basins in Israel. While they were able to find that the L-moment method

can provide reasonable estimates of a sample’s probability distribution, additional

statistical analyses needed to be conducted due to the subjectivity of choosing the “best”

distribution. They showed that the Anderson-Darling test could be used to assess how

well a chosen distribution could re-create a sample population. Future research in this

direction could easily be applied in the estimation of velocity distributions in natural

stream channels, or in the estimation of flow duration curves.

Van Gelder and Neykov (1998) used the Method of L-Moments in a study that

attempted to characterize flood events in the Netherlands with regional frequency

analysis. Their report stresses the importance of accurately identifying and defining

homogeneity of a region. Following the examples of Hosking and Wallis (1997), they

used a discordancy measure to distinguish heterogeneity. From this close examination of

homogeneity, their conclusions were that a completely homogeneous region was very

difficult to obtain for the Netherlands because of the varied geography and ocean

influences. However, they also inferred that regional frequency analysis (and the use of

L-moments) was more accurate than single-site statistical surveys.

METHOD

Dingman (1989) showed that the distribution of streamflow velocity data could be

estimated from the Power Law distribution. He explained that knowledge of the

probability distribution of discharge velocities would allow scientists to make estimates

of the energy coefficient, which could then be used to make better estimations of reach

resistance. Haire (1995) collected cross-sectional streamflow velocity data and compared

Dingman’s (1989) approach with the Prandtl-Von Karman Universal Velocity

Distribution Law and a Maximum Entropy model. She showed that the Power Law could

account for over 80% of the variation between observed and predicted streamflow

velocities at the 95% significance level, whereas the Prandtl-Von Karman method could

only account for approximately 70% of the variation. She concluded that none of these

statistical distributions could accurately describe the velocity data at the 95% significance

level, but that some could be used with discretion as the 90% significance level.

This study will re-examine the data collected by Haire. She obtained 27 velocity

distribution sample measurements from 20 streams in New York. Haire generated 22

additional velocity measurements through flume experiments at the Cold Regions

Research and Engineering Laboratory (CRREL) in Hanover, NH. She also included

velocity measurements from previously cited work (Haire, 1995) to obtain a sample

population of N = 81. This study will focus solely on those cross-sections with positive

velocity measurements. Therefore, our sample size is somewhat decreased to N = 39,

with 26 samples taken from the natural cross-sections, and 13 from the flume studies.

This study will use the Method of L-Moments to estimate from which probability

distribution these velocity measurements are drawn. This assumes that the entire data set

is homogeneous, that is that each sample shares the same probability distribution, but this

assumption will be shown to have been erroneous. If each of the samples shares a similar

probability distribution, we can say that these data compose a homogeneous subset of the

original data set. The size of this subset is limited by the size of the total population and

zero. Clearly, we would like to include all the sample data from the population into this

homogeneous subset, but this is often not the case due to heterogeneity of the population.

Monte Carlo simulations will be used to attempt to describe the degree of homogeneity

(or heterogeneity) of the region in a visual manner. Knowing the probability distribution

will enable us to obtain quantile function values of velocity, from which the mean

velocity can be estimated.

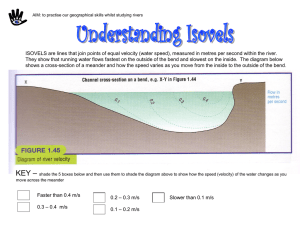

Estimates of the L-skew and L-kurtosis for each of the 39 samples were

calculated from the formulas derived by Hosking (1990). These values were plotted on

an L-moment ratio diagram (Fig. 1) from which we will attempt to determine which of

the possible probability distributions is most likely to be representative of the sample

population. L-moment diagrams are similar to customarily used moment-ratio diagrams.

The L-moment-ratio diagram plots the coefficient of L-skewness against the coefficient

of L-kurtosis. As each probability distribution has a unique plot on this type of diagram,

the distribution that most closely approximates the coefficients of L-skewness and Lkurtosis for each sample in the population is taken to be the probability distribution from

which the entire population is drawn from. To re-iterate, knowledge of the probability

distribution of the sample population provides us with a means to estimate the various

quantile measures for the distribution.

Ideally, we would like the data (the coefficients of L-skewness and L-kurtosis) to

plot such that they fall directly on one of the theoretical distributions on the L-momentratio diagram. In some situations (see Vogel and Fennessey, 1993), the data very closely

approximates a particular distribution and the choice is simple. However, this is not

always the case. Van Gelder and Neykov (1998) and Sankarasubramanian and Srinivsan

(1999) found that their data did not plot in a quasi-linear manner, and in fact, looked

more like a cloud of data points.

This is the case as well with Haire’s (1995) data set. The L-statistics show

increased variation and thus plot as more of a cloud type feature (Fig. 1). This poses some

problems because it appears that several distributions could be used as potential

probability distribution estimates. These include the GPA, GEV, and Pearson Lognormal (PE3) distributions. Because of previous familiarity with the GPA distribution,

this paper will examine how well it can estimate streamflow velocities. We will then

L-Moment Ratio Diagram with Selected Data

0.4

0.35

GPA

0.3

GEV

0.25

GLO

LN3

0.2

L-Ku(x)

PE3

0.15

OLB

Power Law

0.1

Natural

Crrel

0.05

Average

0

-0.5

-0.3

-0.1

-0.05

Total Average

0.1

0.3

0.5

-0.1

L-Sk(x)

FIGURE 1. L-MOMENT RATIO DIAGRAM OF SELECTED DATA SET OF HAIRE (1995). NOTE SCATTER RESULTS IN

AMBIGUOUS DETERMINATION OF A PROBABILITY DISTRIBUTION. AVERAGE L-SKEW AND L-KURTOSIS ARE

PLOTTED FOR BOTH THE SELECTED DATA SET (LIGHT BLUE) AND THE TOTAL DATA SET (ROYAL BLUE).

NOTE ALSO POSSIBLE SUBSETS OF HOMOGENEOUS DATA.

perform a Monte Carlo simulation to assess how close to the GPA our observed data

really is. One possible reason for this variation is due to the likelihood that these sample

data sets do not form a homogeneous subset. It would not take much imagination to see

several subsets in this data as indicated in Figure 1.

Even though several of these cross-sections have L-skew and L-kurtosis values

that indicate that they would probably be better modeled using a different distribution, we

will attempt to model them using the Pareto distribution. This ambiguity is probably

indicative of a non-homogeneous "region", which would make the results of this

"regional frequency analysis" quasi-invalid, but still more statistically sound than (and

therefore preferable to) the Method of Moments (Van Gelder and Neykov, 1998). The

statistical analysis of the other distributions that may fit this data is beyond the scope of

this project, but certainly would be worth pursuing as future research.

THE PARETO DISTRIBUTION

Hosking (1990) describes the generalized Pareto distribution as:

f ( x) 1e (1k ) y

[Eqn 5]

where y = --1log{1-(x-} when 0,

or y = (x-)/

when = 0,

f(x) is the probability density function,

and are parameters related to the location (mean), scale (variance), and

shape (skew) of the data respectively.

The quantile estimators for the GPA distribution are likewise found as:

x(F) = F when 0

[Eqn. 6]

or

x(F) = log (1-F) when = 0,

[Eqn. 7]

where x(F) is the quantile function of the cumulative distribution,

and F is the probability that the actual value of velocity is at most x, or the nonexceedence probability.

The three parameters involved in the above equations are estimated by linear

combinations of the L-moments calculated from the observed values of each of the

samples in the population. The value of kappa can be estimated for each sample in the

population by a linear combination of the L-moment ratios that were used to determine

the probability distribution of the sample population. We can then estimate the other

parameters, and , through linear combinations of kappa and second L-moment, the

same scaling factor (2) that was used to determine the coefficients of L-skewness and L-

kurtosis. Since is a measure of location, it represents a lower bound for the estimation

of velocity. For example, if we were to eliminate any sample that had observed negative

velocities, we would expect that would be 0. Hosking and Wallis (1997) provide a

thorough discussion on the derivation of these parameter values.

Once these parameters are known for each cross-section, it becomes quite trivial

to calculate the quantile estimators of the actual streamflow velocity data. The trick then

is to determine how , , and are related to the cross-sectional geometry or other

factors, so that they can be estimated for ungaged and unmeasured reaches. Once this is

accomplished, we will have a method or estimating streamflow velocity in a cross-section

literally without having to get our feet wet.

MONTE CARLO SIMULATIONS

Now that we have chosen (albeit qualitatively) the Pareto as the distribution that

best describes our data, we need to ensure that this is the correct choice. The test that we

propose involves the use of the parameter values that were generated from the L-moment

analyses just discussed for each cross-section. For each cross-section, we will use the

Pareto distribution quantile equation (Eqn. 6) in a Monte Carlo experiment that will

attempt to re-create our observed data. 99 synthetic velocity distributions were generated

for each sample cross-section for which we have velocity distribution data. Then each of

these simulation distributions was run through a spreadsheet that calculated the Lmoments and parameters () of that simulation of velocity measurements.

Because we know that these simulations follow a Pareto distribution, we know as

well that the calculated L-skew and L-kurtosis values for these simulations should plot on

the Pareto distribution on an L-moment ratio diagram. If we then plot our observed Lskew and L-kurtosis values on this graph as well, we can get an idea of how reasonable

our assumption was about our choice of probability distribution. That is to say, if our

observed values fall within the cloud generated by a random Monte Carlo simulation of

the Pareto distribution, we can assume that the probability is high that we have made the

right choice. Likewise, if our observed values do not fall within this cloud, we know that

the Pareto distribution probably does not describe our data very well.

This test has been performed for the 26 selected natural cross-sectional velocity

distributions. The results of these efforts are included in Appendix 1. These graphs show

that the Monte Carlo simulations tended to fall on or about the GPA line as expected.

The grouping of these 100 simulations varies widely, owing to the variation in the sample

size. Larger sample sizes tended to be more closely grouped than smaller sample sizes,

showing perhaps that larger sample sizes result in less bias. Also plotted on these

diagrams are the L-skew and L-kurtosis values from the observed velocity distributions.

If the observed velocity distributions were indeed distributed in an exact Pareto manner,

we would expect the plot of L-skew and L-kurtosis values estimated from the L-moments

to fall on the line of the GPA.

In fact, many of these observed velocity distribution L-skew/L-kurtosis values fall

either within the cloud of Monte Carlo generated values, or near to it. This is

encouraging because this means that we have at hand a simple method of checking the

validity of assuming a homogeneous sample in regional frequency analysis. The CRREL

flume study data (Appendix 2) also show these characteristics. One flume cross-section

(T31600ML) showed anomalous behavior in that the observed L-skew and L-kurtosis

values did not plot inside or near the cloud of Monte Carlo generated values. This crosssection had a moderate number of sampled observations (N = 100), but had the largest

discharge (1852.96 GPM), high slope (= 0.010), and a relatively low roughness (mesh),

which perhaps contributed to this anomalous behavior. Because this anomaly is so

blatant, we may choose not to include this sample due to its “heterogeneous” behavior.

Other samples where the observed L-moments plotted either outside the cloud or on its

periphery should be further scrutinized for heterogeneous characteristics. These

heterogeneous characteristics may quite possibly show up in the regression analysis of

the channel geometry to estimate the parameters of the Pareto distribution.

MULTIPLE LINEAR REGRESSION ANALYSIS

It was hypothesized that alpha, kappa, and xi are related to channel geometry

and/or other factors. One method of relating these parameters to channel geometry is

through the application of multiple linear regression. The average values of the Pareto

distribution parameters (and that were calculated from the Monte Carlo

simulation were regressed against channel geometry information for each CRREL crosssection using a step-backward approach. Haire’s data (1995) is listed for these selected

cross-sections in Table 1. Again, only samples with non-negative velocities were used,

so N = 13 samples. We did not use Manning’s n or average velocity as regressors

Width

[ft]

T3

T3

T3

T3

T3

T3

T3

T3

T3

T3

T3

T3

T3

100 AH

100 AL

100 TL

1600 AH

1600 AL

1600 ML

1600 TH

1600 BL

900 AL

900 BL

900 MH

900 ML

900 TL

4

4

4

4

4

4

4

4

4

4

4

4

4

Slope

0.010

0.002

0.002

0.010

0.002

0.002

0.010

0.002

0.002

0.002

0.010

0.002

0.002

Area

[ft^2]

1.23

1.36

1.43

2.82

3.46

1.83

3.19

3.56

2.78

2.83

0.94

1.37

2.96

Hydraulic

Radius

[ft]

0.27

0.29

0.30

0.52

0.60

0.37

0.57

0.62

0.52

0.52

0.21

0.29

0.54

Depth

[ft]

0.31

0.34

0.36

0.70

0.86

0.46

0.80

0.89

0.69

0.71

0.24

0.34

0.74

d50

[mm]

0.21

0.21

0.67

0.21

0.21

0.02

0.67

0.67

0.21

0.67

0.02

0.21

0.67

Manning's

n

0.31

0.18

0.17

0.07

0.04

0.02

0.09

0.05

0.05

0.06

0.02

0.02

0.06

Q

GPM

Average

Velocity

[ft/s]

110.68

94.78

89.30

1567.18

1593.01

1852.96

1826.42

1640.59

868.66

684.83

1055.35

1008.57

943.64

Table 1. Channel geometry of CRREL simulations (Haire, 1995).

because velocity is implicit in their calculation and this would introduce multicollinearity

into our model.

Step-backward regression analysis is a process whereby regressors are eliminated

in a sequential manner until the model contains only statistically significant regressors. It

is up to the modeler to specify what significance level they wish to use. In our example

we originally used a 90% significance level. The results of these regression analyses are

0.20

0.16

0.17

1.34

1.09

2.07

1.14

1.01

0.79

0.67

2.36

1.57

0.75

included in Appendix 3. We can see from the studentized residuals that several of the

residuals are potentially outliers. Studentized residuals are distributed such that they

have a zero mean and a unit standard deviation. Therefore, we can use the empirical rule

to estimate how likely that observation could occur by chance. A studentized residual of

greater than about 2.5 is usually indicative of an outlier. Although none of the

studentized residuals in our analyses have values greater than 2.5, several have residuals

that approach 2.0, and these have been highlighted in yellow. These observations with

large studentized residuals may be outliers, but more statistical work would need to be

done to prove this, perhaps by using SAS or some other statistical package to analyze

leverage and /or influence of the observation. A thorough discussion of these statistical

tests is found in Freund and Wilson (1998).

We can see from the multiple linear regression analysis at the 90% significance

level that all three parameters could be regressed against various combinations of channel

geometry. This is proven by examination of the F-test values for each regression

analysis. Since the “significance F” values are less than 0.1, we know that each of these

regression analyses are significant at the 90% level. Once we know that the regression is

valid, we can obtain estimates of the values for each parameter at the 90% significance

level by using the coefficients of each regressor in a linear manner:

= 6.871 + 43.8(AREA) – 52.3(H.RADIUS) –146.7(DEPTH) + .001(Q)

[Eqn. 8],

.90 = 104.4 – 259.9(H.RADIUS) + .054(Q)

[Eqn. 9],

.90 = 18.816 – 10.74(AREA) + .018(Q)

[Eqn. 10].

Appendix 4 shows the results of these analyses in application. These graphs plot

the observed velocities against the predicted velocities, a one-to-one line for the observed

values, and an average velocity measurement taken from the observed data. We used the

estimated parameter values to calculate a Pareto distribution of simulated velocities. We

then compared how these parameters fared at predicting velocity distributions by

comparing the two plots.

We can immediately see that the fits are fair at best. Interestingly, the velocity

distributions generated through the estimated parameters for higher discharges seem to fit

best (See plots for T3 1600 AH, T3 900 ML, T3 1600 TH, T3 1600 ML). The plots for

the smaller discharges don’t appear to fit very well. The results for this were surprising

because of the previous discussion on Monte Carlo simulations and graphing of the Lmoment diagrams in Appendix 2. The only “stream” that was expected to not do well

was T3 1600 ML because it’s observed L-moments plotted outside the cloud of

numerically simulated L-moments.

In many cases, the general slope of the distributions generated by the regression

relationships is the same as that of the observed distribution, but there appears to be a

translation of the curve. By translation, we mean that the curve appears to need to be

shifted left or right to achieve the best fit. At this point the reason for this is unknown.

However, the fit is quite good in several of the cross-sections.

With these conundrums in mind, we opted for a recalculation of the regression for

the parameter estimations at the 95% significance level. Perhaps with these “stricter”

stipulations, we could obtain more rigorous estimations of the parameters.

Unfortunately, at the 95% significance level, we were unable to find any

combination of regressors through the step-backward method that was statistically

significant for Kappa or Alpha. Examination of the regression analyses (Appendix 5)

shows us that the regression for Xi is significant at the 95% level (F-test), and this

provides us with a more rigorous method of estimating this parameter. The equation for

Xi at the 95% significance level is:

0.95 18.816 10.742( AREA) .018(Q) ,

[Eqn. 10]

which is the same equation that we generated at the 90% significance level. Therefore,

we propose to use the equations generated from the multiple linear regression model at

the 90% significance level.

Table 2 further re-iterates the fact that this model doesn’t predict observed

velocities for all the streams, particularly at low flows. Indeed, it predicted velocities that

were up to 400 times the observed velocities. However, the model does perform well for

larger discharges, achieving a lowest % change of about 3.4. The average % change

between the observed and predicted velocities was on the order of 97.4 %. The average

percent change for the largest discharges (T3 1600 XX) was much better (about 14.3 %).

Average Velocity

Observed Estimated Difference % Change

T3 900 BL

T3 900 AL

T3 900 TL

T3 900 MH

T3 1600 BL

T3 900 ML

T3 1600 AH

T3 1600 ML

T3 1600 AL

T3 1600 TH

T3 100 AH

T3 100 AL

T3 100 TL

22.53

24.65

23.69

76.85

31.47

51.10

42.22

65.17

34.66

36.78

6.49

5.15

5.10

5.64

11.92

13.57

53.69

26.64

46.12

38.39

67.38

36.41

51.31

24.33

21.01

25.62

-16.89

-12.73

-10.12

-23.16

-4.83

-4.98

-3.83

2.21

1.76

14.53

17.84

15.86

20.52

-75.0

-51.7

-42.7

-30.1

-15.3

-9.7

-9.1

3.4

5.1

39.5

274.9

307.9

402.4

Table 2. Comparison of model estimates of average

velocities with observed velocities.

We find that for moderate discharges (T3 900 XX), the average percent deviation was

about 42 %, but for lower discharges (T3 100 XX), this percentage is significantly larger

(328 %).

FUTURE STUDIES

Clearly, this study was shown that velocity distributions are difficult to model.

One of the key tenets of regional frequency analysis is that the data sample must form a

homogeneous region (Hosking and Wallis, 1998). When the data is heterogeneous, as is

probably the case in this example, the results of the analyses can be quite spurious. This

study has sparked much interest in this researcher, and the employment of the Monte

Carlo experimentation was very interesting. Future research could include examination

of some of the other probability distributions that appeared to fit the cloud of L-moments,

such as the LN3, PE3, or segregating the sample into homogeneous “regions”. These

regions need not be in a spatial sense, but more as a sense of the characteristics of the

distribution of the data. We can quickly examine this by looking at Appendix 6, which

is essentially Figure 1 without the natural stream samples. This L-moment diagram

shows us how varied the CRREL data set is, which is a good indication that this data set

is not homogeneous. We can clearly see that the data doesn’t fit the Pareto very well,

although some points are close to the line. The average values of the L-moments for this

data set fall into a probabilistic "no man's land," one that is not really modeled by any one

distribution. Perhaps the Wakeby five parameter or the Kappa distribution would do well

with these data because these distributions plot as an area on this type of diagram, and

this might fit our cloud better.

In particular, the three points near the apex of the GPA parabola appear to fit very

well. These points correspond to samples T3 900 BL, T3 1600 AH, and T3 1600 AL.

However, even though these observations should be distributed in a quasi-Pareto manner,

examination of these three samples in Appendix 4 shows that only predicted values T3

1600 AH appears to fit the observed data well.

One interesting thing to note about this is that when we compare the graph of

Appendix 6 with the individual graphs in Appendix 2, we can get an idea of the scale of

the graphs. A point that appears to be "close" to the Pareto in the graphs of Appendix 2

may in reality be quite far from the Pareto distribution, and in fact may well be modeled

better with another distribution. T3 1600 ML, which represents our "best fit" for the

average velocity (% change = 3.4) falls not on the Pareto distribution, but almost directly

on the LN3 plot. The more one looks at this graph, the more one realizes that these data

have serious homogeneity issues…for example, T3 900 BL almost falls directly on the

GPA line, yet still has a % error of about 75%.

For these reasons among others, we recommend that future research be directed at

the proper identification of homogeneity, because this seems to be a central issue in the

use of L-moments. Mis-identification of a probability distribution yields erroneous

estimations of its parameters, which in turn generates incorrect estimates of its

parameters. If we have values for the parameters that are not correct, we obviously

cannot use regression analysis to relate these parameters to the channel geometry or what

have you. Another avenue that will probably be worth exploring would be in trying to

find other factors that could be correlated with the parameter estimates of the chosen

distribution. These would have to be measured in the field, but if we can increase the R2value for the regression equations, then we will also increase our ability to accurately

predict natural velocity distributions in natural stream channels. A final suggestion

would be to investigate the possibility of a non-linear regression model. The estimation

of velocity, although crucial to much of quantitative hydrology will have to wait until we

have a better way of estimating the probability distributions of a given sample population,

and the parameters of such a probability distribution.

REFERENCES:

Ben-Zvi, A. and B. Azmon. 1997. Joint Use of L-moment Diagram and goodness-of-fit

Test: A Case Study of Diverse Series. Journal of Hydrology, 198: 245-259.

Chang, H. H. 1998. Fluvial Processes in River Engineering. Krieger Publishing

Company, Malabar, FL.

Dingman, S. L. 1984. Fluvial Hydrology. W.H. Freeman and Company. New York.

Dingman, S. L. 1989. Probability Distribution of Velocity in Natural Channel CrossSections. Water Resources Research, 25(3), pp. 509-518.

Dingman, S. L. 1994. Physical Hydrology. Prentice Hall. Upper Saddle River, NJ.

Freund, R. J., and W. J. Wilson. Regression Analysis: Statistical Modeling of a

Response Variable. Academic Press. Boston, MA.

Greenwood, J. A. et al. 1979. Probability Weighted Moments: Definition and Relation

to Parameters of Several Distributions Expressable in Inverse Form. Water

Resources Research, 15(5), pp 1049-1054.

Haire, P. K. 1995. “The Ability of Various Models to Describe Velocity Distibutions in

Arbitrarily Selected Natural Stream Channels.” Unpublished Masters Thesis,

UNH, Durham, NH.

Hosking, J. R. M. and J. R. Wallis. 1987. Parameters and Quantile Estimation for the

Generalized Pareto Distribution. Tectnometrics, 29: 339-349.

Hosking, J. R. M. and J. R. Wallis. 1997. Regional Frequency Analysis: An Approach

Based on L-Moments. Cambridge University Press. New York.

Sankarasubramanian, A. and K. Srinivasan. 1999. Investigation and Comparison of

Sampling Properties of L-Moments and Conventional Moments. Journal of

Hydrology, 218, 13-34.

Van Gelder, P. and N. M. Neykov. 1998. Regional Frequency Analysis of Extreme

Water Levels Along the Dutch Coast using L-Moments: A Preliminary Study.

Delft University of Technology, Delft, Netherlands.

Vogel, R. M. and T. A. McMahon, and F. H. S. Chiew. 1993. Floodflow frequency

model selection in Australia. Journal of Hydrology, 146: 421-449.

Vogel, R. M. and N. M. Fennessey. 1993. L-moment Diagrams should replace product

moment diagrams. Water Resources Research, 29: 1745-1752.

Walpole, R. E. 1982. Introduction to Statistics. MacMillan Publishing Company, Inc.

New York.