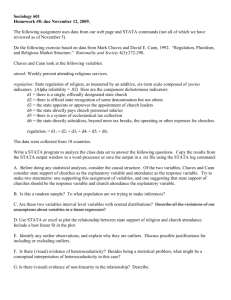

W&L Guide to Stata - The Washington and Lee University Library
