chp6_sec3_a_ans


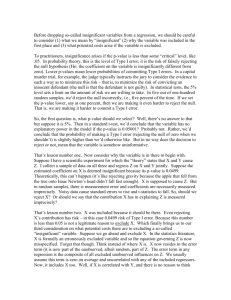

Chapter 6 Section 3 Homework A

6.86 A role as a statistical consultant. You are the statistical expert for a graduate student planning her PhD research. After you carefully present the mechanics of significance testing, she suggests using $\alpha = 0.25$ for the study because she would be more likely to obtain statistically significant results and she really needs significant results to graduate. Explain in simple terms why this would not be a good use of statistical methods.

A significance level of 0.25 does not demand a result rare enough to count as statistically significant. If the p-value were 0.20, for example, the result would be statistically significant at the 25% level, as the student wants. But this would mean that the study's result, or a more extreme one, would occur about 20% of the time when the null hypothesis (the view we are trying to show is not correct) is true. Such a result is not rare enough for anyone to say that it suggests the null is not correct.

6.87 What do you know? A research report described two results that both achieved statistical significance at the 5% level. The P-value for the first is 0.049; for the second it is 0.00002. Do the P-values add any useful information beyond that conveyed by the statement that both results are statistically significant? Write a short paragraph explaining your views on this question.

While both results are statistically significant, the result with p-value 0.00002 leaves no doubt as to its “statistical significance” because of its rarity, whereas a p-value of 0.049, while rare, is not that uncommon. What this problem is trying to bring to mind is what the p-value is supposed to tell you: the amount of evidence we have gathered against the null. The p-value of 0.049 is right at the point where we should start thinking about rejecting the null (the most likely recommendation is for someone else to repeat the experiment, because the p-value of 0.049 looks promising).
The p-value of 0.00002, by contrast, leaves no doubt (barring some error in how the procedure was carried out) that the null hypothesis is very, very unlikely to be correct.

6.88 Interpreting the P-value. A P-value of 0.90 is reported for a significance test for a population mean. Interpret this result.

A picture is worth a thousand words here. Suppose the null value is 0 and the alternative is $H_a: \mu < 0$. Then a p-value of 0.9 means that $\bar{x} > 0$, which is the opposite of what we wanted to show.

[Figure: number line marking the null value 0 and the sample mean $\bar{x}$, with the labels “Actual location of the sample mean” and “The sample mean must land here to have evidence against null”.]

6.89 What a test of significance can answer. Explain whether a test of significance can answer each of the following questions.

(a) Is the sample or experiment properly designed?

I am interpreting this question as, “Can a p-value tell you if the experiment is properly designed?” The response is no. I judge the design by looking at my sample size, how the data are going to be gathered, the size of the significance level, etc.

(b) Is the observed effect compatible with the null hypothesis?

I am interpreting this question as, “Can I use the p-value to determine if the model, using the null hypothesis, is plausible?” The answer is yes; that is the whole point of the test. We assume the null is correct, and if our result differs from the expectation (the effect), we determine whether that difference is small enough to say our null value is plausible (compatible with the null hypothesis, no evidence against the null). If the difference is large enough, we may say that the null is not correct, which would explain why we are seeing such a large difference.

(c) Is the observed effect important?

I am interpreting this question as, “Can I use the p-value to determine if the result is an important one?” We spent time discussing this in class, and the answer is no.

6.90 Statistical versus practical significance. A study with 7500 subjects reported a result that was statistically significant at the 5% level.
Explain why this result might not be particularly large or important.

If the sample size is 7500, then the variability of the sample mean $\bar{x}$ will be very small: $\sigma/\sqrt{7500}$. Suppose that the null value is $\mu = 75$, but in reality (not known to us) $\mu = 74.5$. Then truly $\mu \neq 75$, but let’s say that a change of $\mu$ to 74.5 is not of any importance. You can see from the resulting graph that the p-value from any sample in such a scenario would be nearly 0, a statistically significant result. But the actual difference is very small, roughly 0.4 to 0.6, so it “might not be particularly large or important.”

6.91 More on statistical versus practical significance. A study with 14 subjects reported a result that failed to achieve statistical significance at the 5% level. The P-value was 0.052. Write a short summary of how you would interpret these findings.

The author wants people to understand that if you set the significance level at 5% but your p-value is 0.052, or 5.2%, then technically speaking the result is not statistically significant. However, that is the “textbook” answer. The reality is more complex. You may decide that 5.2% is convincing enough to reject the null; typically you would base this decision on some other evidence as well. In other words, the 5% serves as a guideline, but why would a p-value of 4.99% be enough to reject the null and a p-value of 5.01% not be? For practical purposes the differences are negligible.

6.92 Vitamin C and colds. In a study to investigate whether vitamin C will prevent colds, 400 subjects are assigned at random to one of two groups. The experimental group takes a vitamin C tablet daily, while the control group takes a placebo. At the end of the experiment, the researchers calculate the difference between the percents of subjects in the two groups who were free of colds. This difference is statistically significant (P = 0.03) in favor of the vitamin C group. Can we conclude that vitamin C has a strong effect in preventing colds?
Explain your answer.

Again, the effect is the actual difference that we observe. At the end of the experiment, the researchers calculate the difference between the percents of subjects in the two groups who were free of colds. So is that difference 2%, 5%, or something else? As in the explanation for problem 6.90, because the sample size is large, the actual effect may not need to be large in order to be statistically significant.

6.93 How far do rich parents take us? How much education children get is strongly associated with the wealth and social status of their parents, termed "socioeconomic status," or SES. The SES of parents, however, has little influence on whether children who have graduated from college continue their education. One study looked at whether college graduates took the graduate admissions tests for business, law, and other graduate programs. The effects of the parents' SES on taking the LSAT test for law school were "both statistically insignificant and small."

(a) What does "statistically insignificant" mean?

Statistically significant means that if what we believed when we ran the test is true, then the summary of the data values we sampled is very rare, not what we were expecting. So did we just get unlucky and get a rare result while our belief is still correct? When we call a result statistically significant, we tell the reader that we do not believe chance (getting unlucky and witnessing a rare event) is the explanation, but rather that we got a fairly common value in a situation where what we believed is not true. Statistically insignificant says that we did observe a difference between what we were expecting and what we got from the data, but chance alone can explain the observed difference. That is, the observed difference is not that unusual.

(b) Why is it important that the effects were small in size as well as insignificant?

The effect relates to the actual difference in measurements between what we expected and what we observed.
When you consider the actual worth of the numbers, the differences were small; that is, the effects were small in size. What is the difference between “a” and “b”? In “a” you use probability to measure how far two numbers are from each other. In “b” you use the worth of the numbers themselves, not their probabilities.

6.95 Coaching for the SAT. Every user of statistics should understand the distinction between statistical significance and practical importance. A sufficiently large sample will declare very small effects statistically significant. Let us suppose that SAT Mathematics (SATM) scores in the absence of coaching vary Normally with mean $\mu = 505$ and $\sigma = 100$. Suppose further that coaching may change $\mu$ but does not change $\sigma$. An increase in the SATM score from 505 to 508 is of no importance in seeking admission to college, but this unimportant change can be statistically very significant. To see this, calculate the P-value for the test of $H_0: \mu = 505$ against $H_a: \mu > 505$ in each of the following situations:

(b) By the next year, the service has coached 1000 students; their SATM scores average $\bar{x} = 508$.

(c) An advertising campaign brings the number of students coached to 10,000; their average score is $\bar{x} = 508$.

6.102 More on searching for significance. You perform 1000 significance tests using $\alpha = 0.05$. Assuming that all null hypotheses are true, about how many of the test results would you expect to be statistically significant? Explain how you obtained your answer.

0.05(1000) = 50. We would expect about 50 of these tests to be labeled “statistically significant” out of 1000 attempts.

6.103 Interpreting a very small P-value. Assume that you are performing a large number of significance tests. Let n be the number of these tests. How large would n need to be for you to expect about one P-value to be 0.00001 or smaller? Use this information to write an explanation of how to interpret a result that has P = 0.00001 in this setting.
The point of this question is to remind you what is assumed to be true throughout the significance test: the null is assumed to be correct, and thus we act as if it is correct. So, if we assume the null is correct, what does a p-value of 0.00001 mean? You would see a value this extreme about 1 time in 100,000 observations. So you would need to calculate 100,000 different sample means, on average, in the hope of witnessing the mean that created the p-value of $0.00001 = \frac{1}{100{,}000}$.

So we are left with a dilemma. Does the mean we calculated mean we hit the jackpot (that is, $H_0$ is correct but we got very lucky by obtaining this sample mean value)? Or do we believe that the reason we witnessed this mean is that the correct mean is some other value for which our sample mean value is actually very common? Most people would opt to believe the second statement.