Lab4 - Regression

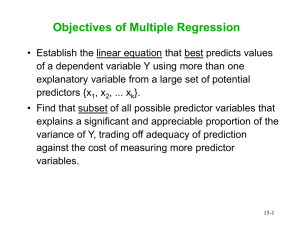

Statistics – Spring 2008
Lab #4 – Regression

Defined: A model for predicting one variable from other variable(s).

Variables: IVs are continuous, DV is continuous.

Relationship: Relationship amongst variables.

Example: Can we predict height from weight (or weight from height, or weight from multiple variables, etc.)?

Assumptions: Normality. Linearity. Multicollinearity.

1. Graphing - Scatterplot

The first step of any statistical analysis is to plot the data. If you are only conducting bivariate regression, the scatterplot is the same as for correlation; see “Lab 3 – Correlation” for how to create a scatterplot. If you have three variables, you can create a 3D scatterplot, and the instructions below are for a 3D scatterplot. If you have more than three variables, you cannot create a scatterplot because it is impossible to view a plot in four or more dimensions.

How do I graph a scatterplot?

1. Select Graphs --> Legacy Dialogs --> Scatter
2. Click “3D Scatter”, and “Define”
3. Move the appropriate variables into the “Y axis”, “X axis”, and “Z axis”
4. Click OK.

Output below is for “commit1”, “commit2”, and “commit3”.

2. Assumptions: Normality. Linearity. Multicollinearity

For “Normality” and “Linearity”, see “Lab 3 – Correlation”. For Multicollinearity, see below.

KEEP IN MIND THAT MULTICOLLINEARITY ONLY APPLIES TO MULTIPLE REGRESSION.

What is Multicollinearity?

When predictor variables are highly correlated with one another, you have Multicollinearity.

Why is Multicollinearity a problem?

When variables are highly correlated in a multiple regression analysis, it is difficult to identify the unique contribution of each variable in predicting the dependent variable, because the highly correlated variables are predicting the same variance in the dependent variable. In this situation, the “overall” p-value may be significant while the p-value for each individual predictor is not.

What is “highly” correlated?
Some statisticians say correlations above .70 indicate Multicollinearity, and others say that correlations above .90 indicate Multicollinearity.

What do you do when you have Multicollinearity?

a. Option 1 – Leave as is, and conduct the multiple regression analysis anyway. Multicollinearity only affects the results for the unique effect of each predictor, so if you are only interested in the “overall” effect of the combined predictors, then Multicollinearity is not an issue.
b. Option 2 – Remove one of the variables from the analysis.
c. Option 3 – Create a new “composite” of the highly correlated variables.

How to identify Multicollinearity? You have two approaches.

Approach #1 – Run a correlational analysis and look at the correlations among the predictors.

Approach #2 – SPSS can calculate multicollinearity statistics when you conduct a multiple regression analysis: click “Statistics” and then “collinearity diagnostics.” The output is shown below for the multiple regression analysis that will be conducted later in this document.

Multicollinearity is indicated when Tolerance is below .1 (equivalently, VIF is greater than 10) or when the average VIF is much greater than 1. In this case, there is no multicollinearity.

3. Bivariate Regression

Bivariate regression produces the same result as bivariate correlation. For example, in our dataset we have a variable called “threshold1” which asks: In order to convict a person for a crime, jurors should feel that it is at least _____% likely that the defendant is guilty of the crime.

Is “age” related to this question? A correlational analysis produces the output below, r = .106, p = .059.

Now, let’s answer the same question using regression.

1. Select Analyze --> Regression --> Linear
2. Move “threshold1” into the DV box; move “age” into the IV box.
3. Click OK.

The output below consists of four boxes.

a. Variables Entered/Removed tells you the variables in the analysis and how they were entered into the analysis.
This box will be helpful later when we do multiple regression. Since we are only looking at bivariate regression, this box will always give the IV name and say “enter” for Method.
b. Model Summary gives you R Square, which is the proportion of variance in the DV explained by the IV, R² = .011.
c. ANOVA tells you whether the overall model is significant, p = .059.
d. Coefficients tells you the effect size, beta = .106.
e. Notice how this result is the same as for the correlational analysis above.

WRITE-UP:

a. There was a positive linear relationship between the predictor and outcome variable, β = .11, p = .06. The variance explained by the predictor was 1.1%.
b. Using regression analysis to predict the percentage of guilt jurors feel is necessary to convict a defendant, age was a positive predictor, β = .11, p = .06, that explained 1.1% of the variance.

EVALUATION

a. You evaluate bivariate regression similarly to correlation: look at the direction of the relationship, the size of the relationship, and the p-value of the relationship. See “Lab 3 – Correlation” for more information about interpreting effect size.

4. Multiple Regression

Here are the basic steps involved in multiple regression:

a. First conduct a correlational analysis with all potential variables to find variables to enter into the analysis that are correlated with the DV, but not overly correlated with the other IVs (i.e., to avoid multicollinearity).
b. Instead of, or in addition to, the correlational analysis, some people will enter all potential variables simultaneously into a multiple regression analysis to see which variables produce a unique effect upon the DV, and then conduct another multiple regression analysis with only those variables that produce a unique effect upon the DV.
c. If you have a hypothesis, you conduct multiple regression to test that hypothesis. This is called “confirmatory” analysis because you are determining whether or not your hypothesis is confirmed.
d.
After testing your hypothesis, you can also do “exploratory” analysis to look at different permutations of the variables. It's called “exploratory” analysis because you are exploring the data beyond your initial hypothesis.

For our example, I want to look at the predictors of “threshold1”. I am going to use three predictors: age, sex, and “commit1”.

a. “age” is a predictor because I want to see whether older people believe a higher probability of guilt is necessary to convict a person.
b. “sex” is a predictor because I want to show you that you can enter “categorical” variables into the analysis. However, keep in mind that the categorical variables need to be dichotomous. If you have a categorical variable with more than 2 categories, you need to create “dummy codings” which reduce the categorical variable into a series of dichotomous variables. I explain later in this document how to “dummy code”, but for right now I want to include “sex” as a predictor to show you how you can enter both continuous and dichotomous variables into the same analysis.
c. “commit1” is a predictor because it is theoretically interesting to see how “commit1” is related to “threshold1”.

Multiple Regression

1. Select Analyze --> Regression --> Linear
2. Move “threshold1” into the DV box, and move the three predictors into the IV box
3. Click “Statistics” and “collinearity diagnostics”.
4. Click OK.

The output is described below.

a. Variables Entered/Removed tells you the variables in the analysis and how they were entered into the analysis. We entered all three predictors simultaneously, so the method is “enter”.
b. Model Summary gives you R Square, which is the proportion of variance in the DV explained by the IVs, R² = .061. In other words, all three predictors together account for 6.1% of the variance in the DV. The “Adjusted R Square” corrects for the number of variables in the analysis.
Each predictor explains some variance due to chance, so the more variables in the analysis, the higher the “R Square” due to chance. When you have many variables in the analysis you may want to look at Adjusted R Square instead of R Square.
c. ANOVA tells you whether the overall model is significant, p = .000 (SPSS displays .000; report this as p < .001). Also, if the overall model is significant, then at least one of the individual variables will most likely have a significant relationship to the DV.
d. Coefficients tells you the UNIQUE effect size for each variable. In this case, all three variables uniquely predict the DV.

Age, beta = .111, p = .047

Sex, beta = .172, p = .002

Commit1, beta = .133, p = .017

WRITE-UP:

a. Multiple regression analysis was conducted to predict the percentage of guilt jurors feel is necessary to convict a defendant. Three predictors were entered simultaneously into the analysis: age, gender, and a question asking what percent of defendants brought to trial did in fact commit the crime. The overall variance explained by the three predictors was 6.1%. Each predictor was positively related to the outcome variable: age (β = .11, p = .047), gender (β = .17, p = .002), and percent brought to trial that are in fact guilty (β = .13, p = .017).

EVALUATION

a. You evaluate multiple regression by first looking at the overall model and variance explained.
b. You then evaluate each predictor separately. You evaluate the effect size and p-value just as you would for correlation and bivariate regression, except that with multiple regression the outcome for each predictor is the UNIQUE effect while controlling for the other variables.

5. Dummy coding

Regression analysis can only analyze categorical variables that are dichotomous. If you have a categorical variable with more than 2 categories, you need to convert that categorical variable into a series of dichotomous variables.
For example, in our dataset, the “voter” variable has four categories: republican, democrat, none, other. The first step in creating a dummy coded variable is to pick a baseline group against which to compare the other groups. Let’s use “none” as our baseline since those subjects have indicated no political group membership, while the other three categories have chosen a group membership.

The next step is to determine the number of dichotomous variables you are going to create by subtracting 1 from the total number of categories, which in our case is 4 - 1 = 3.

When creating the first of the three dichotomous variables, you use the “Compute into a different variable” function (see Lab 2 – Descriptives for how to use that function) to assign “0” to the baseline group, and “1” to the first group you want to compare to the baseline, which in our case is 1=republican. For that dichotomous variable, the other categories are assigned “0”, so 0=democrat, 0=other.

For the second dichotomous variable, assign “0” to the baseline group, and “1” to the second group you want to compare to the baseline, which in our case is 1=democrat. For that dichotomous variable, the other categories are assigned “0”, so 0=republican, 0=other.

For the third dichotomous variable, assign “0” to the baseline group, and “1” to the third group you want to compare to the baseline, which in our case is 1=other. For that dichotomous variable, the other categories are assigned “0”, so 0=republican, 0=democrat.

Here is how it is coded:

| | Republican | Democrat | Other | None |
|---|---|---|---|---|
| None-republican (variable1) | 1 | 0 | 0 | 0 |
| None-democrat (variable2) | 0 | 1 | 0 | 0 |
| None-other (variable3) | 0 | 0 | 1 | 0 |

You then enter all three variables into the regression analysis.
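
The lab itself does this coding through the SPSS menus. For readers who want to see the logic written out, the coding scheme above can be sketched in plain Python. This is only an illustration under stated assumptions: the helper name `dummy_code` and the sample responses are hypothetical and are not part of the lab dataset.

```python
# Hypothetical helper (not part of SPSS or the lab files): dummy-code a
# categorical variable against a chosen baseline group.
def dummy_code(values, baseline):
    """Return one 0/1 indicator column per non-baseline category."""
    # Collect the non-baseline categories in order of first appearance.
    categories = []
    for v in values:
        if v != baseline and v not in categories:
            categories.append(v)
    # Each column is 1 where the case belongs to that category, else 0.
    # Baseline cases therefore score 0 on every column.
    return {cat: [1 if v == cat else 0 for v in values] for cat in categories}


# Made-up responses for illustration only.
voter = ["republican", "democrat", "other", "none", "democrat"]
dummies = dummy_code(voter, baseline="none")

for name, column in dummies.items():
    print(f"none-{name}: {column}")
```

With four categories and “none” as the baseline, the sketch yields 4 - 1 = 3 indicator columns, and a case in the baseline group is coded 0 on all three, matching the table above.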