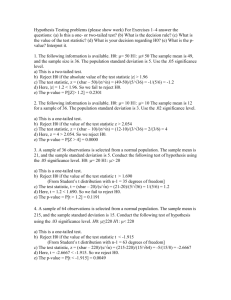

L5: Lecture notes, Two-Sample Problems


Two samples: (in)dependent and

(non)parametric.

Two independent samples, normal model

Comparing the means or variances of two

(sub)populations. We assume we have:

Two independent random samples (sizes m and n) from normally distributed populations:

$X_1, \ldots, X_m$ are independent and $X_i \sim N(\mu_X, \sigma_X^2)$,

$Y_1, \ldots, Y_n$ are independent and $Y_j \sim N(\mu_Y, \sigma_Y^2)$.

The sample means and sample variances are denoted by $\bar{X}$, $\bar{Y}$ and $S_X^2$, $S_Y^2$, resp. The observed values are denoted by $\bar{x}$, $\bar{y}$ and $s_X^2$, $s_Y^2$.

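$S_X^2$ and $S_Y^2$ are unbiased estimators of $\sigma_X^2$ and $\sigma_Y^2$: $E(S_X^2) = \sigma_X^2$ and $E(S_Y^2) = \sigma_Y^2$.

$\bar{X}$ and $\bar{Y}$ are unbiased estimators of $\mu_X$ and $\mu_Y$, so $\bar{X} - \bar{Y}$ is an unbiased estimator of $\mu_X - \mu_Y$.

$\bar{X}$ and $\bar{Y}$ are independent, so

$$\mathrm{var}(\bar{X} - \bar{Y}) = \mathrm{var}(\bar{X}) + \mathrm{var}(\bar{Y}) = \frac{\sigma_X^2}{m} + \frac{\sigma_Y^2}{n}.$$

So $\bar{X} - \bar{Y} \sim N\left(\mu_X - \mu_Y,\ \frac{\sigma_X^2}{m} + \frac{\sigma_Y^2}{n}\right)$, or:

$$Z = \frac{(\bar{X} - \bar{Y}) - (\mu_X - \mu_Y)}{\sqrt{\sigma_X^2/m + \sigma_Y^2/n}} \sim N(0, 1).$$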

Based on this variable and its distribution we can derive a confidence interval for $\mu_X - \mu_Y$ and a test statistic for a specific value of $\mu_X - \mu_Y$. But this is only usable if $\sigma_X^2$ and $\sigma_Y^2$ are known. Usually this is not the case.

If we use $S_X^2$ and $S_Y^2$ as estimators of $\sigma_X^2$ and $\sigma_Y^2$, then we apply the Student’s t-distribution. We distinguish three cases (assumptions):


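1. $\sigma_X^2$ and $\sigma_Y^2$ are unknown, but equal ($\sigma_X^2 = \sigma_Y^2 = \sigma^2$): the best estimator of the common variance $\sigma^2$ is $S^2$ (called the pooled sample variance):

$$S^2 = \frac{(m-1)S_X^2 + (n-1)S_Y^2}{m+n-2}.$$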

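Similar to the one-sample problem we derive:

$$(1-\alpha)100\%\text{-CI}(\mu_X - \mu_Y) = \left(\bar{x} - \bar{y} - c\sqrt{s^2\left(\tfrac{1}{m} + \tfrac{1}{n}\right)},\ \ \bar{x} - \bar{y} + c\sqrt{s^2\left(\tfrac{1}{m} + \tfrac{1}{n}\right)}\right),$$

where $s^2 = \frac{(m-1)s_X^2 + (n-1)s_Y^2}{m+n-2}$ and c from the t(m+n-2)-table such that $P(T_{m+n-2} \ge c) = \alpha/2$.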


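The test statistic for the test on $H_0: \mu_X = \mu_Y$ is

$$T = \frac{\bar{X} - \bar{Y}}{\sqrt{S^2\left(\frac{1}{m} + \frac{1}{n}\right)}},$$

where $S^2$ is the pooled sample variance; $T \sim t(m+n-2)$ if $H_0$ is true.

$H_1$ can be one-sided: $\mu_X > \mu_Y$ or $\mu_X < \mu_Y$, or two-sided: $\mu_X \neq \mu_Y$.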
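As a rough illustration of case 1, the sketch below computes the pooled sample variance, the t statistic and the confidence interval, assuming the usual pooled formula $s^2 = \frac{(m-1)s_X^2 + (n-1)s_Y^2}{m+n-2}$. The function name `pooled_t` and the data are hypothetical. The critical value c is passed in from a t-table, because the Python standard library has no t quantile function.

```python
import math
from statistics import mean, variance

def pooled_t(x, y, c):
    """Pooled two-sample t statistic and CI for mu_X - mu_Y.

    c: critical value from the t(m+n-2) table with P(T >= c) = alpha/2.
    """
    m, n = len(x), len(y)
    # Pooled sample variance: weighted average of the two sample variances.
    s2 = ((m - 1) * variance(x) + (n - 1) * variance(y)) / (m + n - 2)
    se = math.sqrt(s2 * (1 / m + 1 / n))
    diff = mean(x) - mean(y)
    t = diff / se                        # test statistic for H0: mu_X = mu_Y
    ci = (diff - c * se, diff + c * se)  # (1 - alpha)100% confidence interval
    return s2, t, ci

# Hypothetical data, for illustration only.
x = [12.1, 11.4, 13.0, 12.7, 11.9]
y = [10.8, 11.2, 10.1, 11.5]
# 2.365 is the t(7) table value for alpha = 0.05 (m + n - 2 = 7).
s2, t, ci = pooled_t(x, y, c=2.365)
print(s2, t, ci)
```

For a two-sided test at level α, reject $H_0$ if |t| ≥ c, which is the same decision as checking whether 0 lies outside the interval.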

2. $\sigma_X^2$ and $\sigma_Y^2$ are unknown and different:


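Test statistic:

$$T = \frac{\bar{X} - \bar{Y}}{\sqrt{S_X^2/m + S_Y^2/n}},$$

which is approximately t(df)-distributed if $H_0: \mu_X = \mu_Y$ is true. Here df is the number of degrees of freedom, which lies between min(m-1, n-1) and m + n - 2. Computer software, like SPSS, computes df; without software we use df = min(m-1, n-1).

$$(1-\alpha)100\%\text{-CI}(\mu_X - \mu_Y) = \left(\bar{x} - \bar{y} - c\sqrt{\frac{s_X^2}{m} + \frac{s_Y^2}{n}},\ \ \bar{x} - \bar{y} + c\sqrt{\frac{s_X^2}{m} + \frac{s_Y^2}{n}}\right),$$

where df = min(m-1, n-1) and c is such that $P(T_{df} \ge c) = \alpha/2$.

Note: df = min(m-1, n-1) is a “safe” estimate of the real number: it gives a critical value c that is at least as large as the one for the real df. That is why this is called “the conservative method”.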
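The sketch below illustrates case 2: it computes the unpooled statistic $(\bar{x} - \bar{y})/\sqrt{s_X^2/m + s_Y^2/n}$ together with two choices of df. The conservative value min(m-1, n-1) is the one from these notes. The Welch-Satterthwaite approximation is an assumption about what software typically reports; the notes do not say which formula SPSS uses. The function name `unequal_var_t` and the data are hypothetical.

```python
import math
from statistics import mean, variance

def unequal_var_t(x, y):
    """t statistic for unequal variances, with conservative and approximate df."""
    m, n = len(x), len(y)
    vx, vy = variance(x) / m, variance(y) / n   # s_X^2/m and s_Y^2/n
    t = (mean(x) - mean(y)) / math.sqrt(vx + vy)
    df_conservative = min(m - 1, n - 1)
    # Welch-Satterthwaite approximation (assumed software default, see lead-in).
    df_approx = (vx + vy) ** 2 / (vx ** 2 / (m - 1) + vy ** 2 / (n - 1))
    return t, df_conservative, df_approx

# Hypothetical data, for illustration only.
x = [5.1, 4.8, 5.6, 5.0, 4.9, 5.3]
y = [6.9, 4.2, 7.8, 5.5]
t, df_cons, df_approx = unequal_var_t(x, y)
print(t, df_cons, df_approx)
```

The approximate df always falls between min(m-1, n-1) and m + n - 2, so looking up c with the conservative df never gives a smaller critical value.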

3. Large m and n (m > 30 and n > 30):

Use the N(0, 1)-distribution as an


approximation, even if the populations are

not normally distributed (robustness!)

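Testing the equality of expectations for m > 30 and n > 30: if $H_0: \mu_X = \mu_Y$ is true, then

$$Z = \frac{\bar{X} - \bar{Y}}{\sqrt{S_X^2/m + S_Y^2/n}}$$

is approximately N(0, 1)-distributed.

And (also for large m and n):

$$(1-\alpha)100\%\text{-CI}(\mu_X - \mu_Y) = \left(\bar{x} - \bar{y} - c\sqrt{\frac{s_X^2}{m} + \frac{s_Y^2}{n}},\ \ \bar{x} - \bar{y} + c\sqrt{\frac{s_X^2}{m} + \frac{s_Y^2}{n}}\right),$$

where c is such that P(Z ≥ c) = α/2.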
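For large samples the whole procedure can be done with the standard library, since `statistics.NormalDist` provides the normal cdf and quantiles. The sketch below is one possible implementation, assuming the statistic $z = (\bar{x} - \bar{y})/\sqrt{s_X^2/m + s_Y^2/n}$; the function name `large_sample_z` and the data are hypothetical.

```python
import math
from statistics import NormalDist, mean, variance

def large_sample_z(x, y, alpha=0.05):
    """Approximate z-test and CI for mu_X - mu_Y when m > 30 and n > 30."""
    m, n = len(x), len(y)
    se = math.sqrt(variance(x) / m + variance(y) / n)
    diff = mean(x) - mean(y)
    z = diff / se
    c = NormalDist().inv_cdf(1 - alpha / 2)           # P(Z >= c) = alpha/2
    p_two_sided = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return z, p_two_sided, (diff - c * se, diff + c * se)

# Hypothetical data with m = 40 and n = 36, for illustration only.
x = [20 + (i % 7) for i in range(40)]
y = [21 + (i % 5) for i in range(36)]
z, p, ci = large_sample_z(x, y)
print(z, p, ci)
```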

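Testing the equality of variances (F-test for the ratio $\sigma_X^2 / \sigma_Y^2$; SPSS reports Levene’s test for the same null hypothesis):

We test $H_0: \sigma_X^2 = \sigma_Y^2$, that is, $H_0: \sigma_X^2 / \sigma_Y^2 = 1$.

$H_1$ can be $\sigma_X^2 > \sigma_Y^2$, $\sigma_X^2 < \sigma_Y^2$ or $\sigma_X^2 \neq \sigma_Y^2$.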


The test statistic is the ratio of the sample variances, which (if $H_0$ is true) has a so-called Fisher distribution with m - 1 degrees of freedom in the numerator and n - 1 degrees of freedom in the denominator.

Notation: $T = \dfrac{S_X^2}{S_Y^2} \sim F_{m-1,\,n-1}$

Note 1: If H0 is true T will have values near 1.

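Note 2: The Fisher table contains values c, such that $P(F_{m-1,\,n-1} \ge c) = \alpha$.

c in this equation is the critical value for the right-sided alternative $H_1: \sigma_X^2 > \sigma_Y^2$.

The critical value c for the left-sided alternative $H_1: \sigma_X^2 < \sigma_Y^2$ can be found using:

$$P(F_{m-1,\,n-1} \le c) = P\left(F_{n-1,\,m-1} \ge \frac{1}{c}\right) = \alpha$$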


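Two-sided test for $H_0: \sigma_X^2 = \sigma_Y^2$, $H_1: \sigma_X^2 \neq \sigma_Y^2$.

If T ≤ c1 or T ≥ c2, then reject H0, where:

$P(F_{m-1,\,n-1} \le c_1) = \alpha/2$ and $P(F_{m-1,\,n-1} \ge c_2) = \alpha/2$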
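The two-sided decision rule can be sketched as follows, assuming the test statistic is the ratio $s_X^2 / s_Y^2$ and that the left critical value is obtained as the reciprocal of a right-tail table value with the degrees of freedom swapped. The function name `f_ratio_test` and the data are hypothetical; both table values are passed in because the standard library has no F quantile function.

```python
from statistics import variance

def f_ratio_test(x, y, c_right, c_right_swapped):
    """Two-sided F-test decision for H0: sigma_X^2 = sigma_Y^2.

    c_right:         table value with P(F(m-1, n-1) >= c_right) = alpha/2
    c_right_swapped: table value with P(F(n-1, m-1) >= c_right_swapped) = alpha/2
    """
    t = variance(x) / variance(y)   # ratio of the sample variances
    c1 = 1 / c_right_swapped        # left critical value via the reciprocal rule
    c2 = c_right                    # right critical value
    reject = t <= c1 or t >= c2
    return t, (c1, c2), reject

# Hypothetical data with m = n = 5, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 6, 8, 10]
# 9.60 is the F(4, 4) table value for a right-tail probability of 0.025.
t, (c1, c2), reject = f_ratio_test(x, y, c_right=9.60, c_right_swapped=9.60)
print(t, c1, c2, reject)
```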

When testing the equality of expectations

using SPSS always first check the p-value of

Levene’s test (α = 0.05) to choose between

equal variances assumed and not assumed.

Two pairwise dependent random samples:

the t-test for the differences.

Whenever we have two observations per object

(individual in the sample), we can compute


the differences and apply the t-procedure for

the differences. Usually this is the case when

we have observations per object i before (xi)

and after treatment (yi).

Statistical assumptions for the (dependent)

random samples x1,...,xn and y1,...,yn:

The differences $Z_i = Y_i - X_i$ (i = 1,...,n) are independent and $Z_i \sim N(\mu, \sigma^2)$, where the expected difference $\mu$ and the variance $\sigma^2$ are unknown.

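t-test on H0: µ = 0 (expected difference is 0):

$$T = \frac{\bar{Z}}{S/\sqrt{n}} \sim t(n-1) \text{ if } H_0 \text{ is true},$$

where $\bar{Z}$ and $S$ are the sample mean and the sample standard deviation of the differences.

Confidence interval for the expected difference (c from the t(n-1)-table, such that $P(T_{n-1} \ge c) = \alpha/2$):

$$(1-\alpha)100\%\text{-CI}(\mu) = \left(\bar{z} - c\frac{s}{\sqrt{n}},\ \ \bar{z} + c\frac{s}{\sqrt{n}}\right)$$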
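A minimal sketch of the t-procedure on the differences, assuming the usual one-sample statistic $\bar{z}/(s/\sqrt{n})$ applied to $z_i = y_i - x_i$. The function name `paired_t` and the before/after data are hypothetical; the critical value c comes from a t(n-1)-table.

```python
import math
from statistics import mean, stdev

def paired_t(x, y, c):
    """t statistic and CI for the expected difference of paired samples.

    c: critical value from the t(n-1) table with P(T >= c) = alpha/2.
    """
    z = [yi - xi for xi, yi in zip(x, y)]   # differences Z_i = Y_i - X_i
    n = len(z)
    se = stdev(z) / math.sqrt(n)            # s / sqrt(n)
    t = mean(z) / se                        # test statistic for H0: mu = 0
    return t, (mean(z) - c * se, mean(z) + c * se)

# Hypothetical before (x) and after (y) measurements on 4 objects.
before = [1, 2, 3, 4]
after = [2, 4, 3, 7]
# 3.182 is the t(3) table value for alpha = 0.05.
t, ci = paired_t(before, after, c=3.182)
print(t, ci)
```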

The relation between two-sided tests and

confidence intervals:


Suppose θ is the population parameter and we have a (1-α)100%-CI(θ), based on a sample; then H0: θ = θ0 will be rejected in favour of H1: θ ≠ θ0 at significance level α if θ0 is not in the interval.

e.g. A one-sample t-test:

95%-CI(µ) = (22.4, 28.1) => reject H0: µ = 30 versus H1: µ ≠ 30 at 5%-level (because 30 is not in the interval).

A binomial test:

90%-CI(p) = (0.42, 0.51) => do not reject H0: p = ½ versus H1: p ≠ ½ at 10%-level (because ½ is in the interval).

A two-sample t-test:

99%-CI($\mu_X - \mu_Y$) = (-6.8, -1.2), then: reject $H_0: \mu_X = \mu_Y$ in favour of $H_1: \mu_X \neq \mu_Y$ at 1%-level, because the difference 0 is not in the interval.
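The relation between a two-sided test and a confidence interval amounts to a single membership check: H0: θ = θ0 is rejected exactly when θ0 lies outside the interval. The helper below is a hypothetical illustration (the name `reject_h0` is not from the notes), applied to the three examples above.

```python
def reject_h0(ci, theta0):
    """Reject H0: theta = theta0 (two-sided) iff theta0 is outside the CI."""
    lower, upper = ci
    return not (lower <= theta0 <= upper)

print(reject_h0((22.4, 28.1), 30))    # True: 30 is not in the 95%-CI
print(reject_h0((0.42, 0.51), 0.5))   # False: 1/2 is in the 90%-CI
print(reject_h0((-6.8, -1.2), 0))     # True: 0 is not in the 99%-CI
```

The significance level of the test is tied to the confidence level of the interval: a 95%-CI corresponds to α = 5%, a 99%-CI to α = 1%.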


Non-parametric or distribution-free tests

are used in cases where the assumption of a

distribution (having unknown parameters)

does not apply.

These tests offer for example an alternative

method if the assumption of a normal

distribution is evidently incorrect and the

number of observations is small: this can be

the case if the histogram is skewed to the right

or the left or when there are outliers. SPSS

also provides tests on normality.

1. The Sign Test as an alternative for the t-test on the differences for two pairwise dependent random samples.

Statistical assumptions:

the differences Zi have an unknown (non-normal) distribution. p = the probability of a positive difference. We test H0: p = ½ versus H1: p > ½ (or p < ½ or p ≠ ½).


Note: for symmetric distributions of the

differences p = ½ is equivalent to µ = 0, but

for skewed distributions p = ½ is equivalent to

Median = 0. That’s why this test is also called

the sign test on the median.

The test statistic is X = the number of positive

differences: X ~ B(n, ½) if H0: p = ½ is true.

n = the number of observed non-zero

differences (just cancel the zero differences)

The rejection region is:

| H1 | Rejection region |
|---|---|
| p > ½ | {c, c+1, ..., n} |
| p < ½ | {0, ..., c} |
| p ≠ ½ | {0, ..., c1} ∪ {c2, c2+1, ..., n} |

Determine the critical value(s), using:

- For n ≤ 25 the binomial table if available.

- Normal approximation is valid for n > 10:

X is approximately N(½n, ¼n)-distributed.
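Instead of looking up critical values, the same right-sided test can be carried out with a p-value. The sketch below computes the exact binomial p-value P(X ≥ x) under H0: p = ½, and the normal approximation N(½n, ¼n) with a continuity correction (the correction is an assumption here; the notes only state the approximating distribution). The function name `sign_test_right` and the data are hypothetical.

```python
import math
from statistics import NormalDist

def sign_test_right(diffs):
    """Right-sided sign test of H0: p = 1/2 against H1: p > 1/2."""
    nonzero = [d for d in diffs if d != 0]   # drop the zero differences
    n = len(nonzero)
    x = sum(d > 0 for d in nonzero)          # number of positive differences
    # Exact p-value P(X >= x) for X ~ B(n, 1/2).
    p_exact = sum(math.comb(n, k) for k in range(x, n + 1)) / 2 ** n
    # Normal approximation N(n/2, n/4) with continuity correction.
    p_normal = 1 - NormalDist(n / 2, math.sqrt(n / 4)).cdf(x - 0.5)
    return n, x, p_exact, p_normal

# Hypothetical differences, for illustration only (one zero, two negatives).
diffs = [1, 2, -1, 0, 3, 4, 5, -2, 6, 7, 8, 9]
n, x, p_exact, p_normal = sign_test_right(diffs)
print(n, x, p_exact, p_normal)
```

Reject H0 at level α if the p-value is at most α; for the left-sided alternative the sum runs over 0, ..., x instead.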


2. The Wilcoxon Rank Sum Test, as a non-parametric alternative for the two (independent) samples t-test on the equality of the expectations $\mu_X$ and $\mu_Y$.

The only statistical assumption we need is that

we have two independent random samples of

numerical variables X1,..., Xm and Y1,..., Yn.

We will test H0: the population distributions

are the same

versus

H1: the population distribution of Y is shifted

compared to the distribution of X.

Example: shift to the left H1 :


The test statistic, Wilcoxon’s W, is defined as:

W = sum of the ranks of the X-observations

if we order all X- and Y-observations.

One-sided alternatives:

Y is shifted to the left, $H_1: \mu_X > \mu_Y$. If the alternative is true, the X-values will be large and W is large: W ≥ c => reject H0.

Y is shifted to the right, $H_1: \mu_X < \mu_Y$. If the alternative is true, the X-values will be small and W is small: W ≤ c => reject H0.

Two-sided alternative:

Y is shifted to either side, $H_1: \mu_X \neq \mu_Y$. If the alternative is true, the X-values will be large or small and W is correspondingly large or small: if W ≤ c1 or W ≥ c2, then reject H0.

The distribution of W

We will use a normal approximation of the (discrete) distribution of W and apply continuity correction if m > 5 and n > 5. If $H_0$ is true:

$$W \sim N\left(\tfrac{1}{2}m(N+1),\ \tfrac{1}{12}mn(N+1)\right)$$

This is an approximate distribution (N = m + n).

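The Wilcoxon rank sum test using

1. the critical value c (right-sided test): if W ≥ c, then reject $H_0$, where c is such that

$$P(W \ge c) = P(W \ge c - \tfrac{1}{2}) \approx P\left(Z \ge \frac{c - \frac{1}{2} - \frac{1}{2}m(N+1)}{\sqrt{\frac{1}{12}mn(N+1)}}\right) = \alpha$$

2. the p-value: if p-value ≤ α, then reject $H_0$, where for the observed value w (right-sided test)

$$\text{p-value} = P(W \ge w) = P(W \ge w - \tfrac{1}{2}) \approx P\left(Z \ge \frac{w - \frac{1}{2} - \frac{1}{2}m(N+1)}{\sqrt{\frac{1}{12}mn(N+1)}}\right)$$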

Note: for m ≤ 5 and/or n ≤ 5 the exact

distribution of W is computed, using

combinatorics (not part of this course).

Ties: if two or more observations have the

same value, then we call this group of

observations a tie. All observations of a tie are


assigned the same rank: the mean rank of

these observations. We can use the

distribution above, if there are just a few ties.
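The rank sum procedure, including mean ranks for ties, can be sketched as below. It assumes the standard null moments E(W) = ½m(N+1) and var(W) = mn(N+1)/12 with N = m + n, and a continuity correction of ½ for the right-sided p-value; no tie correction is applied to the variance, in line with the remark that the approximation is used when there are just a few ties. The function names `midranks` and `rank_sum_right` and the data are hypothetical.

```python
import math
from statistics import NormalDist

def midranks(values):
    """Rank values 1..N, giving tied observations their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the group of observations tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1          # mean of the ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def rank_sum_right(x, y):
    """Wilcoxon's W and the approximate right-sided p-value P(W >= w)."""
    m, n = len(x), len(y)
    N = m + n
    w = sum(midranks(list(x) + list(y))[:m])     # sum of the ranks of the X's
    mu = m * (N + 1) / 2                         # E(W) under H0
    sigma = math.sqrt(m * n * (N + 1) / 12)      # sd(W) under H0
    p = 1 - NormalDist(mu, sigma).cdf(w - 0.5)   # continuity correction
    return w, p

# Hypothetical data with m = n = 6, for illustration only.
x = [6, 7, 8, 9, 10, 11]
y = [1, 2, 3, 4, 5, 12]
w, p = rank_sum_right(x, y)
print(w, p)
```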
