Are the disturbances in the regression equations normally distributed?


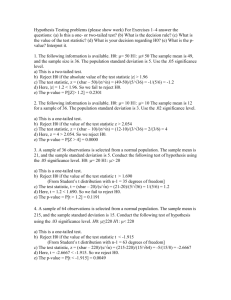

Introduction

We have already seen that, when deriving the distributions of the test statistics and the bounds of the confidence intervals for regression coefficients, we relied heavily on the assumption that the random errors in the regression equation are normally distributed. Although much of the discussion of choosing appropriate model specifications is motivated by finding sensible economic models, that discussion also aimed at finding specifications in which we could reasonably assume that the random disturbances are normally and independently distributed. It is therefore important to have methods for ascertaining whether the disturbances entering our regressions are normally distributed.

The proper way to settle this issue is, of course, to design a test capable of revealing the true properties of the random errors. As always with statistical tests, we start by constructing a suitable test statistic. Since we want to conclude on the basis of this test whether or not to reject the null hypothesis that the random errors are normally distributed, the test statistic must in some way reflect the essential characteristic properties of a normally distributed variable.

Assuming that the disturbances $\varepsilon_i$ are normal with mean zero and variance $\sigma^2$, we know at once that the density of $\varepsilon_i$ is symmetric around zero. For a symmetric distribution, every moment of odd order about the mean is equal to zero. In the present application this means that:

$$E(\varepsilon^3) = E(\varepsilon^5) = \dots = E(\varepsilon^{2j+1}) = 0, \qquad j = 1, 2, 3, \dots \tag{1.1}$$

Therefore, it is customary to use the third central moment as a measure of the skewness of a distribution. Hence, if we know that $E(\varepsilon^3) \neq 0$, the errors of the regression equation cannot be normally distributed.
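The role of the third central moment can be illustrated with a small simulation. The sketch below is not part of the original note: the function name `third_central_moment`, the seed, the sample size and the choice of an exponential distribution as the skewed example are all assumptions made for illustration. It compares the sample third central moment of normal draws (which should be close to zero) with that of right-skewed draws (which should be clearly positive).

```python
import random

def third_central_moment(xs):
    """Sample analogue of E[(X - mean)^3] (hypothetical helper)."""
    n = len(xs)
    mean = sum(xs) / n
    return sum((x - mean) ** 3 for x in xs) / n

rng = random.Random(0)  # arbitrary fixed seed so the run is reproducible
n = 100_000             # illustrative sample size

# Symmetric case: standard normal draws.
normal_draws = [rng.gauss(0.0, 1.0) for _ in range(n)]
# Asymmetric case (an assumption for illustration): exponential draws,
# whose theoretical third central moment is 2 when the rate is 1.
skewed_draws = [rng.expovariate(1.0) for _ in range(n)]

m3_normal = third_central_moment(normal_draws)
m3_skewed = third_central_moment(skewed_draws)
print(f"normal: {m3_normal:.3f}   exponential: {m3_skewed:.3f}")
```

A third moment far from zero speaks against symmetry and hence against normality. A value near zero does not by itself establish normality, since many non-normal distributions are symmetric too.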
In order to eliminate the impact of different measurement units in the variables, one usually measures the skewness of a distribution by the characteristic:

$$\gamma_1 = \frac{\mu_3}{\sigma^3}, \qquad \text{where } \mu_3 = E(\varepsilon^3) \tag{1.2}$$

and $\sigma$ denotes the standard deviation of the variable. A sensible test statistic in this case should depend on the skewness $\gamma_1$ in some way. We note again that if the disturbances $\varepsilon_i$ are normally distributed, then $\gamma_1 = 0$.

The second characteristic we want to include in the test statistic is a parameter that describes the peak values of the density. The point here is that a normal density has a rather "flat" peak, as illustrated in the figure below.

As a measure of the degree of flattening of a density we usually use the characteristic named kurtosis, defined by:

$$\gamma_2 = \frac{\mu_4}{\sigma^4} - 3, \qquad \text{where } \mu_4 = E(\varepsilon^4) \tag{1.3}$$

and $\sigma$ is again the standard deviation. For a normal distribution $\gamma_2 = 0$. Note that Hill et al. use $\mu_4 / \sigma^4$ as their definition of kurtosis, but the standard definition is that given by (1.3) (see e.g. K. Sydsæter et al., "Matematisk formelsamling for økonomer", p. 181).

The Jarque-Bera statistic

The Jarque-Bera statistic is based on the sample values of the two characteristics (1.2) and (1.3). Let us consider the regression:

$$Y_i = \beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \dots + \beta_k X_{ik} + \varepsilon_i, \qquad i = 1, 2, \dots, n \tag{2.1}$$

OLS regression provides us with the estimators $\hat\beta_0, \hat\beta_1, \dots, \hat\beta_k$. Now, the residuals:

$$e_i = Y_i - \hat Y_i, \qquad i = 1, 2, \dots, n \tag{2.2}$$

are estimates of $\varepsilon_1, \varepsilon_2, \dots, \varepsilon_n$. Using (2.2), an estimator of the skewness (1.2) is given by:

$$\hat\gamma_1 = \frac{\hat\mu_3}{\hat\sigma^3} = \frac{\frac{1}{n}\sum_{i=1}^{n} e_i^3}{\left(\frac{1}{n}\sum_{i=1}^{n} e_i^2\right)^{3/2}} \tag{2.3}$$

and similarly an estimator of the kurtosis (1.3) is given by:

$$\hat\gamma_2 = \frac{\hat\mu_4}{\hat\sigma^4} - 3 = \frac{\frac{1}{n}\sum_{i=1}^{n} e_i^4}{\left(\frac{1}{n}\sum_{i=1}^{n} e_i^2\right)^{2}} - 3 = k - 3, \qquad \text{where } k = \frac{\hat\mu_4}{\hat\sigma^4} \tag{2.4}$$

Here $k$ denotes the estimated kurtosis ratio and should not be confused with the number of regressors $k$ in (2.1).

In order to test the null hypothesis:

$$H_0: \varepsilon_i \sim N(0, \sigma^2), \text{ identically and independently distributed for all } i,$$

Jarque and Bera defined the test statistic:

$$JB = \frac{n}{6}\left(S^2 + \frac{(k - 3)^2}{4}\right), \qquad \text{where } S^2 = \hat\gamma_1^2 \tag{2.5}$$

When $H_0$ is true, the JB statistic (2.5) has (asymptotically) a $\chi^2$ distribution with 2 degrees of freedom.
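The computation in (2.2) to (2.5) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the note's own implementation: the function name `jarque_bera`, the simulated data, the seed and the restriction to a single regressor (so that OLS can be computed by hand without extra libraries) are all choices made here for the example.

```python
import random

def jarque_bera(residuals):
    """Return (S, k, JB) from OLS residuals, following (2.3)-(2.5).

    S is the estimated skewness and k the estimated ratio mu4 / sigma^4.
    Raw powers of e_i are used, as in the formulas; OLS residuals from a
    regression with an intercept have mean zero.
    """
    n = len(residuals)
    m2 = sum(e ** 2 for e in residuals) / n
    m3 = sum(e ** 3 for e in residuals) / n
    m4 = sum(e ** 4 for e in residuals) / n
    S = m3 / m2 ** 1.5
    k = m4 / m2 ** 2
    JB = n / 6.0 * (S ** 2 + (k - 3.0) ** 2 / 4.0)
    return S, k, JB

# Simulated data (assumption): Y = 1 + 2 X + normal error, one regressor.
rng = random.Random(1)  # arbitrary fixed seed
n = 500
x = [rng.uniform(0.0, 10.0) for _ in range(n)]
y = [1.0 + 2.0 * xi + rng.gauss(0.0, 1.0) for xi in x]

# OLS with an intercept and one regressor, computed by hand.
x_bar = sum(x) / n
y_bar = sum(y) / n
b1 = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
      / sum((xi - x_bar) ** 2 for xi in x))
b0 = y_bar - b1 * x_bar

# Residuals e_i = Y_i - Y_hat_i, as in (2.2).
resid = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]

S, k, JB = jarque_bera(resid)
print(f"S = {S:.3f}, k = {k:.3f}, JB = {JB:.3f}")
```

With several regressors the residuals would normally come from a regression routine instead; the function itself only needs the list of residuals.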
Thus, if the estimated value $\widehat{JB}$ of the test statistic is "small" we will not reject $H_0$. We will only reject the null hypothesis when the observed value of $\widehat{JB}$ is sufficiently large. What decision should be taken in a particular case depends on the chosen level of significance and the implied threshold value, which we find from tables of the $\chi^2$ distribution with 2 degrees of freedom.
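The decision rule can also be sketched in code rather than read from a table. The example relies on a standard fact: a chi-square variable with 2 degrees of freedom has upper-tail probability $P(X > x) = e^{-x/2}$, so the threshold at significance level $\alpha$ is $-2\ln\alpha$. The function names below are hypothetical, and the result remains an asymptotic approximation that may be rough in small samples.

```python
import math

def chi2_2df_critical_value(alpha):
    """Threshold c with P(X > c) = alpha for a chi-square(2) variable."""
    return -2.0 * math.log(alpha)

def chi2_2df_p_value(jb):
    """Asymptotic p-value of an observed JB value: P(X > jb) = exp(-jb / 2)."""
    return math.exp(-jb / 2.0)

def reject_normality(jb, alpha=0.05):
    """True if the observed JB value exceeds the threshold at level alpha."""
    return jb > chi2_2df_critical_value(alpha)

# Thresholds at three common significance levels.
for alpha in (0.10, 0.05, 0.01):
    print(f"alpha = {alpha:.2f}: reject H0 if JB > {chi2_2df_critical_value(alpha):.3f}")
```

At the 5% level the threshold is about 5.99, so an observed value such as JB = 2.1 would not lead to rejection, while JB = 12.4 would.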