Least Squares Adjustment

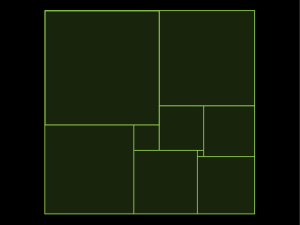

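Catherine LeCocq, SLAC, June 2005

1. Mixed Model

$$f(x, l) = 0$$

f is made of m equations, x of u unknowns and l of n observations. This model is also known as the implicit model.

1.1 Functional Model

The model must be linearized around $x^0$, the approximate values of the unknowns, and $l$, the observed values of the observations:

$$f(\hat{x}, \hat{l}) \approx f(x^0, l) + \left.\frac{\partial f}{\partial x}\right|_{x^0, l}(\hat{x} - x^0) + \left.\frac{\partial f}{\partial l}\right|_{x^0, l}(\hat{l} - l) = 0$$

$$A\delta + Bv + w = 0$$

with

$w = f(x^0, l)$: misclosure vector
$A = \partial f / \partial x \,|_{x^0, l}$: first design matrix ($m \times u$)
$\delta = \hat{x} - x^0$: unknown vector (for the linearized problem)
$B = \partial f / \partial l \,|_{x^0, l}$: second design matrix ($m \times n$)
$v = \hat{l} - l$: residual vector

1.2 Stochastic Model

The observations are characterized by their variance-covariance matrix $C_l$. Because the exact covariance matrix is sometimes not known, a scale factor $\sigma_0^2$ is introduced. Two new matrices are then derived from the covariance matrix, the cofactor matrix $Q_l$ and the weight matrix $P$:

$$Q_l = \frac{C_l}{\sigma_0^2}, \qquad P = \sigma_0^2 C_l^{-1} = Q_l^{-1}$$

$\sigma_0^2$ is called the variance factor, the variance of unit weight, or the a priori variance factor.

1.3 Least Squares Solution

Find $\delta$ such that $A\delta + Bv + w = 0$ and $v^T P v$ is minimum. Use Lagrange multipliers $k$ and the variation function

$$\varphi(\delta, v, k) = v^T P v - 2k^T(A\delta + Bv + w)$$

whose partial derivatives must vanish:

$$\frac{\partial \varphi}{\partial v} = 2v^T P - 2k^T B = 0, \qquad \frac{\partial \varphi}{\partial \delta} = -2k^T A = 0, \qquad \frac{\partial \varphi}{\partial k} = -2\delta^T A^T - 2v^T B^T - 2w^T = 0$$

By dividing by 2 and transposing:

$$\begin{pmatrix} P & 0 & -B^T \\ 0 & 0 & -A^T \\ B & A & 0 \end{pmatrix} \begin{pmatrix} v \\ \delta \\ k \end{pmatrix} + \begin{pmatrix} 0 \\ 0 \\ w \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}$$

By eliminating v (the first row gives $v = P^{-1}B^T k$):

$$\begin{pmatrix} BP^{-1}B^T & A \\ A^T & 0 \end{pmatrix} \begin{pmatrix} k \\ \delta \end{pmatrix} + \begin{pmatrix} w \\ 0 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix}$$

By eliminating k (the first row gives $k = -(BP^{-1}B^T)^{-1}(A\delta + w)$):

$$A^T(BP^{-1}B^T)^{-1}A\,\delta + A^T(BP^{-1}B^T)^{-1}w = 0$$

Giving the unknowns:

$$\delta = -\left(A^T(BP^{-1}B^T)^{-1}A\right)^{-1}A^T(BP^{-1}B^T)^{-1}w$$

or, more compactly, $\delta = -N^{-1}u$ with $N = A^T M^{-1} A$, $u = A^T M^{-1} w$ and $M = BP^{-1}B^T$.

Replacing $\delta$ into $BP^{-1}B^T k + A\delta + w = 0$ gives k:

$$k = -(BP^{-1}B^T)^{-1}(A\delta + w) = -M^{-1}(A\delta + w)$$

Replacing k into $Pv - B^T k = 0$ gives v:

$$v = -P^{-1}B^T(BP^{-1}B^T)^{-1}(A\delta + w) = -P^{-1}B^T M^{-1}(A\delta + w)$$

The adjusted quantities are now simply:

$$\hat{x} = x^0 + \delta, \qquad \hat{l} = l + v$$

The cofactor matrices of all the adjusted quantities can be derived starting with the cofactor matrix of the misclosure vector $w = f(x^0, l)$.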
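The solution steps above can be followed numerically. The Python sketch below builds $M$, $N$ and $u$, then recovers $\delta$, $k$ and $v$ for one linearization step. It deliberately uses plain lists instead of a linear algebra library. The function names (`solve_mixed` and the small matrix helpers) and the example are hypothetical and not part of these notes. The example fits a line $y = a + bx$ when both coordinates of every point are observed, with $P = I$, linearized at $a_0 = 0$, $b_0 = 1$. For a nonlinear $f$ one would typically set $x^0 \leftarrow x^0 + \delta$ and repeat the step until $\delta$ becomes negligible.

```python
# Mixed (implicit) model: one linearized least squares step, A*delta + B*v + w = 0.
# Matrices are lists of rows; vectors are column matrices [[x1], [x2], ...].

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(a, s):
    return [[s * x for x in row] for row in a]

def inverse(a):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(a)
    aug = [[float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("singular matrix")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def solve_mixed(A, B, P, w):
    """Return (delta, k, v) minimizing v^T P v subject to A*delta + B*v + w = 0."""
    P_inv = inverse(P)
    M_inv = inverse(matmul(matmul(B, P_inv), transpose(B)))   # M = B P^-1 B^T
    At_Minv = matmul(transpose(A), M_inv)
    N = matmul(At_Minv, A)                                     # N = A^T M^-1 A
    u = matmul(At_Minv, w)                                     # u = A^T M^-1 w
    delta = scale(matmul(inverse(N), u), -1.0)                 # delta = -N^-1 u
    k = scale(matmul(M_inv, add(matmul(A, delta), w)), -1.0)   # k = -M^-1 (A delta + w)
    v = matmul(matmul(P_inv, transpose(B)), k)                 # v = P^-1 B^T k
    return delta, k, v

# Hypothetical example: f_i = y_i - a - b*x_i = 0, observations l = (x1, y1, x2, y2, ...).
points = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.1), (3.0, 2.9)]
a0, b0 = 0.0, 1.0
m, n = len(points), 2 * len(points)
A = [[-1.0, -x] for x, _ in points]              # df/d(a, b) at x0
B = [[0.0] * n for _ in range(m)]                # df/d(x_i, y_i) at x0
for i in range(m):
    B[i][2 * i], B[i][2 * i + 1] = -b0, 1.0
w = [[y - a0 - b0 * x] for x, y in points]       # misclosure f(x0, l)
P = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

delta, k, v = solve_mixed(A, B, P, w)
print([round(d[0], 6) for d in delta])  # [0.06, -0.04]
```

Forming $N$ explicitly mirrors the derivation. For ill-conditioned problems, a factorization-based solver would usually be preferred over explicit inverses.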
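Applying the general law of variance-covariance propagation, and noting that the Jacobian of w with respect to the observations is simply the second design matrix B, leads to:

$$Q_w = B Q_l B^T = B P^{-1} B^T = M$$

The cofactor matrix of the linearized unknowns is obtained by applying the law of variance-covariance propagation to the rewritten formula:

$$\delta = -\left(A^T(BP^{-1}B^T)^{-1}A\right)^{-1}A^T(BP^{-1}B^T)^{-1}w = -(A^T M^{-1} A)^{-1} A^T M^{-1} w$$

$$Q_\delta = (A^T M^{-1} A)^{-1} A^T M^{-1}\, Q_w\, M^{-1} A (A^T M^{-1} A)^{-1} = (A^T M^{-1} A)^{-1} = N^{-1}$$

Because $\hat{x} = x^0 + \delta$ and $x^0$ is a fixed approximation, the cofactor matrix of the adjusted parameters is equal to the cofactor matrix of the linearized unknowns: $Q_{\hat{x}} = Q_\delta$.

Similarly, writing the residuals as a linear function of w,

$$v = -P^{-1}B^T M^{-1}(A\delta + w) = \left(P^{-1}B^T M^{-1} A (A^T M^{-1} A)^{-1} A^T M^{-1} - P^{-1}B^T M^{-1}\right) w$$

the law of variance-covariance propagation gives their cofactor matrix:

$$Q_v = Q_l B^T M^{-1} B Q_l - Q_l B^T M^{-1} A N^{-1} A^T M^{-1} B Q_l = Q_l B^T M^{-1}\left(M - A N^{-1} A^T\right) M^{-1} B Q_l$$

Computing the cofactor matrix of the adjusted observations $\hat{l} = l + v$ requires the Jacobian of $\hat{l}$ and some more matrix multiplications, and gives:

$$Q_{\hat{l}} = Q_l - Q_v$$

Finally, the minimum of the weighted sum of squared residuals $v^T P v$ must be computed to complete the least squares analysis. Substituting the expression of v above leads to:

$$v^T P v = w^T\left(M^{-1} - M^{-1} A (A^T M^{-1} A)^{-1} A^T M^{-1}\right) w$$

The expected value of this quantity is:

$$E(v^T P v) = E\left(\mathrm{Tr}(v^T P v)\right) = E\left(\mathrm{Tr}\left(w^T (M^{-1} - M^{-1} A N^{-1} A^T M^{-1}) w\right)\right) = E\left(\mathrm{Tr}\left((M^{-1} - M^{-1} A N^{-1} A^T M^{-1}) w w^T\right)\right) = \mathrm{Tr}\left((M^{-1} - M^{-1} A N^{-1} A^T M^{-1})\, E(w w^T)\right)$$

By definition of the variance-covariance matrix, $E(ww^T) = C_w + E(w)E(w)^T$ with $C_w = \sigma_0^2 Q_w = \sigma_0^2 M$. The second term does not contribute to the trace. Taking expectations in $A\delta + Bv + w = 0$ with $E(v) = 0$ gives $E(w) = -A\delta$, and $(M^{-1} - M^{-1} A N^{-1} A^T M^{-1}) A = 0$. So:

$$E(v^T P v) = \sigma_0^2\, \mathrm{Tr}\left(I - M^{-1} A (A^T M^{-1} A)^{-1} A^T\right) = \sigma_0^2\left(m - \mathrm{Tr}(I_u)\right) = \sigma_0^2 (m - u)$$

The difference m - u is called the degree of freedom and is equal to the number of redundant equations in the model. To be more exact, the degree of freedom is m - rank(A), but this will be covered later. The a posteriori variance of unit weight is:

$$\hat{\sigma}_0^2 = \frac{v^T P v}{m - u}$$

When $\sigma_0^2$ is unknown, $\hat{\sigma}_0^2$ is used to rescale the covariance matrices for statistical testing purposes.

2. Parametric Model

A more common model is given by

$$l = f(x)$$

It is also called the observation equation model, as each observation has its own equation.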
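Before specializing to this model, the propagation results of section 1 can be checked numerically. The sketch below computes $Q_\delta = N^{-1}$, $Q_v$, $Q_{\hat{l}} = Q_l - Q_v$, $v^TPv$ and $\hat{\sigma}_0^2$ for a small mixed-model problem. It also checks that $\mathrm{Tr}(Q_v P) = m - u$, which restates $E(v^TPv) = \sigma_0^2(m - u)$ because $E(v^TPv) = \sigma_0^2\,\mathrm{Tr}(Q_v P)$ when $E(v) = 0$. The function `mixed_adjustment`, the helpers and the example data are hypothetical. The example is a line fit with both coordinates observed and $Q_l = I$. A second call with $B = -I$ previews the parametric case.

```python
# Cofactor matrices and a posteriori variance factor of the mixed model (sketch).
# Matrices are lists of rows; vectors are column matrices [[x1], [x2], ...].

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(a, s):
    return [[s * x for x in row] for row in a]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def trace(a):
    return sum(a[i][i] for i in range(len(a)))

def inverse(a):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(a)
    aug = [[float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("singular matrix")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def mixed_adjustment(A, B, Q_l, w):
    """Solve A*delta + B*v + w = 0 (min v^T P v) and propagate the cofactor matrices."""
    P = inverse(Q_l)                                        # P = Q_l^-1
    M = matmul(matmul(B, Q_l), transpose(B))                # Q_w = B Q_l B^T = M
    M_inv = inverse(M)
    At_Minv = matmul(transpose(A), M_inv)
    N_inv = inverse(matmul(At_Minv, A))                     # Q_delta = N^-1
    delta = scale(matmul(N_inv, matmul(At_Minv, w)), -1.0)  # delta = -N^-1 u
    k = scale(matmul(M_inv, add(matmul(A, delta), w)), -1.0)
    v = matmul(matmul(Q_l, transpose(B)), k)                # v = P^-1 B^T k
    G = matmul(matmul(Q_l, transpose(B)), M_inv)            # Q_l B^T M^-1
    inner = add(M, scale(matmul(matmul(A, N_inv), transpose(A)), -1.0))  # M - A N^-1 A^T
    Q_v = matmul(matmul(G, inner), transpose(G))
    vTPv = matmul(matmul(transpose(v), P), v)[0][0]
    m, u = len(A), len(A[0])
    return {"delta": delta, "v": v, "P": P, "Q_delta": N_inv, "Q_v": Q_v,
            "Q_lhat": add(Q_l, scale(Q_v, -1.0)),          # Q_lhat = Q_l - Q_v
            "vTPv": vTPv, "sigma0_sq_hat": vTPv / (m - u)}

# Hypothetical example: line y = a + b*x, both coordinates observed, linearized at (0, 1).
points = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.1), (3.0, 2.9)]
a0, b0 = 0.0, 1.0
m, n = len(points), 2 * len(points)
A = [[-1.0, -x] for x, _ in points]
B = [[0.0] * n for _ in range(m)]
for i in range(m):
    B[i][2 * i], B[i][2 * i + 1] = -b0, 1.0
w = [[y - a0 - b0 * x] for x, y in points]
res = mixed_adjustment(A, B, identity(n), w)
print(res["vTPv"], res["sigma0_sq_hat"])           # about 0.016 and 0.008
print(trace(matmul(res["Q_v"], res["P"])))         # about 2.0 = m - u
C_xhat = scale(res["Q_delta"], res["sigma0_sq_hat"])  # rescaled covariance of x_hat

# Same routine with B = -I: only y observed, i.e. l = f(x) with f_i(x) = a + b*x_i.
A_par = [[1.0, x] for x, _ in points]
w_par = [[a0 + b0 * x - y] for x, y in points]
res_par = mixed_adjustment(A_par, scale(identity(m), -1.0), identity(m), w_par)
```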
2.1 Least Squares Solution

This model is a simplified version of the mixed model: written as $f(x) - l = 0$, it has $B = -I$. So, using the same notation as above:

$$v = A\delta + w, \qquad Q_w = Q_l, \qquad M = P^{-1}, \qquad w = f(x^0) - l$$

$$\delta = -(A^T P A)^{-1} A^T P w, \qquad Q_\delta = (A^T P A)^{-1}$$

Generally the term normal matrix and the notation N are used, leading to the well-known formulas:

$$N = A^T P A, \qquad u = A^T P w, \qquad \delta = -N^{-1} u$$

$$Q_{\hat{x}} = Q_\delta = N^{-1}, \qquad Q_v = Q_l - A N^{-1} A^T, \qquad Q_{\hat{l}} = Q_l - Q_v = A N^{-1} A^T$$

It is useful to rewrite the formula for the residuals as follows:

$$v = -A N^{-1} A^T P w + w = (I - A N^{-1} A^T P) w = Q_v P w$$

The matrix $Q_v P$ is idempotent ($Q_v P Q_v P = Q_v P$). It is not symmetric in general, although $Q_v$ itself is. Because of idempotency, the trace of the matrix is equal to its rank, so the redundancy of the adjustment problem can be written as:

$$r = \mathrm{trace}(Q_v P) = n - u$$

The i-th diagonal element of $Q_v P$ is noted $r_i$ and represents the contribution of observation i to the overall redundancy of the system.

2.2 Statistical Tests

Until now, no assumption has been made on the distribution of the observations. Now, we assume that they follow a normal distribution and introduce the concept of standardized residuals. The standardized residual of observation $l_i$ is

$$y_i = \frac{v_i}{\sigma_{v_i}}$$

where $v_i$ is the regular residual and $\sigma_{v_i}$ is the square root of the i-th diagonal element of the variance-covariance matrix $C_v = \sigma_0^2 Q_v$. Because of the normality assumption on the observations $l_i$, $y_i$ should follow a normal distribution with zero mean and unit standard deviation.

The test to verify this assumption is the $\chi^2$ goodness-of-fit test. The standardized residuals are pooled together and a histogram is plotted. The statistic

$$y = \sum_{i=1}^{b} \frac{(a_i - e_i)^2}{e_i}$$

follows a $\chi^2$ distribution with b - 1 degrees of freedom. Here b is the number of histogram classes, $a_i$ is the observed frequency in class i and $e_i$ is the expected frequency in class i. Failure of the $\chi^2$ goodness-of-fit test indicates that the observations are not normally distributed and/or that there is a problem in the model.

Another test for the assessment of the observations and the model is the test of the weighted quadratic form of the residuals. The statistic

$$y = \frac{v^T P v}{\sigma_0^2} = \frac{r\,\hat{\sigma}_0^2}{\sigma_0^2}$$

follows a $\chi^2$ distribution with r degrees of freedom, where r is the degree of freedom of the adjustment.

2.3 Sequential Solution

Let's assume that the observations are gathered into two groups and that both groups are uncorrelated. The two linearized systems can be written as follows:

$$v_1 = A_1 \delta + w_1, \qquad v_2 = A_2 \delta + w_2$$

Each has its own weight matrix, $P_1$ and $P_2$, but the two systems can also be grouped together as:

$$\begin{pmatrix} v_1 \\ v_2 \end{pmatrix} = \begin{pmatrix} A_1 \\ A_2 \end{pmatrix} \delta + \begin{pmatrix} w_1 \\ w_2 \end{pmatrix}$$

with the total weight matrix

$$P = \begin{pmatrix} P_1 & 0 \\ 0 & P_2 \end{pmatrix}$$

The normalization of the system is:

$$N = \begin{pmatrix} A_1^T & A_2^T \end{pmatrix} \begin{pmatrix} P_1 & 0 \\ 0 & P_2 \end{pmatrix} \begin{pmatrix} A_1 \\ A_2 \end{pmatrix} = A_1^T P_1 A_1 + A_2^T P_2 A_2$$

$$u = \begin{pmatrix} A_1^T & A_2^T \end{pmatrix} \begin{pmatrix} P_1 & 0 \\ 0 & P_2 \end{pmatrix} \begin{pmatrix} w_1 \\ w_2 \end{pmatrix} = A_1^T P_1 w_1 + A_2^T P_2 w_2$$
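The parametric formulas of sections 2.1 and 2.2 can be sketched in Python as follows. The sketch computes $\delta = -N^{-1}u$, $v = A\delta + w$ and $Q_v = Q_l - AN^{-1}A^T$, the redundancy numbers $r_i$ (diagonal of $Q_vP$), the standardized residuals and the quadratic-form statistic $v^TPv/\sigma_0^2$. Several assumptions apply. The function `adjust_parametric` and the helpers are hypothetical. The example fits $y_i = a + bx_i$ with error-free $x_i$ and equal weights. The a priori value $\sigma_0 = 0.1$ is invented for illustration. The $\chi^2$ goodness-of-fit histogram test is not shown, since four residuals are far too few to populate histogram classes.

```python
# Parametric model l = f(x): solution, redundancy numbers and test statistics (sketch).
import math

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(a, s):
    return [[s * x for x in row] for row in a]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def inverse(a):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(a)
    aug = [[float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("singular matrix")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def adjust_parametric(A, P, w):
    """Return delta, v and Q_v for v = A*delta + w with v^T P v minimum."""
    At_P = matmul(transpose(A), P)
    N_inv = inverse(matmul(At_P, A))                      # N = A^T P A
    delta = scale(matmul(N_inv, matmul(At_P, w)), -1.0)   # delta = -N^-1 u, u = A^T P w
    v = add(matmul(A, delta), w)                          # v = A delta + w
    Q_v = add(inverse(P), scale(matmul(matmul(A, N_inv), transpose(A)), -1.0))
    return delta, v, Q_v                                  # Q_v = Q_l - A N^-1 A^T

# Hypothetical example: f is linear in (a, b), so one step from (a0, b0) is exact.
points = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.1), (3.0, 2.9)]
a0, b0 = 0.0, 1.0
n = len(points)
A = [[1.0, x] for x, _ in points]                         # df/d(a, b)
w = [[a0 + b0 * x - y] for x, y in points]                # w = f(x0) - l
P = identity(n)
sigma0 = 0.1                                              # assumed a priori sigma_0

delta, v, Q_v = adjust_parametric(A, P, w)
QvP = matmul(Q_v, P)
r_i = [QvP[i][i] for i in range(n)]                       # redundancy numbers
r = sum(r_i)                                              # trace(Q_v P) = n - u
y_std = [v[i][0] / (sigma0 * math.sqrt(Q_v[i][i])) for i in range(n)]  # C_v = sigma0^2 Q_v
vTPv = matmul(matmul(transpose(v), P), v)[0][0]
chi2_stat = vTPv / sigma0 ** 2                            # chi2 with r degrees of freedom

print("redundancy numbers:", [round(x, 3) for x in r_i])
print("standardized residuals:", [round(x, 3) for x in y_std])
# Only for r = 2 is the chi2 CDF 1 - exp(-x/2), giving a 95% quantile of -2 ln(0.05), about 5.991.
if round(r) == 2:
    print("statistic", round(chi2_stat, 3), "below 95% quantile:", chi2_stat <= -2.0 * math.log(0.05))
```

For other values of r, the quantile would come from a $\chi^2$ table or a statistics library.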
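The normalization above shows that the normal equations of uncorrelated groups simply add up. The following sketch checks this numerically. It accumulates $N$ and $u$ group by group and compares the result with the stacked system and its block-diagonal weight matrix. It covers only the accumulation step shown here, not any later sequential update formulas. The functions `normal_equations`, `block_diag` and `linearize` are hypothetical. The example is a straight line $y = a + bx$ linearized at $a_0 = 0$, $b_0 = 1$, with its four observations split into two groups of equal weight.

```python
# Sequential solution: normal equations of uncorrelated groups add up (sketch).

def transpose(a):
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(a, s):
    return [[s * x for x in row] for row in a]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def inverse(a):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(a)
    aug = [[float(x) for x in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("singular matrix")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def normal_equations(A, P, w):
    """Return (A^T P A, A^T P w) for one group of observations."""
    At_P = matmul(transpose(A), P)
    return matmul(At_P, A), matmul(At_P, w)

def block_diag(P1, P2):
    """Total weight matrix diag(P1, P2) for two uncorrelated groups."""
    n1, n2 = len(P1), len(P2)
    return [row + [0.0] * n2 for row in P1] + [[0.0] * n1 + row for row in P2]

a0, b0 = 0.0, 1.0

def linearize(points):
    """A and w = f(x0) - l for f_i(x) = a + b*x_i, linearized at (a0, b0)."""
    return [[1.0, x] for x, _ in points], [[a0 + b0 * x - y] for x, y in points]

A1, w1 = linearize([(0.0, 0.1), (1.0, 0.9)])
A2, w2 = linearize([(2.0, 2.1), (3.0, 2.9)])
P1, P2 = identity(2), identity(2)

N1, u1 = normal_equations(A1, P1, w1)
N2, u2 = normal_equations(A2, P2, w2)
N, u = add(N1, N2), add(u1, u2)            # N = A1^T P1 A1 + A2^T P2 A2, u likewise
delta_seq = scale(matmul(inverse(N), u), -1.0)

# Same result from the stacked system with the block-diagonal weight matrix.
N_b, u_b = normal_equations(A1 + A2, block_diag(P1, P2), w1 + w2)
delta_batch = scale(matmul(inverse(N_b), u_b), -1.0)
print([round(d[0], 6) for d in delta_seq], [round(d[0], 6) for d in delta_batch])
```

Because only $N$ and $u$ need to be stored, a new uncorrelated group can be added later without revisiting the earlier observations.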