Probability and Statistics

AVU-PARTNER INSTITUTION

MODULE DEVELOPMENT TEMPLATE

PROBABILITY AND STATISTICS

Draft

By

Paul Chege

Version 19.0, 23rd March, 2007

C.

TEMPLATE STRUCTURE

I.

INTRODUCTION

1. TITLE OF MODULE

Probability and Statistics

2. PREREQUISITE COURSES OR KNOWLEDGE

Secondary school statistics and probability.

3. TIME

The total time for this module is 120 study hours.

4. MATERIAL

Students should have access to the core readings specified later. Also, they will need a

computer to gain full access to the core readings. Additionally, students should be able

to install the computer software wxMaxima and use it to practice algebraic concepts.

5. MODULE RATIONALE

Probability and Statistics is a key area in secondary school teaching syllabuses and forms an important background to advanced mathematics at tertiary level. Statistics is a fundamental area of Mathematics that is applied across many academic subjects and is useful in the analysis of industrial production. The study of statistics produces statisticians who analyse raw data collected from the field to provide useful insights about a population. Statisticians provide governments and organizations with a concrete picture of a situation, which helps managers in decision making. Examples include the rate of spread of diseases, rumours and bush fires, rainfall patterns, and population changes.

On the other hand, the study of probability helps government agencies and organizations to make decisions based on the theory of chance. Examples include predicting the numbers of male and female children born within a given period, and projecting the amount of rainfall that regions can expect to receive based on historical data on rainfall patterns. Probability has also been used extensively to determine the numbers of high, middle and low quality products in industrial production, e.g. the number of good and defective parts expected in an industrial manufacturing process.

II.

CONTENT

6. Overview

This module consists of three units:

Unit 1: Descriptive Statistics and Probability Distributions

Descriptive statistics in unit one is developed either as an extension of secondary

mathematics or as an introduction to first time learners of statistics. It introduces the

measures of dispersion in statistics. The unit also introduces the concept of

probability and the theoretical treatment of probability.

Unit 2: Random variables and Test Distributions

This unit requires Unit 1 as a prerequisite. It develops moments and moment generating functions, the Markov and Chebychev inequalities, special univariate distributions and bivariate probability distributions, and analyses conditional probabilities. The unit gives insights into the analysis of correlation coefficients and the distribution functions of random variables such as the Chi-square, t and F.

Unit 3: Probability Theory

This unit builds on Unit 2. It analyses probability using indicator functions. It introduces the Bonferroni inequality, random vectors, generating functions, characteristic functions, statistical independence and random samples. It develops further the concepts of functions of several random variables, the independence of X̄ and S² in normal samples, and order statistics. The unit concludes with the treatment of convergence and limit theorems.

Outline: Syllabus

Unit 1 ( 40 hours): Descriptive Statistics and Probability Distributions

Level 1. Priority A. No prerequisite.

Frequency distributions; relative and cumulative distributions; various frequency curves; mean, mode and median; quartiles and percentiles; standard deviation; symmetrical and skewed distributions. Probability: sample space and events; definition of probability; properties of probability; random variables; probability distributions; expected values of random variables; particular distributions: Bernoulli, binomial, Poisson, geometric, hypergeometric, uniform, exponential and normal. Bivariate frequency distributions. Joint probability tables and marginal probabilities.

Unit 2 ( 40 hours): Random Variables and Test Distributions

Level 2. Priority B. Statistics 1 is prerequisite.

Moments and moment generating functions. Markov and Chebychev inequalities; special univariate distributions. Bivariate probability distributions; joint, marginal and conditional distributions; independence; bivariate expectation; regression and correlation; calculation of regression and correlation coefficients for bivariate data. Distribution functions of random variables; bivariate normal distribution. Derived distributions such as Chi-square, t and F.

Unit 3 ( 40 hours): Probability Theory

Level 3. Priority C. Statistics 2 is prerequisite.

Probability: use of indicator functions. Bonferroni inequality. Random vectors. Generating functions. Characteristic functions. Statistical independence. Random samples. Multinomial distribution. Functions of several random variables. The independence of X̄ and S² in normal samples. Order statistics. Multivariate normal distribution. Convergence and limit theorems. Practical exercises.

Graphic Organiser

[Diagram: concept map centred on DATA, linking the module topics: frequency curves, quartiles, deciles and percentiles; mean, mode and median; variance and standard deviation; probability and probability distributions; joint probability tables; moment and moment generating function; Markov and Chebychev inequalities; univariate and bivariate distributions; joint, marginal and conditional distributions; regression and correlation; derived distributions (Chi-square, t and F); indicator functions; Bonferroni inequalities and random vectors; generating functions, characteristic functions and random samples; multinomial distributions and functions of random variables; multivariate distribution, convergence and limit theorems.]

7. General Objective(s)

By the end of this module, the trainee should be able to compute the various measures of dispersion in statistics, work out probabilities based on the laws of probability, and carry out tests on data using the theories of probability.

8. Specific Learning Objectives (Instructional Objectives)

Unit 1: Descriptive Statistics and Probability Distributions ( 40 Hours)

By the end of unit 1, the trainee should be able to:

Draw various frequency curves

Work out the mean, mode, median, quartiles, percentiles and standard deviations

of discrete and grouped data

Define and state the properties of probability

Illustrate random variables, probability distributions, and expected values of

random variables.

Illustrate Bernoulli, Binomial, Poisson, Geometric, Hypergeometric, Uniform,

Exponential and Normal distributions

Investigate Bivariate frequency distributions

Construct joint probability tables and marginal probabilities.

Unit 2: Random Variables and Test Distributions ( 40 Hours)

By the end of unit 2, the trainee should be able to:

Illustrate moment and moment generating functions

Analyse Markov and Chebychev inequalities

Examine special Univariate distributions, bivariate probability distributions, Joint

marginal and conditional distributions.

Show Independence, Bivariate expectation, regression and correlation

Calculate regression and correlation coefficient for bivariate data

Show distribution function of random variables.

Examine Bivariate normal distribution

Illustrate derived distributions such as Chi-Square, t, and F.

Unit 3: Probability Theory ( 40 Hours)

By the end of unit 3, the trainee should be able to:

Use indicator functions in probability

Show the Bonferroni inequality and random vectors

Illustrate generating and characteristic functions

Examine statistical independence, random samples and the multinomial distribution

Evaluate functions of several random variables

Illustrate the independence of X̄ and S² in normal samples, and order statistics

Show multivariate normal distribution

Illustrate convergence and limit theorems.

Work out practical exercises.

III.

TEACHING AND LEARNING ACTIVITIES

9. PRE-ASSESSMENT: Basic mathematics is a pre-requisite for Probability and

Statistics.

QUESTIONS

1) When a die is rolled, the probability of getting a number greater than 4 is

A. 1/6
B. 1/3
C. 1/2
D. 1

2) A single card is drawn at random from a standard deck of cards. Find the probability that it is a queen.

A. 1/13
B. 1/52
C. 4/13
D. 1/2

3) Out of 100 numbers, 20 were 4’s, 40 were 5’s, 30 were 6’s and the remainder were 7’s. Find the arithmetic mean of the numbers.

A. 0.22
B. 0.53
C. 2.20
D. 5.30

4) Calculate the mean of the following data.

Height (cm)   Class mark (x)
60 - 62       61
63 - 65       64
66 - 68       67
69 - 71       70
72 - 74       73

A. 57.40
B. 62.00
C. 67.45
D. 72.25

5) Find the mode of the following data: 5, 3, 6, 5, 4, 5, 2, 8, 6, 5, 4, 8, 3, 4, 5, 4, 8, 2, 5 and 4.

A. 4
B. 5
C. 6
D. 8

6) The range of the values a probability can assume is

A. From 0 to 1
B. From -1 to +1
C. From 1 to 100
D. From 0 to 1/2

7) Find the median of the following data: 8, 7, 11, 5, 6, 4, 3, 12, 10, 8, 2, 5, 1, 6, 4.

A. 12
B. 5
C. 8
D. 6

8) Find the range of the set of numbers: 7, 4, 10, 9, 15, 12, 7, 9.

A. 9
B. 11
C. 7
D. 8.88

9) When two coins are tossed, the sample space is

A. H, T and HT
B. HH, HT, TH, TT
C. HH, HT, TT
D. H, T

10) If a letter is selected at random from the word “Mississippi”, find the probability that it is an “i”.

A. 1/8
B. 1/2
C. 3/11
D. 4/11

ANSWER KEY

1. B    6. A
2. A    7. D
3. D    8. B
4. C    9. B
5. B    10. D

PEDAGOGICAL COMMENT FOR LEARNERS

This pre-assessment is meant to give the learners an insight into what they can

remember regarding Probability and Statistics. A score of less than 50% in the

pre-assessment indicates the learner needs to revise Probability and Statistics

covered in secondary mathematics. The pre-assessment covers basic concepts

that trainees need to be familiar with before progressing with this module. Please

revise Probability and Statistics covered in secondary mathematics to master the

basics if you have problems with this pre-assessment.

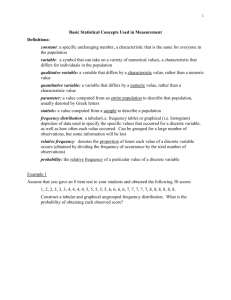

KEY CONCEPTS ( GLOSSARY)

1) Mutually Exclusive: Two events are mutually exclusive if they cannot occur at

the same time.

2) Variance: The variance of a set of data is defined as the square of the standard deviation, i.e. variance = s².

3) A trial: This refers to the activity of carrying out an experiment, such as picking a card from a deck of cards or rolling a die or dice.

4) Sample space: This refers to all possible outcomes of a probability experiment.

e.g. in tossing a coin, the outcomes are either Head(H) or tail(T)

5) A random variable: is a function that assigns a real number to every possible

result of a random experiment.

6) Random sample is one chosen by a method involving an unpredictable

component.

7) Bernoulli distribution: is a discrete probability distribution, which takes value 1

with success probability p and value 0 with failure probability q = 1 − p.

8) Binomial distribution: is the discrete probability distribution of the number of successes in a sequence of n independent yes/no experiments, each of which yields success with probability p.

9) Hypergeometric distribution: is a discrete probability distribution that describes

the number of successes in a sequence of n draws from a finite population

without replacement.

10) Poisson distribution: is a discrete probability distribution that expresses the

probability of a number of events occurring in a fixed period of time if these

events occur with a known average rate, and are independent of the time since

the last event

11) Correlation: is a measure of association between two variables.

12) Regression: is a measure used to examine the relationship between one

dependent and one independent variable.

13) Chi-square test is any statistical hypothesis test in which the test statistic has a

chi-square distribution when the null hypothesis is true, or any in which the

probability distribution of the test statistic (assuming the null hypothesis is true)

can be made to approximate a chi-square distribution as closely as desired by

making the sample size large enough.

14) Multivariate normal distribution is a specific probability distribution, which can

be thought of as a generalization to higher dimensions of the one-dimensional

normal distribution.

15) t -test is any statistical hypothesis test for two groups in which the test statistic

has a Student's t distribution if the null hypothesis is true

STATISTICAL TERMS:

1) Raw data: Data that has not been organised numerically.

2) Array: An arrangement of raw numerical data in ascending order of magnitude.

3) Range: The difference between the largest and the smallest numbers in a data set.

4) Class intervals: In grouped data with classes such as 21-30, 31-40, etc., each class, e.g. 21-30, is called a class interval.

5) Class limits: In the class interval 21-30, the numbers 21 and 30 are called the class limits.

6) Lower class limit (l.c.l): In the class interval 21-30, the lower class limit is 21.

7) Upper class limit (u.c.l): In the class interval 21-30, the upper class limit is 30.

8) Lower and upper class boundaries: In the class interval 21-30, the lower class boundary is 20.5 and the upper class boundary is 30.5. These boundaries assume that, theoretically, measurements for the class interval 21-30 include all the numbers from 20.5 to 30.5.

9) Class width: For the class 21-30, the class width is the difference between the upper class boundary and the lower class boundary, i.e. 30.5 - 20.5 = 10. The class width is also known as the class size, and is sometimes itself referred to as the class interval.

10) Class mark or mid-point: In the class interval 21-30, the class mark is the average of 21 and 30, i.e. (21 + 30)/2 = 25.5.

11) Frequency distributions: Large masses of raw data may be arranged in classes in tabular form with their corresponding frequencies, e.g.

Mass (kg)              10-19  20-29  30-39  40-49
Number of pupils (f)   5      7      10     6

This tabular arrangement is called a frequency distribution or frequency table.

12) Cumulative frequency: For the following frequency distribution, the cumulative frequencies are calculated by adding up the individual frequencies.

Mass (X)                     20-24  25-29    30-34     35-39    40-44
Frequency (f)                4      10       16        8        2
Cumulative frequency (C.F)   4      4+10=14  14+16=30  30+8=38  38+2=40

Hence the cumulative frequency of a value is its frequency plus the frequencies of all smaller values.

The above table is called a cumulative frequency table.

13) Relative frequency distributions: Consider the frequency distribution

Mass (X)        20-24  25-29  30-34  35-39  40-44
Frequency (f)   4      10     16     8      2       (total Σf = 40)

The relative frequency of a class, e.g. 25-29, is the frequency of the class divided by the total frequency of all classes, and is generally expressed as a percentage.

Example:

The relative frequency of the class 25-29 is

\[ \frac{f}{\sum f} \times 100\% = \frac{10}{40} \times 100\% = 25\% \]

Note: the sum of the relative frequencies is 100% or 1.

14) Cumulative frequency curves (ogive)

Mass (X)                     20-24  25-29    30-34     35-39    40-44
Frequency (f)                4      10       16        8        2
Cumulative frequency (C.F)   4      4+10=14  14+16=30  30+8=38  38+2=40

From the above cumulative frequency table, we can draw a graph of cumulative frequency versus the upper class boundaries.

Upper class boundaries    24.5  29.5  34.5  39.5  44.5
Cumulative frequencies    4     14    30    38    40

[Figure: Ogive. Cumulative frequency (vertical axis, 0 to 45) plotted against the upper class limit (horizontal axis, 20 to 45).]

Note: From the cumulative frequency data, the first plotting point is (24.5, 4). If we started our graph at this point, it would be left hanging above the horizontal axis. We therefore create another point, (19.5, 0), as a starting point; 19.5 is the projected upper class boundary of the preceding class.
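The cumulative frequencies, relative frequencies and ogive plotting points described in terms 12 to 14 can also be worked out on a computer. The following is a minimal Python sketch using the mass table above; the variable names are illustrative only and are not part of the module.

```python
from itertools import accumulate

# Frequency distribution from the text: class limits and frequencies.
classes = [(20, 24), (25, 29), (30, 34), (35, 39), (40, 44)]
frequencies = [4, 10, 16, 8, 2]

# Cumulative frequency: running total of the frequencies.
cumulative = list(accumulate(frequencies))

# Relative frequency of each class, as a percentage of the total frequency.
total = sum(frequencies)
relative_percent = [100 * f / total for f in frequencies]

# Ogive plotting points: (upper class boundary, cumulative frequency),
# preceded by a starting point on the horizontal axis.
upper_boundaries = [upper + 0.5 for _, upper in classes]
start = (classes[0][0] - 0.5, 0)
ogive_points = [start] + list(zip(upper_boundaries, cumulative))

print(cumulative)        # running totals of the frequencies
print(relative_percent)  # percentages that add up to 100
print(ogive_points)      # points to plot for the ogive
```

The boundaries are obtained by adding or subtracting 0.5, which assumes whole-number class limits as in the table.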

SHAPES OF FREQUENCY CURVES

Symmetrical or bell-shaped
Has equal frequency to the left and right of the central maximum, e.g. the normal curve.

Skewed to the right (positive skewness)
Has the maximum towards the left and the longer tail to the right.

Skewed to the left (negative skewness)
Has the maximum towards the right and the longer tail to the left.

J-shaped
Has the maximum occurring at the right end.

Reverse J-shaped
Has the maximum occurring at the left end.

Bimodal
Has two maxima.

U-shaped
Has maxima at both ends.

Multimodal
Has more than two maxima.

COMPILED LIST OF COMPULSORY READINGS

Reading # 1: Wolfram MathWorld (visited 06.05.07)

Complete reference : http://mathworld.wolfram.com/Probabilty

Abstract : This reference gives the much needed reading material in probability and

statistics. The reference has a number of illustrations that empower the learner through

different approach methodology.

Wolfram MathWorld is a specialised on-line

mathematical encyclopaedia.

Rationale: It provides the most detailed references to any mathematical topic. Students

should start by using the search facility for the module title. At any point students should

search for key words that they need to understand. The entry should be studied

carefully and thoroughly.

Reading # 2: Wikipedia (visited 06.05.07)

Complete reference : http://en.wikipedia.org/wiki/statistics

Abstract : Wikipedia is an on-line encyclopaedia. It is written by its own readers. It is

extremely up-to-date as entries are continually revised. Also, it has proved to be

extremely accurate. The mathematics entries are very detailed.

Rationale: It gives definitions, explanations, and examples that learners cannot access

in other resources. The fact that wikipedia is frequently updated gives the learner the

latest approaches, abstract arguments, illustrations and refers to other sources to

enable the learner acquire other proposed approaches in Probability and Statistics.

Reading # 3: MacTutor History of Mathematics (visited 03.05.07)

Complete reference : http://www-history.mcs.standrews.ac.uk/Indexes

Abstract : The MacTutor Archive is the most comprehensive history of mathematics on the internet. The resources are organised by historical characters and by historical themes.

Rationale: Students should search the MacTutor archive for key words in the topics

they are studying (or by the module title itself). It is important to get an overview of

where the mathematics being studied fits in to the history of mathematics. When the

student completes the course and is teaching high school mathematics, the characters

in the history of mathematics will bring the subject to life for their students. In particular, the role of women in the history of mathematics should be studied to help students understand the difficulties women have faced while still making an important contribution. Equally, the role of the African continent should be studied to share with students in schools, notably the earliest number counting devices (e.g. the Ishango bone) and the role of Egyptian mathematics.

Compulsory Resources

Resource #1

Maxima.

Complete reference : Copy of Maxima on a disc is accompanying this course

Abstract : The distance learners are occasionally confronted by difficult mathematics

without resources to handle them. The absence of face to face daily lessons with

teachers means that learners can become totally handicapped if not well equipped with

resources to solve their mathematical problems. This handicap is solved by use of

accompanying resource: Maxima.

Rationale: Maxima is an open-source software that can enable learners to solve linear

and quadratic equations, simultaneous equations, integration and differentiation,

perform algebraic manipulations: factorisation, simplification, expansion, etc This

resource is compulsory for learners taking distance learning as it enables them learn

faster using the ICT skills already learnt.

Resource #2 Graph

Complete reference : Copy of Graph on a disc is accompanying this course

Abstract : It is difficult to draw graphs of functions, especially complicated functions,

most especially functions in 3 dimensions. The learners, being distance learners, will

inevitably encounter situations that will need mathematical graphing. This course is

accompanied by a software called Graph to help learners in graphing. Learners

however need to familiarise with the Graph software to be able to use it.

Rationale: Graph is an open-source dynamic graphing software that learners can

access on the given CD. It helps all mathematics learners to graph what would

otherwise be a nightmare for them. It is simple to use once a learner invests time to

learn how to use it. Learners should take advantage of the Graph software because it

can assist the learners in graphing in other subjects during the course and after.

Learners will find it extremely useful when teaching mathematics at secondary school

level.

USEFUL LINKS

Useful Link #1

Title : Wikipedia

URL : http://en.wikipedia.org/wiki/Statistics

Screen capture :

Description: Wikipedia is every mathematician’s dictionary. It is an open-resource that

is frequently updated. Most learners will encounter problems of reference materials from

time to time. Most of the books available cover only parts or sections of Probability and

Statistics. This shortage of reference materials can be overcome through the use of

Wikipedia. It’s easy to access through “Google search”

Rationale: The availability of Wikipedia solves the problem of crucial learning materials

in all branches of mathematics. Learners should have first hand experience of Wikipedia to help them in their learning. It is a very useful free resource that not only

solves student’s problems of reference materials but also directs learners to other

related useful websites by clicking on given icons. Its usefulness is unparalleled.

Useful Link #2

Title : Mathsguru

URL : http://en.wikipedia.org/wiki/Probability

Screen capture :

Description: Mathsguru is a website that helps learners to understand various

branches of number theory module. It is easy to access through Google search and

provides very detailed information on various probability questions. It offers

explanations and examples that learners can understand easily.

Rationale: Mathsguru gives alternative ways of accessing other subject related topics,

hints and solutions that can be quite handy to learners who encounter frustrations of

getting relevant books that help solve learners’ problems in Probability. It gives a helpful

approach in computation of probabilities by looking at the various branches of the

probability module.

Useful Link #3

Title : Mathworld Wolfram

URL : http://mathworld.wolfram.com/Probability

Screen capture :

Description: Mathworld Wolfram is a distinctive website full of Probability solutions. Learners should be able to access this website quite easily through Google search for easy reference. Wolfram also leads learners to other useful websites that cover the same topic to enhance the learners' understanding.

Rationale:Wolfram is a useful site that provides insights in number theory while

providing new challenges and methodology in number theory. The site comes handy in

mathematics modelling and is highly recommended for learners who wish to study

number theory and other branches of mathematics. It gives aid in linking other webs

thereby furnishing learners with a vast amount of information that they need to

comprehend in Probability and Statistics.

UNIT 1 (40 HOURS)

DESCRIPTIVE STATISTICS AND PROBABILITY DISTRIBUTIONS

LEARNING ACTIVITY 1

A curious farmer undertakes the following activities on her farm.

1. She plants 80 tree seedlings on 1st March. She measures the heights of the trees on 1st December.

2. She weighs all the 40 goats on her farm and records the weights in her diary.

3. She records the daily production of eggs from the poultry section.

4. She records the time taken to deliver the milk to the processing plant.

The records are kept as below.

1. Heights of plants in cm

77  76  62  85  63  68  82  67  75  68
74  85  71  53  78  60  81  80  88  73
75  53  95  71  85  74  73  62  75  61
71  68  69  83  95  94  87  78  82  66
60  83  60  68  77  75  75  78  89  96
72  71  76  63  62  78  61  65  67  79
75  53  62  85  93  88  97  79  73  65
93  85  76  76  90  72  57  84  73  86

2. Weights of goats in kg

Weight (kg)    118-126  127-135  136-144  145-153  154-162  163-171  172-180
No. of goats   3        5        9        12       5        4        2

3. Number of laid eggs

Eggs         462  480  498  516  534  552  570  588  606  624
No of days   98   75   56   42   30   21   15   11   6    2

4. Delivery time of milk to processing plant

Time in minutes   90-100  80-89  70-79  60-69  50-59  40-49  30-39
No. of days       9       32     43     21     11     3      1

CASE 1:

A local firm dealing with agriculture extension services visits the farmer. She proudly

produces her records. The agricultural officer is very impressed by her good records

but clearly realises that the farmer needs some skills in data management to enable

her make informed decisions based on her farm outputs.

The agricultural officer designs a short course on data processing for all the rural

farmers.

During the course planning stage, the following terms are defined for lesson one for the farmers.

a) Data: The result of observation, e.g. the heights of tree seedlings.

b) Frequency: Rate of occurrence, e.g. the number of goats weighed.

c) Mean: The average of a data set.

d) Mode: The most frequently occurring value in a data set.

e) Median: In data arranged in ascending order, the median is the term occurring at the middle of the data.

f) Range: The difference between the highest and the lowest values in the data.

LESSON ONE: MEASURES OF DISPERSION

Introduction to Statistics

Descriptive statistics is used to denote any of the many techniques used to

summarize a set of data. In a sense, we are using the data on members of a set to

describe the set. The techniques are commonly classified as:

1. Graphical description in which we use graphs to summarize data.

2. Tabular description in which we use tables to summarize data.

3. Parametric description in which we estimate the values of certain parameters

which we assume to complete the description of the set of data.

In general, statistical data can be described as a list of subjects or units and the data

associated with each of them. We have two objectives for our summary:

1. We want to choose a statistic that shows how different units seem similar.

Statistical textbooks call the solution to this objective, a measure of central

tendency.

2. We want to choose another statistic that shows how they differ. This kind of

statistic is often called a measure of statistical variability.

When we are summarizing a quantity like length or weight or age, it is common to

answer the first question with the arithmetic mean, the median, or the mode.

Sometimes, we choose specific values from the cumulative distribution function called

quartiles.

The most common measures of variability for quantitative data are the variance; its

square root, the standard deviation; the statistical range; interquartile range; and the

absolute deviation.

FARMERS LESSONS

The farmers are taught how to compute the following.

a) Mean or average of data:

Average of data = sum total of the data divided by the number of items in the data.

Example:

Calculate the mean of the following data:

1) 1, 3, 4, 4, 5, 6, 3, 7

Solution:

\[ \text{Mean} = \frac{1 + 3 + 4 + 4 + 5 + 6 + 3 + 7}{8} = \frac{33}{8} = 4.125 \]

2) 650, 675, 700, 725, 800, 900, 1050, 1125, 1200, 575

Solution:

\[ \text{Mean} = \frac{650 + 675 + 700 + 725 + 800 + 900 + 1050 + 1125 + 1200 + 575}{10} = \frac{8400}{10} = 840 \]
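The two mean calculations above can be checked with a few lines of Python. This is only a sketch for practice; the function name `arithmetic_mean` is an illustrative choice, and the last line uses the `mean` function from Python's standard `statistics` module as a cross-check.

```python
from statistics import mean


def arithmetic_mean(values):
    """Sum total of the data divided by the number of items in the data."""
    return sum(values) / len(values)


data_1 = [1, 3, 4, 4, 5, 6, 3, 7]
data_2 = [650, 675, 700, 725, 800, 900, 1050, 1125, 1200, 575]

print(arithmetic_mean(data_1))  # 4.125
print(arithmetic_mean(data_2))  # 840.0
print(mean(data_1))             # same value from the standard library
```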

LESSON TWO

MEAN OF DISCRETE DATA

Example:

1) Find the mean of the following data:

X   22  24  25  33  36  37  41
f   5   7   8   4   6   9   11

Solution:

\[ \text{Mean} = \frac{22(5) + 24(7) + 25(8) + 33(4) + 36(6) + 37(9) + 41(11)}{5 + 7 + 8 + 4 + 6 + 9 + 11} = \frac{1610}{50} = 32.2 \]

2) Find the mean wage of the workers:

Wage in $        220  250  300  350  375
No. of workers   12   15   18   20   5

Solution:

\[ \text{Mean} = \frac{220(12) + 250(15) + 300(18) + 350(20) + 375(5)}{12 + 15 + 18 + 20 + 5} = \frac{20665}{70} \approx 295.214 \]

The mean wage is therefore about $ 295.214.
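For discrete data given with frequencies, the mean is the sum of each value times its frequency, divided by the total frequency. A short Python sketch of this calculation for the wage example is given below; the function name `frequency_mean` is hypothetical and chosen only for illustration.

```python
def frequency_mean(values, frequencies):
    """Mean of discrete data: sum of f*x divided by sum of f."""
    total_fx = sum(x * f for x, f in zip(values, frequencies))
    total_f = sum(frequencies)
    return total_fx / total_f


wages = [220, 250, 300, 350, 375]   # wage in $
workers = [12, 15, 18, 20, 5]       # number of workers earning each wage

mean_wage = frequency_mean(wages, workers)
print(round(mean_wage, 3))  # 295.214
```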

FREQUENCY TABLES AND MEAN OF GROUPED DATA

Example:

The weights of milk deliveries to a processing plant are shown below:

45 49 50 46 48 42 39 47 42 51

48 45 45 41 46 37 46 47 43 33

56 36 42 39 52 46 43 51 46 54

39 47 46 45 35 44 45 46 40 47

a) Using class intervals of 5, tabulate this data in a frequency table.

b) Calculate the mean mass of the milk delivered.

Solution:

a) Frequency / tally table

Class   Tally                    Frequency
33-37   ////                     4
38-42   ///// ///                8
43-47   ///// ///// ///// ////   19
48-52   ///// //                 7
53-57   //                       2
Total                            40

b) Mean of grouped data

Class   Frequency (f)   Mid-point (x)       fx
33-37   4               (33 + 37)/2 = 35    4 × 35 = 140
38-42   8               40                  320
43-47   19              45                  855
48-52   7               50                  350
53-57   2               55                  110
Total   40                                  1775

\[ \text{Mean} = \frac{\sum fx}{\sum f} = \frac{1775}{40} = 44.375 \]
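The tallying and the grouped mean can be reproduced in Python as a check on the table. The sketch below takes the classes as 33-37, 38-42, 43-47, 48-52 and 53-57 (an assumption made so that no value can fall into two classes) and uses illustrative variable names.

```python
# Weights of the 40 milk deliveries listed in the example.
deliveries = [
    45, 49, 50, 46, 48, 42, 39, 47, 42, 51,
    48, 45, 45, 41, 46, 37, 46, 47, 43, 33,
    56, 36, 42, 39, 52, 46, 43, 51, 46, 54,
    39, 47, 46, 45, 35, 44, 45, 46, 40, 47,
]

# Inclusive class limits, each class having a width of 5.
classes = [(33, 37), (38, 42), (43, 47), (48, 52), (53, 57)]

# Tally: count the observations that fall inside each class.
frequencies = [sum(lo <= x <= hi for x in deliveries) for lo, hi in classes]

# Mid-point of each class, then the grouped mean sum(f*x) / sum(f).
midpoints = [(lo + hi) / 2 for lo, hi in classes]
total_fx = sum(f * x for f, x in zip(frequencies, midpoints))
grouped_mean = total_fx / sum(frequencies)

print(frequencies)   # frequency column of the tally table
print(grouped_mean)  # mean estimated from the grouped data
```

Note that the grouped mean is an estimate: it treats every value in a class as if it were equal to the class mid-point.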

DO THIS

Work out the mean of:

1) 63, 65, 67, 68, 69

2)
x      1   2   3  4  5
f(x)   11  10  5  3  1

3)
Weight (x)   4-8  9-13  14-18  19-23  24-28  29-33
Frequency    2    4     7      14     8      5

4) 91, 78, 82, 73, 84

5)
Height (x)   61  64  67  70  73
Frequency    5   18  42  27  8

6)
Weight (x)   30.5-36.5  36.5-42.5  42.5-48.5  48.5-54.5  54.5-60.5
Frequency    4          10         14         27         45

Answer Key:

1) 66.4    2) 2.1     3) 20.6
4) 81.6    5) 67.45   6) 51.44

LESSON THREE

MODE

Example

1) Find the mode of the following data: 1,3,4,4,5,6,1,3,3,2,2,3,3,5

Solution:

The mode of a data is the item that appears most times. In this data, 3 occurs most

times or most frequently i.e. 5 times. Therefore the mode is 3.

2) Find the mode of the following data: 22, 24, 25,22, 27, 22, 25, 30, 25, 31

Solution:

22 and 25 occur three times each. Therefore the modes are 22 and 25. This is called bimodal data.

3) Find the mode of the data:

Observation (X)   0  1  2   3   4
Frequency (f)     3  7  10  16  11

Solution:

The most frequently occurring observation is 3, i.e. 3 occurs 16 times.

4) Find the modal class of the following data:

Weight (X)      50-54  55-59  60-64  65-69  70-74  75-79  80-84
Frequency (f)   3      6      8      5      15     9      13

Solution:

The modal class is 70-74 because it has the highest frequency of occurrence.
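The mode examples above can be verified with a short Python sketch. The helper name `modes` is illustrative; it returns every value that shares the highest count, so bimodal data gives two values.

```python
from collections import Counter


def modes(values):
    """Return all values that occur most often, in ascending order."""
    counts = Counter(values)
    highest = max(counts.values())
    return sorted(v for v, c in counts.items() if c == highest)


print(modes([1, 3, 4, 4, 5, 6, 1, 3, 3, 2, 2, 3, 3, 5]))    # [3]
print(modes([22, 24, 25, 22, 27, 22, 25, 30, 25, 31]))      # [22, 25]

# Modal class of grouped data: the class with the highest frequency.
classes = ["50-54", "55-59", "60-64", "65-69", "70-74", "75-79", "80-84"]
frequencies = [3, 6, 8, 5, 15, 9, 13]
modal_class = classes[frequencies.index(max(frequencies))]
print(modal_class)  # 70-74
```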

DO THIS

Work out the modes or modal classes of the following data:

1) 6, 8, 3, 5, 2, 6, 5, 9, 5

2) 20.4, 20.8, 22.1, 23.4, 19.7, 31.2, 23.4, 20.8, 25.5, 23.4

3)
Weight (x)   4-8  9-13  14-18  19-23  24-28  29-33
Frequency    2    4     7      14     8      5

4)
Weight (x)   30.5-36.5  36.5-42.5  42.5-48.5  48.5-54.5  54.5-60.5
Frequency    4          10         14         27         45

Answer key:

1) 5    2) 23.4    3) 19-23    4) 54.5-60.5

LESSON FOUR

MEDIAN

The median is the value in the middle of a distribution, e.g. in 1, 2, 3, 4, 5 the median is 3, i.e. it comes exactly in the middle of the distribution. For the data 1, 2, 2, 3, 4, 5, 6, 7, 7, 8 there are 10 terms and no middle number. In such a case, the median is the average of the two numbers bordering the centre line, e.g.

1, 2, 2, 3, 4 | 5, 6, 7, 7, 8

Therefore the median is

\[ \frac{4 + 5}{2} = 4.5 \]
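The rule for the median of ungrouped data (the middle term for an odd number of terms, the average of the two middle terms for an even number) can be sketched in Python as follows. The function name `median_of` is an illustrative choice.

```python
def median_of(values):
    """Middle value of the sorted data, or the average of the two middle values."""
    ordered = sorted(values)
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        # Odd number of terms: a single middle term exists.
        return ordered[mid]
    # Even number of terms: average the two terms bordering the centre.
    return (ordered[mid - 1] + ordered[mid]) / 2


print(median_of([1, 2, 3, 4, 5]))                   # 3
print(median_of([1, 2, 2, 3, 4, 5, 6, 7, 7, 8]))    # 4.5
```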

MEDIAN OF GROUPED DATA

Example

Find the median of the following grouped data

Mass (X)        20-24  25-29  30-34  35-39  40-44
Frequency (f)     4     10     16      8      2

Solution:

Σf = 40. Therefore the median is the average of the 20th and 21st terms, i.e. the

(20 + 21)/2 = 20.5th term

Definition: Lower and Upper Limits of a Class.

The Lower Class Limit (L.C.L) or lower class boundary and the Upper Class Limit (U.C.L) or upper class boundary are the lower and upper bounds of a class interval, e.g. the lower and upper limits of the class interval 20-24 are 19.5 and 24.5, and the L.C.L and U.C.L of the class interval 35-39 are 34.5 and 39.5.

Mass (X)               20-24   25-29      30-34       35-39      40-44
Frequency (f)            4      10         16           8          2
Cumulative Frequency     4    4+10=14   14+16=30    30+8=38    38+2=40

Procedure for Calculation of the Median

Step 1: Work out the Cumulative Frequency (C.F).

Step 2: The median (the 20.5th term) occurs in the class interval 30-34, the first class whose C.F reaches 20.5.

Step 3: L.C.L and U.C.L of 30-34 are 29.5 and 34.5.

Step 4: Work out the class interval as U.C.L – L.C.L = 34.5 – 29.5 = 5.

Step 5: To get the 20.5th term:

20.5th term = L.C.L of class with median + (Summation difference / Class frequency) × Class Interval

i.e. Summation difference = 20.5 – 14 = 6.5, where 14 is the C.F of the class interval 25-29.

Step 6: The median = 29.5 + (6.5/16) × 5 = 31.53125.

Note that the denominator 16 is the class frequency in the class interval 30-34.
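The procedure above can be sketched in Python. This is an illustration under stated assumptions: the function name `grouped_median` is hypothetical, the classes are given by their lower and upper class boundaries, and the median position is taken as the (n + 1)/2-th term, following the convention of this example.

```python
def grouped_median(boundaries, frequencies):
    """Interpolate the median of grouped data.

    boundaries  -- list of (L.C.L, U.C.L) pairs, one per class
    frequencies -- list of class frequencies in the same order
    """
    n = sum(frequencies)
    position = (n + 1) / 2            # e.g. the 20.5th term when n = 40
    cumulative = 0                    # C.F of the classes already passed
    for (lower, upper), f in zip(boundaries, frequencies):
        if cumulative + f >= position:
            difference = position - cumulative      # "summation difference"
            return lower + difference / f * (upper - lower)
        cumulative += f
    raise ValueError("frequencies must contain at least one observation")


classes = [(19.5, 24.5), (24.5, 29.5), (29.5, 34.5), (34.5, 39.5), (39.5, 44.5)]
print(grouped_median(classes, [4, 10, 16, 8, 2]))
```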

RANGE OF DATA

The range of a set of data is simply the difference between the highest and the lowest values in the data.

Example: for 23, 26, 34, 47, 63 the range is 63 – 23 = 40, and for 121, 65, 78, 203, 298, 174 the range is 298 – 65 = 233.

LESSON FIVE: MEASURES OF DISPERSION

1)

QUARTILES

Data arranged in order of magnitude can be subdivided into four equal portions, i.e. 25% each. The point occurring at 25% is the lower quartile. The middle or centre point, occurring at 50%, is called the median, while the point occurring at 75% is called the upper quartile. The three points are normally referenced as Q1, Q2, Q3 respectively.

2)

SEMI-INTERQUARTILE RANGE

The semi-interquartile range or the quartile deviation of a set of data is defined as

$$Q = \frac{Q_3 - Q_1}{2}$$

3)

DECILES

If data arranged in order of magnitude is sub-divided into 10 equal portions ( 10% each),

then each portion constitutes a decile. The deciles are denoted by D1, D2, D3,……D9

4)

PERCENTILES

If data arranged in order of magnitude is subdivided into 100 equal portions (1% each), then each portion constitutes a percentile. Percentiles are denoted as P1, P2, P3, …, P99

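THE MEAN DEVIATION

The mean deviation (average deviation) of a set of N numbers X1, X2, X3, …, XN is defined by

$$\text{Mean deviation (MD)} = \frac{\sum_{j=1}^{N} |X_j - \bar{X}|}{N} = \frac{\sum |X - \bar{X}|}{N} = \overline{|X - \bar{X}|},$$

where $\bar{X}$ is the arithmetic mean of the numbers and $|X_j - \bar{X}|$ is the absolute value of the deviation of $X_j$ from $\bar{X}$.

Example:

Find the mean deviation of the set 3, 4, 6, 8, 9.

Solution:

$$\text{Arithmetic mean } \bar{X} = \frac{3 + 4 + 6 + 8 + 9}{5} = \frac{30}{5} = 6$$

$$\text{Mean deviation} = \frac{|3-6| + |4-6| + |6-6| + |8-6| + |9-6|}{5} = \frac{|-3| + |-2| + |0| + |2| + |3|}{5} = \frac{3 + 2 + 0 + 2 + 3}{5} = \frac{10}{5} = 2$$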
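As a quick check of the worked example, the definition can be written as a short Python sketch. The function name `mean_deviation` is an assumption made for this illustration.

```python
def mean_deviation(values):
    """Average absolute deviation of the values from their arithmetic mean."""
    n = len(values)
    mean = sum(values) / n
    return sum(abs(x - mean) for x in values) / n


print(mean_deviation([3, 4, 6, 8, 9]))
```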

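THE MEAN DEVIATION OF GROUPED DATA

For the data

Values        X1  X2  X3  ……  Xm
Frequencies   f1  f2  f3  ……  fm

the mean deviation can be computed as

$$\text{Mean deviation} = \frac{\sum_{j=1}^{m} f_j |X_j - \bar{X}|}{N} = \frac{\sum f |X - \bar{X}|}{N} = \overline{|X - \bar{X}|}, \quad \text{where } N = \sum_{j=1}^{m} f_j.$$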

THE STANDARD DEVIATION

The standard deviation of a set of N numbers X1, X2, X3, …, XN is denoted by s and is defined by:

$$s = \sqrt{\frac{\sum_{j=1}^{N} (X_j - \bar{X})^2}{N}} = \sqrt{\frac{\sum (X - \bar{X})^2}{N}} = \sqrt{\frac{\sum x^2}{N}} = \sqrt{\overline{(X - \bar{X})^2}},$$

where x represents the deviations of the numbers $X_j$ from the mean $\bar{X}$.

It follows that the standard deviation is the root mean square of the deviations from the mean.

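THE STANDARD DEVIATION OF GROUPED DATA

Values        X1  X2  X3  ……  Xm
Frequencies   f1  f2  f3  ……  fm

The standard deviation is calculated as:

$$s = \sqrt{\frac{\sum_{j=1}^{m} f_j (X_j - \bar{X})^2}{N}} = \sqrt{\frac{\sum f (X - \bar{X})^2}{N}} = \sqrt{\frac{\sum f x^2}{N}} = \sqrt{\overline{(X - \bar{X})^2}},$$

where $N = \sum_{j=1}^{m} f_j = \sum f$.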

THE VARIANCE

The variance of a set of data is defined as the square of the standard deviation, i.e. variance = s². We sometimes use s to denote the standard deviation of a sample of a population and σ (the Greek letter sigma) to denote the standard deviation of a population. Thus σ² can represent the variance of a population and s² the variance of a sample of a population.

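EXAMPLES

1) Find the Mean and Range of the following data: 5, 5, 4, 4, 4, 4, 2, 2, 2

Solutions

a) Mean:

$$\bar{x} = \frac{\sum x}{n} = \frac{5 + 5 + 4 + 4 + 4 + 4 + 2 + 2 + 2}{9} = \frac{32}{9} = 3.56$$

or

$$\bar{x} = \frac{\sum f x}{n} = \frac{5(2) + 4(4) + 2(3)}{9} = \frac{32}{9} = 3.56$$

b) Range = 5 – 2 = 3.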

MEDIAN (MIDDLE) OBSERVATION

Example

Given 13 observations

1, 1, 2, 3, 4, 4, 5, 6, 8, 10, 14, 15, 17

The median falls at position

$$\frac{n + 1}{2} = \frac{13 + 1}{2} = \frac{14}{2} = 7,$$

i.e. the 7th position. The median is 5.

If n is odd, the median is the value in position $\frac{n + 1}{2}$. But if n is even, we take the average of the two middle terms.

Example

1, 1, 2, 2, 3, 4, 4, 5, 6, 8, 10, 14, 15, 17

The median = average of the middle two terms = (4 + 5)/2 = 4.5

Median of Grouped Data

When data are grouped, the median is the value at or below which 50% of the observations fall.

DO THIS

Find the median of the following data

1.

1,1,2,2,3,4,5,7,7,7,9

2.

7,8,1,1,9,19,11,2,3,4,8

Group Work

1. Study the computation of the variance and standard deviation from the following example.

Definition

The mean squared deviation from the mean is called the variance:

$$s^2 = \frac{\sum (x - \bar{x})^2}{N}$$

where $x - \bar{x}$ is the deviation from the mean, N is the number of observations, $s^2$ is the variance and $\sqrt{s^2} = s$ is the standard deviation.

Example

Given the data 2, 4, 5, 8, 11, find the variance and the standard deviation.

x            x − x̄      (x − x̄)²
2             −4           16
4             −2            4
5             −1            1
8              2            4
11             5           25
Σx = 30                 Σ(x − x̄)² = 50

So x̄ = 30/5 = 6

Variance = s² = 50/5 = 10

Standard deviation = √10 ≈ 3.16
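The table computation can be reproduced with a short Python sketch. The function names are assumptions made for this illustration, and the divisor is N (not N − 1), matching the definition used in this lesson.

```python
import math


def variance(values):
    """Mean squared deviation from the mean (divisor N)."""
    n = len(values)
    mean = sum(values) / n
    return sum((x - mean) ** 2 for x in values) / n


def standard_deviation(values):
    """Square root of the variance."""
    return math.sqrt(variance(values))


data = [2, 4, 5, 8, 11]
print(variance(data), round(standard_deviation(data), 2))
```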

DO THIS

1) Calculate the range of the data:

1,1,1,2,2,3,3,3,4,5

2) Calculate the variance and the standard deviation: 1,2,3,4,5

SKEWNESS

Definition: Skewness is the degree of departure from symmetry of a distribution. ( Check

positive and negative skewness above)

For skewed distributions, the mean tends to lie on the same side of the mode as the

longer tail.

PEARSON’S FIRST COEFFICIENT OF SKEWNESS

This coefficient is defined as

$$\text{Skewness} = \frac{\text{mean} - \text{mode}}{\text{standard deviation}} = \frac{\bar{X} - \text{mode}}{s}$$

PEARSON’S SECOND COEFFICIENT OF SKEWNESS

This coefficient is defined as:

$$\text{Skewness} = \frac{3(\text{mean} - \text{median})}{\text{standard deviation}} = \frac{3(\bar{X} - \text{median})}{s}$$

QUARTILE COEFFICIENT OF SKEWNESS

This is defined as:

$$\text{Quartile coefficient of skewness} = \frac{(Q_3 - Q_2) - (Q_2 - Q_1)}{Q_3 - Q_1} = \frac{Q_3 - 2Q_2 + Q_1}{Q_3 - Q_1}$$

10-90 PERCENTILE COEFFICIENT OF SKEWNESS

This is defined as:

$$\text{10-90 percentile coefficient of skewness} = \frac{(P_{90} - P_{50}) - (P_{50} - P_{10})}{P_{90} - P_{10}} = \frac{P_{90} - 2P_{50} + P_{10}}{P_{90} - P_{10}}$$

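Example: Find the 25th percentile of the data 1, 2, 3, 4, 5, 6, 7, 9

Position of the 25th percentile = (n + 1) × 0.25 = 9(0.25) = 2.25, i.e. the 2.25th term.

2nd term = 2

3rd term = 3

25th percentile = 2 + 0.25(3 − 2) = 2 + 0.25(1) = 2.25

Find the 50th percentile

Position of the 50th percentile = (8 + 1) × 0.50 = 9(0.5) = 4.5, i.e. the 4.5th term.

4th term = 4

5th term = 5

50th percentile = 4 + 0.5(5 − 4) = 4 + 0.5(1) = 4.5

The (1) is the difference between the two neighbouring terms, e.g. 5 − 4 = 1.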
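The (n + 1)p position rule used in this example can be sketched in Python. The function name `percentile` and the handling of positions that fall outside the data are assumptions made for this illustration; statistical packages often use slightly different interpolation rules.

```python
def percentile(values, p):
    """Percentile by the (n + 1) * p position rule, with 0 < p < 1."""
    ordered = sorted(values)
    n = len(ordered)
    position = (n + 1) * p            # 1-based, possibly fractional
    whole = int(position)             # term just below the position
    fraction = position - whole
    if whole < 1:                     # position before the first term
        return ordered[0]
    if whole >= n:                    # position at or after the last term
        return ordered[-1]
    below, above = ordered[whole - 1], ordered[whole]
    return below + fraction * (above - below)


data = [1, 2, 3, 4, 5, 6, 7, 9]
print(percentile(data, 0.25), percentile(data, 0.50))
```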

Group Work

1. Study the computation of percentiles and attempt

the following question..


DO THIS

Find the 25th percentile, the 50th percentile, and 90th percentile

46,21,89,42,35,36,67,53,42,75,42,75,47,85,40,73,48,32,41,20,75,48,48,32,52,61,49,50,

69,59,30,40,31,25,43,52,62,50

Answer Key

a)

36

b)

48

c)

73

KURTOSIS

Definition: Kurtosis is the degree of peakedness of a distribution, as compared to the

normal distribution.

EXAMPLES:

1)

LEPTOKURTIC DISTRIBUTION

A distribution having a relatively high peak

2)

PLATYKURTIC DISTRIBUTION

A distribution having a relatively flat top

3). MESOKURTIC DISTRIBUTION

A Normal Distribution – not very peaked or flat topped


DO THIS

Find the mode for the data collection:

1)

1,3,4,4,2,3,5,1,3,3,5,4,2,2,2,3,3,4,4,5

2) Number of marriages per 1000 persons in the population of Africa for the years 1965 – 1975

Year   1965  1966  1967  1968  1969  1970  1971  1972  1973  1974  1975
Rate    9.3   9.5   9.7  10.4  10.6  10.6  10.6  10.9  10.8  10.5  10.0

3) Number of deaths per 1000 persons for the years 1960 and 1965 – 1975

Year   1960  1965  1966  1967  1968  1969  1970  1971  1972  1973  1974  1975
Rate    9.5   9.4   9.5   9.4   9.7   9.5   9.5   9.3   9.4   9.3   9.1   8.8

SOLUTIONS

1. 3

2. 10.6

3. 9.5
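Counting how often each observation occurs is easy to automate. The sketch below uses Python's standard `collections.Counter`; the function name `modes` is an assumption made for this illustration, and it returns a list because a data set can have more than one mode.

```python
from collections import Counter


def modes(values):
    """Return every value that occurs with the highest frequency."""
    counts = Counter(values)
    highest = max(counts.values())
    return sorted(v for v, c in counts.items() if c == highest)


exercise_1 = [1, 3, 4, 4, 2, 3, 5, 1, 3, 3, 5, 4, 2, 2, 2, 3, 3, 4, 4, 5]
print(modes(exercise_1))
```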

READ:

1) An Introduction to Probability by Charles M.

Grinstead pages 247 -263

Exercise on pg 263-267 Nos. 4,7,8,9

PROBABILITY

1) Sample Space and Events

Terminology

a) A Probability experiment

When you toss a coin, pick a card from a deck of playing cards, or roll a die, the act constitutes a probability experiment. In a probability experiment, the possible outcomes are well defined, e.g. there are only two possible outcomes in tossing a coin: you get either a head or a tail. For a fair coin, the head and the tail have equal chances of occurrence.

b) An Outcome

This is defined as the result of a single trial of a probability experiment e.g. When you

toss a coin once, you either get head or tail.

c). A trial

This refers to the act of carrying out an experiment once, like picking a card from a deck of cards or rolling a die or dice.

d). Sample Space

This refers to all possible outcomes of a probability experiment. e.g. in tossing a coin,

the outcomes are either Head(H) or tail(T) i.e there are only two possible outcomes in

tossing a coin. The chances of obtaining a head or a tail are equal.

e). Simple and Compound Events

In a probability experiment, an event with only one outcome is called a simple event. If an event has two or more outcomes, it is called a compound event.

2) Definition of Probability.

Probability can be defined as the mathematics of chance. There are mainly four

approaches to probability;

1) The classical or a priori approach

2) The relative frequency or empirical approach

3) The axiomatic approach

4) The personalistic approach

The Classical or A Priori Approach

Probability is the ratio of the number of favourable cases to the total number of equally likely cases. Suppose an event can occur in N ways out of a total of M possible ways. Then the probability of occurrence of the event is denoted by

$$p = \Pr(N) = \frac{N}{M}.$$

Probability refers to the ratio of favourable outcomes to all possible outcomes.

The probability of non-occurrence of the same event is given by {1 − p(occurrence)}. The probability of occurrence plus the probability of non-occurrence is equal to one.

If the probability of occurrence is p(O) and the probability of non-occurrence is p(O’), then p(O) + p(O’) = 1.

Empirical Probability (Relative Frequency Probability)

Empirical probability arises when frequency distributions are used.

For example:

Observation (X)    0    1    2    3    4
Frequency (f)      3    7   10   16   11

The probability that the observation X takes the value 2 is given by the formula

$$P(2) = \frac{\text{frequency of 2}}{\text{sum of frequencies}} = \frac{f(2)}{\sum f} = \frac{10}{3 + 7 + 10 + 16 + 11} = \frac{10}{47}$$
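The relative-frequency calculation can be sketched in Python using exact fractions. The dictionary layout and the function name `empirical_probability` are assumptions made for this illustration.

```python
from fractions import Fraction

# Frequency table from the example: observation -> frequency
frequencies = {0: 3, 1: 7, 2: 10, 3: 16, 4: 11}


def empirical_probability(value, table):
    """Relative frequency of `value` in the frequency table."""
    return Fraction(table[value], sum(table.values()))


print(empirical_probability(2, frequencies))
# The relative frequencies of all observations add up to 1.
print(sum(empirical_probability(x, frequencies) for x in frequencies))
```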

3) Properties of Probability

a) Probability of any event lies between 0 and 1, i.e. 0 ≤ p(O) ≤ 1. It follows that a probability can be neither negative nor greater than 1.

b) Probability of an impossible event ( an event that cannot occur ) is always zero(0)

c) Probability of an event that will certainly occur is 1.

d) The total sum of probabilities of all the possible outcomes in a sample space is

always equal to one(1).

e) If the probability of occurrence is p(o)= A, then the probability of non-occurrence

is 1-A.

COUNTING RULES

1) FACTORIALS

Definition: Factorial 4 ! = 4 x 3 x 2 x 1 and 7! = 7 x 6 x 5 x 4 x 3 x 2 x 1


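2) PERMUTATION RULES

$$\text{Definition: } {}^{n}P_{r} = \frac{n!}{(n - r)!}$$

Examples:

$${}^{5}P_{3} = \frac{5!}{(5 - 3)!} = \frac{5 \times 4 \times 3 \times 2 \times 1}{2 \times 1} = 5 \times 4 \times 3 = 60$$

$${}^{8}P_{5} = \frac{8!}{(8 - 5)!} = \frac{8!}{3!} = \frac{8 \times 7 \times 6 \times 5 \times 4 \times 3 \times 2 \times 1}{3 \times 2 \times 1} = 8 \times 7 \times 6 \times 5 \times 4 = 6720$$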

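3) COMBINATIONS

$$\text{Definition: } {}^{n}C_{r} = \frac{n!}{(n - r)!\, r!}$$

Examples:

$${}^{5}C_{2} = \frac{5!}{(5 - 2)!\, 2!} = \frac{5!}{3!\, 2!} = \frac{5 \times 4 \times 3!}{3! \times 2 \times 1} = \frac{5 \times 4}{2 \times 1} = 10$$

$${}^{10}C_{6} = \frac{10!}{(10 - 6)!\, 6!} = \frac{10!}{4!\, 6!} = \frac{10 \times 9 \times 8 \times 7 \times 6!}{4 \times 3 \times 2 \times 1 \times 6!} = \frac{10 \times 9 \times 8 \times 7}{4 \times 3 \times 2 \times 1} = 210$$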
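The factorial definitions of nPr and nCr translate directly into Python. This is a minimal sketch; the helper names `n_p_r` and `n_c_r` are assumptions made for this illustration and use only `math.factorial` from the standard library.

```python
import math


def n_p_r(n, r):
    """Number of permutations: n! / (n - r)!"""
    return math.factorial(n) // math.factorial(n - r)


def n_c_r(n, r):
    """Number of combinations: n! / ((n - r)! r!)"""
    return math.factorial(n) // (math.factorial(n - r) * math.factorial(r))


print(n_p_r(5, 3), n_p_r(8, 5))    # the two permutation examples
print(n_c_r(5, 2), n_c_r(10, 6))   # the two combination examples
```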

DO THIS

Work out the following;

1) 8P3      2) 8C3     3) 15C10    4) 6C3

5) 15P4     6) 9C3     7) 10C8     8) 7P4

Answer key

1) 336      2) 56      3) 3003     4) 20

5) 32 760   6) 84      7) 45       8) 840

RULES OF PROBABILITY

ADDITION RULES

1) Rule 1: When two events A and B are mutually exclusive, then P(A or B) = P(A) + P(B)

Example: When a die is tossed, find the probability of getting a 3 or a 5.

Solution: P(3) = 1/6 and P(5) = 1/6.

Therefore P(3 or 5) = P(3) + P(5) = 1/6 + 1/6 = 2/6 = 1/3.

2) Rule 2: If A and B are two events that are NOT mutually exclusive, then P(A or B) = P(A) + P(B) − P(A and B), where P(A and B) is the probability of the outcomes that events A and B have in common.

Example: When a card is drawn from a pack of 52 cards, find the probability that the card is a 10 or a heart.

Solution:

P(10) = 4/52 and P(heart) = 13/52

P(10 that is a heart) = 1/52

P(A or B) = P(A) + P(B) − P(A and B) = 4/52 + 13/52 − 1/52 = 16/52 = 4/13.

MULTIPLICATION RULES

1) Rule 1: For two independent events A and B, P(A and B) = P(A) × P(B).

Example: Determine the probability of obtaining a 5 on a die and a tail on a coin in one throw.

Solution: P(5) = 1/6 and P(T) = 1/2.

P(5 and T) = P(5) × P(T) = 1/6 × 1/2 = 1/12.

2) Rule 2: When two events are dependent, the probability of both events occurring is P(A and B) = P(A) × P(B|A), where P(B|A) is the probability that event B occurs given that event A has already occurred.

Example: Find the probability of obtaining two Aces when two cards are drawn from a pack of 52 cards without replacement.

Solution: P(Ace) = 4/52 and P(second Ace if NO replacement) = 3/51

Therefore P(Ace and Ace) = P(Ace) × P(second Ace) = 4/52 × 3/51 = 1/221
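The two card examples can be checked by listing the sample space and counting favourable outcomes. The sketch below is an illustration only; the deck representation and the helper name `probability` are assumptions made for this example.

```python
from fractions import Fraction
from itertools import permutations

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
deck = [(rank, suit) for rank in ranks for suit in suits]   # 52 cards


def probability(event, sample_space):
    """Classical probability: favourable outcomes / all outcomes."""
    favourable = sum(1 for outcome in sample_space if event(outcome))
    return Fraction(favourable, len(sample_space))


# Addition rule example: the card is a 10 or a heart.
p_ten_or_heart = probability(lambda c: c[0] == "10" or c[1] == "hearts", deck)

# Multiplication rule example: two Aces drawn without replacement.
ordered_pairs = list(permutations(deck, 2))                  # 52 x 51 draws
p_two_aces = probability(lambda d: d[0][0] == "A" and d[1][0] == "A",
                         ordered_pairs)

print(p_ten_or_heart, p_two_aces)
```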

CONDITIONAL PROBABILITY

The conditional probability of event A given event B is

$$P(A|B) = \frac{P(A \text{ and } B)}{P(B)},$$

where P(A and B) means the probability of the outcomes that events A and B have in common.

Example: When a die is rolled once, find the probability of getting a 4 given that the number obtained is even.

Solution: P(4 and an even number) = 1/6, i.e. P(A and B) = 1/6. P(even number) = 3/6 = 1/2.

$$P(A|B) = \frac{P(A \text{ and } B)}{P(B)} = \frac{1/6}{1/2} = \frac{1}{3}$$

EXAMPLES:

1) A bag contains 3 orange, 3 yellow and 2 white marbles. Three marbles are selected without replacement. Find the probability of selecting two yellow marbles followed by a white marble.

Solution: P(1st Y) = 3/8, P(2nd Y) = 2/7 and P(W) = 2/6

P(Y and Y and W) = P(Y) × P(Y) × P(W) = 3/8 × 2/7 × 2/6 = 1/28

2) In a class, there are 8 girls and 6 boys. If three students are selected at random for debating, find the probability that all are girls.

Solution: P(1st G) = 8/14, P(2nd G) = 7/13 and P(3rd G) = 6/12.

P(three girls) = 8/14 × 7/13 × 6/12 = 2/13

3) In how many ways can 3 drama officials be selected from 8 members?

Solution: 8C3 = 56 ways.

4) A box has 12 bulbs, of which 3 are defective. If 4 bulbs are sold, find the probability that exactly one will be defective.

Solution:

Number of ways of choosing 1 defective bulb = 3C1 and number of ways of choosing 3 non-defective bulbs = 9C3

$${}^{3}C_{1} \times {}^{9}C_{3} = \frac{3!}{(3 - 1)!\, 1!} \times \frac{9!}{(9 - 3)!\, 3!} = 3 \times 84 = 252$$

Number of ways of choosing 4 bulbs from 12 = 12C4 = 495.

P(1 defective bulb and 3 okay bulbs) = 252/495 = 0.509.
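The bulb example counts favourable selections and divides by all possible selections. A minimal Python sketch of that counting argument is shown below; it assumes Python 3.8 or later for `math.comb`, and the function name `exactly_k_defective` is hypothetical.

```python
from fractions import Fraction
from math import comb


def exactly_k_defective(total, defective, chosen, k):
    """P(exactly k defective items among `chosen` items drawn without replacement)."""
    favourable = comb(defective, k) * comb(total - defective, chosen - k)
    return Fraction(favourable, comb(total, chosen))


p = exactly_k_defective(total=12, defective=3, chosen=4, k=1)
print(p, round(float(p), 3))
```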

DO THIS

1) In how many ways can 7 dresses be displayed in a row on a shelf?

2) In how many ways can 3 pens be selected from 12 pens?

3) From a pack of 52 cards, 3 cards are selected. What is the probability that they will

all be diamonds?

Answer Key:

1). 5040

2). 220

3). 0.013


READ :An Introduction to Probability & Random Processes

By Kenneth B & Gian-Carlo R, pages

1. 1.20 -1.22

Exercise Chapter 1: Sets, Events & Probability Pg

1.23-1.28 Nos. 1-12 & 14-20

2. 2.1-2.33

Exercise Chapter 2: Finite Processes Pg 2.33 Nos.

1,2,3,13-20, 22-27

3. Introduction to Probability, By Charles M. Grinstead

pages139-141

RANDOM VARIABLES

Random Variables ( r.v)

Definition: A random variable is a function that assigns a real number to

every possible result of a random experiment.

(Harry Frank & Steve C Althoen,CUP, 1994, pg 155)

A random variable is a variable in the sense that it can be used as a placeholder for a

number in equations and inequalities. Its randomness is completely described by its

cumulative distribution function which can be used to determine the probability it takes

on particular values.

Formally, a random variable is a measurable function from a probability space to the

real numbers. For example, a random variable can be used to describe the process of

rolling a fair die and the possible outcomes { 1, 2, 3, 4, 5, 6 }. The most obvious

representation is to take this set as the sample space, the probability measure to be

uniform measure, and the function to be the identity function.

Random variable

Some consider the expression random variable a misnomer, as a random variable is not

a variable but rather a function that maps outcomes (of an experiment) to numbers. Let

A be a σ-algebra and Ω the space of outcomes relevant to the experiment being

performed. In the die-rolling example, the space of outcomes is the set Ω = { 1, 2, 3, 4,

5, 6 }, and A would be the power set of Ω. In this case, an appropriate random variable

might be the identity function X(ω) = ω, such that if the outcome is a '1', then the

random variable is also equal to 1. An equally simple but less trivial example is one in

which we might toss a coin: a suitable space of possible outcomes is Ω = { H, T } (for

heads and tails), and A equal again to the power set of Ω. One among the many possible random variables defined on this space is

$$X(\omega) = \begin{cases} 0, & \omega = H \\ 1, & \omega = T \end{cases}$$

Mathematically, a random variable is defined as a measurable function from a sample space to some measurable space.

CONVERGENCE OF RANDOM VARIABLES

In probability theory, there are several notions of convergence for random variables. They are listed below in order of strength, i.e., any subsequent notion of convergence in the list implies convergence according to all of the preceding notions.

Convergence in distribution: As the name implies, a sequence of random variables $X_1, X_2, \ldots$ converges to the random variable $X$ in distribution if their respective cumulative distribution functions $F_1, F_2, \ldots$ converge to the cumulative distribution function $F$ of $X$, wherever $F$ is continuous.

Weak convergence: The sequence of random variables $X_1, X_2, \ldots$ is said to converge towards the random variable $X$ weakly if $\lim_{n \to \infty} P(|X_n - X| \geq \varepsilon) = 0$ for every ε > 0. Weak convergence is also called convergence in probability.

Strong convergence: The sequence of random variables $X_1, X_2, \ldots$ is said to converge towards the random variable $X$ strongly if $P(\lim_{n \to \infty} X_n = X) = 1$. Strong convergence is also known as almost sure convergence.

Intuitively, strong convergence is a stronger version of weak convergence, and in both cases the random variables $X_1, X_2, \ldots$ show an increasing correlation with $X$. However, in the case of convergence in distribution, the realized values of the random variables do not need to converge, and any possible correlation among them is immaterial.

Law of Large Numbers

If a fair coin is tossed, we know that roughly half of the time it will turn up heads, and the

other half it will turn up tails. It also seems that the more we toss it, the more likely it is

that the ratio of heads:tails will approach 1:1. Modern probability allows us to formally

arrive at the same result, dubbed the law of large numbers. This result is remarkable

because it was nowhere assumed while building the theory and is completely an

offshoot of the theory. Linking theoretically-derived probabilities to their actual frequency

of occurrence in the real world, this result is considered as a pillar in the history of

statistical theory.


The strong law of large numbers (SLLN) states that if an event of probability p is observed repeatedly during independent experiments, the ratio of the observed frequency of that event to the total number of repetitions converges towards p strongly (almost surely).

In other words, if $Y_1, Y_2, \ldots$ are independent Bernoulli random variables taking the value 1 with probability p and 0 with probability 1 − p, then the sequence of random numbers $\frac{1}{n}\sum_{i=1}^{n} Y_i$ converges to p almost surely, i.e.

$$P\left(\lim_{n \to \infty} \frac{1}{n}\sum_{i=1}^{n} Y_i = p\right) = 1.$$
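A simulation cannot prove the law of large numbers, but it can illustrate it. The sketch below tosses a simulated fair coin and tracks the running proportion of heads; the function name, the seed and the number of tosses are assumptions chosen for this illustration.

```python
import random


def running_proportion_of_heads(num_tosses, seed=0):
    """Simulate fair coin tosses and return the proportion of heads after each toss."""
    rng = random.Random(seed)         # fixed seed so the run is repeatable
    heads = 0
    proportions = []
    for n in range(1, num_tosses + 1):
        if rng.random() < 0.5:        # a head occurs with probability 1/2
            heads += 1
        proportions.append(heads / n)
    return proportions


proportions = running_proportion_of_heads(100_000)
for n in (10, 100, 1_000, 10_000, 100_000):
    print(n, proportions[n - 1])
```

With more tosses, the printed proportions typically settle closer and closer to 0.5.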

CENTRAL LIMIT THEOREM

The central limit theorem is the reason for the ubiquitous occurrence of the normal

distribution in nature, for which it is one of the most celebrated theorems in probability

and statistics.

The theorem states that the average of many independent and identically distributed random variables tends towards a normal distribution irrespective of which distribution the original random variables follow, provided that distribution has a finite variance. Formally, let $X_1, X_2, \ldots$ be independent, identically distributed random variables with mean $\mu$ and variance $\sigma^2 > 0$. Then the sequence of random variables

$$Z_n = \frac{\sum_{i=1}^{n} (X_i - \mu)}{\sigma \sqrt{n}}$$

converges in distribution to a standard normal random variable.

FUNCTIONS OF RANDOM VARIABLES

If we have a random variable X on Ω and a measurable function f: R → R, then Y = f(X)

will also be a random variable on Ω, since the composition of measurable functions is

also measurable. The same procedure that allowed one to go from a probability space

(Ω, P) to (R, dFX) can be used to obtain the distribution of Y. The cumulative distribution

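function of Y is

$$F_Y(y) = P(f(X) \leq y).$$

Example

Let X be a real-valued, continuous random variable and let Y = X². Then,

$$F_Y(y) = P(X^2 \leq y).$$

If y < 0, then P(X² ≤ y) = 0, so

$$F_Y(y) = 0 \quad \text{if } y < 0.$$

If y ≥ 0, then

$$P(X^2 \leq y) = P(|X| \leq \sqrt{y}) = P(-\sqrt{y} \leq X \leq \sqrt{y}).$$

So

$$F_Y(y) = F_X(\sqrt{y}) - F_X(-\sqrt{y}) \quad \text{if } y \geq 0.$$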

PROBABILITY DISTRIBUTIONS

Certain random variables occur very often in probability theory due to many natural and

physical processes. Their distributions therefore have gained special importance in

probability theory. Some fundamental discrete distributions are the discrete uniform,

Bernoulli, binomial, negative binomial, Poisson and geometric distributions. Important

continuous distributions include the continuous uniform, normal, exponential, gamma

and beta distributions.

DISTRIBUTION FUNCTIONS

If a random variable X: Ω → R defined on the probability space (Ω, A, P) is given, we can ask questions like "How likely is it that the value of X is bigger than 2?". This is the same as the probability of the event {ω ∈ Ω : X(ω) > 2}, which is often written as P(X > 2) for short.

Recording all these probabilities of output ranges of a real-valued random variable X yields the probability distribution of X. The probability distribution "forgets" about the particular probability space used to define X and only records the probabilities of various values of X. Such a probability distribution can always be captured by its cumulative distribution function

$$F_X(x) = P(X \leq x)$$

and sometimes also using a probability density function. In measure-theoretic terms, we

use the random variable X to "push-forward" the measure P on Ω to a measure dF on

R. The underlying probability space Ω is a technical device used to guarantee the


existence of random variables, and sometimes to construct them. In practice, one often

disposes of the space Ω altogether and just puts a measure on R that assigns measure

1 to the whole real line, i.e., one works with probability distributions instead of random

variables.

DISCRETE PROBABILITY THEORY

Discrete probability theory deals with events which occur in countable sample

spaces.

Examples: Throwing dice, experiments with decks of cards, and random walk.

Classical definition: Initially the probability of an event to occur was defined as number

of cases favorable for the event, over the number of total outcomes possible.

For example, if the event is "occurrence of an even number when a die is rolled", the probability is given by 3/6 = 1/2, since 3 faces out of the 6 have even numbers.

Modern definition: The modern definition starts with a set called the sample space, which relates to the set of all possible outcomes in the classical sense, denoted by Ω. It is then assumed that for each element x ∈ Ω, an intrinsic "probability" value f(x) is attached, which satisfies the following properties:

1. $f(x) \in [0, 1]$ for all $x \in \Omega$

2. $\sum_{x \in \Omega} f(x) = 1$

An event is defined as any subset E of the sample space Ω. The probability of the event E is defined as

$$P(E) = \sum_{x \in E} f(x).$$

So, the probability of the entire sample space is 1, and the probability of the null event is 0.

The function f(x) mapping a point in the sample space to the "probability" value is

called a probability mass function abbreviated as pmf. The modern definition does

not try to answer how probability mass functions are obtained; instead it builds a theory

that assumes their existence.
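For a fair die, the modern definition can be illustrated with a probability mass function stored as a dictionary. This is a sketch under stated assumptions: the names `pmf` and `probability_of_event` are hypothetical, and the uniform values 1/6 are the usual model of a fair die.

```python
from fractions import Fraction

# Sample space of one roll of a fair die and its probability mass function.
sample_space = {1, 2, 3, 4, 5, 6}
pmf = {outcome: Fraction(1, 6) for outcome in sample_space}


def probability_of_event(event, mass_function):
    """P(E) is the sum of f(x) over the outcomes x in the event E."""
    return sum((mass_function[x] for x in event), Fraction(0))


even = {2, 4, 6}
print(probability_of_event(even, pmf))           # the "even number" event
print(probability_of_event(sample_space, pmf))   # the entire sample space
print(probability_of_event(set(), pmf))          # the null event
```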


CONTINUOUS PROBABILITY THEORY

Continuous probability theory deals with events which occur in a continuous sample

space.

If the sample space is the real numbers, then a function called the cumulative distribution function or cdf F is assumed to exist, which gives P(X ≤ x) = F(x).

The cdf must satisfy the following properties.

1. F is a monotonically non-decreasing right-continuous function

2. $\lim_{x \to -\infty} F(x) = 0$

3. $\lim_{x \to \infty} F(x) = 1$

If F is differentiable, then the random variable is said to have a probability density function or pdf or simply density

$$f(x) = \frac{dF(x)}{dx}.$$

For a set E ⊆ R, the probability of the random variable being in E is defined as

$$P(X \in E) = \int_{x \in E} dF(x).$$

In case the density exists, then it can be written as

$$P(X \in E) = \int_{x \in E} f(x)\,dx.$$

Whereas the pdf exists only for continuous random variables, the cdf exists for all random variables (including discrete random variables) that take values on R.

These concepts can be generalized for multidimensional cases on $R^n$.

PROBABILITY DENSITY FUNCTION

DISCRETE DISTRIBUTION

If X is a variable that can assume a discrete set of values X1, X2, X3, …, Xk with respective probabilities p1, p2, p3, …, pk, where p1 + p2 + p3 + … + pk = 1, we say that a discrete probability distribution for X has been defined. The function p(X), which has the respective values p1, p2, p3, …, pk for X = X1, X2, X3, …, Xk, is called the probability function, or frequency function, of X. Because X can assume certain values with given probabilities, it is often called a discrete random variable. A random variable is also known as a chance variable or stochastic variable. {Murray R, 2006 pg 130}

CONTINUOUS DISTRIBUTION

Suppose X is a continuous random variable. A continuous random variable X is specified by its probability density function, which is written f(x), where f(x) ≥ 0 throughout the range of values for which x is valid. This probability density function can be represented by a curve, and the probabilities are given by the area under the curve.

[Figure: curve of the density p(X) against X, with the area between x = a and x = b shaded]

The total area under the curve is equal to 1. The area under the curve between the lines x = a and x = b (shaded) gives the probability that X lies between a and b, which can be denoted by P(a < X < b). p(X) is called a probability density function and the variable X is often called a continuous random variable.

Since the total area under the curve is equal to 1, it follows that the probability between a range space a and b is given by

$$P(a \leq X \leq b) = \int_{a}^{b} f(x)\,dx,$$

which is the shaded area.

Note: when computing the area from a to b, we need not distinguish between the inequalities (< and ≤) or (> and ≥). We assume the lines at a and b have no thickness, so their area is zero.

Solved Examples:

1) The continuous random variable X is distributed with probability density function f defined by

f(x) = kx(16 − x²), for 0 < x < 4.

Evaluate:

a). The value of the constant k

b). The probability P(1 < X < 2)

c). The probability P(X ≥ 3)

Solution:

[Figure: curve of f(x) against x between x = a and x = b]

Any function f(x) such that f(x) ≥ 0 for a ≤ x ≤ b and

$$\int_{a}^{b} f(x)\,dx = 1$$

may be taken as the probability density function (p.d.f) of a continuous random variable in the range space a ≤ x ≤ b.

Procedure:

Step 1:

In general, if X is a continuous random variable (r.v) with p.d.f f(x) valid over the range a ≤ x ≤ b, then

$$\int_{\text{all } x} f(x)\,dx = 1, \quad \text{i.e.} \quad \int_{a}^{b} f(x)\,dx = 1$$

Step 2:

a). To determine k, we use the fact that f(x) = kx(16 − x²), for 0 < x < 4, so

$$\int_{0}^{4} kx(16 - x^2)\,dx = 1$$

$$k\int_{0}^{4} (16x - x^3)\,dx = k\left[8x^2 - \frac{x^4}{4}\right]_{0}^{4} = 64k = 1$$

$$k = \frac{1}{64}$$

Step 3:

b). Find P(1 < X < 2)

Solution:

$$P(1 < X < 2) = \int_{1}^{2} f(x)\,dx = \frac{1}{64}\int_{1}^{2} (16x - x^3)\,dx = \frac{81}{256}$$

Step 4:

c). To find P(X ≥ 3):

$$P(X \geq 3) = \frac{1}{64}\int_{3}^{4} (16x - x^3)\,dx = \frac{49}{256}$$
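The three results of this example can be verified with exact arithmetic. The sketch below hard-codes the antiderivative 8x² − x⁴/4 of 16x − x³; the function names are assumptions made for this illustration.

```python
from fractions import Fraction


def antiderivative(x):
    """Antiderivative of 16x - x^3, i.e. 8x^2 - x^4/4, evaluated exactly."""
    x = Fraction(x)
    return 8 * x**2 - x**4 / 4


# The total area under k*(16x - x^3) on 0 < x < 4 must equal 1.
k = 1 / (antiderivative(4) - antiderivative(0))


def probability(a, b):
    """P(a < X < b) for the density f(x) = k*x*(16 - x^2)."""
    return k * (antiderivative(b) - antiderivative(a))


print(k, probability(1, 2), probability(3, 4))
```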

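Example 2:

2). X is the continuous random variable ‘the mass of a substance, in kg, per minute in an industrial production process’, where

$$f(x) = \begin{cases} \frac{1}{18}x(6 - x) & (0 \leq x \leq 3) \\ 0 & \text{otherwise} \end{cases}$$

Find the probability that the mass is more than 2 kg.

Solution:

X can take values from 0 to 3 only. We sketch f(x), and shade the area required.

[Figure: sketch of f(x) = (1/18)x(6 − x) for 0 ≤ x ≤ 3, with the area between x = 2 and x = 3 shaded]

$$P(X > 2) = \int_{2}^{3} \frac{1}{18}x(6 - x)\,dx = \frac{1}{18}\int_{2}^{3} (6x - x^2)\,dx = \frac{1}{18}\left[3x^2 - \frac{x^3}{3}\right]_{2}^{3} = \frac{1}{18} \cdot \frac{26}{3} = 0.481 \ (3\ \text{d.p.})$$

The probability that the mass is more than 2 kg is 0.481.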

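Worked example:

3). A continuous random variable has p.d.f f(x) where f(x) = kx², 0 ≤ x ≤ 6.

a). Find the value of k

b). Find P(2 ≤ X ≤ 4)

Solution:

a). Since X is a random variable the total probability is 1, i.e.

$$\int_{\text{all } x} f(x)\,dx = 1$$

$$\int_{0}^{6} kx^2\,dx = 1$$

$$k\left[\frac{x^3}{3}\right]_{0}^{6} = 1$$

$$\frac{216k}{3} = 1$$

$$k = \frac{3}{216} = \frac{1}{72}$$

Therefore $f(x) = \frac{3}{216}x^2 = \frac{1}{72}x^2$, 0 ≤ x ≤ 6.

b).

[Figure: sketch of f(x) = x²/72 for 0 ≤ x ≤ 6, with the area between x = 2 and x = 4 shaded]

$$P(2 \leq X \leq 4) = \int_{2}^{4} \frac{1}{72}x^2\,dx = \left[\frac{x^3}{216}\right]_{2}^{4} = \frac{64 - 8}{216} = 0.259$$

Therefore the probability P(2 ≤ X ≤ 4) = 0.259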

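Worked Example:

4). The continuous random variable (r.v) X has a probability density function (p.d.f) f(x) where

$$f(x) = \begin{cases} k & 0 \leq x < 2 \\ k(2x - 3) & 2 \leq x \leq 5 \\ 0 & \text{otherwise} \end{cases}$$

a). Find the value of the constant k

b). Sketch y = f(x)

c). Find P(X ≤ 1)

d). Find P(X > 2.5)

Solution:

a). Since X is a r.v, then

$$\int_{\text{all } x} f(x)\,dx = 1$$

Therefore

$$\int_{0}^{2} k\,dx + \int_{2}^{5} k(2x - 3)\,dx = 1$$

$$\left[kx\right]_{0}^{2} + k\left[x^2 - 3x\right]_{2}^{5} = 1$$

$$2k + 12k = 1$$

$$k = \frac{1}{14}$$

b). So the p.d.f of X is

$$f(x) = \begin{cases} \frac{1}{14} & 0 \leq x < 2 \\ \frac{1}{14}(2x - 3) & 2 \leq x \leq 5 \\ 0 & \text{otherwise} \end{cases}$$

SKETCH

[Figure: sketch of y = f(x): a horizontal line at height 1/14 for 0 ≤ x < 2, then a straight line rising from 1/14 at x = 2 to 1/2 at x = 5]

c). P(X ≤ 1) = area between 0 and 1 = L × W = 1 × 1/14 = 1/14 = 0.071

d). P(X > 2.5) = 1 − P(X ≤ 2.5) = 1 − (area of rectangle + area of trapezium)

$$= 1 - \left[\left(\frac{1}{14} \times 2\right) + \frac{1}{2}(0.5)\left(\frac{1}{14} + \frac{2}{14}\right)\right] = 1 - \frac{11}{56} = \frac{45}{56} = 0.804$$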

REFLECTION: It is important for Mathematics teachers to learn how to use ICT in drawing graphs. The link below opens up an avenue for Maths teachers to learn how to draw graphs using free software.

http://www.chartwellyorke.com/

DO THIS

1). The continuous random variable X has p.d.f f(x) where f(x) = k, 0 ≤ x ≤ 3.

a). Sketch y = f(x)

b). Find the value of the constant k

c). Find P(0.5 ≤ X ≤ 1)

2). The continuous random variable X has p.d.f f(x) where f(x) = kx², 1 ≤ x ≤ 4.

a). Find the value of the constant k

b). Find P(X ≥ 2)

c). Find P(2.5 ≤ X ≤ 3.5)

3). The continuous random variable X has p.d.f f(x) where

$$f(x) = \begin{cases} k & 0 \leq x < 2 \\ k(2x - 1) & 2 \leq x \leq 3 \\ 0 & \text{otherwise} \end{cases}$$

a). Find the value of the constant k

b). Sketch y = f(x)

c). Find P(X ≤ 2)

d). Find P(1 ≤ X ≤ 2.2)

EXPECTATION

Definition:

If X is a continuous random variable (r.v) with probability density function (p.d.f) f(x), then the expectation of X is E(X) where

$$E(X) = \int_{\text{all } x} x f(x)\,dx$$

NB: E(X) is often denoted by μ and referred to as the mean of X

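Example:

1). If X is a continuous random variable (r.v) with p.d.f

$$f(x) = \frac{1}{9}x^2, \quad 0 \leq x \leq 3,$$

find E(X).

Solution:

$$E(X) = \int_{\text{all } x} x f(x)\,dx = \int_{0}^{3} x \cdot \frac{1}{9}x^2\,dx = \frac{1}{9}\left[\frac{x^4}{4}\right]_{0}^{3} = \frac{81}{36} = 2.25$$

2). If the continuous random variable X has p.d.f

$$f(x) = \frac{3}{4}(3 - x)(x - 1), \quad 1 \leq x \leq 3,$$

find E(X).

$$E(X) = \int_{\text{all } x} x f(x)\,dx = \int_{1}^{3} x \cdot \frac{3}{4}(3 - x)(x - 1)\,dx = \frac{3}{4}\int_{1}^{3} (-x^3 + 4x^2 - 3x)\,dx$$

$$= \frac{3}{4}\left[-\frac{x^4}{4} + \frac{4x^3}{3} - \frac{3x^2}{2}\right]_{1}^{3} = \frac{3}{4} \cdot \frac{8}{3} = 2$$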

GENERALISATION:

If g(x) is any function of the continuous random variable (r.v) X having p.d.f f(x), then

$$E[g(X)] = \int_{\text{all } x} g(x) f(x)\,dx$$

and in particular

$$E(X^2) = \int_{\text{all } x} x^2 f(x)\,dx$$

The following conclusions hold, where a and b are constants:

1. E(a) = a

2. E(aX) = aE(X)

3. E(aX + b) = aE(X) + b

4. E[f1(X) + f2(X)] = E[f1(X)] + E[f2(X)]

Example:

1). The continuous random variable X has p.d.f f(x) where f(x) = (2/9)x, 0 ≤ x ≤ 3.

Find

a). E(X)

b). E(X²)

c). E(2X + 3)

Solution:

a)

$$E(X) = \int_{\text{all } x} x f(x)\,dx = \int_{0}^{3} \frac{2}{9}x^2\,dx = \frac{2}{9}\left[\frac{x^3}{3}\right]_{0}^{3} = 2$$

b)

$$E(X^2) = \int_{\text{all } x} x^2 f(x)\,dx = \int_{0}^{3} \frac{2}{9}x^3\,dx = \frac{2}{9}\left[\frac{x^4}{4}\right]_{0}^{3} = \frac{81}{18} = 4.5$$

c). E(2X + 3) = E(2X) + 3

= 2E(X) + 3

= 2(2) + 3

= 7 (using E(X) from (a) above)
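The rules E(aX + b) = aE(X) + b and E[g(X)] = ∫ g(x) f(x) dx can be checked with exact polynomial integration. The sketch below is an illustration only: it uses a hypothetical density f(x) = 3x² on 0 ≤ x ≤ 1 (not one of the worked examples), and the helper names are assumptions made for this example.

```python
from fractions import Fraction


def integrate_poly(coeffs, a, b):
    """Exact integral over [a, b] of the polynomial sum(coeffs[i] * x**i)."""
    a, b = Fraction(a), Fraction(b)
    return sum(Fraction(c) * (b ** (i + 1) - a ** (i + 1)) / (i + 1)
               for i, c in enumerate(coeffs))


def expectation(pdf_coeffs, a, b, power=1):
    """E(X**power): multiplying the density by x**power shifts its coefficients."""
    return integrate_poly([0] * power + list(pdf_coeffs), a, b)


pdf = [0, 0, 3]                                   # hypothetical f(x) = 3x^2 on [0, 1]
total = integrate_poly(pdf, 0, 1)                 # must be 1 for a valid p.d.f
mean = expectation(pdf, 0, 1)                     # E(X)
mean_square = expectation(pdf, 0, 1, power=2)     # E(X^2)

# E(2X + 3) computed directly: (2x + 3) * 3x^2 = 9x^2 + 6x^3
direct = integrate_poly([0, 0, 9, 6], 0, 1)
print(total, mean, mean_square, direct, 2 * mean + 3)
```

The last two printed values agree, which is rule 3 with a = 2 and b = 3.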

DO THIS

1). The continuous random variable X has p.d.f f(x) where

$$f(x) = \begin{cases} kx & 0 \leq x < 1 \\ k & 1 \leq x < 3 \\ k(4 - x) & 3 \leq x \leq 4 \\ 0 & \text{otherwise} \end{cases}$$

a). Find k

b). Calculate E(X)

2). The continuous random variable X has p.d.f f(x) where f(x) = (1/10)(x + 3), 0 ≤ x ≤ 5.

a). Find E(X)

b). Find E(2X + 4)

c). Find E(X²)

d). Find E(X² + 2X − 1)

BERNOULLI DISTRIBUTION

In probability theory and statistics, the Bernoulli distribution, named after Swiss

scientist Jakob Bernoulli, is a discrete probability distribution, which takes value 1 with

success probability p and value 0 with failure probability q = 1 − p. So if X is a random

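variable with this distribution, we have:

$$P(X = 1) = 1 - P(X = 0) = p = 1 - q.$$

The probability mass function f of this distribution is

$$f(k; p) = \begin{cases} p & \text{if } k = 1, \\ 1 - p & \text{if } k = 0, \\ 0 & \text{otherwise.} \end{cases}$$

The expected value of a Bernoulli random variable X is E(X) = p, and its variance is

$$\operatorname{var}(X) = p(1 - p) = pq.$$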

The excess kurtosis goes to infinity for high and low values of p, but for p = 1/2 the Bernoulli distribution has a lower excess kurtosis than any other probability distribution, namely −2.

The Bernoulli distribution is a member of the exponential family.

BINOMIAL DISTRIBUTION

In probability theory and statistics, the binomial distribution is the discrete probability

distribution of the number of successes in a sequence of n independent yes/no

experiments, each of which yields success with probability p. Such a success/failure

experiment is also called a Bernoulli experiment or Bernoulli trial. In fact, when n = 1,

the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis

for the popular binomial test of statistical significance.

Examples

An elementary example is this: roll a die ten times and count the number of 1s as

outcome. Then this random number follows a binomial distribution with n = 10 and p =

1/6.

For example, assume 5% of the population is green-eyed. You pick 500 people

randomly. The number of green-eyed people you pick is a random variable X which

follows a binomial distribution with n = 500 and p = 0.05 (when picking the people with

replacement).


EXAMPLES:

1) A coin is tossed 3 times. Find the probability of getting 2 heads and a tail in any order.

FORMULA:

We can use the formula

$$P(x) = {}^{n}C_{x}\, p^{x} (1 - p)^{n - x}$$

where n = the total number of trials

x = the number of successes (0, 1, 2, …, n)

p = the probability of a success.

1st) nCx determines the number of ways x successes can occur in n trials.

2nd) p^x is the probability of getting x successes and

3rd) (1 − p)^(n − x) is the probability of getting n − x failures

Solution:

Tossing 3 times means n = 3

Two heads means x = 2

P(H) = 1/2; P(T) = 1/2

$$P(2\ \text{heads}) = {}^{3}C_{2} \left(\frac{1}{2}\right)^{2} \left(1 - \frac{1}{2}\right)^{3 - 2} = 3 \cdot \frac{1}{4} \cdot \frac{1}{2} = \frac{3}{8}$$
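The binomial formula can be sketched in Python with exact fractions. This is a minimal illustration, assuming Python 3.8 or later for `math.comb`; the function name `binomial_probability` is hypothetical.

```python
from fractions import Fraction
from math import comb


def binomial_probability(n, x, p):
    """P(exactly x successes in n independent trials with success probability p)."""
    p = Fraction(p)
    return comb(n, x) * p**x * (1 - p) ** (n - x)


# Two heads in three tosses of a fair coin.
print(binomial_probability(3, 2, Fraction(1, 2)))
# Exactly one 5 in three rolls of a die.
print(binomial_probability(3, 1, Fraction(1, 6)))
```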

DO THIS

1) Find the probability of exactly one 5 when a die is rolled 3 times

2) Find the probability of getting 3 heads when 8 coins are tossed.

3) A bag contains 4 red and 2 green balls. A ball is drawn and replaced 4 times. What

is the probability of getting exactly 3 red balls and 1 green ball.

Answer:

1). n = 3, x = 1, p = 1/6, so

$$P(\text{one } 5) = {}^{3}C_{1} \left(\frac{1}{6}\right)^{1} \left(\frac{5}{6}\right)^{2} = \frac{25}{72} = 0.347$$

1

1

2).