COMBINING ENGINEERING AND STATISTICAL DESIGN


REPRINTED from Reliability Review, The R & M Engineering Journal, Vol. 23#2, June 2003.

PROBABILISTIC ENGINEERING DESIGN

Trevor A. Craney

INTRODUCTION

Engineering design relies heavily on complex computer code such as finite element or finite difference

analysis. These complex codes can be extremely time-consuming even with the computing power available today.

Deterministic design employs the idea of either: (a) running this code with input variables at their worst case values,

or (b) running this code with input variables at their nominal values and applying a safety factor to the final result of

the output variable. In the generic sense of modeling a typical response like stress, the result of using either of these

methods is unknown. Assuming the input distributions are correct, applying worst case scenarios is too

conservative. Applying safety factors to a nominal solution can result in either too much or too little conservatism

with no method to compute risk or probability of occurrence.

Probabilistic engineering design relies on statistical distributions applied to the input variables to assess

reliability, or probability of failure, in the output variable by specifying a design point. Any response value passing

beyond this design point (also referred to as the most probable point, or MPP) is considered in the failure region.

This method also allows for reverse calculations such that a specific probability of failure can be specified for the

response. The MPP is then determined by calculating the response value that yields the specified probability of

failure. This concept of designing to reliability instead of designing to nominal is clearly a superior method for

engineering design. By choosing a desired reliability from a distribution on the response, a probabilistic risk

assessment is built into the design process.

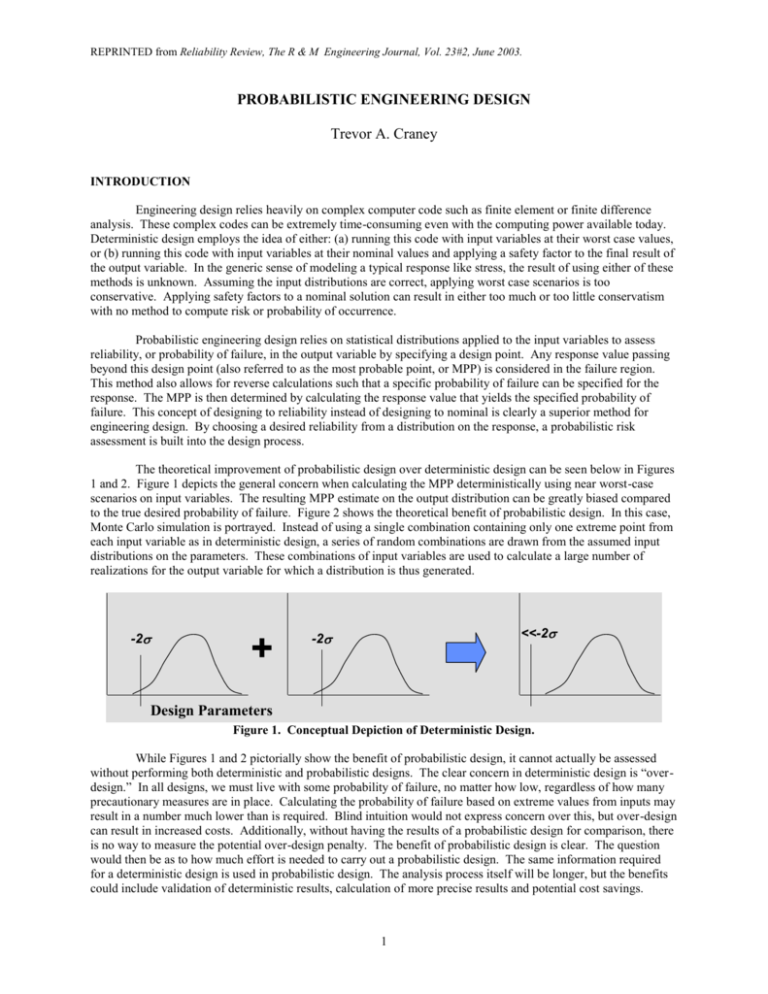

The theoretical improvement of probabilistic design over deterministic design can be seen below in Figures

1 and 2. Figure 1 depicts the general concern when calculating the MPP deterministically using near worst-case

scenarios on input variables. The resulting MPP estimate on the output distribution can be greatly biased compared

to the true desired probability of failure. Figure 2 shows the theoretical benefit of probabilistic design. In this case,

Monte Carlo simulation is portrayed. Instead of using a single combination containing only one extreme point from

each input variable as in deterministic design, a series of random combinations are drawn from the assumed input

distributions on the parameters. These combinations of input variables are used to calculate a large number of

realizations for the output variable for which a distribution is thus generated.

[Figure 1 graphic not reproduced. Recoverable label: Design Parameters.]

Figure 1. Conceptual Depiction of Deterministic Design.

While Figures 1 and 2 pictorially show the benefit of probabilistic design, the size of that benefit cannot actually be assessed

without performing both deterministic and probabilistic designs. The clear concern in deterministic design is “over-design.” In all designs, we must live with some probability of failure, no matter how low, regardless of how many

precautionary measures are in place. Calculating the probability of failure based on extreme values from inputs may

result in a number much lower than is required. Blind intuition would not express concern over this, but over-design

can result in increased costs. Additionally, without having the results of a probabilistic design for comparison, there

is no way to measure the potential over-design penalty. The benefit of probabilistic design is clear. The question

would then be as to how much effort is needed to carry out a probabilistic design. The same information required

for a deterministic design is used in probabilistic design. The analysis process itself will be longer, but the benefits

could include validation of deterministic results, calculation of more precise results and potential cost savings.


[Figure 2 graphic not reproduced. Recoverable labels: Desired Risk of Failure; Random Samples.]

Figure 2. Conceptual Depiction of Probabilistic Design.

The remainder of this paper will describe the Monte Carlo simulation (MCS) and response surface

methodology (RSM) approaches to probabilistic design. The RSM approach will include experimental design

selection, model checking based on statistical and engineering criteria, and output analysis. Useful guidelines are

given to ascertain the statistical validity of the model and the results.

MONTE CARLO SIMULATION

Once the input variable distributions have been determined, Monte Carlo simulation follows quite easily.

A large number of random samples are drawn and run through the complex design code. The resulting realizations

create a distribution of the response variable. The response can be described by its empirical distribution, or a

parametric distribution can be fitted to it. An advantage of fitting a parametric distribution is that specific probabilities of

interest requiring extrapolation can be estimated. For instance, if one uses 1000 random samples, it would be

impossible to estimate the 0.01th percentile empirically. A parametric distribution would be able to estimate this

value. It would be up to the analyst as to whether the extrapolation is too far beyond the range of the data to

consider trustworthy. Another advantage of fitting a parametric distribution is for ease of implementation in

additional analyses. If a design engineer probabilistically modeled stress as a response and wished to then use stress

as an input distribution to calculate life, programming and calculations would be simplified by using a functional

distribution. Figure 3 shows the flowchart required for MCS.

[Figure 3 flowchart not reproduced. Steps: generate random values for the life drivers X1, ..., Xp; evaluate the life equation or design code Y = f(X1, X2, ..., Xp); iterate n times to obtain Y1, ..., Yn; fit a distribution to the output/design variable Y.]

Figure 3. Flowchart for MCS Probabilistic Design Method.
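The MCS loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `design_code` is a hypothetical stand-in (stress = load / area) for an expensive finite element run, the two normal input distributions are invented for the example, and a normal distribution is assumed for the fitted response. It shows why a fitted distribution is needed for the 0.01th percentile when only 1000 realizations are available.

```python
import random
import statistics

def design_code(load, area):
    """Hypothetical stand-in for an expensive design code: stress = load / area."""
    return load / area

def run_mcs(n_runs, seed=0):
    """Draw random inputs and push each combination through the design code."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_runs):
        load = rng.gauss(1000.0, 50.0)  # assumed input distribution
        area = rng.gauss(2.0, 0.05)     # assumed input distribution
        results.append(design_code(load, area))
    return results

stresses = run_mcs(1000)

# Fit a parametric (here: normal, by assumption) distribution to the response.
fitted = statistics.NormalDist.from_samples(stresses)

# The 0.01th percentile corresponds to a cumulative probability of 0.0001,
# which 1000 samples cannot resolve empirically (the smallest step is 1/1000).
p = 0.0001
parametric_estimate = fitted.inv_cdf(p)
smallest_observed = min(stresses)

print(f"smallest observed stress: {smallest_observed:.2f}")
print(f"fitted 0.01th percentile: {parametric_estimate:.2f}")
```

Whether the extrapolated value is trustworthy remains the analyst's call, as noted in the text; the sketch only shows the mechanics.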

While the MCS method is simple, it is also very expensive. As mentioned, the design code can be quite

time consuming. It is not uncommon for a single finite element analysis run required to produce a single

observation to take as long as 10, 20 or 30 minutes. At ten minutes, one thousand simulated realizations would

require just under a full 7-day week to complete. Even at just 30 seconds per run, a simulation of 1000 runs would

require more than eight hours to complete. The MCS method will produce a distribution of the response that can

essentially be viewed as the truth, but the penalty for demanding many runs is severe.
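The run-time figures quoted above follow from simple arithmetic, which can be checked with a short calculation. The helper name `total_runtime_hours` is introduced here only for illustration.

```python
def total_runtime_hours(n_runs, minutes_per_run):
    """Total wall-clock time in hours for sequential design code runs."""
    return n_runs * minutes_per_run / 60.0

hours_at_10_min = total_runtime_hours(1000, 10.0)  # 1000 runs at 10 minutes each
days_at_10_min = hours_at_10_min / 24.0            # just under 7 days
hours_at_30_sec = total_runtime_hours(1000, 0.5)   # 1000 runs at 30 seconds each

print(f"{days_at_10_min:.2f} days at 10 minutes per run")
print(f"{hours_at_30_sec:.2f} hours at 30 seconds per run")
```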

RESPONSE SURFACE METHODOLOGY

While MCS will produce a true distribution of the response, the cost of using that method is clearly in the

number of runs required for the design code. An alternative is to estimate the complex design code with a linear


model using a small number of runs at specified locations of the input variables. From this linear model, a

simulation of 1000 or 10,000 realizations would run in a matter of seconds on an average computer. The linear

models used here are second-order regression models, also referred to as response surface models. A response

surface model for k input variables can be stated as

$$
y = \beta_0 + \sum_{i=1}^{k} \beta_i X_i + \sum_{i<j} \beta_{ij} X_i X_j + \sum_{i=1}^{k} \beta_{ii} X_i^2 ,
$$

where $y$ is the response variable, the $X_i$ are the input variables, and the $\beta$'s are the coefficients to be estimated. The idea

is sound as long as a second-order model can sufficiently estimate the design code. Figure 4 shows a basic

flowchart of this method that is comparable to the flowchart for MCS.

[Figure 4 flowchart not reproduced. Steps: build the input variable matrix of n designed runs with coded levels (-1, 0, +1) for X1, ..., Xp; run the design code/software (e.g., FEM, thermal model) with inputs X1 ... Xp to obtain outputs Y1, ..., Yn; fit the regression equation approximation of the design code, Y = f(X1, X2, ..., Xp); generate random values for the life drivers and evaluate the regression equation, iterating n times; fit a distribution to the output/design variable Y.]

Figure 4. Flowchart for RSM Probabilistic Design Method
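A minimal sketch of the RSM workflow, assuming NumPy is available, is given below. The function `design_code` is a hypothetical stand-in that happens to be exactly quadratic, so the least-squares fit recovers it exactly; a real design code would leave residuals that must be checked. The 3-level full factorial in two coded variables and the normal input distributions (standard deviation 1/3 in coded units) are assumptions made only for the example.

```python
import itertools
import numpy as np

def quadratic_terms(X):
    """Model matrix columns: 1, X_i, X_i*X_j (i<j), X_i^2 for an (n, k) array."""
    n, k = X.shape
    cols = [np.ones(n)]
    cols += [X[:, i] for i in range(k)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations(range(k), 2)]
    cols += [X[:, i] ** 2 for i in range(k)]
    return np.column_stack(cols)

def design_code(X):
    """Hypothetical stand-in for the expensive design code (coded inputs)."""
    x1, x2 = X[:, 0], X[:, 1]
    return 100.0 + 8.0 * x1 - 5.0 * x2 + 3.0 * x1 * x2 + 2.0 * x2 ** 2

# Designed experiment: 3-level full factorial in two variables (9 runs).
design = np.array(list(itertools.product([-1.0, 0.0, 1.0], repeat=2)))
y = design_code(design)  # the only calls to the expensive code

# Fit the second-order response surface model by least squares.
beta, *_ = np.linalg.lstsq(quadratic_terms(design), y, rcond=None)

# Simulate from the cheap surrogate instead of the design code.
rng = np.random.default_rng(0)
samples = rng.normal(0.0, 1.0 / 3.0, size=(10_000, 2))  # assumed input distributions
y_sim = quadratic_terms(samples) @ beta

print("coefficients:", np.round(beta, 6))
print("99.9th percentile of simulated response:", np.percentile(y_sim, 99.9))
```

The 10,000 surrogate evaluations cost a single matrix product, which is the point of the method: the design code itself is run only at the nine design points.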

Determination of a response surface model is done by setting up a designed experiment for the input

variables. Designed experiments are used to minimize the number of runs required to model combinations of all the

input variables across their ranges to be able to estimate a second-order model. Typical experimental designs used

for RSM are Box-Behnken designs (BBD), central composite designs (CCD), and 3-level full factorial designs

(FF3). The BBD and FF3 each require three levels (points) to be sampled across the range of each variable in

creating the sampling combinations. The CCD uses five levels. The FF3 design matrix has orthogonal columns of

data. The BBD and CCD are each near-orthogonal, with the degree of non-orthogonality changing based on the

number of variables in the study. An additional design of use is the fractional CCD with at least resolution V. A

CCD can be described as a 2-level full factorial design combined with axial runs and a center point. If the factorial

portion is fractionated, the design requires fewer runs. The interested reader is directed to Myers and Montgomery

(1995) for a thorough explanation of all of the designs and design properties mentioned above.

One criterion for selecting a design is the number of runs required. Table 1 shows the number of runs

required for each of the four designs specified versus the number of input variables being investigated in the model.

Note that the fractional CCD does not exist at the minimum resolution V requirement until at least five input

variables are used. Hence, the number of runs required for the fractional CCD is left blank for those three cases in


the table. In addition, the BBD does not exist for only two variables and has gaps in its existence due to its method

of construction. If k is the number of input variables in the design, then a FF3 design requires $3^k$ runs. A CCD

requires $2^k + 2k + 1$ runs. The formula for the number of runs required in a BBD changes based on the number of

input variables. It is clear from the described problem with MCS that a FF3 design is generally infeasible. This is

also true for a full CCD by the time the number of input variables hits double digits.

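| # Input Variables | Box-Behnken | Fractional CCD (min. Res. V) | CCD | 3-Level Full Factorial |
|---|---|---|---|---|
| 2 |  |  | 9 | 9 |
| 3 | 13 |  | 15 | 27 |
| 4 | 25 |  | 25 | 81 |
| 5 | 41 | 27 | 43 | 243 |
| 6 | 49 | 45 | 77 | 729 |
| 7 | 57 | 79 | 143 | 2187 |
| 8 |  | 81 | 273 | 6561 |
| 9 | 121 | 147 | 531 | 19,683 |
| 10 | 161 | 149 | 1045 | 59,049 |
| 11 | 177 | 151 | 2071 | 177,147 |
| 12 | 193 | 281 | 4121 | 531,441 |

Table 1. Number of Runs Required by Type of Design.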
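The run-count formulas for the FF3 and CCD columns of Table 1 can be checked with a short script. The fraction exponents in `ASSUMED_RES_V_FRACTION` are not stated in the text; they are inferred here from the fractional CCD run counts in Table 1 (for example, 27 = 2^(5-1) + 2(5) + 1) and should be treated as an assumption. Box-Behnken counts are omitted because the text gives no single formula for them.

```python
def runs_ff3(k):
    """3-level full factorial: 3^k runs."""
    return 3 ** k

def runs_ccd(k, q=0):
    """CCD: 2^(k-q) factorial points + 2k axial points + 1 center point.

    q = 0 gives the full CCD; q > 0 fractionates the factorial portion.
    """
    return 2 ** (k - q) + 2 * k + 1

# Assumed fraction exponent q per number of inputs (inferred from Table 1).
ASSUMED_RES_V_FRACTION = {5: 1, 6: 1, 7: 1, 8: 2, 9: 2, 10: 3, 11: 4, 12: 4}

# k -> (fractional CCD runs or None, full CCD runs, FF3 runs)
table = {}
for k in range(2, 13):
    q = ASSUMED_RES_V_FRACTION.get(k)
    fractional = runs_ccd(k, q) if q is not None else None
    table[k] = (fractional, runs_ccd(k), runs_ff3(k))

for k, row in table.items():
    print(k, row)
```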

The number of runs required should not be the only concern in selecting a design. Another concern is the

loss of orthogonality, if any. When the columns of the design matrix are not orthogonal, some degree of collinearity

exists. This causes increased variance in the estimates of the model coefficients and disrupts inference on

determining significant effects in the model. This is of less concern in probabilistic design as prediction is the

primary intended use of the model. However, inference may also be of secondary importance to know which inputs

should be more tightly controlled in the manufacturing process. The variance inflation factor, or VIF, is a useful

measure of collinearity among the input variables. The VIF is measured on each term in the model except the

intercept. In a perfectly orthogonal design matrix, each VIF would have value 1.0. With increasing collinearity in

the model, VIF’s increase. Cutoff values should be utilized to restrict poor designs. If we state this in terms of

green (g), yellow (y) and red (r) lights to relate to good, questionable and poor designs, respectively, a green light

may be stated as the maximum VIF < 2 and a red light may be stated as the maximum VIF > 5 or 10. Under such a

scenario, Table 2 categorizes the designs listed previously in Table 1.

Entries are the maximum VIF, followed by the light condition.

| # Input Variables | Box-Behnken | Fractional CCD (min. Res. V) | CCD | 3-Level Full Factorial |
|---|---|---|---|---|
| 2 |  |  | 1.68 (g) | 1.00 (g) |
| 3 | 1.35 (g) |  | 1.91 (g) | 1.00 (g) |
| 4 | 2.21 (y) |  | 2.21 (y) | 1.00 (g) |
| 5 | 3.15 (y) | 1.67 (g) | 2.44 (y) | 1.00 (g) |
| 6 | 2.32 (y) | 2.25 (y) | 2.07 (y) | 1.00 (g) |
| 7 | 2.32 (y) | 2.52 (y) | 1.53 (g) | 1.00 (g) |
| 8 |  | 2.56 (y) | 1.24 (g) | 1.00 (g) |
| 9 | 3.87 (y) | 2.15 (y) | 1.11 (g) | 1.00 (g) |
| 10 | 3.69 (y) | 2.56 (y) | 1.05 (g) | 1.00 (g) |
| 11 | 2.59 (y) | 2.73 (y) | 1.03 (g) | 1.00 (g) |
| 12 | 3.63 (y) | 1.79 (g) | 1.01 (g) | 1.00 (g) |

Table 2. Green-Yellow-Red Light Conditions for RSM Designs with Respect to Maximum VIF.
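As a rough illustration of how a maximum VIF can be computed for a candidate design, the sketch below builds a CCD and takes the diagonal of the inverse correlation matrix of the second-order model terms (intercept excluded). It assumes NumPy, a rotatable CCD with axial distance equal to the fourth root of the number of factorial points, and a single center point; the paper does not spell out these construction details, so they are assumptions. The red-light cutoff of 5 is one of the two values mentioned in the text.

```python
import itertools
import numpy as np

def rotatable_ccd(k):
    """Full 2-level factorial + 2k axial points + 1 center point (assumed layout)."""
    factorial = np.array(list(itertools.product([-1.0, 1.0], repeat=k)))
    alpha = len(factorial) ** 0.25  # axial distance for rotatability
    axial = np.vstack([alpha * np.eye(k), -alpha * np.eye(k)])
    center = np.zeros((1, k))
    return np.vstack([factorial, axial, center])

def model_terms(X):
    """Second-order model columns without the intercept."""
    k = X.shape[1]
    cols = [X[:, i] for i in range(k)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations(range(k), 2)]
    cols += [X[:, i] ** 2 for i in range(k)]
    return np.column_stack(cols)

def max_vif(X):
    """VIF of each term is the matching diagonal entry of the inverse correlation matrix."""
    corr = np.corrcoef(model_terms(X), rowvar=False)
    return float(np.max(np.diag(np.linalg.inv(corr))))

def vif_light(value, green_below=2.0, red_above=5.0):
    if value < green_below:
        return "green"
    if value > red_above:
        return "red"
    return "yellow"

vif_2 = max_vif(rotatable_ccd(2))
vif_3 = max_vif(rotatable_ccd(3))
print(round(vif_2, 2), vif_light(vif_2))
print(round(vif_3, 2), vif_light(vif_3))
```

Under these assumptions the function returns about 1.68 for two variables and about 1.91 for three, in line with the CCD column of Table 2.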

An additional concern that is a function of the design selection is the spacing of the input variables. Input

variable points falling in a region relatively far outside or away from the rest of the data will have higher leverage in

determining model coefficients. For a model with p terms (including the intercept) and n observations (as

determined by Table 1), leverage values can be determined for each observation (row) in the design matrix. Of the

designs mentioned, leverages are mainly of concern in the CCD. The distance by which the axial points extend beyond the face of the

cube is an increasing function of the number of points required to make up the factorial portion of the design. To


maintain a rotatable CCD, which maintains constant prediction variance at all points that are equidistant from the

center in the design space, the axial points must be at $\pm\sqrt[4]{n_f}$, where $n_f$ is the number of points comprising the

factorial portion of the design. Designs with maximum leverages less than 2p/n are considered a green light and

designs with maximum leverages greater than 4p/n are considered a red light. Values in between are in the yellow

light region. Results for these considerations, along with 2p/n and 4p/n limits, are displayed in Table 3.

Entries are the maximum leverage, followed by the limits {2p/n, 4p/n} and the light condition.

| # Input Variables | Box-Behnken | Fractional CCD (min. Res. V) | CCD | 3-Level Full Factorial |
|---|---|---|---|---|
| 2 |  |  | 1.00 {1.33,2.67} (g) | 0.81 {1.33,2.67} (g) |
| 3 | 1.00 {1.54,3.08} (g) |  | 0.99 {1.33,2.67} (g) | 0.51 {0.74,1.48} (g) |
| 4 | 1.00 {1.20,2.40} (g) |  | 1.00 {1.20,2.40} (g) | 0.28 {0.37,0.74} (g) |
| 5 | 1.00 {1.02,2.05} (g) | 0.88 {1.56,3.11} (g) | 0.89 {0.98,1.95} (g) | 0.14 {0.17,0.35} (g) |
| 6 | 1.00 {1.14,2.29} (g) | 0.97 {1.24,2.49} (g) | 0.57 {0.73,1.45} (g) | 0.06 {0.08,0.15} (g) |
| 7 | 1.00 {1.26,2.53} (g) | 0.82 {0.91,1.82} (g) | 0.56 {0.50,1.01} (y) | 0.0285 {0.0329,0.0658} (g) |
| 8 |  | 1.00 {1.11,2.22} (g) | 0.55 {0.33,0.66} (y) | 0.0122 {0.0137,0.0274} (g) |
| 9 | 1.00 {0.91,1.82} (y) | 0.55 {0.75,1.50} (g) | 0.54 {0.21,0.41} (r) | 0.0051 {0.0056,0.0112} (g) |
| 10 | 1.00 {0.82,1.64} (y) | 0.78 {0.89,1.77} (g) | 0.53 {0.13,0.25} (r) | 0.0021 {0.0022,0.0045} (g) |
| 11 | 1.00 {0.88,1.76} (y) | 0.99 {1.03,2.07} (g) | 0.52 {0.08,0.15} (r) | 0.0008 {0.0009,0.0018} (g) |
| 12 | 1.00 {0.94,1.89} (y) | 0.54 {0.65,1.30} (g) | 0.52 {0.04,0.09} (r) | 0.000326 {0.000342,0.000685} (g) |

Table 3. Green-Yellow-Red Light Conditions for RSM Designs with Respect to Maximum Leverage.
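The leverage check can be sketched as follows, assuming NumPy. Leverages are the diagonal of the hat matrix for the full second-order model (p terms including the intercept), computed here from a reduced QR factorization. The CCD builder assumes a rotatable design with one center point, which is an assumption about construction details the text does not fully specify.

```python
import itertools
import numpy as np

def model_matrix(X):
    """Second-order model matrix including the intercept column."""
    n, k = X.shape
    cols = [np.ones(n)]
    cols += [X[:, i] for i in range(k)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations(range(k), 2)]
    cols += [X[:, i] ** 2 for i in range(k)]
    return np.column_stack(cols)

def leverages(X):
    """Hat-matrix diagonal: row sums of squares of Q from a reduced QR."""
    q, _ = np.linalg.qr(model_matrix(X))
    return np.sum(q ** 2, axis=1)

def leverage_light(X):
    """Return (max leverage, 2p/n, 4p/n, light) for a design."""
    n, p = model_matrix(X).shape
    h_max = float(leverages(X).max())
    lower, upper = 2.0 * p / n, 4.0 * p / n
    if h_max < lower:
        light = "green"
    elif h_max > upper:
        light = "red"
    else:
        light = "yellow"
    return h_max, lower, upper, light

def ff3(k):
    """3-level full factorial in coded units."""
    return np.array(list(itertools.product([-1.0, 0.0, 1.0], repeat=k)))

def rotatable_ccd(k):
    """Assumed layout: full factorial, axial points at n_f**0.25, one center point."""
    factorial = np.array(list(itertools.product([-1.0, 1.0], repeat=k)))
    alpha = len(factorial) ** 0.25
    axial = np.vstack([alpha * np.eye(k), -alpha * np.eye(k)])
    return np.vstack([factorial, axial, np.zeros((1, k))])

ff3_result = leverage_light(ff3(2))
ccd_result = leverage_light(rotatable_ccd(2))
print("FF3, k=2:", ff3_result)
print("CCD, k=2:", ccd_result)
```

With a single center point in a two-variable rotatable CCD, the center run is the only point off the circle of radius alpha, which is why its leverage reaches 1.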

Using the information provided in Tables 2 and 3, an appropriate design can be selected prior to compiling

the actual runs and fitting a model. If we assume that a red light in either Table 2 or Table 3, or a yellow light in both

tables, rules out a design, BBD’s are usable for 3-7 input variables, full CCD’s are usable for 2-8 input variables, and

fractional CCD’s are usable for 5-12 input variables. The FF3 design is the basis for comparison so it will always be

in the green light scenario for both the maximum VIF and maximum leverage criteria. Combining this with the

number of runs required in Table 1, the fractional CCD would appear to be the best generic choice, substituting it

with a full CCD for the cases of 2-4 input variables.

Once an appropriate design is selected and the data collected, a response surface model is fit to the data

using the method of least squares. It should be noted, however, that the response data is not truly a random variable.

If the combination of input variables were run more than once through the design code, the response returned will

repeat the same answer. However, this can be ignored for the purpose of modeling as replication will not be used

for any of the points in this process, including the center point. The model fitting process is unaffected by this

information. It simply reduces our usual concern for validation of standard assumptions for the residuals, that they

are iid $N(0, \sigma^2)$. The analyst should still be concerned about large residuals and constant variance, although less so

as it would simply be model lack of fit being checked. The assumption of normality is also not strictly of concern,

but the analyst would still desire the residuals to be symmetric about a mean of zero with the majority of the data

falling close to zero. Hence, a check of normality is still warranted as an approximation to this concern. The

principal statistical criterion that would concern an analyst is $R^2$, the coefficient of determination. The modeling

technique may not be based on data from a random variable, but a good fit of the model to the data is still evidence

that the response surface model adequately replaces the design code.

The model validation methods mentioned above are all criteria of a statistically good model. However, one

must keep in mind that engineering criteria will also exist in an engineering design. For instance, in such a design

process, one may simply look at the maximum residual in absolute value. If this value is within, say, 0.1% of its

associated actual response value, then the fit of the model can be considered acceptable regardless of any goodness-of-fit criteria based on statistical theory. To continue with a green-yellow-red light scenario for this test and five

statistical tests related to the concerns mentioned above, the following table summarizes a sample of usable analysis

guidelines. A summary of regression diagnostics for the tests in Table 4 can be found in Belsley, Kuh and Welsch

(1980) and Neter et al. (1996). A rule for how many yellow light or red light conditions a model may show and still

pass should be set by the analyst before beginning the process. In the event that a model does not

pass, transformations of the response can be attempted to improve the status of the model checks. If no model can

be found to pass the pre-specified criteria, the analyst can still complete a probabilistic analysis by resorting to MCS.


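| Test | Green light | Yellow light | Red light |
|---|---|---|---|
| $R^2$ | $\geq$ 0.95 | $\geq$ 0.90 | < 0.90 |
| P-Value for Constant $\sigma^2$ | $\geq$ 0.10 | $\geq$ 0.01 | < 0.01 |
| P-Value for Normality | $\geq$ 0.10 | $\geq$ 0.05 | < 0.05 |
| 1 - P-Value for Max Cook’s Dist. | $\geq$ 0.8 / n | $\geq$ 0.5 / n | < 0.5 / n |
| P-Value for Max Del. Residual | $\geq$ 0.10 / n | $\geq$ 0.05 / n | < 0.05 / n |
| Max Absolute Residual | < 0.1% $y_i$ | < 0.5% $y_i$ | $\geq$ 0.5% $y_i$ |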

Table 4. Green-Yellow-Red Light Conditions for Response Surface Models with Respect to Goodness-of-Fit.
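Two of the checks in Table 4 are simple enough to sketch directly: the coefficient of determination and the engineering check on the maximum absolute residual relative to its associated response. The sketch reads the green and yellow cut-offs as "at least" for R2 and "less than" for the residual ratio, which is an interpretation of the table. The sample `actual` and `fitted` values are invented for illustration, and the remaining four tests need a statistics package and are not shown.

```python
def r_squared(actual, fitted):
    """Coefficient of determination for fitted versus actual responses."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - f) ** 2 for a, f in zip(actual, fitted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot

def r2_light(r2):
    if r2 >= 0.95:
        return "green"
    if r2 >= 0.90:
        return "yellow"
    return "red"

def max_residual_ratio(actual, fitted):
    """Largest absolute residual divided by its own actual response value."""
    residual, response = max(
        ((abs(a - f), abs(a)) for a, f in zip(actual, fitted)),
        key=lambda pair: pair[0],
    )
    return residual / response

def residual_light(ratio):
    if ratio < 0.001:   # within 0.1% of the response
        return "green"
    if ratio < 0.005:   # within 0.5% of the response
        return "yellow"
    return "red"

# Invented example data: design code outputs and surrogate predictions.
actual = [100.0, 200.0, 300.0, 400.0]
fitted = [100.05, 199.9, 300.2, 399.9]

checks = {
    "R2": r2_light(r_squared(actual, fitted)),
    "max residual": residual_light(max_residual_ratio(actual, fitted)),
}
print(checks)
```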

OUTPUT ANALYSIS

Output analysis of a probabilistic design is straightforward regardless of whether MCS or RSM is used. As

stated for MCS, after completing the simulated realizations (using either MCS or RSM), a distribution of the

response variable will have been created. The use of a fitted distribution over an empirical distribution has already

been described in the section on MCS. In many cases, the end result is to estimate an MPP that has an associated

extreme cumulative probability. Standard distributions fit to data include the normal, lognormal, logistic,

loglogistic, smallest extreme value, Weibull, largest extreme value, log largest extreme value, beta, and gamma. In

fitting these distributions, even with a large amount of data, it is not atypical to see the bulk of the data fit well and

the tails of the distributions miss the data. In other data analysis problems, this might be ignored. However, when

prediction in the tails is of primary concern, we require a very good fit to the data. A four parameter distribution

that tends to have greater ability to fit the full range of the data is the Johnson S distribution. Hahn and Shapiro

(1967) actually describe the Johnson S distribution as an empirical distribution because it is based on a

transformation of a standard normal variate. Nevertheless, a smooth density function formula does exist for

calculating any specific probability along a Johnson S distribution.
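The point about tails can be illustrated with a small experiment. The sketch below is not the Johnson fit discussed above; it deliberately fits a normal distribution to simulated lognormal data (an invented example) and compares the fitted 99.9th percentile against the empirical one, alongside a fit from the correct family. It shows how a fit that looks reasonable in the bulk can miss badly in the tail.

```python
import math
import random
import statistics

rng = random.Random(1)
data = sorted(rng.lognormvariate(0.0, 0.5) for _ in range(10_000))

# Candidate 1: normal distribution fitted to the raw data (wrong family on purpose).
normal_fit = statistics.NormalDist.from_samples(data)

# Candidate 2: lognormal, fitted as a normal distribution on the log scale.
log_fit = statistics.NormalDist.from_samples([math.log(v) for v in data])

def empirical_quantile(sorted_values, p):
    """Simple order-statistic estimate of the p-th quantile."""
    return sorted_values[int(p * (len(sorted_values) - 1))]

p = 0.999
q_empirical = empirical_quantile(data, p)
q_normal = normal_fit.inv_cdf(p)
q_lognormal = math.exp(log_fit.inv_cdf(p))

print(f"empirical: {q_empirical:.3f}")
print(f"normal fit: {q_normal:.3f}")
print(f"lognormal fit: {q_lognormal:.3f}")
```

Comparing fitted and empirical quantiles near the probability of interest is one simple way to judge whether a candidate distribution is adequate for tail prediction.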

CONCLUSION

Probabilistic engineering design is a powerful tool to implement when designing to a specified probability

of failure. The penalty of probabilistic design compared with deterministic design is the requirement for more time-consuming design code runs. However, the main benefit of designing to a specified reliability is that it allows the

user to effectively generate a risk assessment in the design stage. Additionally, the benefit of the RSM techniques to

the MCS technique is a significant reduction in the number of design code runs required while maintaining accurate

and comparable results. The model validation checks presented are intended to ensure that the results of an RSM analysis will

be similar to those of an MCS analysis without the need to physically produce an MCS analysis for comparison.

Finally, other than the engineering design runs required to collect the data, the tools required for both MCS and

RSM can be found in most statistical software packages, thus making these techniques easy to implement.

REFERENCES

Belsley, D., Kuh, E. and Welsch, R. (1980), Regression Diagnostics: Identifying Influential Data and Sources of Collinearity,

Wiley.

Hahn, G.J. and Shapiro, S.S. (1967), Statistical Models in Engineering, Wiley.

Myers, R.H. and Montgomery, D.C. (1995), Response Surface Methodology: Process and Product Optimization Using Designed

Experiments, Wiley.

Neter, J., Kutner, M., Nachtsheim, C. and Wasserman, W. (1996), Applied Linear Statistical Models, 4th ed., Irwin.

ABOUT THE AUTHOR

Trevor Craney is a Senior Statistician at Pratt & Whitney in East Hartford, CT. He received his BS degree from

McNeese State University in Mathematics and Mathematical Statistics. He received his first MS degree in Statistics from the

University of South Carolina. He received his second MS degree in Industrial & Systems Engineering from the University of

Florida, and he received his third MS degree in Reliability Engineering from the University of Maryland. He is an ASQ Certified

Quality Engineer and an ASQ Certified Reliability Engineer. He may be contacted by email at trevor.craney@pw.utc.com
