252conf


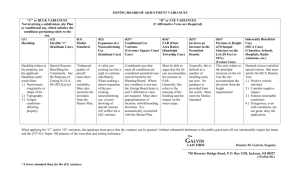

3/06/95 conf

CONFIDENCE INTERVALS AND HYPOTHESIS TESTING FOR VARIANCES

1) A Confidence Interval for the Population Variance

The Chi-squared ($\chi^2$) distribution refers to the distribution of a sum of $z^2$'s, that is, sums like $\sum z^2 = z_1^2 + z_2^2 + z_3^2 + \cdots + z_n^2$, where $z$ is a $N(0,1)$ variable, that is, it is normal with a mean of zero and a standard deviation of one. The Chi-squared distribution is tabulated according to degrees of freedom (DF) and has mean = DF, variance = 2DF. For example, if a chi-squared statistic has seven degrees of freedom, its mean is 7 and its variance is 14.

To find a confidence interval for a population variance, we must first compute the sample variance,

$$s^2 = \frac{\sum (x - \bar{x})^2}{n-1} = \frac{\sum x^2 - n\bar{x}^2}{n-1},$$

where $n$ is the size of the sample. If $x$ is normally distributed, $z = \frac{x - \bar{x}}{\sigma}$ will have the standardized normal distribution with a mean of zero and a standard deviation of one, $z \sim N(0,1)$. The sum of these $z$'s squared will be a chi-squared,

$$\sum z^2 = \frac{\sum (x - \bar{x})^2}{\sigma^2} = \frac{(n-1)s^2}{\sigma^2},$$

and will have a $\chi^2$ distribution with $n-1$ degrees of freedom. One degree of freedom is lost because $\bar{x}$ is computed from the same sample.

If we are looking for a 95% confidence interval for a variance we can observe that, since the above ratio has a $\chi^2$ distribution, there must be two numbers, $\chi^2_{.975}$ and $\chi^2_{.025}$, that together cut off an interval about the mean that contains 95% of the probability. We can indicate this by saying

$$P\left[\chi^2_{.975} \le \frac{(n-1)s^2}{\sigma^2} \le \chi^2_{.025}\right] = 95\%.$$

But if the expression in brackets is true 95% of the time, so is its inverse,

$$\frac{1}{\chi^2_{.025}} \le \frac{\sigma^2}{(n-1)s^2} \le \frac{1}{\chi^2_{.975}}.$$

And if this is true, it is also true after being multiplied through by $(n-1)s^2$. So we get

$$\frac{(n-1)s^2}{\chi^2_{.025}} \le \sigma^2 \le \frac{(n-1)s^2}{\chi^2_{.975}}$$

as an interval for the variance, or, more generally, for confidence level $1-\alpha$,

$$\frac{(n-1)s^2}{\chi^2_{\alpha/2}} \le \sigma^2 \le \frac{(n-1)s^2}{\chi^2_{1-\alpha/2}}.$$

For example, if $n = 31$ and $s = 1000$, our degrees of freedom are $n - 1 = 30$, so that if the confidence level is 95%, we look up $\chi^{2\,(30)}_{.025} = 46.979$ and $\chi^{2\,(30)}_{.975} = 16.791$. Substituting these into the formula we get

$$\frac{30(1000)^2}{46.979} \le \sigma^2 \le \frac{30(1000)^2}{16.791},$$

which can be reduced to

$$0.6386(1000)^2 \le \sigma^2 \le 1.7867(1000)^2.$$
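
The interval formula above can be sketched in a few lines of Python. This is only an illustration: the function name `variance_interval` and its parameter names are hypothetical, and the critical values are typed in from the chi-squared table rather than computed.

```python
def variance_interval(n, s, chi2_upper, chi2_lower):
    """Confidence interval for sigma^2 from table critical values.

    chi2_upper is the right-hand critical value (chi^2 with alpha/2 in the
    upper tail); chi2_lower is the left-hand one (1 - alpha/2 in the upper
    tail). Both must be looked up for n - 1 degrees of freedom.
    """
    sum_of_squares = (n - 1) * s ** 2
    return sum_of_squares / chi2_upper, sum_of_squares / chi2_lower


# Worked example from the text: n = 31, s = 1000, 95% confidence, DF = 30.
low, high = variance_interval(n=31, s=1000, chi2_upper=46.979, chi2_lower=16.791)

# Expressed as multiples of 1000^2, matching the reduced form in the text.
print(round(low / 1000 ** 2, 4), round(high / 1000 ** 2, 4))
```

Dividing by the larger critical value gives the lower limit, which is why the upper-tail value appears on the left of the interval.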
If we want a confidence interval for the standard deviation instead, we can take the square root of each term of the variance interval $0.6386(1000)^2 \le \sigma^2 \le 1.7867(1000)^2$ and say $0.799(1000) \le \sigma \le 1.337(1000)$, or $799 \le \sigma \le 1337$.

2) Finding values for $\chi^2$

On most tables of the chi-squared distribution the right-hand critical values of $\chi^2$ appear alone. For example, for $\alpha = .025$ and 5 degrees of freedom the appropriate critical value of chi-squared is 12.8. This means that any value of $\chi^2$ above 12.8 is in the right-hand tail of the distribution where only 2.5% of the probability lies. A value of $\chi^2$ above 12.8 would cause us to reject a null hypothesis for a 2.5% one-sided test or a 5% two-sided test. Unfortunately many chi-squared tables give only these upper critical values and leave out lower critical values like $\chi^2_{.975}$, so a table with both upper and lower critical values is included with this document.

However, this table does not show all values of $\chi^2$ above 30 degrees of freedom. For more than 30 degrees of freedom the normal distribution is used to approximate the chi-squared distribution, most commonly by setting

$$z = \sqrt{2\chi^2} - \sqrt{2\,DF - 1},$$

where $DF = n - 1$. For example, if $s^2 = 1100$, $\sigma^2 = 1000$ and $n = 101$, then

$$\chi^2 = \frac{(n-1)s^2}{\sigma^2} = \frac{100(1100)}{1000} = 110.$$

If this value of $\chi^2$ is substituted into the above formula for $z$, we get

$$z = \sqrt{2(110)} - \sqrt{2(100) - 1} = \sqrt{220} - \sqrt{199} = 14.8324 - 14.1067 = 0.7257.$$

An example of the use of this appears under "Hypothesis Testing for Variances - One Sample."

This formula is also substituted into the confidence interval formula to give the following approximate formula for a confidence interval for a standard deviation:

$$\frac{s\sqrt{2\,DF}}{z_{\alpha/2} + \sqrt{2\,DF}} \le \sigma \le \frac{s\sqrt{2\,DF}}{-z_{\alpha/2} + \sqrt{2\,DF}},$$

which could also be written as

$$\sigma = \frac{s\sqrt{2\,DF}}{\sqrt{2\,DF} \pm z_{\alpha/2}}.$$

For example, if $n = 400$, $s = 1000$ and $\alpha = .05$, then $\sqrt{2\,DF} = \sqrt{2(400 - 1)} = \sqrt{798} = 28.25$, so

$$\sigma = \frac{1000(28.25)}{28.25 \pm 1.96}, \quad\text{or}\quad 935 \le \sigma \le 1075.$$

If a confidence interval for the variance is required, the numbers on both sides of the interval can be squared.
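
As a rough check on the arithmetic in this section, the normal approximation and the approximate interval for the standard deviation can be sketched as follows. The function names `chi2_to_z` and `approx_sd_interval` are hypothetical, chosen only for this illustration, and the critical value 1.96 is supplied by hand from a normal table.

```python
import math


def chi2_to_z(chi2, df):
    """Normal approximation for large DF: z = sqrt(2*chi2) - sqrt(2*DF - 1)."""
    return math.sqrt(2 * chi2) - math.sqrt(2 * df - 1)


def approx_sd_interval(n, s, z_crit):
    """Approximate interval for sigma: s*sqrt(2DF) / (sqrt(2DF) +/- z)."""
    root = math.sqrt(2 * (n - 1))
    return s * root / (root + z_crit), s * root / (root - z_crit)


# First example in the text: chi-squared = 110 with DF = 100.
z = chi2_to_z(110, 100)
print(round(z, 4))

# Second example: n = 400, s = 1000, alpha = .05 (z = 1.96).
sd_low, sd_high = approx_sd_interval(400, 1000, 1.96)
print(round(sd_low), round(sd_high))
```

Squaring `sd_low` and `sd_high` gives the corresponding approximate interval for the variance.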
This interval should only be used when the appropriate degrees of freedom are beyond those available on the chi-squared table.

3) Hypothesis Testing for Variances - One Sample

Suppose that we want to test the statement that the variance of a given population is equal to some given variance, which we can call $\sigma_0^2$. That is, our null hypothesis is $H_0: \sigma^2 = \sigma_0^2$ and our alternative hypothesis is $H_1: \sigma^2 \ne \sigma_0^2$. If the underlying data is normally distributed, we use the test ratio

$$\chi^2 = \frac{(n-1)s^2}{\sigma_0^2},$$

which has the chi-squared distribution with $n-1$ degrees of freedom (often written $\chi^{2\,(n-1)}$). For a two-tailed test we would pick two values of chi-squared, $\chi^2_{1-\alpha/2}$ and $\chi^2_{\alpha/2}$, and accept the null hypothesis if $\chi^2$ lies between them.

For example, assume that we believe that the distribution of the ages of a group of workers is normal, and we wish to test our belief that the variance is 64. Our data is a sample of 17 workers, and our computations give us a sample variance of 100. Let us set our significance level at 2% and state our problem as follows:

$$H_0: \sigma^2 = 64, \qquad H_1: \sigma^2 \ne 64,$$

$$n = 17,\quad DF = n - 1 = 16,\quad s^2 = 100,\quad \sigma_0^2 = 64,\quad \alpha = .02.$$

We can compute

$$\chi^2 = \frac{(n-1)s^2}{\sigma_0^2} = \frac{16(100)}{64} = 25.$$

Since $\alpha/2 = .01$ and $DF = 16$, we go to the chi-squared table to find $\chi^{2\,(16)}_{.99} = 5.812$ and $\chi^{2\,(16)}_{.01} = 32.000$. The 'accept' region is between these two values, so we cannot reject the null hypothesis.

As a second example, assume that we are testing the same null hypothesis, but the sample size is 73, so that chi-squared has 72 degrees of freedom. Thus we have the following: $n = 73$, $DF = n - 1 = 72$, $s^2 = 100$, $\sigma_0^2 = 64$, $\alpha = .02$. The formula for the chi-squared statistic gives us

$$\chi^2 = \frac{(n-1)s^2}{\sigma_0^2} = \frac{72(100)}{64} = 112.5.$$

If we cannot find an appropriate $\chi^2$ on our table because of the high number of degrees of freedom, we use the $z$ formula suggested in section 2. This is

$$z = \sqrt{2\chi^2} - \sqrt{2\,DF - 1} = \sqrt{2(112.5)} - \sqrt{2(72) - 1} = \sqrt{225} - \sqrt{143} = 15.00 - 11.96 = 3.04.$$

Since this statistic is $N(0,1)$, it must be between $\pm z_{\alpha/2}$ if the null hypothesis is to be accepted. Since $\alpha/2 = .01$, we use $\pm 2.33$. Since 3.04 is above the critical value, 2.33, we reject $H_0$. In fact, if we compare 3.04 with a normal table, we can say that the p-value is below .005.

4) Tests for Similarity of Variances from Two Samples

If we have two separate samples and want to test to see if their variances are equal, we test a ratio of chi-squares rather than a difference, which is what we would use with means or proportions. The ratio of two $\chi^2$'s from the same population, each divided by its degrees of freedom, has the F distribution, a distribution named after R. A. Fisher, one of the founders of modern statistics. This distribution has two parameters: $DF_1$ and $DF_2$, the degrees of freedom of the first and second samples. A rough sketch is shown here. It shows three different F distributions with their upper 5% critical points. Generally speaking, a value of F above the critical point results in a rejection of a null hypothesis. A table of upper critical points is included in this document.

The mean of the F distribution is

$$\frac{DF_2}{DF_2 - 2},$$

assuming that $DF_2$ is greater than 2. Its variance is

$$\frac{2\,DF_2^2\,(DF_1 + DF_2 - 2)}{DF_1\,(DF_2 - 2)^2\,(DF_2 - 4)},$$

providing $DF_2$ is greater than 4. Finally, its mode is

$$\frac{DF_2\,(DF_1 - 2)}{DF_1\,(DF_2 + 2)},$$

defined for $DF_1$ greater than 2, a number which, like the mean, is usually very close to 1 when both degrees of freedom are large. Also note that, since the F distribution is a ratio of two $\chi^2$'s (divided by their degrees of freedom), both of which are never negative, a value of F can never be negative.

To use the F table, one must know the degrees of freedom for both the numerator and the denominator. Usually each table is for only one value of $\alpha$, generally .01 or .05. The user locates the table for the significance level desired and finds the degrees of freedom for the numerator across the top and the degrees of freedom for the denominator down the left side. So the value of $F^{(3,7)}_{.05}$ can be found to be 4.35. This document contains tables for significance levels of 1%, 2.5% and 5%. Tables of lower points like $F^{(3,7)}_{.95}$ are rarely given because

$$F^{(DF_1,\,DF_2)}_{1-\alpha} = \frac{1}{F^{(DF_2,\,DF_1)}_{\alpha}}.$$

For example,

$$F^{(3,7)}_{.95} = \frac{1}{F^{(7,3)}_{.05}} = \frac{1}{8.89} = 0.112.$$
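
The summary facts about the F distribution can be collected in a short sketch. The formulas coded here are the standard textbook expressions for the mean, variance and mode of F; the function names are hypothetical, and the upper critical value 8.89 is taken from the F table rather than computed.

```python
def f_mean(df2):
    """Mean of F, defined only when DF2 > 2."""
    if df2 <= 2:
        raise ValueError("mean requires DF2 > 2")
    return df2 / (df2 - 2)


def f_variance(df1, df2):
    """Variance of F, defined only when DF2 > 4."""
    if df2 <= 4:
        raise ValueError("variance requires DF2 > 4")
    return 2 * df2 ** 2 * (df1 + df2 - 2) / (df1 * (df2 - 2) ** 2 * (df2 - 4))


def f_mode(df1, df2):
    """Mode of F, defined by this formula only when DF1 > 2."""
    if df1 <= 2:
        raise ValueError("mode formula requires DF1 > 2")
    return df2 * (df1 - 2) / (df1 * (df2 + 2))


def lower_f_critical(upper_with_df_swapped):
    """Lower point F(1-alpha; DF1, DF2) = 1 / F(alpha; DF2, DF1)."""
    return 1 / upper_with_df_swapped


# Lower 5% point for (3, 7) DF, from the tabled upper 5% point for (7, 3) DF.
print(round(lower_f_critical(8.89), 3))
```

The only table lookup needed for a lower point is the upper point with the two degrees of freedom interchanged.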
This equality, illustrated by the tabled value $F^{(7,3)}_{.05} = 8.89$, is built into the formulas for confidence intervals and hypothesis tests given here.

In section 3 we found that $\chi^{2\,(n-1)} = \frac{(n-1)s^2}{\sigma_0^2}$ is an example of the chi-squared distribution with $n-1$ degrees of freedom. Thus, the first of two sample variances, $s_1^2$, when multiplied by $n_1 - 1$, one less than its sample size, and divided by $\sigma_1^2$, the variance of its parent distribution, will have the chi-squared distribution with $n_1 - 1$ degrees of freedom, and we can write

$$\chi^{2\,(n_1-1)} = \frac{(n_1-1)s_1^2}{\sigma_1^2}. \quad\text{Similarly,}\quad \chi^{2\,(n_2-1)} = \frac{(n_2-1)s_2^2}{\sigma_2^2}.$$

If this is true and $\sigma_1^2 = \sigma_2^2$, the ratio

$$\frac{\chi^{2\,(n_1-1)} / (n_1-1)}{\chi^{2\,(n_2-1)} / (n_2-1)} = \frac{s_1^2 / \sigma_1^2}{s_2^2 / \sigma_2^2} = \frac{s_1^2}{s_2^2}$$

has the F distribution with $n_1 - 1$ and $n_2 - 1$ degrees of freedom.

Because the tables are basically one-sided, we will do a one-sided test. A researcher compares government lawyers with lawyers in private practice and discovers that the government lawyers have a lower mean salary. The researcher hypothesizes that government lawyers take a lower mean salary because the variance for government lawyers, which serves as a measure of risk, is smaller. Thus, if lawyers in private practice are group 1 and government lawyers are group 2, our hypotheses are:

$$H_0: \sigma_1^2 \le \sigma_2^2, \qquad H_1: \sigma_1^2 > \sigma_2^2.$$

Our data set for private lawyers consists of five points (in thousands of dollars per month): 1.0, 2.0, 1.9, 4.0 and 0.5. From these data we compute $s_1^2 = 1.797$ and $n_1 = 5$. Our data set for government lawyers consists of six points: 0.6, 0.7, 0.8, 1.1, 0.4 and 0.6. From these data we compute $s_2^2 = 0.056$ and $n_2 = 6$. Thus the F-ratio is

$$F = \frac{s_1^2}{s_2^2} = \frac{1.797}{0.056} = 32.09.$$

Since $DF_1 = n_1 - 1 = 4$ and $DF_2 = n_2 - 1 = 5$, we look up $F^{(4,5)}_{.01} = 11.39$ on our F table. Since our calculated F is much larger than our critical value, we reject $H_0$.

Since the table is set up for one-sided tests, if we wish to test $H_0: \sigma_1^2 = \sigma_2^2$, we must do two separate one-sided tests. For example, if we use the data of the previous problem to test for equality of variances at the 5% level, we would first test $\frac{s_1^2}{s_2^2}$ against $F^{(4,5)}_{.025}$ and then test $\frac{s_2^2}{s_1^2}$ against $F^{(5,4)}_{.025}$.
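
The lawyer example can be reproduced with Python's standard `statistics` module, whose `variance` function divides by $n - 1$ as the text does. This is a sketch only: the variable names are hypothetical, and the critical values are typed in from the F tables (11.39 from the example above; 7.39 and 9.36 are the 2.5% points for (4,5) and (5,4) degrees of freedom quoted in section 5).

```python
import statistics

# Monthly salaries in thousands of dollars, as given in the text.
private = [1.0, 2.0, 1.9, 4.0, 0.5]            # group 1, n1 = 5
government = [0.6, 0.7, 0.8, 1.1, 0.4, 0.6]    # group 2, n2 = 6

s1_sq = statistics.variance(private)      # sample variance, divisor n1 - 1
s2_sq = statistics.variance(government)   # sample variance, divisor n2 - 1
f_ratio = s1_sq / s2_sq

# One-sided test of H0: sigma1^2 <= sigma2^2 at the 1% level, DF = (4, 5).
F_01_4_5 = 11.39
reject_one_sided = f_ratio > F_01_4_5

# Test of equal variances at the 5% level as two one-sided 2.5% tests.
F_025_4_5, F_025_5_4 = 7.39, 9.36
reject_equality = (s1_sq / s2_sq > F_025_4_5) or (s2_sq / s1_sq > F_025_5_4)

print(round(s1_sq, 3), round(s2_sq, 3), round(f_ratio, 2))
print(reject_one_sided, reject_equality)
```

Only one of the two ratios can exceed 1, so in practice only the larger sample variance over the smaller needs to be compared with its critical value.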
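
5) A Confidence Interval for a Ratio of Variances

We can also use the F distribution to form a confidence interval for the ratio $\frac{\sigma_2^2}{\sigma_1^2}$. From the previous section we can conclude that

$$F^{(n_1-1,\,n_2-1)} = \frac{\chi^{2\,(n_1-1)} / (n_1-1)}{\chi^{2\,(n_2-1)} / (n_2-1)} = \frac{s_1^2 / \sigma_1^2}{s_2^2 / \sigma_2^2} = \frac{s_1^2}{s_2^2}\cdot\frac{\sigma_2^2}{\sigma_1^2}.$$

But if this is true, by definition of F, there is a $1 - \alpha$ probability that

$$F^{(n_1-1,\,n_2-1)}_{1-\alpha/2} \le \frac{s_1^2}{s_2^2}\cdot\frac{\sigma_2^2}{\sigma_1^2} \le F^{(n_1-1,\,n_2-1)}_{\alpha/2}.$$

If we multiply this through by $\frac{s_2^2}{s_1^2}$, we get

$$\frac{s_2^2}{s_1^2}\,F^{(n_1-1,\,n_2-1)}_{1-\alpha/2} \le \frac{\sigma_2^2}{\sigma_1^2} \le \frac{s_2^2}{s_1^2}\,F^{(n_1-1,\,n_2-1)}_{\alpha/2}.$$

This is the confidence interval for the ratio of the two variances, but because of the one-sidedness of the F table, it is much more convenient to write this formula as

$$\frac{s_2^2}{s_1^2}\cdot\frac{1}{F^{(n_2-1,\,n_1-1)}_{\alpha/2}} \le \frac{\sigma_2^2}{\sigma_1^2} \le \frac{s_2^2}{s_1^2}\,F^{(n_1-1,\,n_2-1)}_{\alpha/2}.$$

For example, let us use the data from the previous section: $s_1^2 = 1.797$, $s_2^2 = 0.056$, $n_1 = 5$ and $n_2 = 6$, with a 95% confidence level so that $\alpha/2 = .025$. Since $F^{(n_2-1,\,n_1-1)}_{.025} = F^{(5,4)}_{.025} = 9.36$ and $F^{(n_1-1,\,n_2-1)}_{.025} = F^{(4,5)}_{.025} = 7.39$, our interval is

$$\frac{0.056}{1.797}\cdot\frac{1}{9.36} \le \frac{\sigma_2^2}{\sigma_1^2} \le \frac{0.056}{1.797}\,(7.39), \quad\text{or}\quad 0.0033 \le \frac{\sigma_2^2}{\sigma_1^2} \le 0.2303.$$

It should go without saying that we can also write this confidence interval formula for a ratio of variances as

$$\frac{s_1^2}{s_2^2}\cdot\frac{1}{F^{(n_1-1,\,n_2-1)}_{\alpha/2}} \le \frac{\sigma_1^2}{\sigma_2^2} \le \frac{s_1^2}{s_2^2}\,F^{(n_2-1,\,n_1-1)}_{\alpha/2}.$$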
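
The convenient form of the interval, which uses only upper critical points, can be sketched as below. The function name `variance_ratio_interval` and its parameter names are hypothetical, and both F values are supplied by hand from the 2.5% table.

```python
def variance_ratio_interval(s1_sq, s2_sq, f_upper_df1_df2, f_upper_df2_df1):
    """Interval for sigma2^2 / sigma1^2 using upper F points only.

    f_upper_df1_df2 is F(alpha/2) with (n1 - 1, n2 - 1) degrees of freedom;
    f_upper_df2_df1 is F(alpha/2) with (n2 - 1, n1 - 1) degrees of freedom.
    """
    ratio = s2_sq / s1_sq
    return ratio / f_upper_df2_df1, ratio * f_upper_df1_df2


# Worked example from the text: 95% interval with DF = (4, 5).
ratio_low, ratio_high = variance_ratio_interval(
    s1_sq=1.797, s2_sq=0.056, f_upper_df1_df2=7.39, f_upper_df2_df1=9.36
)
print(round(ratio_low, 4), round(ratio_high, 4))
```

Taking reciprocals of the two limits and swapping them gives the interval for the ratio written the other way up, $\sigma_1^2 / \sigma_2^2$, in agreement with the last formula of the section.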