Simple Linear Regression: ANOVA table

advertisement

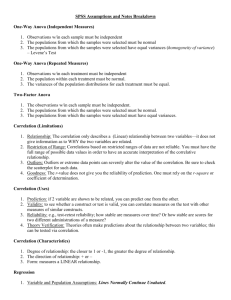

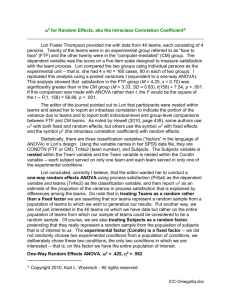

Simple Linear Regression: ANOVA table Hypotheses: Ho: 1 = 0 (use ŷ y to predict y, there is no linear relationship between x and y) HA: 1 0 (use yˆ b0 b1 * x to predict y, there is a stat sig linear relationship between x and y) Assumptions: 1. There is a true or population line (or equation): yi = 0 + 1x + i , where 0 is the y-intercept and 1 is the slope, which defines the linear relationship between the independent variable, x, and the dependent, y. The random deviations, ei’s, allow the points to vary about the true line. (The estimated line is: ŷ i = b0 + b1x.) 2. The i’s have mean zero, e = 0. 3. The standard deviation of the i’s is constant, e is not dependent on the x’s. 4. The i’s are independent of each other. 5. The i’s are normally distributed. We use the residuals, ei’s, to estimate the i’s. Combined, this say each of the i’s are independently, identically distributed N(0, 2) or iid ~ N(0, 2). This means that the y’s are also normal, and each y ~ N( 0+1x, 2). The sole purpose of residual plots is to check these assumptions!!!! NOTE: we now have 2 parameters, 0 and 1 we have to estimate for y which is why the df for the ttest = n2. ANOVA Table for Simple Linear Regression Source df Sum of Squares Mean Squares F value p-value Model 1 ( yˆ i y )2=SSModel SSM = MSM MSG/MSE = F1,n2 Pr(F > F1, n2*) Residual n2 ei2 = SSResidual SSR/( n 2) = MSE Total n 1 (yi y ) = SSTotal SST/( n 1)=MST 2 Residuals are often called errors since they are the part of the variation that the line could NOT explain, so MSR = MSE = sum of squared residuals/df = ˆ 2 = estimate for variance of the population regression line SSTot/(n1) = MSTOT = sy2 = the total variance of the y’s F = t2 for Simple Linear Regression. The larger the F (the smaller the p-value) the more of y’s variation the line explained so the less likely H0 is true. We reject when the p-value < . R2 = proportion of the total variation of y explained by the regression line = SSM/SST = 1 – SSResidual/SST ANALYSIS OF VARIANCE One-Way ANOVA Assumptions: 1. Each of the k population or group distributions is normal. check with a Normal Quantile Plot (or boxplot) of each group 2. These distributions have identical variances (standard deviations). check if largest sd is > 2 times smallest sd 3. Each of the k samples is a random sample. 4. Each of the k samples is selected independently of one another. H0: 1 = 2 = . . . = k vs. HA: not all k means are equal (this will always be the hypotheses, the only difference is # of groups) no effect the effect of the ‘treatment’ is significant ANOVA Table: Source Group(between) Error(within) Total degrees of freedom k-1 N-k N-1 Sum of Squares ni( x i - x )2=SSG (ni – 1)si2 = SSE (xij- x )2= SSTot Mean Squares SSG/(k-1) = MSG SSE/(N-k) = MSE SSTot/(N-1)=MST F value p-value MSG/MSE = Fk-1,N-k Pr(F > Fk-1,N-k *) N = total number of observations = ni, where ni = number of observations for group i The F test statistic has two different degrees of freedom: the numerator = k –1, and the denominator = N – k Fk-1,N-k 2 2 NOTE: SSE/(N-k) = MSE = sp2 = (pooled sample variance) = (n1 1)s1 ... (nk 1)sk = ˆ 2 = estimate for assumed (n1 1) ... (nk 1) equal variance this is the ‘average’ variance for each group SSTot/(N-1) = MSTOT = s2 = the total variance of the data (assuming NO groups) F variance of the (between) samples means divided by the ~average variance of the data, the larger the F (the smaller the p-value) the more varied the means are so the less likely H0 is true. We reject when the pvalue < . R2 = proportion of the total variation explained by the difference in means = SSG SSTot