Day 6 – Distribution of the Sample Mean


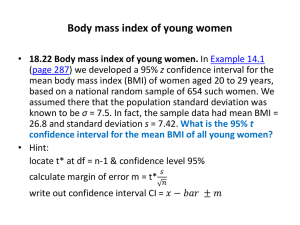

Population: $\mu$ = mean, $\sigma$ = SD. Sample: $\bar{X}$ = sample mean, $s$ = sample SD.

- In many investigations the data of interest can take on many possible values. Examples we will consider are attachment loss and DMFS.
- With this type of data it is often of interest to estimate the population mean, $\mu$.
- A common estimator for $\mu$ is the sample mean, $\bar{X}$.
- In this lecture we will focus on the sampling distribution of $\bar{X}$.

Distribution of the Sample Mean

$\bar{X}$ is a random variable. Its value is determined partly by which people are randomly chosen to be in the sample.

[Figure: Many possible samples, many possible $\bar{X}$'s. Histograms of 20 different samples; their sample means range from 1.38 to 1.78.]

We only see one! We will have a better idea of how good our one estimate is if we know how $\bar{X}$ behaves, that is, if we know the probability distribution of $\bar{X}$.

Central Limit Theorem

An important result in probability theory tells us that averages (like $\bar{X}$) all have approximately the same type of probability distribution: the Normal distribution.

[Figure: The probability distribution of $\bar{X}$ is the Normal distribution, centered at $\mu$, "no matter what!*"]

The Central Limit Theorem says that the distribution of $\bar{X}$ will be approximately Normal, no matter what distribution the original data have.* The sample needs to be reasonably large for the Central Limit Theorem to hold.

(*) Well, almost all the time, anyway: the population must have a finite standard deviation $\sigma$.

Example: Mean attachment levels

By computer simulation we randomly chose samples of 100 patients from the mean attachment level data. For each sample of 100, we computed the sample mean.
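The simulation described above can be sketched in Python using only the standard library. The attachment-level data themselves are not reproduced in these notes, so this sketch assumes a hypothetical right-skewed population (exponential, with mean 1.6 mm and therefore SD 1.6 mm); the function names are illustrative, not taken from the course materials.

```python
import random
import statistics

def simulate_sample_means(draw, n, reps, seed=0):
    """Draw `reps` independent samples of size n and return their sample means."""
    rng = random.Random(seed)
    return [statistics.fmean(draw(rng) for _ in range(n)) for _ in range(reps)]

# ASSUMPTION: a hypothetical right-skewed (exponential) population stands in for
# the real attachment-level data. For an exponential, the mean equals the SD.
MU = 1.6      # population mean (mm), assumed
SIGMA = 1.6   # population SD (mm), equal to MU for an exponential

def draw_attachment_level(rng):
    # One hypothetical patient's mean attachment level.
    return rng.expovariate(1 / MU)

means = simulate_sample_means(draw_attachment_level, n=100, reps=1000, seed=6)

print(f"mean of the 1000 sample means: {statistics.fmean(means):.3f} (mu = {MU})")
print(f"SD of the 1000 sample means:   {statistics.stdev(means):.3f} "
      f"(sigma / sqrt(n) = {SIGMA / 100 ** 0.5:.3f})")
```

Even though the assumed population is strongly skewed, the 1000 simulated means cluster symmetrically around $\mu$ = 1.6 mm, with a spread close to $\sigma/\sqrt{n} = 1.6/\sqrt{100} = 0.16$ mm. Changing `n` to 25 or 400 roughly doubles or halves that spread.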
The histogram below presents the distribution of 1000 such sample means.

[Figure: Histogram of means from samples of 100 patients; x-axis: average mean attachment level (mm), from 1.2 to 2.0.]

Note that the shape is similar to that of a Normal distribution.

More about the distribution of $\bar{X}$

- The average value of $\bar{X}$ will be $\mu$.
- The standard deviation of $\bar{X}$ is $\sigma/\sqrt{n}$, where $\sigma$ is the standard deviation in the population and $n$ is the number of people in the sample.
- The standard deviation of $\bar{X}$ is called the standard error of the mean, or $SE(\bar{X})$.
- The standard error decreases as $n$ increases.
- The standard error increases as $\sigma$ increases.

Central Limit Theorem Example

[Figure: Distribution of cigarettes/day among 4893 current smokers, and histograms of means from repeated samples of size 5, 20, and 50.]

- Distribution of 4893 current smokers: mean = 15.2, sd = 11.78 cigarettes/day
- Means from samples of size 5: mean = 15.28, sd = 5.27
- Means from samples of size 20: mean = 15.02, sd = 2.59
- Means from samples of size 50: mean = 15.2, sd = 1.68

As the size of the samples increases, the variability of the means decreases, and the distributions of the means look more like Normal distributions.

Properties of the Sample Mean (Summary)

1. Unbiased: $E(\bar{X}) = \mu$. The expected value of the sample mean is the true population mean.
2. Standard error: $SE(\bar{X}) = \sigma/\sqrt{n}$. $SE(\bar{X})$ is the standard deviation of the sampling distribution of $\bar{X}$.
3. Approximate Normality: By the Central Limit Theorem, for large $n$, the distribution of $\bar{X}$ should be approximately Normal.

In summary, properties 1-3 say that for large $n$,
$$\bar{X} \approx N\left(\mu, \frac{\sigma^2}{n}\right).$$

Example: Rosner birthweight data

[Figure: Rosner Birthweight Data (Table 6.2): histogram of birthweight (oz); $\mu$ = 112 oz, $\sigma$ = 20.6 oz.]

The histogram above shows the distribution of birthweights at a Boston hospital. Estimate the probability that the mean birthweight of the next 20 babies born will be greater than 120 oz.

$$P(\bar{X} > 120) = P\left(\frac{\bar{X} - 112}{20.6/\sqrt{20}} > \frac{120 - 112}{20.6/\sqrt{20}}\right) = P(Z > 1.74) = 0.0409$$

Now, assuming that the birthweights follow a Normal distribution (which they don't exactly, but it looks close), calculate the percentage of births that exceed 120 oz.

$$P(X > 120) = P\left(\frac{X - 112}{20.6} > \frac{120 - 112}{20.6}\right) = P(Z > 0.39) = 0.3483$$

That is, about 34.8% of individual births exceed 120 oz.

Note that we could not do the second calculation this way with the smoking data, because the original distribution is not Normal. But we could do the first!

Law of Large Numbers

Remember that for large $n$, $\bar{X} \approx N(\mu, \sigma^2/n)$. For any $n$, the distribution of $\bar{X}$ is centered around $\mu$, and the variation of $\bar{X}$ decreases as $n$ increases. Thus, as the sample size gets large, the sample mean $\bar{X}$ is forced closer and closer to the population mean $\mu$: larger sample sizes give better estimates of $\mu$.

The same is true for the sample standard deviation: as the sample size increases, $s$ should get closer to the population standard deviation $\sigma$.

Standard Error versus Standard Deviation

- Standard deviation describes the variability of a population or a sample.
- Standard error describes the variability of an estimator, which is usually a function of the whole sample.

The two terms are sometimes used interchangeably, which is incorrect.

Confidence Intervals for the Mean

If $n$ is large enough, we can use the result that
$$\frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \approx N(0,1)$$
to construct a confidence interval for $\mu$. However, this would result in a formula that requires $\sigma$, which, in usual practice, will not be known. We will estimate the population standard deviation $\sigma$ with $s$, the sample standard deviation. Substituting the random variable $s$ for $\sigma$ alters the distribution of the Z score slightly. The distribution of the statistic
$$T = \frac{\bar{X} - \mu}{s/\sqrt{n}}$$
is called a "t" distribution with $n-1$ degrees of freedom and will be denoted by $t_{n-1}$.

t Distribution

The t distribution is similar in shape to the standard Normal distribution, but more spread out: putting $s$ into the statistic adds more variability. As a result, the upper percentiles of t distributions (such as the 97.5th) are greater than the corresponding N(0,1) percentiles.
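The claim that substituting $s$ for $\sigma$ spreads the distribution out can be checked by simulation. This sketch assumes Normal data and samples of size $n = 5$, so $T$ should follow a t distribution with 4 degrees of freedom; `simulate_t_statistics` is a hypothetical helper. Python's standard library has a Normal quantile function (`statistics.NormalDist().inv_cdf`) but no t quantile, so the t percentile here is estimated from the simulated values.

```python
import random
import statistics

def simulate_t_statistics(n, reps, mu=0.0, sigma=1.0, seed=0):
    """Simulate T = (xbar - mu) / (s / sqrt(n)) for `reps` Normal samples of size n."""
    rng = random.Random(seed)
    ts = []
    for _ in range(reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        xbar = sum(sample) / n
        # Sample SD with divisor n - 1, the s used in the T statistic.
        s = (sum((x - xbar) ** 2 for x in sample) / (n - 1)) ** 0.5
        ts.append((xbar - mu) / (s / n ** 0.5))
    return ts

# Samples of size 5 from N(0, 1): T should follow t with n - 1 = 4 degrees of freedom.
ts = simulate_t_statistics(n=5, reps=50_000, seed=6)

z_975 = statistics.NormalDist().inv_cdf(0.975)  # 97.5th percentile of N(0,1)
# quantiles(..., n=40) returns 39 cut points; the last one is the 97.5th percentile.
t_975 = statistics.quantiles(ts, n=40)[-1]

print(f"97.5th percentile of N(0,1):         {z_975:.2f}")
print(f"97.5th percentile of simulated t(4): {t_975:.2f}")
```

The simulated 97.5th percentile should land near 2.78, the t(4) value, rather than 1.96. Rerunning with a larger `n` moves it toward 1.96, in line with t distributions looking more Normal as the degrees of freedom grow.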
t distributions with fewer degrees of freedom (which correspond to smaller sample sizes) are more spread out, and thus have higher upper percentiles. t distributions with more degrees of freedom are more similar to the Normal distribution.

[Figure: N(0,1) pdf vs. t(4) pdf. The 97.5th percentile of N(0,1) is 1.96; the 97.5th percentile of t(4) is 2.78.]

Confidence Interval for the Mean

If $X$ is Normal or $n$ is large, then
$$T = \frac{\bar{X} - \mu}{s/\sqrt{n}}$$
has a t distribution with $n-1$ degrees of freedom (approximately, when the data are not Normal but $n$ is large), so
$$P\left(-t_{n-1,.975} \le \frac{\bar{X} - \mu}{s/\sqrt{n}} \le t_{n-1,.975}\right) = 0.95,$$
where $t_{n-1,.975}$ denotes the 97.5th percentile of a $t_{n-1}$ distribution. From this, some algebraic manipulation gives
$$P\left(\bar{X} - t_{n-1,.975}\frac{s}{\sqrt{n}} \le \mu \le \bar{X} + t_{n-1,.975}\frac{s}{\sqrt{n}}\right) = 0.95,$$
which says that we are "95% confident" that $\mu$ lies in the interval
$$\left(\bar{X} - t_{n-1,.975}\frac{s}{\sqrt{n}},\; \bar{X} + t_{n-1,.975}\frac{s}{\sqrt{n}}\right).$$
This 95% confidence interval is a random quantity: over repeated samples, it will "cover" the true population mean 95% of the time.

Example: Chewing gum study

Group A consisted of $n = 25$ children. The sample mean of the change in DMFS was $-0.72$, and the sample standard deviation was $s = 5.37$. The 97.5th percentile of the $t_{24}$ distribution is $t_{24,.975} = 2.06$ (look it up in Table 4 in the coursepack or use Excel), so the 95% confidence interval is
$$-0.72 \pm 2.06 \times \frac{5.37}{\sqrt{25}},$$
which gives the interval $(-2.93, 1.49)$.

"We are 95% confident that the true mean change in DMFS for this treatment is contained in the interval (-2.93, 1.49)."

Note: the true mean $\mu$ is not a random quantity; it is the confidence interval that varies from sample to sample. See coursepack Figure 8.1 for a pictorial description of this idea.

General formula: a $100(1-\alpha)\%$ confidence interval for $\mu$ is
$$\bar{X} - t_{n-1,1-\alpha/2}\frac{s}{\sqrt{n}} \quad\text{to}\quad \bar{X} + t_{n-1,1-\alpha/2}\frac{s}{\sqrt{n}},$$
where $t_{n-1,1-\alpha/2}$ is the $100(1-\alpha/2)$th percentile of a $t_{n-1}$ distribution.

Example: Suppose $n = 30$. For a 95% confidence interval, $\alpha = 0.05$, so we use the 97.5th percentile, $t_{n-1,1-\alpha/2} = t_{29,.975} = 2.05$, in the formula.

$\alpha$ is meant to indicate the "error" we are willing to live with.
That is, when estimating the mean with a 95% confidence interval, we allow an $\alpha = 5\%$ chance of missing the true mean. It is standard to use the $100(1-\alpha/2)$th percentile because we want to split the error evenly between the two sides of the interval ($\alpha/2$ in each tail).

Example: Find the 99% confidence interval for $\mu$ for the change in DMFS in gum group A. The acceptable error in this case is $\alpha = 1\%$, so we use the $100(1 - .01/2) = 99.5$th percentile. From Table 4, $t_{24,.995} = 2.80$, so the 99% CI is
$$-0.72 \pm 2.80 \times \frac{5.37}{\sqrt{25}},$$
which gives the interval $(-3.73, 2.29)$.

Note: the 99% confidence interval is wider than the 95% confidence interval. It needs to be wider to have a better chance of covering $\mu$.
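The interval arithmetic for gum group A can be reproduced with a small helper. This is a sketch: `t_confidence_interval` is a hypothetical name, and the critical values 2.06 and 2.80 are the rounded table values quoted in these notes rather than computed, because Python's standard library has no t-distribution quantile function.

```python
import math

def t_confidence_interval(xbar, s, n, t_crit):
    """Return (lower, upper) = xbar -/+ t_crit * s / sqrt(n).

    t_crit is the t_{n-1, 1-alpha/2} percentile, looked up from a table.
    """
    half_width = t_crit * s / math.sqrt(n)  # t times the estimated standard error
    return xbar - half_width, xbar + half_width

# Chewing gum study, group A: n = 25, mean change in DMFS = -0.72, s = 5.37.
ci_95 = t_confidence_interval(-0.72, 5.37, 25, t_crit=2.06)  # t_{24,.975}
ci_99 = t_confidence_interval(-0.72, 5.37, 25, t_crit=2.80)  # t_{24,.995}

print("95% CI: ({:.2f}, {:.2f})".format(*ci_95))  # prints 95% CI: (-2.93, 1.49)
print("99% CI: ({:.2f}, {:.2f})".format(*ci_99))  # prints 99% CI: (-3.73, 2.29)
```

If SciPy is installed, `scipy.stats.t.ppf(0.975, 24)` returns the unrounded critical value (about 2.064), which widens the 95% interval very slightly.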