Reliability of Laboratory Measurements


In chemistry, as in much of science, many experimental results are quantitative and are expressed as numbers. In some cases the measured quantity has an exact value. An example is the determination of the number of hydrogen atoms in the water molecule. The result must be an integer. The answer is exactly two. For most measurements, however, there is no definite, exact result. There is always an uncertainty in the measurement caused by human error, the limitations and errors in measuring devices, etc.

It is necessary, therefore, that a scientist be aware of the uncertain nature of her or his results, seek ways of assessing the magnitude of the errors in the measurements, and report the results to others in a way that indicates the degree of confidence that can be placed in the measurements.

Systematic and Random Errors

Experimental errors are often distinguished as being systematic or random. A systematic error is one which is inherent in the experimental procedure or in the measuring instruments. Such an error will cause the measured quantity to be wrong in the same direction (i.e., to be either too large or too small) and by about the same amount each time the experiment is repeated. It is in this sense that such errors are said to be systematic. A random error is one which varies in size and direction between repetitions of the experiment. It may result, for example, from the difficulty in reading an instrument or from failure to reproduce the conditions of the experiment exactly.

An example may clarify the distinction. Let's imagine that a chemist has the task of determining the mass per milliliter of dissolved salts in a saline solution. The chemist decides to do this by delivering the solution from a 25-ml volumetric pipet into a weighed evaporating dish, evaporating the solution to dryness, and reweighing the dish. Each time this determination is repeated the experimenter will probably make a small error in adjusting the meniscus of the solution to the mark on the pipet. This is an example of random error. It might cause the delivery of too much solution one time, and too little the next. Similar errors might be made in determining the mass of the evaporating dish on the balance. Another source of random error would be the failure to evaporate the salt to dryness carefully. Solution might spatter from the dish causing a loss of salt, resulting in too low a mass of salt; or the salt might not be completely dried, resulting in too high an apparent mass.

An example of a systematic error in this determination is the use of a pipet which has been miscalibrated by the manufacturer. If a pipet is used which delivers, say, 24.50 ml instead of 25.00 ml, the measured salt content will be too small by about the same amount, 5 parts in 250, each time the experiment is performed. A systematic error could also result if the balance used in the determination had been miscalibrated and gave consistently faulty values for the mass.

Systematic errors are often difficult to eliminate. The techniques and instruments used must be checked for possible errors. The pipet in the example above could have been calibrated by the experimenter so that its actual volume was known. Carrying out the same determination by two completely independent methods is another good way to check for systematic errors. Random errors are easier to estimate. Because they are random they can be treated by statistical theory. A description of the estimation of random errors is given below.

Precision and Accuracy

The accuracy of a measurement is a measure of the degree to which the measurement approaches the "true value" of the quantity measured. Normally, however, the "true value" is unknown and only indirect estimates of accuracy are possible. For example, the accuracy of a weighing might be estimated if a standard mass of known value were available to test the weighing procedure; or the accuracy of a determination of the percent copper in a brass sample could be established by applying the same procedure to a sample of pure copper.

The precision of a measurement is a measure of its reproducibility. If systematic errors have been eliminated from an experimental measurement we could expect that, while any single measurement might contain random error, the average of a large number of determinations ought to give the "true value". Unfortunately, chemical measurements are often time-consuming and only a limited number of repetitions is feasible. The statistical theory of random errors permits us to estimate the reliability of the average of a small group of measurements from their precision. It should be emphasized again, however, that such an estimate assumes that no systematic error is present. In the presence of systematic errors the estimation of the accuracy of a measurement from its precision is impossible.


Significant Figures

In the expression of a quantity by a number, all the digits which are reasonably reliable, except those zeros which are included to express the order of magnitude of the quantity, are termed significant figures. The volume of a box which is known to be between 43.5 and 43.7 cm³, for example, might be expressed as 43.6 cm³ or 43,600 mm³ or 0.0000436 m³. In each case there are only three significant figures: 436. The zeros serve only to locate the decimal point. When a zero appears between significant digits, it is itself significant. Thus the figure 708 has three significant figures. When the last significant digit of a number is a zero, in order to be clear, it is necessary to write the number so as to distinguish between significant and non-significant zeros. For example, we might weigh a large object on a balance which is sensitive only to the nearest 10 g. If the object weighs 3400 g, the first three digits are significant but the last zero serves only to locate the decimal point. To avoid the ambiguity about the significance of the zeros, the weight should be expressed either as 3.40 × 10³ g or as 3.40 kg.
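One way to make the number of significant figures explicit is to print the value in scientific notation. The short Python sketch below is only an illustration; the helper name `to_scientific` is our own and does not come from the text.

```python
def to_scientific(value: float, sig_figs: int) -> str:
    """Format value in scientific notation showing exactly sig_figs digits."""
    return f"{value:.{sig_figs - 1}e}"


# The box volume in cm^3, mm^3 and m^3: the same three digits appear each time.
for volume in (43.6, 43600, 0.0000436):
    print(to_scientific(volume, 3))

# The 3400 g weighing, good to the nearest 10 g (three significant figures).
print(to_scientific(3400, 3))
```

Only the exponent changes between the three volume units, which is the sense in which the zeros merely locate the decimal point.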

In order to decide how many digits of a measurement are significant, one must consider the way in which the measurement was made. The general rule is that in reading a number from left to right the first doubtful digit is the last significant figure and is normally the last figure which should be written. If the length of an iron bar is measured with a meter stick marked off in centimeters, as shown in Fig. 26, the first doubtful figure would be the estimated number of millimeters between the 17 cm mark and the end of the bar. The length would be expressed to three significant figures, e.g., 17.7 cm. If the meter stick were marked off in millimeters, by measuring very carefully it might be possible to estimate the nearest tenth of a millimeter. Then the length might be expressed as 17.72 cm, to four significant figures.

Figure 26


Absolute and Relative Error

Which is the "better" measurement – the length of a 4 meter piece of lumber to within 1 millimeter or the length of a 400 meter running track to within one centimeter?

It is clear that the absolute error, which is the actual error and has the same dimensions as the quantity measured, is not by itself a good indication of the precision of a measurement. Although the first measurement has an absolute error of one millimeter and the second an absolute error 10 times as large, we would probably agree that the latter measurement represents greater care and skill on the part of the measurer. We get into even greater difficulty when we try to compare absolute errors of different dimensions, 0.4 ml and 0.05 grams, for example.

We can more successfully compare errors if we use the relative error, which is the absolute error divided by the value of the measured quantity expressed in the same units.

The relative error is dimensionless and can be expressed as a fraction, a percentage, in parts per thousand, etc. Consider the two measurements compared above. The relative error for the lumber measurement is

$$\frac{0.001\ \text{m}}{4.0\ \text{m}} = 0.00025$$

or, multiplying by 1000, 0.25 parts per thousand (ppt).

The relative error in measuring the running track is

$$\frac{0.01\ \text{m}}{400\ \text{m}} = 0.000025$$

or 0.025 ppt.

The use of relative error confirms our intuition that the latter is the "better" or more precise measurement.
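The comparison above can be checked with a few lines of Python. This is a minimal sketch; the function name `relative_error_ppt` is our own choice.

```python
def relative_error_ppt(absolute_error: float, value: float) -> float:
    """Relative error in parts per thousand; both arguments in the same units."""
    return absolute_error / value * 1000


lumber_ppt = relative_error_ppt(0.001, 4.0)   # 1 mm on a 4 m board
track_ppt = relative_error_ppt(0.01, 400.0)   # 1 cm on a 400 m track

print(f"lumber: {lumber_ppt:.3f} ppt")
print(f"track:  {track_ppt:.3f} ppt")
```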

Propagation of Errors

More often than not, the result of an experiment is derived by mathematical manipulation of the experimentally measured quantities. For example, in order to analyze for calcium in a sample one can weigh the sample, precipitate the calcium in it as calcium oxalate, and then weigh the CaC₂O₄. The raw measurements, the weights of sample and CaC₂O₄, are used to calculate the percent calcium. We will need to know how reliable the calculated quantity is, based on the precision of the raw data.

For our purposes the following rules will suffice to indicate how errors are propagated.


1. When two numbers are added or subtracted, the error in the answer is the sum of the absolute errors in the original numbers. Thus, the error in the volume of liquid delivered by a buret is the sum of the errors in measuring the initial and final positions of the meniscus.

Final reading 30.64 ± 0.01 ml

Initial reading 0.89 ± 0.01 ml

Volume delivered 29.75 ± 0.02 ml

Application of this rule illustrates that addition (or subtraction) gives an answer with an absolute error about the same size as the least precisely known of the addends.

Thus if one calculates the total length of a large object and a small one, it makes little sense to measure the small one very precisely, even if this is relatively easy to do.

39.6 ± 0.1 cm

0.854 ± 0.002 cm

40.5 ± 0.1 cm

It would be just as good, from the standpoint of the error in the total length, to measure the small object to the nearest 0.1 cm.

2. When two numbers are divided or multiplied, the relative error in the answer is the sum of the relative errors in the original numbers. For example, if the weight of a 10.00-g sample of water is known to 0.01 g, or to a fractional error of 1/1000, and the density of water, 0.99715, is known to the fifth decimal place, or to about 1/100,000, the error in the volume of the water calculated from the equation, volume = weight/density, is

$$\frac{1}{1000} + \frac{1}{100{,}000} = \frac{101}{100{,}000}$$

Thus, the volume is known to about 1/1000 and should be expressed as 10.03 ml, to four significant figures.

Using these rules and estimates of the precision of experimental measurements, it is possible to estimate the errors in the reported results of experiments. These can then be reported with the proper number of significant figures, which gives a reader of the results a rough idea of their precision.
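The two rules can be sketched in Python as follows. The function names are our own, and the code applies only the simple summation rules stated above, using the buret and water examples from the text.

```python
def add_sub_error(*absolute_errors: float) -> float:
    """Rule 1: for sums and differences, absolute errors add."""
    return sum(absolute_errors)


def mul_div_relative_error(*relative_errors: float) -> float:
    """Rule 2: for products and quotients, relative errors add."""
    return sum(relative_errors)


# Rule 1: volume delivered by a buret (final reading minus initial reading).
volume = 30.64 - 0.89
volume_err = add_sub_error(0.01, 0.01)
print(f"volume delivered = {volume:.2f} ± {volume_err:.2f} ml")

# Rule 2: volume of water from weight / density.
weight, weight_err = 10.00, 0.01
density, density_err = 0.99715, 0.00001
water_volume = weight / density
rel = mul_div_relative_error(weight_err / weight, density_err / density)
print(f"water volume = {water_volume:.2f} ml, relative error = {rel:.5f}")
```

The relative error of the quotient is dominated by the weighing, so improving the density value further would gain nothing.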


Distribution of Measurements

Statistical theory provides a measure more precise than significant figures of the reliability of experimental results. It must be emphasized, however, that the method, strictly speaking, gives an estimate of the precision of the measurement and applies to the accuracy only in the absence of systematic errors.

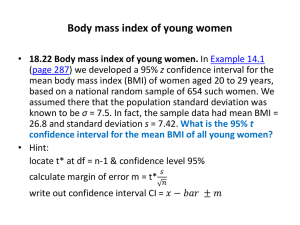

Figure 27. The Gaussian or Normal Distribution Curve

If a measurement, $x$, is repeated many times, and the errors in the measurement are random, we would expect the individual results to group around the "true value"; just as many measurements would be expected to be too large as too small. The best estimate of the "true value" is the arithmetic mean, $\bar{x}$, and if the number of trials is very large the difference between the mean and the "true value" approaches zero. The mean, $\bar{x}$, is given by

$$\bar{x} = \frac{\sum_i x_i}{n} \qquad (1)$$

where $n$ is the number of individual measurements, each of which is designated as $x_i$.

If the number of trials is small, e.g., three, the mean may be different from the "true value" since the random errors in the trials might, for example, give three results, all of which are too large. There is no way, other than increasing the number of trials, to defeat this problem and the mean is still the best estimate of the "true value."

Statistical theory, which is supported by experience, tells us exactly how an infinitely large number of measurements group around the mean. The distribution can be represented graphically as shown in Fig. 27 or by means of the Gaussian distribution function

$$p(x_i) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x_i - \mu)^2}{2\sigma^2}} \qquad (2)$$

In this equation, $p(x_i)$ is the probability of obtaining a measurement with the value $x_i$; $\mu$ is the mean value, given this symbol to distinguish it from $\bar{x}$, the mean of a finite number of measurements; and $\sigma$ is the standard deviation of the group of measurements.

The standard deviation of a very large set of $n$ measurements, whose mean is $\mu$, is defined as

$$\sigma = \left[\frac{\sum_i (x_i - \mu)^2}{n}\right]^{1/2} \qquad (3)$$

Another interpretation of $p(x)$ is the fraction of a very large number of measurements which will have a particular value, $x_i$. Several things should be noted in Eqs. 2 and 3 and in Fig. 27. The quantity $(x_i - \mu)$ is the deviation of the given measurement from the mean; effectively, the error in the measurement. The square of this quantity has the same value whether the deviation is positive or negative, i.e., whether the measurement is too large or too small. Thus, the curve in Fig. 27 is symmetrical around $x_i = \mu$. It falls off in the same way for positive as for negative errors. The standard deviation also depends on the squares of the errors or deviations of the measurements. The larger the errors, the greater the scatter in the measurements, the larger the value of $\sigma$, and the slower the fall of $p(x)$ as $x$ gets further from $\mu$. Figure 28 shows two Gaussian or normal error curves superimposed. The mean value for both curves is 20, but $\sigma = 1$ for one and $\sigma = 2$ for the other.

Figure 28. Comparison of Gaussian Curves with Different Standard Deviations.

We can draw another general conclusion from the curve in Fig. 27. The probability that a measurement lies between two given values, $x_1$ and $x_2$, is the area that lies under the curve between $x_1$ and $x_2$.

For the Gaussian curve, 68% of the area under the curve lies between $x = \mu - \sigma$ and $x = \mu + \sigma$, 95% of the area lies between $x = \mu - 2\sigma$ and $x = \mu + 2\sigma$, and over 99% lies between $\mu \pm 3\sigma$. The standard deviation is thus a measure of the reliability of any measurement in the group. We can restate this property of the Gaussian distribution in the following way. If we make one more measurement we can be 68% confident that it will lie within one standard deviation on either side of $\mu$. That is, there is a 68% probability that it will lie within this interval. We can have 95% confidence that it will lie within a distance of $2\sigma$ on either side of $\mu$, and so forth. This is often stated in the following way: the 95% confidence interval of the measurement is $\pm 2\sigma$.

Thus, if we know $\sigma$ for a set of measurements, we can make some meaningful statements about the reliability of a representative measurement.
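The areas quoted above can be checked numerically. The sketch below relies on the standard identity that, for a Gaussian distribution, the fraction of the area within $k$ standard deviations of the mean equals erf($k/\sqrt{2}$); the function name `fraction_within` is our own.

```python
from math import erf, sqrt


def fraction_within(k: float) -> float:
    """Fraction of a Gaussian's area lying within k standard deviations of the mean."""
    return erf(k / sqrt(2))


for k in (1, 2, 3):
    print(f"within ±{k} standard deviations: {fraction_within(k):.2%}")
```

The result does not depend on the particular values of the mean or the standard deviation, which is why a single set of percentages serves for every Gaussian curve.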


The Student Distribution

The problem of being able to take only limited numbers of chemical measurements remains. The Gaussian curve presupposes an infinite number of random measurements. To distinguish the standard deviation and arithmetic mean of a finite group of measurements from those for the infinite sets of Eqs. 2 and 3, $S$ and $\bar{x}$ are used to designate these quantities:

$$S = \left[\frac{\sum_i (x_i - \bar{x})^2}{n - 1}\right]^{1/2} \qquad (4)$$

We were able to give a straightforward interpretation to $\sigma$, which is based on the large set of measurements, but have to settle in most cases for $S$, which is based on a small dataset. $S$ is an estimate of $\sigma$, and any statistical inferences for $\sigma$ become approximations when they are applied to $S$. Furthermore, $S$ becomes a poorer estimate of $\sigma$ as the size of the sample decreases. An English statistician who published under the pseudonym Student addressed this problem. His mathematical solution involves the form of the distribution of errors for variously sized sets of measurements. The form of this distribution depends on the number of measurements taken.

The usual way of making use of the Student distribution to express the reliability of a result from a set of data whose standard deviation is $S$ is to select a confidence level with which we would like to express the result. We then use a t-table, based on the Student distribution, which gives a factor, the Student’s t value, by which to multiply $S$ to give the desired confidence interval of that result. The factor to be used depends on both the confidence level selected and the degrees of freedom, $f$, which equals the number of measurements in the group from which $S$ was calculated minus the number of parameters determined from the data. In this discussion, one parameter, the mean value, is extracted from the data, so $f = n - 1$.

As an example, suppose that three measurements of a length give the following values:

89.36 cm

89.24 cm

89.31 cm

$$\bar{x} = \frac{267.91\ \text{cm}}{3} = 89.30\ \text{cm} = \text{average value}$$

$$S = \left[\frac{(89.36 - 89.30)^2 + (89.24 - 89.30)^2 + (89.31 - 89.30)^2}{2}\right]^{1/2} = \left[\frac{(0.06)^2 + (-0.06)^2 + (0.01)^2}{2}\right]^{1/2} = \sqrt{0.00365} = 0.060\ \text{cm}$$

If we wish to express the error interval within which we can have 95% confidence that a measurement of the length will lie, we refer to the t-table in Appendix H and find the number corresponding to a sample size of 3 at a confidence level of 95%. This number is 4.303.

95% confidence interval = (4.303)(0.060 cm) = ± 0.26 cm
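The same calculation can be sketched with Python's standard `statistics` module, whose `stdev` function uses the $n - 1$ divisor of Eq. 4. The t value is copied from the text rather than computed, and the variable names are our own. Because the code does not round intermediate values, a last digit may differ slightly from a hand calculation.

```python
from statistics import mean, stdev

lengths_cm = [89.36, 89.24, 89.31]
T_95_F2 = 4.303  # Student's t for 95% confidence and f = 2, as quoted in the text

x_bar = mean(lengths_cm)   # arithmetic mean, Eq. 1
s = stdev(lengths_cm)      # sample standard deviation, Eq. 4
ci_single = T_95_F2 * s    # 95% confidence interval of a single measurement

print(f"mean = {x_bar:.2f} cm")
print(f"S = {s:.3f} cm")
print(f"95% confidence interval = ±{ci_single:.2f} cm")
```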

Confidence Interval of the Mean

Occasionally we will be interested in the reliability of a single measurement. This would be the case, for example, if we calibrated a volumetric pipet. The important thing to know is the amount of error in a single delivery from the pipet. More often, however, we make several measurements of the same quantity and we want to estimate the reliability of the average value of these measurements. Again, statistical theory provides the answer to this problem. It can be shown that if the standard deviation of a small set of measurements is S, the standard deviation of the average values of a large number of such sets from the average of all the measurements is

$$S_m = \frac{S}{\sqrt{n}} \qquad (5)$$

where $n$ is the number of measurements in the small set, and $S_m$ is termed the standard deviation of the mean. $S_m$ can be treated in exactly the same way as was described above for $S$ to establish a confidence interval of the mean. Thus, for the example above:

$$S_m = \frac{0.060\ \text{cm}}{\sqrt{3}} = 0.035\ \text{cm}$$

The 95% confidence interval of the mean is

(4.303)(0.035 cm) = ± 0.15 cm


Relative Deviations and Confidence Intervals

The standard deviation, the standard deviation of the mean, and the confidence intervals described above are all dimensioned quantities and have the same units as the experimental measurements. To make these quantities independent of the units of the measurement and to make it possible to compare the reliabilities of measured quantities of different sizes, it is customary to express these quantities in relative terms. As indicated earlier, this is done by dividing the appropriate error measure by the mean value of the measured quantity. For our example, the relative standard deviation of the mean is

$$\frac{S_m}{\bar{x}} = \frac{0.035\ \text{cm}}{89.30\ \text{cm}} \times 1000 = 0.39\ \text{parts per thousand}$$

The relative 95% confidence interval of the mean is

$$\frac{0.15\ \text{cm}}{89.30\ \text{cm}} \times 1000 = 1.7\ \text{ppt}$$
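The standard deviation of the mean and the relative measures for the length example can be computed together, as in the sketch below. The t value is again taken from the text, the variable names are our own, and nothing is rounded until the results are printed.

```python
from math import sqrt
from statistics import mean, stdev

lengths_cm = [89.36, 89.24, 89.31]
T_95_F2 = 4.303  # Student's t for 95% confidence and f = 2, as quoted in the text

x_bar = mean(lengths_cm)
s_m = stdev(lengths_cm) / sqrt(len(lengths_cm))  # standard deviation of the mean, Eq. 5
ci_mean = T_95_F2 * s_m                          # 95% confidence interval of the mean

rel_s_m_ppt = s_m / x_bar * 1000                 # relative values in parts per thousand
rel_ci_ppt = ci_mean / x_bar * 1000

print(f"S_m = {s_m:.3f} cm ({rel_s_m_ppt:.2f} ppt)")
print(f"95% confidence interval of the mean = ±{ci_mean:.2f} cm ({rel_ci_ppt:.1f} ppt)")
```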

Significant Figures and Rounding Off of Experimental Results

We can now discuss how to express final experimental results to the correct number of significant figures. The general rules we shall use are these.

I. The standard deviation, standard deviation of the mean, and confidence intervals, whether absolute or relative, should be expressed by two digits starting with the leftmost non-zero digit in these quantities.

II. The average value should be carried out, at the most, to the decimal place corresponding to the leftmost non-zero digit in the standard deviation of the mean, but never to more decimal places than justified by the absolute error as calculated from the individual experimentally measured quantities, using the rules for the propagation of errors.

After you have decided in which decimal place the last significant figure occurs in the average value, it is customary to round off the number in that decimal place. We will use the following rules for rounding off numbers; they are consistent with the conventions used by most electronic calculators.

1. When the first digit dropped is 5 or greater than 5, the last digit retained is increased by 1.

2. When the first digit dropped is less than five, the last digit retained is left unchanged.
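If results are rounded by computer rather than on a calculator, some care is needed: Python's built-in `round` sends exact ties to the nearest even digit and works on binary floating-point values, so it does not always follow the two rules above. A minimal sketch that does follow them uses the standard `decimal` module; the function name `round_half_up` is our own.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_half_up(value: str, places: int) -> Decimal:
    """Round a decimal string to `places` decimals; a dropped 5 or more rounds up."""
    quantum = Decimal(10) ** -places  # e.g. places=2 gives Decimal('0.01')
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)


print(round_half_up("1.2074", 3))  # first digit dropped is 4: last digit unchanged
print(round_half_up("1.2074", 2))  # first digit dropped is 7: last digit increased
print(round_half_up("0.125", 2))   # first digit dropped is 5: last digit increased
```

Passing the value as a string keeps the digits exactly as written, so the decision depends only on the first digit dropped.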


The following example illustrates these principles and rules. Imagine that we measure the density of a liquid by releasing a known volume from a buret at constant temperature and weighing it. The buret is ruled in 0.1 ml divisions and we measure out about 2 ml in each trial. The data and results are as follows.

| Trial | Initial buret reading | Final buret reading | Liquid volume | Liquid weight | Density = wt./vol. |
|---|---|---|---|---|---|
| 1 | 0.14 ml | 2.09 ml | 1.95 ml | 2.3540 g | 1.2072 g/ml |
| 2 | 2.09 | 4.13 | 2.04 | 2.4570 | 1.2044 |
| 3 | 4.13 | 6.21 | 2.08 | 2.5158 | 1.2095 |
| 4 | 6.21 | 8.26 | 2.05 | 2.4776 | 1.2086 |

$$\bar{x} = \frac{1.2072 + 1.2044 + 1.2095 + 1.2086}{4} = 1.2074\ \text{g/ml} = \text{average value}$$

$$S = \left[\frac{(0.0002)^2 + (0.0030)^2 + (0.0021)^2 + (0.0012)^2}{3}\right]^{1/2} = 0.002228\ \text{g/ml} = \text{standard deviation}$$

$$S_m = \frac{0.002228}{\sqrt{4}} = 0.001114\ \text{g/ml} = \text{standard deviation of the mean}$$

95% confidence interval of the mean = (3.182)(0.001114) = 0.003545 g/ml

The average value of the density and the measures of precision have been expressed without regard to significant figures. We see that the leftmost non-zero digit in the measures of precision lies in the third decimal place. Application of Rule I would therefore require us to express these quantities as follows: S = 0.0022 g/ml; S m = 0.0011 g/ml; and 95% confidence interval of the mean = 0.0035 g/ml.
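The density statistics can be reproduced from the raw volumes and weights with the sketch below. The t value for three degrees of freedom is copied from the text and the variable names are our own. The densities are not rounded before averaging, so the later digits of $S$ and $S_m$ differ slightly from the hand calculation, although the two-digit values required by Rule I are the same.

```python
from math import sqrt
from statistics import mean, stdev

volumes_ml = [1.95, 2.04, 2.08, 2.05]
weights_g = [2.3540, 2.4570, 2.5158, 2.4776]
T_95_F3 = 3.182  # Student's t for 95% confidence and f = 3, as quoted in the text

densities = [w / v for w, v in zip(weights_g, volumes_ml)]
x_bar = mean(densities)
s = stdev(densities)               # sample standard deviation, Eq. 4
s_m = s / sqrt(len(densities))     # standard deviation of the mean, Eq. 5
ci_mean = T_95_F3 * s_m            # 95% confidence interval of the mean

print(f"mean density = {x_bar:.4f} g/ml")
print(f"S = {s:.4f} g/ml, S_m = {s_m:.4f} g/ml")
print(f"95% confidence interval of the mean = {ci_mean:.4f} g/ml")
```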

Since the leftmost non-zero digit in the standard deviation of the mean ($S_m$) is in the third decimal place it would appear, from Rule II, that the average should also be expressed to three decimals as 1.207 g/ml. However, in this case, the precision of the results is almost certainly due to good luck as well as good work, as will become apparent when we estimate the absolute error.

The error in each buret reading is about 0.01 ml. The error in the liquid volume is the sum of the errors in the initial and final readings (0.02 ml), corresponding to a relative error of about 1% or 10 ppt. The error in each weighing is about 0.0001 g. The error in the weight of each sample will be the sum of the errors in the initial and final weighings (0.0002 g), corresponding to a relative error of just over 8 parts in 100,000 or 0.08 ppt. Clearly the least reliable figure is the volume measurement, and the calculated value of the density can only be known reliably to about 1%, or to the second decimal place. This calculation shows that the absolute error is too large to justify the density value of 1.207 g/ml that was initially suggested by using the value of $S_m$ in Rule II. Instead, we should write the average as 1.21 g/ml. It is worth noting that the design of this experiment would have been greatly improved by using a larger volume of liquid. If we had used a liquid volume of about 40 ml, the relative error in the density could have been reduced to 0.5 ppt.
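The error estimate above can be written as a short calculation, which also shows the benefit of a larger sample. In this sketch the function and constant names are our own, and the weight of the larger sample is assumed to scale with the measured mean density of about 1.2074 g/ml.

```python
READING_ERR_ML = 0.01    # error in one buret reading, from the text
WEIGHING_ERR_G = 0.0001  # error in one weighing, from the text
MEAN_DENSITY = 1.2074    # g/ml, used only to estimate the sample weight


def density_relative_error_ppt(volume_ml: float) -> float:
    """Estimated relative error of the density, in ppt, for a given liquid volume."""
    weight_g = MEAN_DENSITY * volume_ml
    volume_err = 2 * READING_ERR_ML   # initial + final buret readings
    weight_err = 2 * WEIGHING_ERR_G   # initial + final weighings
    # Density is a quotient, so the relative errors add.
    return (volume_err / volume_ml + weight_err / weight_g) * 1000


small = density_relative_error_ppt(2.0)
large = density_relative_error_ppt(40.0)
print(f"2 ml sample:  about {small:.1f} ppt")
print(f"40 ml sample: about {large:.1f} ppt")
```

In both cases the volume term dominates, so refining the weighings further would not improve the result.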

The most important thing to remember in expressing results is to specify clearly the meaning of the error measurement you use. In the example above the results could be expressed as "a mean of 1.21 g/ml with a standard deviation of the mean of 0.0011 g/ml" or, "the mean value with its 95% confidence interval is 1.21 ± 0.0035 g/ml" or, "a mean value of 1.21 g/ml with a relative 95% confidence interval of 2.9 ppt." Finally, remember that this is a "bad" example in the sense that it was chosen to illustrate fortuitously small, and therefore misleading, error measurements. In most cases the number of significant figures arrived at by the two methods in Rule II will be identical.
