Semantic Parsing

Pushpak Bhattacharyya,

Computer Science and Engineering Department, IIT Bombay

pb@cse.iitb.ac.in

with contributions from Rajat Mohanty, S. Krishna, Sandeep Limaye

Motivation

• Semantics Extraction has many applications

– MT

– IR

– IE

• Does not come free

• Resource intensive

– Properties of words

– Conditions of relation establishment between words

– Disambiguation at many levels

• Current computational parsing is less than satisfactory for deep semantic analysis

Roadmap

• Current important parsers

– Experimental observations

– Handling of difficult language phenomena

• Brief Introduction to the adopted Semantic

Representation:

– Universal Networking Language (UNL)

• Two stage process to UNL generation:

approach-1

• Use of better parser: approach-2

• Consolidating statement of resources

• Observations on treatment of verb

• Conclusions and future work

Current parsers

Categorization of parsers (by method and by output)

• Rule based

– Constituency output: Earley chart (1970), CYK (1965-70), LFG (1970), HPSG (1985)

– Dependency output: Link (1991), Minipar (1993)

• Probabilistic

– Constituency output: Charniak (2000), Collins (1999), Stanford

– Dependency output: Stanford (2006), MST (2005), MALT (2007)

Observations on some well-known Probabilistic Constituency Parsers

Parsers investigated

• Charniak: Probabilistic Lexicalized Bottom-Up

Chart Parser

• Collins: Head-driven statistical Beam Search

Parser

• Stanford: Probabilistic A* Parser

• RASP: Probabilistic GLR Parser

Investigations based on

• Robustness to ungrammaticality

• Ranking in case of multiple parses

• Handling of embeddings

• Handling of multiple POS

• Words repeated with multiple POS

• Complexity

Handling ungrammatical sentences

Test sentence: Joe has reading the book

Charniak

• has is labelled as AUX

(S (NP (NNP Joe))
   (VP (AUX has)
       (VP (VBG reading)
           (NP (DT the) (NN book)))))

Collins

• has should have been AUX

Stanford

• has is treated as VBZ and not AUX.

RASP

• Confuses it as a case of sentence embedding

Ranking in case of multiple parses

Test sentence: John said Marry sang the song with Max

Charniak

• Semantically correct one chosen from among the multiple possible parse trees

(S (NP (NNP John))
   (VP (VBD said)
       (SBAR (S (NP (VB Marry))
                (VP (VBD sang)
                    (NP (DT the) (NN song))
                    (PP (IN with) (NP (NNP Max))))))))

Collins

• Wrong attachment

Stanford

• Same as Charniak

RASP

• Different POS tags, but parse trees are comparable

Time complexity

Time taken

• 54 instances of the sentence ‘This is just to check the time’ were parsed to measure the running time

• Time taken

– Collins: 40s

– Stanford: 14s

– Charniak: 8s

– RASP: 5s

• Reported complexity

– Charniak: O(n^5)

– Collins: O(n^5)

– Stanford: O(n^3)

– RASP: not known
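The timing experiment above can be sketched as a small harness. This is only an illustration under assumptions: `time_parser` and `whitespace_parse` are hypothetical names, and the stand-in parser merely splits on whitespace; a real run would pass in a wrapper around one of the parsers being compared.

```python
import time

SENTENCE = "This is just to check the time"

def time_parser(parse, sentence, repetitions=54):
    """Return the wall-clock seconds needed to parse `sentence` `repetitions` times."""
    start = time.perf_counter()
    for _ in range(repetitions):
        parse(sentence)
    return time.perf_counter() - start

def whitespace_parse(sentence):
    # Stand-in for a real parser call (assumption): just tokenizes on spaces.
    return sentence.split()

elapsed = time_parser(whitespace_parse, SENTENCE)
print(f"54 parses took {elapsed:.6f}s")
```

Repeating one short sentence measures per-sentence overhead rather than how time grows with sentence length, so it does not by itself confirm the reported complexities.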

Embedding Handling

Charniak

Test sentence: The cat that killed the rat that stole the milk that spilled on the floor that was slippery escaped.

[Parse tree figure: nested NP → NP SBAR structures for the successive relative clauses, ending in a VP with AUX was and ADJP slippery]

Collins

Stanford

RASP

Handling words with multiple

POS tags

Charniak

Test sentence: Time flies like an arrow

(S (NP (NNP Time))
   (VP (VBZ flies)
       (PP (IN like)
           (NP (DT an) (NN arrow)))))

Collins

Stanford

RASP

• Flies tagged as

noun!

Repeated Word handling

Charniak

Test sentence: Buffalo buffaloes Buffalo buffaloes buffalo buffalo Buffalo buffaloes

[Parse tree figure: nested S and SBAR clauses in which Buffalo is tagged NNP and buffaloes VBZ]

Collins

Stanford

RASP

• Tags all words as nouns!

Sentence Length

Sentence with 394 words

•

One day, Sam left his small, yellow home to head towards the meat-packing plant where he worked, a task which

was never completed, as on his way, he tripped, fell, and went careening off of a cliff, landing on and destroying

Max, who, incidentally, was also heading to his job at the meat-packing plant, though not the same plant at which

Sam worked, which he would be heading to, if he had been aware that that the plant he was currently heading

towards had been destroyed just this morning by a mysterious figure clad in black, who hailed from the small,

remote country of France, and who took every opportunity he could to destroy small meat-packing plants, due to

the fact that as a child, he was tormented, and frightened, and beaten savagely by a family of meat-packing plants

who lived next door, and scarred his little mind to the point where he became a twisted and sadistic creature,

capable of anything, but specifically capable of destroying meat-packing plants, which he did, and did quite often,

much to the chagrin of the people who worked there, such as Max, who was not feeling quite so much chagrin as

most others would feel at this point, because he was dead as a result of an individual named Sam, who worked at

a competing meat-packing plant, which was no longer a competing plant, because the plant that it would be

competing against was, as has already been mentioned, destroyed in, as has not quite yet been mentioned, a

massive, mushroom cloud of an explosion, resulting from a heretofore unmentioned horse manure bomb

manufactured from manure harvested from the farm of one farmer J. P. Harvenkirk, and more specifically

harvested from a large, ungainly, incontinent horse named Seabiscuit, who really wasn't named Seabiscuit, but

was actually named Harold, and it completely baffled him why anyone, particularly the author of a very long

sentence, would call him Seabiscuit; actually, it didn't baffle him, as he was just a stupid, manure-making horse,

who was incapable of cognitive thought for a variety of reasons, one of which was that he was a horse, and the

other of which was that he was just knocked unconscious by a flying chunk of a meat-packing plant, which had

been blown to pieces just a few moments ago by a shifty character from France.

Partial RASP Parse

•

(|One_MC1| |day_NNT1| |,_,| |Sam_NP1| |leave+ed_VVD| |his_APP$| |small_JJ| |,_,| |yellow_JJ| |home_NN1| |to_TO| |head_VV0| |towards_II| |the_AT| |meatpacking_JJ| |plant_NN1| |where_RRQ| |he_PPHS1| |work+ed_VVD| |,_,| |a_AT1| |task_NN1| |which_DDQ| |be+ed_VBDZ| |never_RR| |complete+ed_VVN| |,_,| |as_CSA|

|on_II| |his_APP$| |way_NN1| |,_,| |he_PPHS1| |trip+ed_VVD| |,_,| |fall+ed_VVD| |,_,| |and_CC| |go+ed_VVD| |careen+ing_VVG| |off_RP| |of_IO| |a_AT1| |cliff_NN1| |,_,|

|land+ing_VVG| |on_RP| |and_CC| |destroy+ing_VVG| |Max_NP1| |,_,| |who_PNQS| |,_,| |incidentally_RR| |,_,| |be+ed_VBDZ| |also_RR| |head+ing_VVG| |to_II|

|his_APP$| |job_NN1| |at_II| |the_AT| |meat-packing_JB| |plant_NN1| |,_,| |though_CS| |not+_XX| |the_AT| |same_DA| |plant_NN1| |at_II| |which_DDQ| |Sam_NP1|

|work+ed_VVD| |,_,| |which_DDQ| |he_PPHS1| |would_VM| |be_VB0| |head+ing_VVG| |to_II| |,_,| |if_CS| |he_PPHS1| |have+ed_VHD| |be+en_VBN| |aware_JJ|

|that_CST| |that_CST| |the_AT| |plant_NN1| |he_PPHS1| |be+ed_VBDZ| |currently_RR| |head+ing_VVG| |towards_II| |have+ed_VHD| |be+en_VBN| |destroy+ed_VVN|

|just_RR| |this_DD1| |morning_NNT1| |by_II| |a_AT1| |mysterious_JJ| |figure_NN1| |clothe+ed_VVN| |in_II| |black_JJ| |,_,| |who_PNQS| |hail+ed_VVD| |from_II| |the_AT|

|small_JJ| |,_,| |remote_JJ| |country_NN1| |of_IO| |France_NP1| |,_,| |and_CC| |who_PNQS| |take+ed_VVD| |every_AT1| |opportunity_NN1| |he_PPHS1| |could_VM|

|to_TO| |destroy_VV0| |small_JJ| |meat-packing_NN1| |plant+s_NN2| |,_,| |due_JJ| |to_II| |the_AT| |fact_NN1| |that_CST| |as_CSA| |a_AT1| |child_NN1| |,_,| |he_PPHS1|

|be+ed_VBDZ| |torment+ed_VVN| |,_,| |and_CC| |frighten+ed_VVD| |,_,| |and_CC| |beat+en_VVN| |savagely_RR| |by_II| |a_AT1| |family_NN1| |of_IO| |meat-packing_JJ|

|plant+s_NN2| |who_PNQS| |live+ed_VVD| |next_MD| |door_NN1| |,_,| |and_CC| |scar+ed_VVD| |his_APP$| |little_DD1| |mind_NN1| |to_II| |the_AT| |point_NNL1|

|where_RRQ| |he_PPHS1| |become+ed_VVD| |a_AT1| |twist+ed_VVN| |and_CC| |sadistic_JJ| |creature_NN1| |,_,| |capable_JJ| |of_IO| |anything_PN1| |,_,| |but_CCB|

|specifically_RR| |capable_JJ| |of_IO| |destroy+ing_VVG| |meat-packing_JJ| |plant+s_NN2| |,_,| |which_DDQ| |he_PPHS1| |do+ed_VDD| |,_,| |and_CC| |do+ed_VDD|

|quite_RG| |often_RR| |,_,| |much_DA1| |to_II| |the_AT| |chagrin_NN1| |of_IO| |the_AT| |people_NN| |who_PNQS| |work+ed_VVD| |there_RL| |,_,| |such_DA| |as_CSA|

|Max_NP1| |,_,| |who_PNQS| |be+ed_VBDZ| |not+_XX| |feel+ing_VVG| |quite_RG| |so_RG| |much_DA1| |chagrin_NN1| |as_CSA| |most_DAT| |other+s_NN2| |would_VM|

|feel_VV0| |at_II| |this_DD1| |point_NNL1| |,_,| |because_CS| |he_PPHS1| |be+ed_VBDZ| |dead_JJ| |as_CSA| |a_AT1| |result_NN1| |of_IO| |an_AT1| |individual_NN1|

|name+ed_VVN| |Sam_NP1| |,_,| |who_PNQS| |work+ed_VVD| |at_II| |a_AT1| |compete+ing_VVG| |meat-packing_JJ| |plant_NN1| |,_,| |which_DDQ| |be+ed_VBDZ|

|no_AT| |longer_RRR| |a_AT1| |compete+ing_VVG| |plant_NN1| |,_,| |because_CS| |the_AT| |plant_NN1| |that_CST| |it_PPH1| |would_VM| |be_VB0| |compete+ing_VVG|

|against_II| |be+ed_VBDZ| |,_,| |as_CSA| |have+s_VHZ| |already_RR| |be+en_VBN| |mention+ed_VVN| |,_,| |destroy+ed_VVN| |in_RP| |,_,| |as_CSA| |have+s_VHZ|

|not+_XX| |quite_RG| |yet_RR| |be+en_VBN| |mention+ed_VVN| |,_,| |a_AT1| |massive_JJ| |,_,| |mushroom_NN1| |cloud_NN1| |of_IO| |an_AT1| |explosion_NN1| |,_,|

|result+ing_VVG| |from_II| |a_AT1| |heretofore_RR| |unmentioned_JJ| |horse_NN1| |manure_NN1| |bomb_NN1| |manufacture+ed_VVN| |from_II| |manure_NN1|

|harvest+ed_VVN| |from_II| |the_AT| |farm_NN1| |of_IO| |one_MC1| |farmer_NN1| J._NP1 P._NP1 |Harvenkirk_NP1| |,_,| |and_CC| |more_DAR| |specifically_RR|

|harvest+ed_VVN| |from_II| |a_AT1| |large_JJ| |,_,| |ungainly_JJ| |,_,| |incontinent_NN1| |horse_NN1| |name+ed_VVN| |Seabiscuit_NP1| |,_,| |who_PNQS| |really_RR|

|be+ed_VBDZ| |not+_XX| |name+ed_VVN| |Seabiscuit_NP1| |,_,| |but_CCB| |be+ed_VBDZ| |actually_RR| |name+ed_VVN| |Harold_NP1| |,_,| |and_CC| |it_PPH1|

|completely_RR| |baffle+ed_VVD| |he+_PPHO1| |why_RRQ| |anyone_PN1| |,_,| |particularly_RR| |the_AT| |author_NN1| |of_IO| |a_AT1| |very_RG| |long_JJ|

|sentence_NN1| |,_,| |would_VM| |call_VV0| |he+_PPHO1| |Seabiscuit_NP1| |;_;| |actually_RR| |,_,| |it_PPH1| |do+ed_VDD| |not+_XX| |baffle_VV0| |he+_PPHO1| |,_,|

|as_CSA| |he_PPHS1| |be+ed_VBDZ| |just_RR| |a_AT1| |stupid_JJ| |,_,| |manure-making_NN1| |horse_NN1| |,_,| |who_PNQS| |be+ed_VBDZ| |incapable_JJ| |of_IO|

|cognitive_JJ| |thought_NN1| |for_IF| |a_AT1| |variety_NN1| |of_IO| |reason+s_NN2| |,_,| |one_MC1| |of_IO| |which_DDQ| |be+ed_VBDZ| |that_CST| |he_PPHS1|

|be+ed_VBDZ| |a_AT1| |horse_NN1| |,_,| |and_CC| |the_AT| |other_JB| |of_IO| |which_DDQ| |be+ed_VBDZ| |that_CST| |he_PPHS1| |be+ed_VBDZ| |just_RR|

|knock+ed_VVN| |unconscious_JJ| |by_II| |a_AT1| |flying_NN1| |chunk_NN1| |of_IO| |a_AT1| |meat-packing_JJ| |plant_NN1| |,_,| |which_DDQ| |have+ed_VHD|

|be+en_VBN| |blow+en_VVN| |to_II| |piece+s_NN2| |just_RR| |a_AT1| |few_DA2| |moment+s_NNT2| |ago_RA| |by_II| |a_AT1| |shifty_JJ| |character_NN1| |from_II|

|France_NP1| ._.) -1 ; ()

What do we learn?

• All parsers have problems dealing with

long sentences

• Complex language phenomena cause

them to falter

• Good as starting points for structure

detection

• But need output correction very often

Needs of high accuracy parsing

(difficult language phenomena)

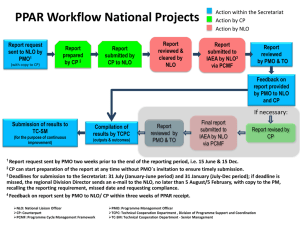

Systems

Language

Phenomena

Empty-PRO Detection

Empty-PRO

Resolution

WH-Trace

Detection

Relative Pronoun Resolution

PP Attachment

Resolution

Clausal Attachment Resolution

Distinguishing Arguments

from Adjuncts

Small Clause Detection

Link Parser Charniak Stanford Machinese MiniPar

Parser

Parser

Syntax

Collins Our System

Parser

No

No

No

No

No

No

No

No

Yes

Yes

No

No

Yes

Yes

Yes

No

No

No

Yes

No

Yes

No

No

No

No

No

Yes

No

No

Yes

No

No

No

Yes

Yes

Yes

No

No

No

No

No

No

No

Yes

No

No

No

Yes

Yes

No

Yes

Yes

No

Yes

No

Yes

Context of our work: Universal

Networking Language (UNL)

A vehicle for machine translation

• Much more demanding than transfer approach or direct approach

[Figure: Interlingua (UNL) at the centre, reached by analysis and left by generation, linking Hindi, English, French and Chinese]

A United Nations project

• Started in 1996

• 10 year program

• 15 research groups across continents

• First goal: generators

• Next goal: analysers (needs solving various ambiguity problems)

• Current active groups: UNL-Spanish, UNL-Russian, UNL-French, UNL-Hindi

• IIT Bombay concentrating on UNL-Hindi and UNL-English

Dave, Parikh and Bhattacharyya, Journal of Machine Translation, 2002

UNL represents knowledge:

John eats rice with a spoon

Universal words

Semantic relations

attributes
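As a rough illustration of the three ingredients named above (universal words, semantic relations, attributes), the example sentence can be written as a list of relation triples. This is a sketch under assumptions: the `Relation` type and `to_unl` helper are hypothetical, and the UW restrictions and attributes chosen here are illustrative rather than taken from the slide's figure.

```python
from typing import NamedTuple

class Relation(NamedTuple):
    label: str  # semantic relation, e.g. "agt"
    head: str   # universal word (plus attributes) the relation starts from
    dep: str    # universal word (plus attributes) the relation points to

# "John eats rice with a spoon" (UW restrictions and attributes are assumed).
EAT = "eat(icl>do).@entry.@present"
GRAPH = [
    Relation("agt", EAT, "John(iof>person)"),
    Relation("obj", EAT, "rice(icl>food)"),
    Relation("ins", EAT, "spoon(icl>artifact).@indef"),
]

def to_unl(relations):
    """Serialize the triples one per line as rel(head, dependent)."""
    return "\n".join(f"{r.label}({r.head}, {r.dep})" for r in relations)

print(to_unl(GRAPH))
```

Here the strings before the first dot are universal words, the labels `agt`, `obj` and `ins` are semantic relations, and the `@`-prefixed suffixes are attributes.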

Sentence Embeddings

Mary claimed that she had composed a poem

agt(claim(icl>do).@entry.@past, Mary(iof>person))

obj(claim(icl>do).@entry.@past, :01)

agt:01(compose(icl>do).@entry.@past.@complete, she)

obj:01(compose(icl>do).@entry.@past.@complete, poem(icl>art))

Relation repository

• Number of relations: 39

• Groups:

– Agent-object-instrument: agt, obj, ins, met

– Time: tim, tmf, tmt

– Place: plc, plf, plt

– Restriction: mod, aoj

– Prepositions taking object: gol, frm

– Ontological: icl, iof, equ

– etc.

Semantically Relatable

Sequences (SRS)

Mohanty, Dutta and

Bhattacharyya, Machine

Translation Summit, 2005

Semantically Relatable Sequences

(SRS)

Definition: A semantically relatable sequence (SRS) of a sentence is an unordered group of words in the sentence (not necessarily consecutive) that appear in the semantic graph of the sentence as linked nodes or as nodes with speech act labels

Example to illustrate SRS

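“The man bought a new car in June”

[Semantic graph figure: bought (past tense) is linked to man by agent, to car by object and to June by time; new is a modifier of car; the marks man as definite, a marks car as indefinite, and in is the modifier on the link to June]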

SRSs from “the man bought a new

car in June”

a. {man, bought}

b. {bought, car}

c. {bought, in, June}

d. {new, car}

e. {the, man}

f. {a, car}

Basic questions

• What are the SRSs of a given sentence?

• What semantic relations can link the words

in an SRS?

Postulate

• A sentence needs to be broken into sets of

at most three forms

– {CW, CW}

– {CW, FW, CW}

– {FW, CW}

where CW refers to content word or a clause

and FW to function word

Language Phenomena and

SRS

Clausal constructs

Sentence: The boy said that he was reading a novel

a. {the, boy}

b. {boy, said}

c. {said, that, SCOPE}

d. SCOPE:{he, reading}

e. SCOPE:{reading, novel}

f. SCOPE:{a, novel}

g. SCOPE:{was, reading}

scope: umbrella for clauses or compounds

Preposition Phrase (PP)

Attachment

“John published the article in June”

{John, published}: {CW,CW}

{published, article}: {CW,CW}

{published, in, June}: {CW,FW,CW}

{the, article}: {FW,CW}

Contrast with

“The article in June was published by John”

{The, article}: {FW,CW}

{article, in, June}: {CW,FW,CW}

{article, was, published}: {CW,FW,CW}

{published, by, John}: {CW,FW,CW}

To-Infinitival

• PRO element co-indexed with the object John

– “I forced John_i [PRO]_i to throw a party”

• PRO element co-indexed with the subject I

– “I_i promised John [PRO]_i to throw a party”

• SRSs are

– {I, forced}: {CW,CW}

– {forced, John}: {CW,CW}

– {forced, SCOPE}: {CW,CW}

– SCOPE:{John, to, throw}: {CW,FW,CW} (John is replaced with “I” in the 2nd sentence)

– SCOPE:{throw, party}: {CW,CW}

– SCOPE:{a, party}: {FW,CW}

• SRSs go deeper than surface phenomena

Complexities of that

• Embedded clausal constructs as opposed to

relative clauses need to be resolved

– “Mary claimed that she had composed a poem”

– “The poem that Mary composed was beautiful”

• Dangling that

– I told the child that I know that he played well

Two possibilities

• told [I] [the child] [that I know that he played well]

• told [I] [the child that I know] [that he played well]

SRS Implementation

Syntactic constituents to Semantic

constituents

• Used a probabilistic parser (Charniak, 04)

• Output of Charniak parser: tags give indications of CW and FW

– NP, VP, ADJP and ADVP → CW

– PP (prepositional phrase), IN (preposition) and DT (determiner) → FW
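The tag-to-class mapping above, combined with the three SRS forms of the postulate, can be sketched as follows. This is a minimal illustration, not the system's code: the names `word_class` and `srs_form` are hypothetical, and only the tags listed on the slide are handled.

```python
# Tags listed on the slide as indicating content words and function words.
CW_TAGS = {"NP", "VP", "ADJP", "ADVP"}
FW_TAGS = {"PP", "IN", "DT"}

# The three SRS forms allowed by the postulate.
ALLOWED_FORMS = {("CW", "CW"), ("CW", "FW", "CW"), ("FW", "CW")}

def word_class(tag):
    """Map a parser tag to "CW" or "FW"; tags not on the slide are rejected."""
    if tag in CW_TAGS:
        return "CW"
    if tag in FW_TAGS:
        return "FW"
    raise ValueError(f"tag not covered by this sketch: {tag}")

def srs_form(tags):
    """Return the CW/FW form of a candidate SRS, or None if it is not allowed."""
    form = tuple(word_class(t) for t in tags)
    return form if form in ALLOWED_FORMS else None

# {published, in, June} with tags VP, IN, NP
print(srs_form(["VP", "IN", "NP"]))
# {the, article} with tags DT, NP
print(srs_form(["DT", "NP"]))
```

A sequence such as two function words in a row yields `None`, which mirrors the claim that only the three forms are needed.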

Observation:

Headwords of sibling nodes form SRSs

“John has bought a car.”

(C) VP bought
    (F) AUX has
    (C) VP bought
        (C) VBD bought
        (C) NP car
            (F) DT a
            (C) NN car

SRS: {has, bought}, {a, car}, {bought, car}
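The observation that headwords of sibling nodes form SRSs can be sketched as a small tree walk. This is an illustrative simplification under assumptions: the `Node` class and `sibling_srs` function are hypothetical, only adjacent siblings are paired, and three-element SRSs with a function word in the middle are not handled.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    tag: str                  # parser tag, e.g. "VP"
    head: str                 # headword percolated up to this node
    children: list = field(default_factory=list)

def sibling_srs(node):
    """Collect (head, head) pairs for adjacent siblings with different heads."""
    pairs = []
    kids = node.children
    for left, right in zip(kids, kids[1:]):
        if left.head != right.head:
            pairs.append((left.head, right.head))
    for kid in kids:
        pairs.extend(sibling_srs(kid))
    return pairs

# The verb phrase of "John has bought a car."
tree = Node("VP", "bought", [
    Node("AUX", "has"),
    Node("VP", "bought", [
        Node("VBD", "bought"),
        Node("NP", "car", [Node("DT", "a"), Node("NN", "car")]),
    ]),
])

print(sibling_srs(tree))
```

For this tree the walk yields the same three pairs listed on the slide: (has, bought), (bought, car) and (a, car).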

Work needed on the parse

tree

Correction of wrong PP attachment

• “John has published an article on linguistics”

• Use PP attachment heuristics

• Get {article, on, linguistics}

[Parse tree figure with nodes: (C) VP published, (C) VBD published, (C) NP article, (F) DT an, (C) NN article, (F) PP on, (F) IN on, (C) NP linguistics, (C) NNS linguistics]

To-Infinitival

• Clause boundary is the VP node, labeled with SCOPE.

• Tag is modified to TO, a FW tag, indicating that it heads a to-infinitival clause.

• The duplication and insertion of the NP node with head him (depicted by shaded nodes) as a sibling of the VBD node with head forced is done to bring out the existence of a semantic relation between force and him.

[Parse tree figure with nodes: (C) VP forced, (C) VBD forced, (C) NP him, (C) PRP him, (C) S SCOPE, (C) NP him, (C) PRP him, (C) VP, (F) TO to, (C) VP watch]

Linking of clauses:

“John said that he was reading a novel”

• Head of S node marked as Scope

• SRS: {said, that, SCOPE}

• Adverbial clauses have similar parse tree structures except that the subordinating conjunctions are different from that

(C) VP said
    (C) VBD said
    (F) SBAR that
        (F) IN that
        (C) S SCOPE

Implementation

• Block diagram of the system

[Block diagram: Input Sentence → Charniak Parser → Parse Tree → Parse Tree modification and augmentation with head and scope information (Scope Handler) → Augmented Parse Tree → Attachment Resolver → Semantically Relatable Sets Generator → Semantically Related Sets. Resources consulted: WordNet 2.0 (noun classification; time and place features) and the Sub-categorization Database (THAT clause as subcat property; preposition as subcat property).]

Evaluation

• Used the Penn Treebank (LDC, 1995) as

the test bed

• The un-annotated sentences, actually from the WSJ corpus (Charniak et al. 1987), were passed through the SRS generator

• Results were compared with the

Treebank’s annotated sentences

Results on SRS generation

[Bar chart: recall and precision (0 to 100) for each parameter matched: (CW,CW), (CW,FW,CW), (FW,CW), Total SRSs]

Results on sentence constructs

[Bar chart: recall and precision (0 to 100) for PP resolution, clause linkings, complement-clause resolution and to-infinitival clause resolution]

SRS to UNL

Features of the system

• High accuracy resolution of different kinds of attachment

• Precise and fine grained semantic relations between

sentence constituents

• Empty-pronominal detection and resolution

• Exhaustive knowledge bases of sub-categorization frames, verb knowledge bases and rule templates for establishing semantic relations and speech act like attributes using

– Oxford Advanced Learner’s Dictionary (Hornby, 2001)

– VerbNet (Schuler, 2005)

– WordNet 2.1 (Miller, 2005)

– Penn Tree Bank (LDC, 1995) and

– XTAG lexicon (XTAG, 2001)

Side effect: high accuracy parsing

(comparison with other parsers)

Systems compared: Link Parser, Charniak Parser, Stanford Parser, Machinese Syntax, MiniPar, Collins Parser, Our System

Language phenomena: Empty-PRO Detection, Empty-PRO Resolution, WH-Trace Detection, Relative Pronoun Resolution, PP Attachment Resolution, Clausal Attachment Resolution, Distinguishing Arguments from Adjuncts, Small Clause Detection

[Table: Yes/No entry for each system on each language phenomenon]

Rules for generating

Semantic Relations

Each rule tests the syntactic features (SynCat, POS) and semantic features (SemCat, Lex) of CW1, FW and CW2, and yields REL(UW1,UW2), where the UW1 and UW2 columns give positions within the SRS.

• CW1: V, SemCat V020; FW: Lex into; CW2: SynCat N → Rel gol, UW1 = 1, UW2 = 3, e.g., “turn water into steam”

• FW: Lex within; CW2: SynCat N, SemCat TIME → Rel dur, UW1 = 1, UW2 = 3, e.g., “finish within a week”
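The two example rules can be sketched as a small lookup over a {CW, FW, CW} sequence. This is only an illustration under assumptions: the rule encoding, the `relation_for` function and its parameters are hypothetical, and only the FW and CW2 conditions that are legible in the rule table (the preposition, the noun category and the TIME semantic category) are modelled.

```python
# Each rule: function word, required CW2 category, optional CW2 semantic class, relation.
RULES = [
    {"fw": "into", "cw2_syncat": "N", "cw2_semcat": None, "rel": "gol"},
    {"fw": "within", "cw2_syncat": "N", "cw2_semcat": "TIME", "rel": "dur"},
]

def relation_for(cw1, fw, cw2, cw2_syncat, cw2_semcat=None):
    """Return REL(UW1, UW2) built from positions 1 and 3 of the SRS, or None."""
    for rule in RULES:
        if rule["fw"] != fw or rule["cw2_syncat"] != cw2_syncat:
            continue
        if rule["cw2_semcat"] is not None and rule["cw2_semcat"] != cw2_semcat:
            continue
        return f'{rule["rel"]}({cw1}, {cw2})'
    return None

# "turn water into steam": SRS {turn, into, steam}
print(relation_for("turn", "into", "steam", "N"))
# "finish within a week": SRS {finish, within, week}
print(relation_for("finish", "within", "week", "N", "TIME"))
```

Keeping the rules as data rather than code matches the idea of rule templates: new prepositions can be covered by adding rows.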

Rules for generating attributes

• String of FWs has_been + CW tagged VBG → UNL attribute list generated: @present @complete @progress

• String of FWs has_been + CW tagged VBN → UNL attribute list generated: @present @complete @passive
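Read as a table from (string of function words, tag of the content word) to an attribute list, the two rules above can be sketched like this. The dictionary layout and the `attributes_for` helper are assumptions made for illustration; only the two rules shown on the slide are included.

```python
# (string of function words, POS tag of the content word) -> UNL attributes
ATTRIBUTE_RULES = {
    ("has_been", "VBG"): ["@present", "@complete", "@progress"],
    ("has_been", "VBN"): ["@present", "@complete", "@passive"],
}

def attributes_for(function_words, cw_tag):
    """Join the function words with "_" and look up the attribute list."""
    key = ("_".join(function_words), cw_tag)
    return ATTRIBUTE_RULES.get(key, [])

# "has been reading" -> progressive; "has been read" -> passive
print(attributes_for(["has", "been"], "VBG"))
print(attributes_for(["has", "been"], "VBN"))
```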

System architecture

Evaluation: scheme

Evaluation: example

Input: He worded the statement carefully.

[unlGenerated:76]

agt(word.@entry, he)

obj(word.@entry, statement.@def)

man(word.@entry, carefully)

[\unl]

[unlGold:76]

agt(word.@entry.@past, he)

obj(word.@entry.@past, statement.@def)

man(word.@entry.@past, carefully)

[\unl]

F1-Score = 0.945

Not heavily punished, since attributes are not crucial to the meaning!

Approach 2: switch to rule

based parsing: LFG


Using Functional Structure from an LFG

Parser

Sentence

John eats a pastry

Functional Structure

(Transfer Facts)

SUBJ (eat, John)

OBJ (eat, pastry)

VTYPE (eat, main)

…

UNL

agt (eat, John)

obj (eat, pastry)

Lexical Functional Grammar

• Considers two aspects

– Lexical: considers lexical structures and relations

– Functional: considers grammatical functions of different

constituents, like SUBJECT, OBJECT

• Two structures:

– C-structure (Constituent-structure)

– F-structure (Functional-structure)

• Languages vary in C-structure (word order, phrasal

structure) but have the same functional structure

(SUBJECT, OBJECT, etc.)

LFG Structures – example

Sentence: He gave her a kiss.

C-structure

F-structure

XLE Parser

• Developed by Xerox Corporation

• Gives C-structures, F-structures and

morphology of the sentence constituents

• Supports packed rewriting system

converting F-structure to transfer facts,

used by our system

• Works on Solaris, Linux and MacOSX

Notion of Transfer Facts

• Serialized representation of the Functional structure

• Particularly useful for transfer-based MT systems

• We use it as the starting point for UNL generation

Example transfer facts

Transfer Facts - Example

•

Sentence:

The boy ate the apples hastily.

•

Transfer facts (selected):

ADJUNCT,eat:2,hastily:6

ADV-TYPE,hastily:6,vpadv

DET,apple:5,the:4

DET,boy:1,the:0

DET-TYPE,the:0,def

DET-TYPE,the:4,def

NUM,apple:5,pl

NUM,boy:1,sg

OBJ,eat:2,apple:5

PASSIVE,eat:2,-

PERF,eat:2,-_

PROG,eat:2,-_

SUBJ,eat:2,boy:1

TENSE,eat:2,past

VTYPE,eat:2,main

_SUBCAT-FRAME,eat:2,V-SUBJ-OBJ

Workflow in detail

Phase 1: Sentence to transfer facts

•

Input: Sentence

The boy ate the apples hastily.

•

Output: Transfer facts (selected are shown here)

ADJUNCT,eat:2,hastily:6

ADV-TYPE,hastily:6,vpadv

DET,apple:5,the:4

DET,boy:1,the:0

DET-TYPE,the:0,def

DET-TYPE,the:4,def

NUM,apple:5,pl

NUM,boy:1,sg

OBJ,eat:2,apple:5

PASSIVE,eat:2,-

PERF,eat:2,-_

PROG,eat:2,-_

SUBJ,eat:2,boy:1

TENSE,eat:2,past

VTYPE,eat:2,main

_SUBCAT-FRAME,eat:2,V-SUBJ-OBJ

Phase 2: Transfer facts to word entry

collection

•

•

Input: transfer facts as in the previous example

Output: word entry collection

Word entry eat:2, lex item: eat

(PERF:-_ PASSIVE:- _SUBCAT-FRAME:V-SUBJ-OBJ VTYPE:main

SUBJ:boy:1 OBJ:apple:5 ADJUNCT:hastily:6 CLAUSE-TYPE:decl

TENSE:past PROG:-_ MOOD:indicative )

Word entry boy:1, lex item: boy

(CASE:nom _LEX-SOURCE:countnoun-lex COMMON:count DET:the:0

NSYN:common PERS:3 NUM:sg )

Word entry apple:5, lex item: apple

(CASE:obl _LEX-SOURCE:morphology COMMON:count DET:the:4

NSYN:common PERS:3 NUM:pl )

Word entry hastily:6, lex item: hastily

(DEGREE:positive _LEX-SOURCE:morphology ADV-TYPE:vpadv )

Word entry the:0, lex item: the

(DET-TYPE:def )

Word entry the:4, lex item: the

(DET-TYPE:def )
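Phase 2 amounts to grouping the transfer facts by the word they describe. A minimal sketch, assuming each fact is a line of the form `NAME,word:index,value` as in the example; the function name `group_facts` and the selection of facts are illustrative, not the system's actual code.

```python
from collections import defaultdict

FACTS = """ADJUNCT,eat:2,hastily:6
DET,boy:1,the:0
NUM,boy:1,sg
OBJ,eat:2,apple:5
SUBJ,eat:2,boy:1
TENSE,eat:2,past"""

def group_facts(text):
    """Build {word: {fact name: value}} from serialized transfer facts."""
    entries = defaultdict(dict)
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split into fact name, the word it describes, and the value.
        name, word, value = line.split(",", 2)
        entries[word][name] = value
    return dict(entries)

for word, features in group_facts(FACTS).items():
    print(word, features)
```

Each resulting dictionary plays the role of a word entry: `eat:2` collects its SUBJ, OBJ, ADJUNCT and TENSE facts, and `boy:1` its DET and NUM facts.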

Phase 3 (1): UW and Attribute

generation

• Input: word entry collection

• Output: Universal Words with (some) attributes generated

• In our example:

UW (eat:2.@entry.@past)

UW (hastily:6)

UW (boy:1)

UW (the:0)

UW (apple:5.@pl)

UW (the:4)

Example transfer facts and their mapping to UNL attributes

Digression: Subcat Frames, Arguments and

Adjuncts

• Subcat frames and arguments

– A predicate subcategorizes for its arguments, or

arguments are governed by the predicate.

– Example: predicate “eat” subcategorizes for a

SUBJECT argument and an OBJECT argument.

The corresponding subcat frame is

V-SUBJ-OBJ.

– Arguments are mandatory for a predicate.

• Adjuncts

– Give additional information about the predicate

– Not mandatory

– Example: “hastily” in “The boy ate the apples hastily”.

Phase 3(1): Handling of Subcat Frames

• Input:

– Word entry collection

– Mapping of subcat frames to transfer facts

– Mapping of transfer facts to relations or

attributes

• Output: relations and / or attributes

• Example: for our sentence, agt(eat,boy),

obj(eat,apple) relations are generated in

this phase.

Rule bases for Subcat handling – examples (1)

Mapping Subcat frames to transfer facts

Rule bases for Subcat handling – examples (2)

Mapping Subcat frames, transfer facts to relations / attributes

Some simplified rules

Phase 3(2): Handling of adjuncts

• Input:

– Word entry collection

– List of transfer facts to be considered for adjunct

handling

– Rules for relation generation based on transfer facts

and word properties

• Output: relations and / or attributes

• Example: for our sentence, man(eat,

hastily) relation; and @def attributes for

boy, apple are generated in this phase.

Rule bases for adjunct handling –

examples (1)

Mapping adjunct transfer facts to relations / attributes

Some simplified rules

Rule bases for adjunct handling – examples (2)

Mapping adjuncts to relations / attributes based on prepositions

- some example rules

Final UNL Expression

• Sentence:

The boy ate the apples hastily.

• UNL Expression:

[unl:1]

agt(eat:2.@entry.@past,boy:1.@def)

man(eat:2.@entry.@past,hastily:6)

obj(eat:2.@entry.@past,apple:5.@pl.@def)

[\unl]
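The path from word entries to this expression can be sketched end to end. This is a simplified illustration under stated assumptions: the fixed mapping SUBJ → agt, OBJ → obj, ADJUNCT → man holds for this sentence only (the actual rules also consult subcat frames, verb type and word properties), and the names `FACT_TO_RELATION`, `uw` and `unl_expression` are hypothetical.

```python
ENTRIES = {
    "eat:2": {"SUBJ": "boy:1", "OBJ": "apple:5", "ADJUNCT": "hastily:6",
              "TENSE": "past", "VTYPE": "main"},
    "boy:1": {"DET": "the:0", "NUM": "sg"},
    "apple:5": {"DET": "the:4", "NUM": "pl"},
    "hastily:6": {"ADV-TYPE": "vpadv"},
    "the:0": {"DET-TYPE": "def"},
    "the:4": {"DET-TYPE": "def"},
}

# Simplified, sentence-specific mapping from transfer facts to relations.
FACT_TO_RELATION = {"SUBJ": "agt", "ADJUNCT": "man", "OBJ": "obj"}

def uw(word, entries):
    """Attach attributes derived from the word's own entry and its determiner."""
    feats = entries.get(word, {})
    attrs = []
    if feats.get("VTYPE") == "main":
        attrs.append("@entry")
    if feats.get("TENSE") == "past":
        attrs.append("@past")
    if feats.get("NUM") == "pl":
        attrs.append("@pl")
    det = feats.get("DET")
    if det and entries.get(det, {}).get("DET-TYPE") == "def":
        attrs.append("@def")
    return ".".join([word] + attrs)

def unl_expression(entries):
    """Emit one relation line per SUBJ/ADJUNCT/OBJ fact found in the entries."""
    lines = []
    for word, feats in entries.items():
        for fact, rel in FACT_TO_RELATION.items():
            if fact in feats:
                lines.append(f"{rel}({uw(word, entries)},{uw(feats[fact], entries)})")
    return lines

print("\n".join(unl_expression(ENTRIES)))
```

For the example entries this reproduces the three relation lines of the expression above.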

Design of Relation Generation Rules –

an example

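[Decision tree figure: the relation for the subject is chosen by first testing whether the Subject is ANIMATE or INANIMATE and then the Verb Type (do, be or occur); the leaves are agt, aoj and obj, e.g., an ANIMATE subject of a do verb gets agt]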

Summary of Resources

Mohanty and Bhattacharyya, LREC 2008

Lexical Resources

[Figure: lexical resources feeding SRS Generation and UNL Expression Generation: Syntactic Argument database (PPs as syntactic arguments, clauses as syntactic arguments); Verb Knowledgebase (verb senses); Functional Elements with Grammatical attributes (auxiliary verbs, determiners, tense-aspect morphemes); Lexical Knowledgebase with Semantic attributes; Syntactic and Semantic Argument mapping; Semantic Argument Frame; word classes N, V, A, Adv]

Use of a number of lexical data

We have created these resources over a long period

of time from

• Oxford Advanced Learners’ Dictionary (OALD) (Hornby, 2001)

• VerbNet (Schuler, 2005)

• Princeton WordNet 2.1 (Miller, 2005)

• LCS database (Dorr, 1993)

• Penn Tree Bank (LDC, 1995), and

• XTAG lexicon (XTAG Research Group, 2001)

Verb Knowledge Base (VKB) Structure

VKB statistics

• 4115 unique verbs

• 22000 rows (different senses)

• 189 verb groups

Verb categorization in UNL and its relationship to

traditional verb categorization

Traditional (syntactic)

• Transitive (has direct object)

• Intransitive

– Unergative (syntactic subject = semantic agent)

– Unaccusative (syntactic subject ≠ semantic agent)

UNL (semantic)

• Do (action)

• Be (state)

• Occur (event)

Example sentences in the table: Ram pulls the rope; Ram goes home; Ram knows mathematics; Ram forgot mathematics (ergative languages); Ram sleeps; Earth cracks

Accuracy on various phenomena

and corpora

Applications

MT and IR

• Smriti Singh, Mrugank Dalal, Vishal Vachani,

Pushpak Bhattacharyya and Om Damani, “Hindi

Generation from Interlingua”, Machine Translation

Summit (MTS 07), Copenhagen, September, 2007.

• Sanjeet Khaitan, Kamaljeet Verma and Pushpak

Bhattacharyya, “Exploiting Semantic Proximity for

Information Retrieval”, IJCAI 2007, Workshop on

Cross Lingual Information Access, Hyderabad, India,

Jan, 2007.

• Kamaljeet Verma and Pushpak Bhattacharyya,

“Context-Sensitive Semantic Smoothing using

Semantically Relatable Sequences”, submitted

Conclusions and future work

• Presented two approaches to UNL

generation

• Demonstrated the need for Resources

• Working on handling difficult language

phenomena

• WSD for choosing the correct UW

URLs

• For resources

www.cfilt.iitb.ac.in

• For publications

www.cse.iitb.ac.in/~pb