slides


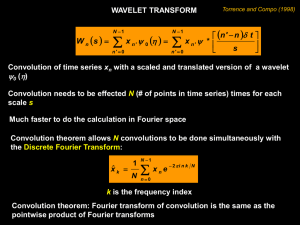

ScatNets
UvA - DeepNet Reading Group, October 7th, 14:00
Thomas Mensink

"The main problem of classification is variance: there exists much too much variability in the data." (Stéphane Mallat)

Stéphane Mallat
• And for a French researcher, his English is great
• Keynote at CVPR: http://techtalks.tv/talks/plenary-talk-are-deep-networks-a-solution-to-curse-of-dimensionality/60315/

"Part of this variability is due to rigid translations, rotations, or scaling. This variability is often uninformative for classification." (J. Bruna & S. Mallat, PAMI 2013)

PAMI, Section 1
wavelet scattering | translation invariant | deformations | high-frequency information | wavelet transform convolutions | nonlinear modulus and averaging operators | stationary processes | higher order moments | Fourier power spectrum | rigid translations, rotations, or scaling | Non-rigid deformations | deformation invariant | linearize small deformations | Lipschitz continuous | Fourier transform modulus | Fourier transform instabilities | wavelet transforms are not invariant but covariant to translations | preserving the signal energy | expected scattering representation

(Just) Section 1: the same list as above. Help!
I lack engineering/math skills

High-level idea
• ConvNet
• ScatNet: replace learned layers by predefined scattering operators

ScatNets

ScatNets (2)
• Mathematical approach
• Signal processing view
• On ConvNets / DeepNets
• Use known invariance properties
• To construct a deep hierarchical network

Translation Invariant

Linearize Deformations
• Small deformation x -> x': the distance between their representations stays bounded by the size of the deformation

Translation Invariant Representations
• Not stable for deformations:
  – Autocorrelations
  – Fourier transform modulus
• Stable for deformations:
  – Wavelet transform

Wavelets
• Used in the JPEG 2000 compression scheme
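To make the "Translation Invariant Representations" slide concrete, here is a small numerical sketch in plain Python (periodic 1D signals and a naive O(N²) DFT, written for illustration and not taken from the paper). A deformation-stable representation Φ should satisfy a Lipschitz bound of the form ‖Φ(x) − Φ(x_τ)‖ ≤ C ‖x‖ sup_u |∇τ(u)|, with x_τ(u) = x(u − τ(u)). The Fourier modulus passes the translation test but fails this one: a circular shift leaves |x̂| unchanged, while a 5% dilation of a high-frequency cosine moves its spectral peak by two bins, so the relative distance jumps to √2 even though the deformation is small.

```python
import cmath
import math


def dft_modulus(x):
    """Modulus |x_hat[k]| of the discrete Fourier transform (naive O(N^2) DFT)."""
    n = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n)
    ]


def l2(v):
    return math.sqrt(sum(a * a for a in v))


def relative_distance(a, b):
    """||a - b|| / ||a||: distance between two representations, relative to the first."""
    return l2([p - q for p, q in zip(a, b)]) / l2(a)


N = 256
# High-frequency oscillation at DFT bin 40.
x = [math.cos(2 * math.pi * 40 * t / N) for t in range(N)]

# Translation: circular shift by 17 samples.
x_shifted = x[-17:] + x[:-17]

# Small dilation x((1 + eps) u) with eps = 0.05: frequency 40 * 1.05 = bin 42.
x_dilated = [math.cos(2 * math.pi * 42 * t / N) for t in range(N)]

X = dft_modulus(x)
shift_dist = relative_distance(X, dft_modulus(x_shifted))
dilation_dist = relative_distance(X, dft_modulus(x_dilated))
print(f"shift: {shift_dist:.2e}, 5% dilation: {dilation_dist:.3f}")
```

The shift distance is at floating-point noise level, while the dilation distance is about 1.414 (√2): the two spectral peaks no longer overlap at all, whatever the size of ε once εω exceeds one bin.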

ews

the scattering

for deterministic

functions and processes,

presentation

in thetransform

transformed

domain.

mathematical

properties.

It invariant,

also studies

these

properties

on signal

image

esentations [Mal12]

construct

stable

and

informative

and obtains

new mathematical

results:

the first

characytions,

cascading

wavelettwo

modulus

decompositions

followed

by one

a lowpass

earities from stability

the second

one giving a partial

ecomposition

operator constraints,

at scale J isand

defined

as

ture stated in [Mal12], relating the signal sparsity with the regularity

presentation inWthe

transformed

{x ⋆ ψλ }λ∈Λdomain.

,

Jx =

J

resentations [Mal12] construct invariant, stable and informative signal

−j r −1 u) and λ = 2j r, with j < J and r ∈ G belongs to a finite

ydj ψ(2

cascading

wavelet modulus decompositions followed by a lowpass

of Rd . Each operator

rotated and

dilated

ecomposition

at scale

J iswavelet

defined thus

as extracts the energy

given scale and orientation given by λ. Wavelet coefficients are not

WJ x =does

{x ⋆not

ψλ }produce

nt, and their average

λ∈ΛJ , any information since wavelets

A translation invariant measure can be extracted out of each wavelet

dj ψ(2−j r −1 u) and λ = 2j r, with j < J and r ∈ G belongs to a finite

oducing a non-linearity which restores a non-zero, informative average

of Rd . Each

rotated

and dilated

extracts

energy

instance

achieved

by computing

thewavelet

complexthus

modulus

and the

averaging

given scale and orientation

given by λ. Wavelet coefficients are not

!

nt, and their average

not produce

any information since wavelets

|xdoes

⋆ ψλ |(u)du

.

A translation invariant measure can be extracted out of each wavelet

oducing

a non-linearity

restores

informative

average

ost

by this

averaging iswhich

recovered

by aanon-zero,

new wavelet

decomposition

instance achieved by computing the complex modulus and averaging

ΛJ of |x ⋆ ψλ |, which produces new invariants by iterating the same

! the wavelet modulus operator corresponding to

λ]x = |x ⋆ ψλ | denote

ny sequence p = (λ1 ,|x

λ2⋆, ...,

a path, i.e, the ordered product

ψλλ|(u)du

.

m ) defines
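The excerpt's argument (raw wavelet coefficients average to zero, the modulus restores an informative translation-invariant average, and paths iterate the same step) can be checked numerically. A minimal 1D sketch in plain Python, assuming periodic signals and no rotations (so $\lambda$ reduces to the scale index $j$); `gabor_wavelet` is a hypothetical mean-corrected Gabor filter standing in for the paper's wavelets, and all parameter values are illustrative choices:

```python
import cmath
import math


def circular_conv(x, h):
    """Circular convolution (x * h)[u] = sum_v x[v] h[(u - v) mod N]."""
    n = len(x)
    return [sum(x[v] * h[(u - v) % n] for v in range(n)) for u in range(n)]


def gabor_wavelet(n, j, xi=3 * math.pi / 4, sigma=0.8):
    """Hypothetical 1D complex wavelet psi_j on a periodic grid of n samples:
    a Gaussian window of width sigma * 2^j modulated at frequency xi / 2^j,
    with its mean subtracted so that the taps sum to exactly zero."""
    scale = 2 ** j
    taps = []
    for u in range(n):
        d = u if u <= n // 2 else u - n  # signed distance from the origin on the circle
        window = math.exp(-0.5 * (d / (sigma * scale)) ** 2)
        taps.append(window * cmath.exp(1j * xi * d / scale))
    mean = sum(taps) / n
    return [t - mean for t in taps]


def path_operator(x, path):
    """U[p]x = |...||x * psi_j1| * psi_j2| ... * psi_jm| for the path p = (j1, ..., jm)."""
    u = x
    for j in path:
        u = [abs(c) for c in circular_conv(u, gabor_wavelet(len(x), j))]
    return u


def average(y):
    return sum(y) / len(y)


N = 64
# Test signal: a slow oscillation plus a box (whose edges are broadband).
x = [math.sin(2 * math.pi * 5 * t / N) + (1.0 if 20 <= t < 28 else 0.0) for t in range(N)]
x_shifted = x[-11:] + x[:-11]  # circular translation by 11 samples

raw = average(circular_conv(x, gabor_wavelet(N, 1)))  # average of x * psi: zero
first = average(path_operator(x, (1,)))               # average of |x * psi|: informative
second = average(path_operator(x, (1, 3)))            # second-order path p = (1, 3)
first_shifted = average(path_operator(x_shifted, (1,)))
second_shifted = average(path_operator(x_shifted, (1, 3)))
print(abs(raw), first, first - first_shifted, second - second_shifted)
```

The raw average vanishes because the sum of a circular convolution is the product of the two sums, and the wavelet sums to zero. The modulus averages are non-zero and unchanged by the shift, because circular convolution commutes with circular shifts and the modulus acts pointwise.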

Wavelet Operators
• Two important parts
• Wavelet operator
• And a translation invariant measure

Paper excerpt (figure caption, partially cut off): "… For $\lambda_2 = 2^{j_2} r_2$, the scale $2^{j_2}$ divides the radial axis, and the resulting sectors are subdivided into $K$ angular sectors corresponding to the different $r_2$. The scale and angular subdivisions are adjusted so that the area of each … is proportional to $\||\psi_{\lambda_1}| \star \psi_{\lambda_2}\|^2$."

"The norm and distance on a transform $Tx = \{x_n\}_n$, which outputs a family of signals, will be defined by

$$\|Tx - Tx'\|^2 = \sum_n \|x_n - x'_n\|^2."$$

Scattering Coefficients

Paper figure caption: (a) Two images $x(u)$. (b) Fourier modulus $|\hat{x}(\omega)|$. (c) First-order scattering coefficients $Sx[\lambda_1]$ displayed over the frequency sectors; they are the same for both images. (d) Second-order scattering coefficients $Sx[\lambda_1, \lambda_2]$ over the frequency sectors of Fig. 3, … for each image.

ScatNet
BRUNA AND MALLAT: INVARIANT SCATTERING CONVOLUTION NETWORKS

Paper figure caption: A scattering propagator $\widetilde{W}$ applied to $x$ computes the first layer of wavelet coefficients modulus $U[\lambda_1]x = |x \star \psi_{\lambda_1}|$ and outputs its local average $S[\emptyset]x = x \star \phi_{2^J}$ (black arrow). Applying $\widetilde{W}$ to the first-layer signals $U[\lambda_1]x$ outputs first-order scattering coefficients $S[\lambda_1]x = U[\lambda_1]x \star \phi_{2^J}$ and computes the propagated signal $U[\lambda_1, \lambda_2]x$ of the second layer. Applying $\widetilde{W}$ to each propagated signal $U[p]x$ outputs $S[p]x = U[p]x \star \phi_{2^J}$ (black arrows) and computes the next layer of propagated signals.

ScatNet
Screenshot from CVPR keynote

ScatNets
A scattering transform builds nonlinear invariants from wavelet coefficients, with modulus and averaging pooling functions.
• Localized waveforms -> stable to deformations
• Several layers construct large-scale invariants without losing crucial information
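The propagator described above (each $U[p]x$ emits an output $S[p]x = U[p]x \star \phi_{2^J}$ and spawns the next layer $|U[p]x \star \psi_\lambda|$) fits in a few lines. A minimal 1D sketch in plain Python, assuming ideal Shannon-type band-pass filters on the DFT grid instead of the paper's wavelets, full averaging ($\phi$ keeps only frequency 0, i.e. $2^J = N$), and all paths rather than only frequency-decreasing ones. Here the index `j` labels the frequency band $2^j \le |k| < 2^{j+1}$, not the paper's scale; `filter_bank` and `scattering` are hypothetical names.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n for t in range(n)]


def filter_bank(n):
    """Hypothetical ideal filters on the DFT grid: phi keeps only frequency 0,
    psi_j keeps the band 2^j <= |k| < 2^(j+1). Every bin is covered by exactly one
    filter, so the one-layer operator y -> (y * phi, {y * psi_j}_j) preserves energy."""
    freq = [min(k, n - k) for k in range(n)]
    phi = [1.0 if f == 0 else 0.0 for f in freq]
    psis = []
    j = 0
    while 2 ** j <= n // 2:
        psis.append([1.0 if 2 ** j <= f < 2 ** (j + 1) else 0.0 for f in freq])
        j += 1
    return phi, psis


def energy(y):
    return sum(abs(v) ** 2 for v in y)


def scattering(x, depth):
    """Apply the propagator layer by layer: each U[p]x outputs S[p]x = U[p]x * phi
    and propagates U[p + (j,)]x = |U[p]x * psi_j|."""
    phi, psis = filter_bank(len(x))
    layer = {(): [complex(v) for v in x]}  # U[empty path]x = x
    outputs = {}
    for _ in range(depth):
        next_layer = {}
        for path, u in layer.items():
            u_hat = dft(u)
            outputs[path] = idft([a * b for a, b in zip(u_hat, phi)])
            for j, psi in enumerate(psis):
                band = idft([a * b for a, b in zip(u_hat, psi)])
                next_layer[path + (j,)] = [complex(abs(c)) for c in band]
        layer = next_layer
    return outputs, layer  # layer holds U[p]x for every path of length `depth`


N = 32
x = [math.cos(2 * math.pi * 3 * t / N) + (0.5 if t % 7 == 0 else 0.0) for t in range(N)]
S, remainder = scattering(x, depth=2)
for m in range(2):
    e_m = sum(energy(s) for p, s in S.items() if len(p) == m)
    print(f"order {m}: {e_m / energy(x):.3f} of the energy")
print(f"still propagating: {sum(energy(u) for u in remainder.values()) / energy(x):.3f}")
```

With $\phi$ reduced to a global average, every $S[p]x$ is a constant equal to the mean of $U[p]x$, which makes the outputs exactly invariant to circular shifts. Because the filter bank tiles the frequency axis and the modulus keeps pointwise magnitudes, the printed fractions plus the remainder add up to the whole signal energy.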


ScatNet
Paper excerpt: Table 1. Percentage of energy $\sum_{p \in \mathcal{P}_\downarrow^m} \|S[p]x\|^2 / \|x\|^2$ of scattering coefficients on frequency-decreasing paths of length $m$, depending on $J$. These average values are computed on the Caltech-101 database, with zero mean and unit variance images.
• Signal processing view: preserve energy
• Depth can be limited
• This is important
Paper excerpt (cut off): "… network with a negligible loss of signal energy. This scattering energy conservation also proves that the more sparse the wavelet coefficients, the more energy …"
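The energy bookkeeping behind Table 1 can be written out in two lines. This derivation assumes that the one-layer propagator $\widetilde{W}$ is unitary, i.e. $\|y \star \phi_{2^J}\|^2 + \sum_{\lambda \in \Lambda_J} \|y \star \psi_\lambda\|^2 = \|y\|^2$ for every signal $y$. Since the modulus does not change the norm, each propagated signal splits its energy between its output and the next layer; applying this to every path of length $0, \ldots, M-1$ (with $U[\emptyset]x = x$ and $p + \lambda = (\lambda_1, \ldots, \lambda_m, \lambda)$) and summing gives the second line:

```latex
\begin{align*}
\|U[p]x\|^2 &= \|U[p]x \star \phi_{2^J}\|^2 + \sum_{\lambda \in \Lambda_J} \big\| |U[p]x \star \psi_\lambda| \big\|^2
            = \|S[p]x\|^2 + \sum_{\lambda \in \Lambda_J} \|U[p + \lambda]x\|^2, \\
\|x\|^2 &= \sum_{m=0}^{M-1} \sum_{p \in \Lambda_J^m} \|S[p]x\|^2 \;+\; \sum_{p \in \Lambda_J^M} \|U[p]x\|^2 .
\end{align*}
```

Table 1 reports (part of) the first sum, restricted to frequency-decreasing paths and normalized by $\|x\|^2$. Energy conservation is the statement that the remainder $\sum_{p \in \Lambda_J^M} \|U[p]x\|^2$ goes to zero as $M$ grows, which is why the network depth can be limited.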

Results (1)
Screenshot from CVPR keynote

Results (2)
IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 35

MNIST classification
Table 4. Percentage of errors of MNIST classifiers, depending on the training size

Paper excerpt (right-hand side of the column cut off): "… scattering models can be interpreted as … models computed independently for each class. … discriminative classifiers such as SVM … cross-correlation interactions between … optimizing the model dimension $d$. … and fourth columns give the classification … with a PCA or an SVM classification applied … of a windowed Fourier transform. The spatial … window is optimized with a cross validation … minimum error for $2^J = 8$. …"
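The excerpt contrasts models computed independently for each class with discriminative classifiers such as SVM, and mentions affine space models whose dimension $d$ is optimized. A minimal sketch of such an affine-space (PCA) classifier in plain Python, assuming classification by the smallest distance to each class's affine space (class mean plus the span of its top-$d$ principal directions). The helper names and the toy 3D data are hypothetical; in the paper the inputs would be scattering vectors, with $d$ chosen by cross validation.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def mean_vector(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]


def principal_directions(centered, d, iters=200):
    """Top-d principal directions of mean-centered rows, by power iteration on the
    covariance matrix with deflation (each new direction stays orthogonal to earlier ones)."""
    dim = len(centered[0])
    cov = [[sum(r[a] * r[b] for r in centered) / len(centered) for b in range(dim)]
           for a in range(dim)]
    dirs = []
    for _ in range(d):
        v = [1.0] * dim  # fixed start vector; assumed not orthogonal to the target direction
        for _ in range(iters):
            w = [dot(row, v) for row in cov]
            for e in dirs:
                c = dot(w, e)
                w = [wi - c * ei for wi, ei in zip(w, e)]
            norm = math.sqrt(dot(w, w))
            if norm < 1e-12:
                return dirs  # no variance left in the remaining directions
            v = [wi / norm for wi in w]
        dirs.append(v)
    return dirs


def fit_affine_models(samples_by_class, d):
    """For each class k: affine space A_k = mean_k + span(top-d principal directions)."""
    models = {}
    for label, rows in samples_by_class.items():
        mu = mean_vector(rows)
        centered = [[a - m for a, m in zip(r, mu)] for r in rows]
        models[label] = (mu, principal_directions(centered, d))
    return models


def approximation_error(x, model):
    """||x - P_A x||: distance from x to the affine space A = mu + span(dirs)."""
    mu, dirs = model
    r = [a - m for a, m in zip(x, mu)]
    for e in dirs:  # dirs are orthonormal, so projections can be removed one by one
        c = dot(r, e)
        r = [ri - c * ei for ri, ei in zip(r, e)]
    return math.sqrt(dot(r, r))


def classify(x, models):
    """Assign x to the class whose affine space approximates it best."""
    return min(models, key=lambda label: approximation_error(x, models[label]))


# Toy 3D vectors standing in for scattering vectors (hypothetical data).
train = {
    "class_a": [[t, 0.0, 0.0] for t in (-2.0, -1.0, 1.0, 2.0)],
    "class_b": [[0.0, t, 5.0] for t in (-2.0, -1.0, 1.0, 2.0)],
}
models = fit_affine_models(train, d=1)
print(classify([3.0, 0.0, 0.1], models))   # class_a
print(classify([0.2, -4.0, 5.0], models))  # class_b
```

Each class model is fitted without looking at the other classes; the classes only interact through the final arg-min over approximation errors, which is the difference from a discriminative classifier such as an SVM.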

MNIST Example
Fig. 7. (a) Image $X(u)$ of a digit "3". (b) Arrays of windowed scattering coefficients $S[p]X(u)$ of order $m = 1$, with $u$ sampled at intervals of … pixels. (c) Windowed scattering coefficients $S[p]X(u)$ of order $m = 2$.

Paper excerpt: "… original dataset, thus improving upon previous state-of-the-art methods. To evaluate the precision of affine space models, we compute an average normalized approximation error of …"

"The US-Postal Service is another handwritten digit dataset, with 7,291 training samples and 2,007 test images of 16 × 16 pixels. The state of the art is obtained with tangent distance kernels [14]. Table 6 gives results …"

Preliminary conclusion
• Elegant idea and intuition
  – The first layers of a deep network can be defined using known image / physical properties
• (Quite) difficult maths
  – Requires knowledge of wavelet transforms, Fourier series, complex numbers, etc.
  – Not all symbols seem to be well explained (for the amateur reader, at least)

Next session
• Keynote CVPR
  – http://techtalks.tv/talks/plenary-talk-are-deep-networks-a-solution-to-curse-of-dimensionality/60315/
• Taco Cohen: Scattering and Invariants