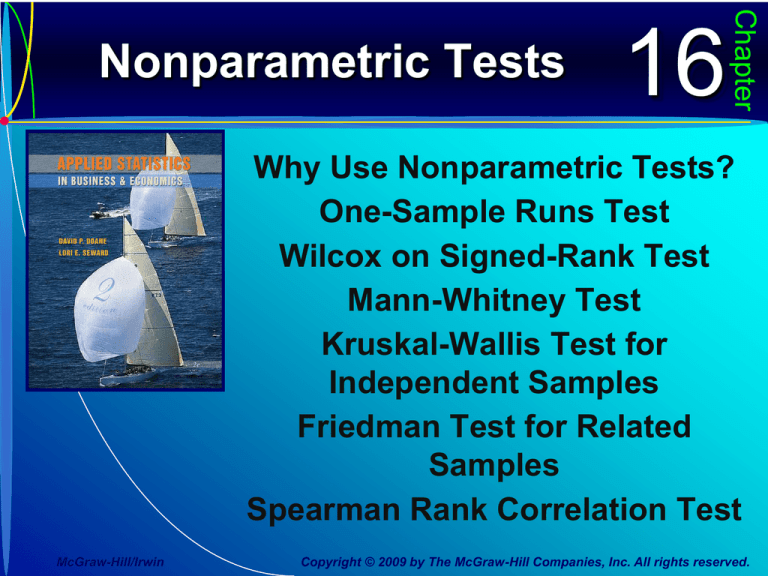

Chapter 16

Nonparametric Tests

Why Use Nonparametric Tests?

One-Sample Runs Test

Wilcoxon Signed-Rank Test

Mann-Whitney Test

Kruskal-Wallis Test for Independent Samples

Friedman Test for Related Samples

Spearman Rank Correlation Test

McGraw-Hill/Irwin

Copyright © 2009 by The McGraw-Hill Companies, Inc. All rights reserved.

Why Use Nonparametric Tests?

Parametric Tests

• Parametric hypothesis tests require the estimation of one or more unknown parameters (e.g., population mean or variance).
• Often, unrealistic assumptions are made about the normality of the underlying population.
• Large sample sizes are often required to invoke the Central Limit Theorem.

Nonparametric Tests

• Nonparametric or distribution-free tests
  - usually focus on the sign or rank of the data rather than the exact numerical value.
  - do not specify the shape of the parent population.
  - can often be used in smaller samples.
  - can be used for ordinal data.

Advantages and Disadvantages of Nonparametric Tests

Table 16.1

Some Common Nonparametric Tests

Figure 16.1

One-Sample Runs Test

Wald-Wolfowitz Runs Test

• The one-sample runs test (Wald-Wolfowitz test) detects non-randomness.
• Ask: Is each observation in a sequence of binary events independent of its predecessor?
• A nonrandom pattern suggests that the observations are not independent.
• The hypotheses are
  H0: Events follow a random pattern
  H1: Events do not follow a random pattern

• To test the hypothesis, first count the number of outcomes of each type.
  n1 = number of outcomes of the first type
  n2 = number of outcomes of the second type
  n = total sample size = n1 + n2
• A run is a series of consecutive outcomes of the same type, surrounded by a sequence of outcomes of the other type.

• For example, consider the following series representing 44 defective (D) or acceptable (A) computer chips:
  DAAAAAAADDDDAAAAAAAADDAAAAAAAADDDDAAAAAAAAAA
• The grouped sequences are:
  D AAAAAAA DDDD AAAAAAAA DD AAAAAAAA DDDD AAAAAAAAAA
• A run can be a single outcome if it is preceded and followed by outcomes of the other type.

• There are 8 runs (R = 8).
  n1 = number of defective chips (D) = 11
  n2 = number of acceptable chips (A) = 33
  n = total sample size = n1 + n2 = 11 + 33 = 44
• The hypotheses are:
  H0: Defects follow a random sequence
  H1: Defects follow a nonrandom sequence
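The counting step can be sketched in a few lines of Python. This is only an illustration: the helper name `count_runs` is hypothetical, and the chip sequence is rebuilt from its run lengths (1, 7, 4, 8, 2, 8, 4, 10) rather than typed out character by character.

```python
from itertools import groupby

# Chip sequence from the example, rebuilt from its run lengths (an assumption
# made to avoid typing 44 characters by hand).
sequence = ("D" + "A" * 7 + "D" * 4 + "A" * 8 +
            "D" * 2 + "A" * 8 + "D" * 4 + "A" * 10)

def count_runs(seq):
    """Return (n1, n2, R) for a two-symbol sequence.

    n1 counts the symbol that appears first in the sequence,
    n2 counts the other symbol, and R is the number of runs.
    """
    first = seq[0]
    n1 = sum(1 for s in seq if s == first)
    n2 = len(seq) - n1
    # groupby yields one group per block of identical neighbours, i.e. one per run
    runs = sum(1 for _ in groupby(seq))
    return n1, n2, runs

n1, n2, R = count_runs(sequence)
print(n1, n2, R)  # 11 33 8
```

A single isolated symbol still forms its own group, which matches the rule that a run can be a single outcome.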

• When n1 ≥ 10 and n2 ≥ 10, the number of runs R may be assumed to be normally distributed with mean μR and standard deviation σR:

$$\mu_R = \frac{2 n_1 n_2}{n} + 1 \qquad \sigma_R = \sqrt{\frac{2 n_1 n_2 (2 n_1 n_2 - n)}{n^2 (n - 1)}}$$

• The test statistic is:

$$z_{calc} = \frac{R - \mu_R}{\sigma_R}$$

• For a given level of significance α, find the critical value zα/2 for a two-tailed test.
• Reject the hypothesis of a random pattern if z < -zα/2 or if z > +zα/2.
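A minimal sketch of the large-sample runs test, applied to the chip example (n1 = 11, n2 = 33, R = 8). It assumes the usual large-sample formulas μR = 2·n1·n2/n + 1 and σR = sqrt(2·n1·n2·(2·n1·n2 − n) / (n²(n − 1))). The function names are illustrative, not from the text.

```python
import math

def runs_z(n1, n2, runs):
    """z statistic for the large-sample one-sample runs test."""
    n = n1 + n2
    mu_r = 2 * n1 * n2 / n + 1
    sigma_r = math.sqrt(2 * n1 * n2 * (2 * n1 * n2 - n) / (n ** 2 * (n - 1)))
    return (runs - mu_r) / sigma_r

def two_tailed_p(z):
    """Two-tailed p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))

z = runs_z(11, 33, 8)
p = two_tailed_p(z)
print(round(z, 2), p < 0.05)  # -3.9 True
```

With only 8 runs against an expected 17.5, z falls far in the left tail, so the hypothesis of a random pattern would be rejected at α = 0.05.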

• Decision rule for large-sample runs tests:

Figure 16.2

Wilcoxon Signed-Rank Test

• The Wilcoxon signed-rank test compares a single sample median with a benchmark using only ranks of the data instead of the original observations.
• It is used to compare paired observations.
• Advantages are
  - freedom from the normality assumption,
  - robustness to outliers,
  - applicability to ordinal data.
• The population should be roughly symmetric.

• To compare the sample median (M) with a benchmark median (M0), the hypotheses for a two-tailed test are:
  H0: M = M0
  H1: M ≠ M0
• When evaluating the difference between paired observations, use the median difference (Md) and zero as the benchmark.

• Calculate the difference between the paired observations.
• Rank the differences from smallest to largest by absolute value.
• Add the ranks of the positive differences to obtain the rank sum W.
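The three steps above can be sketched as follows. The data are made up for illustration, the helper names are hypothetical, and two conventions are assumed that the slides do not state: zero differences are dropped, and tied absolute differences share the average rank.

```python
def average_ranks(values):
    """Rank values from smallest to largest; ties get the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def signed_rank_w(before, after):
    """Return (W, n): sum of ranks of positive differences, and usable n."""
    # Assumption: pairs with a zero difference are discarded.
    diffs = [a - b for a, b in zip(after, before) if a != b]
    ranks = average_ranks([abs(d) for d in diffs])
    w = sum(r for d, r in zip(diffs, ranks) if d > 0)
    return w, len(diffs)

# Hypothetical paired observations
before = [10, 12, 9, 14, 11, 13]
after = [12, 11, 9, 18, 14, 10]
W, n = signed_rank_w(before, after)
print(W, n)  # 10.5 5
```

Here the differences are 2, -1, 0, 4, 3, -3. The zero is dropped, the two differences of absolute size 3 share rank 3.5, and the positive differences contribute ranks 2 + 5 + 3.5 = 10.5.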

• For small samples, a special table is required to obtain critical values.
• For large samples (n ≥ 20), the test statistic is approximately normal:

$$z_{calc} = \frac{W - \dfrac{n(n+1)}{4}}{\sqrt{\dfrac{n(n+1)(2n+1)}{24}}}$$

• Use Excel or Appendix C to get a p-value.
• Reject H0 if p-value < α.
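A short sketch of the large-sample step, assuming the standard normal approximation in which W has mean n(n + 1)/4 and standard deviation sqrt(n(n + 1)(2n + 1)/24). The values W = 210 and n = 24 are hypothetical, chosen only to give round numbers.

```python
import math

def wilcoxon_z(w, n):
    """Normal approximation for the Wilcoxon signed-rank statistic W."""
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return (w - mean_w) / sd_w

def two_tailed_p(z):
    """Two-tailed p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical values: W = 210 from n = 24 nonzero differences
z = wilcoxon_z(210, 24)      # (210 - 150) / 35
p = two_tailed_p(z)
alpha = 0.05
print(round(z, 3), "reject H0" if p < alpha else "do not reject H0")
```

For these made-up inputs the mean is 150 and the standard deviation is 35, so z is about 1.714 and a two-tailed test at α = 0.05 would not reject H0.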

Mann-Whitney Test

• The Mann-Whitney test is a nonparametric test that compares two populations.
• It does not assume normality.
• It is a test for the equality of medians, assuming
  - the populations differ only in centrality,
  - equal variances.
• The hypotheses are
  H0: M1 = M2 (no difference in medians)
  H1: M1 ≠ M2 (medians differ)

Performing the Test

• Step 1: Sort the combined samples from lowest to highest.
• Step 2: Assign a rank to each value. If values are tied, the average of the ranks is assigned to each.
• Step 3: The ranks are summed for each column (e.g., T1, T2).
• Step 4: The sum of the ranks T1 + T2 must be equal to n(n + 1)/2, where n = n1 + n2.
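Steps 1 through 4 might look like this in Python. The two small samples are invented for illustration and the helper names are hypothetical. The Step 4 identity is used as a built-in arithmetic check.

```python
def average_ranks(values):
    """Rank values from smallest to largest; ties get the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def rank_sums(sample1, sample2):
    """Return (T1, T2), the rank sums of each sample within the pooled data."""
    combined = list(sample1) + list(sample2)
    ranks = average_ranks(combined)          # Steps 1 and 2
    t1 = sum(ranks[:len(sample1)])           # Step 3
    t2 = sum(ranks[len(sample1):])
    n = len(combined)
    assert t1 + t2 == n * (n + 1) / 2        # Step 4 check
    return t1, t2

# Hypothetical samples; the two 5s tie and share rank 3.5
T1, T2 = rank_sums([3, 5, 8], [4, 5, 9, 10])
print(T1, T2)  # 9.5 18.5
```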

• Step 5: Calculate the mean rank sums T̄1 = T1/n1 and T̄2 = T2/n2.
• Step 6: For large samples (n1 ≥ 10, n2 ≥ 10), use a z test:

$$z_{calc} = \frac{\bar{T}_1 - \bar{T}_2}{(n_1 + n_2)\sqrt{\dfrac{n_1 + n_2 + 1}{12\, n_1 n_2}}}$$

• Step 7: For a given α, reject H0 if z < -zα/2 or z > +zα/2.
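A sketch of Steps 5 and 6, assuming the mean-rank form of the statistic, z = (T̄1 − T̄2) / ((n1 + n2)·sqrt((n1 + n2 + 1)/(12·n1·n2))). The rank sums below are hypothetical. As a sanity check, the code also evaluates the equivalent textbook form based on T1 alone.

```python
import math

def mann_whitney_z(t1, n1, t2, n2):
    """z statistic from the difference in mean ranks (large-sample form)."""
    mean1, mean2 = t1 / n1, t2 / n2           # Step 5
    n = n1 + n2
    return (mean1 - mean2) / (n * math.sqrt((n + 1) / (12 * n1 * n2)))

def mann_whitney_z_from_t1(t1, n1, n2):
    """Equivalent form: standardize T1 by its mean and standard deviation."""
    n = n1 + n2
    return (t1 - n1 * (n + 1) / 2) / math.sqrt(n1 * n2 * (n + 1) / 12)

# Hypothetical rank sums for n1 = n2 = 10 (80 + 130 = 20 * 21 / 2)
z = mann_whitney_z(80, 10, 130, 10)
z_check = mann_whitney_z_from_t1(80, 10, 10)
print(round(z, 3), round(z_check, 3))  # -1.89 -1.89
```

Since |z| is below 1.96, these made-up rank sums would not lead to rejection in a two-tailed test at α = 0.05.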

Kruskal-Wallis Test for Independent Samples

• The Kruskal-Wallis (K-W) test compares c independent medians, assuming the populations differ only in centrality.
• The K-W test is a generalization of the Mann-Whitney test and is analogous to a one-factor ANOVA (completely randomized model).
• Groups can be of different sizes if each group has 5 or more observations.
• Populations must be of similar shape but normality is not a requirement.

Performing the Test

• First, combine the samples and assign a rank to each observation in each group (for example, see Table 16.7).
• When a tie occurs, each observation is assigned the average of the ranks.

Table 16.7

• Next, arrange the data by groups and sum the ranks to obtain the Tj’s.
• Remember, ΣTj = n(n+1)/2.

Table 16.8

• The hypotheses to be tested are:
  H0: All c population medians are the same
  H1: Not all the population medians are the same
• For a completely randomized design with c groups, the test statistic is

$$H = \frac{12}{n(n+1)} \sum_{j=1}^{c} \frac{T_j^2}{n_j} - 3(n+1)$$

  where n = n1 + n2 + … + nc
  nj = number of observations in group j
  Tj = sum of ranks for group j

• The H test statistic follows a chi-square distribution with ν = c – 1 degrees of freedom.
• This is a right-tailed test, so reject H0 if H > χ²α or if p-value < α.
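The whole Kruskal-Wallis procedure can be sketched compactly, assuming the standard statistic H = 12/(n(n + 1)) · Σ Tj²/nj − 3(n + 1) with no tie correction. The three groups are invented data, the function names are hypothetical, and 5.991 is the tabled chi-square critical value for α = 0.05 with 2 degrees of freedom.

```python
def average_ranks(values):
    """Rank values from smallest to largest; ties get the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def kruskal_wallis_h(groups):
    """H statistic from pooled ranks (no tie correction applied)."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n = len(pooled)
    total, start = 0.0, 0
    for g in groups:
        t_j = sum(ranks[start:start + len(g)])  # rank sum for this group
        total += t_j ** 2 / len(g)
        start += len(g)
    return 12 / (n * (n + 1)) * total - 3 * (n + 1)

# Hypothetical data: c = 3 groups of 5 observations each
groups = [[27, 31, 35, 40, 42], [22, 25, 29, 33, 38], [18, 20, 21, 24, 30]]
H = kruskal_wallis_h(groups)
CHI2_CRIT_05_DF2 = 5.991  # tabled critical value, alpha = 0.05, 2 d.f.
print(round(H, 2), H > CHI2_CRIT_05_DF2)  # 7.28 True
```

The rank sums here are 58, 42, and 20, which add to 15·16/2 = 120 as required, and H = 7.28 exceeds the critical value.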

Friedman Test for Related Samples

• The Friedman test determines if c treatments have the same central tendency (medians) when there is a second factor with r levels and the populations are assumed to be the same except for centrality.
• This test is analogous to a two-factor ANOVA without replication (randomized block design) with one observation per cell.
• The groups must be of the same size.
• Treatments should be randomly assigned within blocks.
• Data should be at least interval scale.

• In addition to the c treatment levels that define the columns, the Friedman test also specifies r block factor levels to define each row of the observation matrix.
• The hypotheses to be tested are:
  H0: All c populations have the same median
  H1: Not all the populations have the same median
• Unlike the Kruskal-Wallis test, the Friedman ranks are computed within each block rather than within a pooled sample.

Performing the Test

• First, assign a rank to each observation within each row. For example, within each Trial:
• When a tie occurs, each observation is assigned the average of the ranks.

• Compute the test statistic:

$$F = \frac{12}{r\,c\,(c+1)} \sum_{j=1}^{c} T_j^2 - 3r(c+1)$$

  where r = the number of blocks (rows)
  c = the number of treatments (columns)
  Tj = the sum of ranks for treatment j

• The Friedman test statistic F follows a chi-square distribution with ν = c – 1 degrees of freedom.
• Reject H0 if F > χ²α or if p-value < α.
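A minimal sketch of the Friedman calculation, assuming the standard statistic F = 12/(r·c·(c + 1)) · Σ Tj² − 3r(c + 1) with ranks assigned within each row. The 4 × 3 observation matrix is made up, and the function names are illustrative.

```python
def average_ranks(values):
    """Rank values from smallest to largest; ties get the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def friedman_statistic(rows):
    """Friedman statistic for an r x c matrix (rows = blocks, cols = treatments)."""
    r, c = len(rows), len(rows[0])
    t = [0.0] * c
    for row in rows:
        # Ranks are computed within each block, not across the pooled sample
        for j, rank in enumerate(average_ranks(row)):
            t[j] += rank
    return 12 / (r * c * (c + 1)) * sum(tj ** 2 for tj in t) - 3 * r * (c + 1)

# Hypothetical data: r = 4 blocks, c = 3 treatments
rows = [[10, 12, 15], [9, 11, 14], [13, 12, 16], [8, 10, 9]]
F = friedman_statistic(rows)
CHI2_CRIT_05_DF2 = 5.991  # tabled critical value, alpha = 0.05, 2 d.f.
print(F, F > CHI2_CRIT_05_DF2)  # 4.5 False
```

The column rank sums are 5, 8, and 11, giving F = 0.25 · 210 − 48 = 4.5, which is below the critical value, so H0 would not be rejected for these invented data.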

Spearman Rank Correlation Test

• Spearman’s rank correlation coefficient (Spearman’s rho) is an overall nonparametric test that measures the strength of the association (if any) between two variables.
• This method does not assume interval measurement.
• The sample rank correlation coefficient rs lies in the range -1 ≤ rs ≤ +1.

• The sign of rs indicates whether the relationship is
  - direct: ranks tend to vary in the same direction, or
  - inverse: ranks tend to vary in opposite directions.
• The magnitude of rs indicates the degree of relationship. If
  - rs is near 0, there is little or no agreement between rankings;
  - rs is near +1, there is strong direct agreement;
  - rs is near -1, there is strong inverse agreement.

Performing the Test

• First, rank each variable (for example, see Table 16.11).
• If more than one value is the same, assign the average of the ranks.

Table 16.11

• The sums of ranks within each column must always be n(n+1)/2.
• Next, compute the difference in ranks di for each observation.
• The rank differences should sum to zero.

• Calculate the sample rank correlation coefficient rs:

$$r_s = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n(n^2 - 1)}$$

  where di = difference in ranks for case i
  n = sample size
• For a right-tailed test, the hypotheses to be tested are
  H0: True rank correlation is zero (ρs ≤ 0)
  H1: True rank correlation is positive (ρs > 0)
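The ranking and coefficient steps can be sketched as below, assuming the standard formula rs = 1 − 6Σdi² / (n(n² − 1)). The data are invented and the function names are hypothetical. The check that the rank differences sum to zero is included as a guard against arithmetic slips.

```python
def average_ranks(values):
    """Rank values from smallest to largest; ties get the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman_rs(x, y):
    """Sample rank correlation from the rank differences d_i."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    # Each column of ranks must sum to n(n+1)/2
    assert sum(rx) == sum(ry) == n * (n + 1) / 2
    d = [a - b for a, b in zip(rx, ry)]
    assert abs(sum(d)) < 1e-9  # rank differences sum to zero
    return 1 - 6 * sum(di ** 2 for di in d) / (n * (n ** 2 - 1))

# Hypothetical paired observations
x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]
rs = spearman_rs(x, y)
print(round(rs, 3))  # 0.8
```

Here the rank differences are -1, 1, -1, 1, 0, so Σdi² = 4 and rs = 1 − 24/120 = 0.8, a fairly strong direct agreement.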

• If n is large (at least 20 observations), then rs may be assumed to follow the Student’s t distribution with degrees of freedom ν = n - 1

calc

• Reject H0 if t > tα or if p-value < α.

Correlation versus Causation

• Caution: correlation does not prove causation.
• Correlations may prove to be “significant” even when there is no causal relation between the two variables.
• However, causation is not ruled out.
• Multiple causes may be present.

Applied Statistics in Business & Economics

End of Chapter 16