ML_1 - Reza Shadmehr

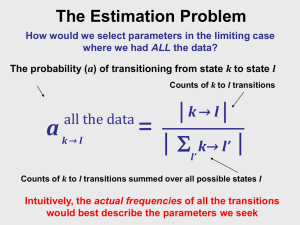

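580.691 Learning Theory
Reza Shadmehr

Maximum likelihood
Integration of sensory modalities

We showed that linear regression, the steepest descent algorithm, and LMS all minimize the cost function

$$J(\mathbf{w}) = \sum_{n=1}^{N} \left( y^{(n)} - \mathbf{w}^T \mathbf{x}^{(n)} \right)^2 = (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w})$$

This is just one possible cost function. What is the justification for this cost function? Today we will see that this cost function gives rise to the maximum likelihood estimate if the data are normally distributed.

Expected value and variance of scalar random variables

$$E[x] = \bar{x}$$

$$\begin{aligned}
\mathrm{var}[x] = \sigma^2 &= E\left[(x - E[x])^2\right] = E\left[(x - \bar{x})^2\right] \\
&= E\left[x^2 - 2x\bar{x} + \bar{x}^2\right] \\
&= E[x^2] - 2\bar{x}E[x] + \bar{x}^2 \\
&= E[x^2] - 2\bar{x}^2 + \bar{x}^2 \\
&= E[x^2] - \bar{x}^2
\end{aligned}$$

Statistical view of regression

• Suppose the outputs y were actually produced by the process:

The "true" underlying process:

$$y^{*(n)} = \mathbf{w}^{*T} \mathbf{x}^{(n)}$$

What we measured:

$$y^{(n)} = y^{*(n)} + \varepsilon, \qquad \varepsilon \sim N(0, \sigma^2)$$

$$D = \left\{ \left(\mathbf{x}^{(1)}, y^{(1)}\right), \left(\mathbf{x}^{(2)}, y^{(2)}\right), \ldots, \left(\mathbf{x}^{(n)}, y^{(n)}\right) \right\}$$

Our model of the process:

$$y^{(n)} = \mathbf{w}^T \mathbf{x}^{(n)} + \varepsilon$$

$$\mathbf{y} = X\mathbf{w} + \boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} \sim N(\mathbf{0}, \sigma^2 I)$$

Given a constant X, the underlying process would give us a different y every time we observe it. Given each "batch", we fit our parameters. What is the probability of observing the particular y in trial i?

$$p\left(y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right) = \;?$$

Probabilistic view of linear regression

• Linear regression expresses the random variable $y^{(n)}$ in terms of the input-independent variation $\varepsilon$ around the mean $\mathbf{w}^T \mathbf{x}^{(n)}$:

$$y^{(i)} = \mathbf{w}^T \mathbf{x}^{(i)} + \varepsilon$$

$$E\left[y^{(i)} \mid \mathbf{x}^{(i)}\right] = \mathbf{w}^T \mathbf{x}^{(i)} = \hat{y}^{(i)}$$

Let us assume that the noise has a Normal distribution with mean zero and variance $\sigma^2$:

$$\varepsilon \sim N(0, \sigma^2)$$

Then the outputs y given x are:

$$y^{(i)} \mid \mathbf{x}^{(i)} \sim N\left(\mathbf{w}^T \mathbf{x}^{(i)}, \sigma^2\right)$$

$$p\left(y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right) = \frac{1}{(2\pi\sigma^2)^{1/2}} \exp\left( -\frac{1}{2\sigma^2} \left( y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)} \right)^2 \right)$$

Probabilistic view of linear regression

• As the variance, i.e., the spread, of the residual increases, our confidence about our model's guess decreases.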
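The effect of the noise variance on the conditional density above can be checked numerically. The following is a minimal sketch, not part of the original slides: the function name `gaussian_conditional_density` and the values of `w` and `x` are hypothetical, chosen only for illustration. It evaluates the density at the model's own prediction for several values of σ.

```python
import math

def gaussian_conditional_density(y, x, w, sigma):
    """p(y | x, w, sigma) for the model y = w^T x + eps, eps ~ N(0, sigma^2)."""
    mean = sum(wi * xi for wi, xi in zip(w, x))  # w^T x
    return math.exp(-(y - mean) ** 2 / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)

# Hypothetical weights and input (assumptions, not from the slides).
w = [1.0, 2.0]
x = [1.0, 0.5]  # w^T x = 2.0

for sigma in (0.5, 1.0, 2.0):
    # Density evaluated exactly at the predicted value w^T x.
    peak = gaussian_conditional_density(2.0, x, w, sigma)
    print(f"sigma = {sigma}: density at the prediction = {peak:.4f}")
```

The density at the predicted value shrinks as σ grows, which is the sense in which a larger residual spread means less confidence in the model's guess.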
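$$p\left(y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right) = \frac{1}{(2\pi\sigma^2)^{1/2}} \exp\left( -\frac{1}{2\sigma^2} \left( y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)} \right)^2 \right), \qquad \varepsilon \sim N(0, \sigma^2)$$

[Figure: the conditional density $p\left(y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right)$ plotted against $y^{(i)}$.]

Probabilistic view of linear regression

• Example: suppose the underlying process was:

$$y^{*(i)} = \mathbf{w}^{*T} \mathbf{x}^{(i)}$$

• Given some data points, we estimate w and also guess the variance of the noise. We can then compute the probability of each y that we observed.

• In this example, $\varepsilon \sim N(0, \sigma^2)$ with $\sigma = 1$.

[Figure: the data y plotted against x with the model fit $y = \mathbf{w}^T g(x)$, together with the density $p\left(y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right)$ over x and y.]

We want to find a set of parameters that maximizes P for all the data.

Maximum likelihood estimation

• We view the outputs $y^{(n)}$ as random variables that were generated by a probabilistic process that had some distribution with unknown parameters θ (e.g., mean and variance). The "best" guess for θ is the one that maximizes the joint probability that the observed data came from that distribution.

$$P\left(y^{(1)}, \ldots, y^{(n)} \mid \theta\right) = P\left(y = y^{(1)} \mid \theta\right) \cdots P\left(y = y^{(n)} \mid \theta\right) = \prod_{i=1}^{n} P\left(y = y^{(i)} \mid \theta\right)$$

$$\theta_{ML} = \arg\max_{\theta} \prod_{i=1}^{n} P\left(y = y^{(i)} \mid \theta\right)$$

Maximum likelihood estimation: uniform distribution

• Suppose that n numbers $y^{(i)}$ were drawn from a distribution and we need to estimate the parameters of that distribution.

• Suppose that the distribution was uniform.

$$P\left(y = y^{(i)} \mid a\right) = \begin{cases} \dfrac{1}{a} & \text{for } y^{(i)} \in [0, a] \\ 0 & \text{otherwise} \end{cases}$$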
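$$L(a) = \prod_{i=1}^{n} P\left(y = y^{(i)} \mid a\right) = \begin{cases} \dfrac{1}{a^n} & \text{if } a \ge \max_i y^{(i)} \\ 0 & \text{otherwise} \end{cases}$$

$L(a)$ is the likelihood that the data came from a model with our specific parameter value.

$$a_{ML} = \arg\max_a L(a) = \max_i y^{(i)}$$

Maximum likelihood estimation: exponential distribution

$$p(y \mid a) = \frac{1}{a} \exp\left(-\frac{y}{a}\right), \qquad a > 0, \; y \ge 0$$

$$L(a) = \prod_{i=1}^{n} P\left(y = y^{(i)} \mid a\right) = \frac{1}{a^n} \exp\left(-\frac{1}{a} \sum_{i=1}^{n} y^{(i)}\right)$$

Log-likelihood:

$$l(a) = \log L(a) = \log \frac{1}{a^n} - \frac{1}{a} \sum_{i=1}^{n} y^{(i)} = -n \log a - \frac{1}{a} \sum_{i=1}^{n} y^{(i)}$$

Using $\frac{d}{dx} \log(x) = \frac{1}{x}$:

$$\frac{dl}{da} = -\frac{n}{a} + \frac{1}{a^2} \sum_{i=1}^{n} y^{(i)} = 0$$

$$\frac{n}{a} = \frac{1}{a^2} \sum_{i=1}^{n} y^{(i)}$$

$$a_{ML} = \frac{1}{n} \sum_{i=1}^{n} y^{(i)}$$

Maximum likelihood estimation: Normal distribution

$$P\left(y = y^{(i)} \mid \mu, \sigma\right) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{1}{2\sigma^2} \left(y^{(i)} - \mu\right)^2\right)$$

$$L(\mu, \sigma) = \prod_{i=1}^{n} P\left(y = y^{(i)} \mid \mu, \sigma\right)$$

$$\begin{aligned}
l(\mu, \sigma) = \log L(\mu, \sigma) &= \sum_{i=1}^{n} \log \frac{1}{\sqrt{2\pi\sigma^2}} - \frac{1}{2\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mu\right)^2 \\
&= -n \log \sqrt{2\pi} - n \log \sigma - \frac{1}{2\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mu\right)^2
\end{aligned}$$

Now we see that if σ is a constant, the log-likelihood is, apart from an additive constant, proportional to the negative of our cost function (the sum of squared errors!). Maximizing the likelihood is then the same as minimizing the sum of squared errors.

Maximum likelihood estimation: Normal distribution

$$l(\mu, \sigma) = -n \log \sqrt{2\pi} - n \log \sigma - \frac{1}{2\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mu\right)^2$$

$$\frac{dl}{d\mu} = -\frac{1}{2\sigma^2} \sum_{i=1}^{n} 2\left(y^{(i)} - \mu\right)(-1) = \frac{1}{\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mu\right) = 0$$

$$\sum_{i=1}^{n} y^{(i)} - n\mu = 0$$

$$\mu_{ML} = \frac{1}{n} \sum_{i=1}^{n} y^{(i)}$$

Using $\frac{d}{dx} \log(x) = \frac{1}{x}$:

$$\frac{dl}{d\sigma} = -\frac{n}{\sigma} + \frac{1}{\sigma^3} \sum_{i=1}^{n} \left(y^{(i)} - \mu\right)^2 = 0$$

$$\sigma_{ML} = \left[ \frac{1}{n} \sum_{i=1}^{n} \left(y^{(i)} - \mu\right)^2 \right]^{1/2}$$

Probabilistic view of linear regression

• If we assume that the $y^{(i)}$ are independently and identically distributed (I.I.D.), conditional on $\mathbf{x}^{(i)}$, then the joint conditional distribution of the data y is obtained by taking the product of the individual conditional probabilities:

$$\begin{aligned}
P\left(y^{(1)}, \ldots, y^{(n)} \mid \mathbf{x}^{(1)}, \ldots, \mathbf{x}^{(n)}, \mathbf{w}, \sigma\right) &= P\left(y = y^{(1)} \mid \mathbf{x}^{(1)}, \mathbf{w}, \sigma\right) \cdots P\left(y = y^{(n)} \mid \mathbf{x}^{(n)}, \mathbf{w}, \sigma\right) \\
&= \prod_{i=1}^{n} \frac{1}{(2\pi\sigma^2)^{1/2}} \exp\left(-\frac{1}{2\sigma^2} \left(y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)}\right)^2\right) \\
&= \frac{1}{(2\pi\sigma^2)^{n/2}} \exp\left(-\frac{1}{2\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)}\right)^2\right)
\end{aligned}$$

Given our model, we can assign a probability to our observation. We want to find parameters that maximize the probability that we will observe data like the data that we were given.

Probabilistic view of linear regression

• Given some data D, and two models, $(\mathbf{w}_1, \sigma)$ and $(\mathbf{w}_2, \sigma)$, the better model has the larger joint probability for the actually observed data.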
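The comparison of two candidate weight vectors described above can be sketched in a few lines. This is an illustrative example only: the data set, the two weight vectors `w1` and `w2`, and the function name `joint_probability` are all made up for the sketch, and each input carries a leading 1 so that the first weight acts as an offset.

```python
import math

def joint_probability(xs, ys, w, sigma):
    """Product of the Gaussian conditional densities p(y_i | x_i, w, sigma)."""
    p = 1.0
    for x, y in zip(xs, ys):
        mean = sum(wi * xi for wi, xi in zip(w, x))  # w^T x
        p *= math.exp(-(y - mean) ** 2 / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)
    return p

# Made-up data, roughly y = 1 + 2x (an assumption for illustration).
xs = [[1.0, -1.0], [1.0, -0.5], [1.0, 0.0], [1.0, 0.5], [1.0, 1.0]]
ys = [-1.1, 0.2, 0.9, 2.1, 2.9]

w1 = [1.0, 2.0]  # hypothetical model 1
w2 = [0.0, 1.0]  # hypothetical model 2
sigma = 1.0      # same noise level assumed for both models

p1 = joint_probability(xs, ys, w1, sigma)
p2 = joint_probability(xs, ys, w2, sigma)
print("better model:", "w1" if p1 > p2 else "w2")
```

With σ shared by both models, the model with the smaller sum of squared residuals always has the larger joint probability, since the two products differ only in the exponent.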
[Figure: two panels showing the same data with the fits of the two models, labeled $P\left(y^{(1)}, \ldots, y^{(n)} \mid \mathbf{x}^{(1)}, \ldots, \mathbf{x}^{(n)}, \mathbf{w}_1, \sigma\right)$ and $P\left(y^{(1)}, \ldots, y^{(n)} \mid \mathbf{x}^{(1)}, \ldots, \mathbf{x}^{(n)}, \mathbf{w}_2, \sigma\right)$.]

Probabilistic view of linear regression

• Given some data D, and two models, $(\mathbf{w}, \sigma_1)$ and $(\mathbf{w}, \sigma_2)$, the better model has the larger joint probability for the actually observed data.

[Figure: left, the data with the model fit $\mathbf{w}^T g(x)$; right, the joint probability $P\left(y^{(1)}, \ldots, y^{(n)} \mid \mathbf{x}^{(1)}, \ldots, \mathbf{x}^{(n)}, \mathbf{w}, \sigma\right)$ as a function of σ, with values on the order of $10^{-38}$.]

The underlying process here was generated with σ = 1, our model was second order, and our joint probability on this data set happened to peak near σ = 1.

The same underlying process will generate a different D on each run, resulting in different estimates of w and σ, despite the fact that the underlying process did not change.

[Figure: three data sets D1, D2, and D3 drawn from the same process, each shown with its model fit and with the joint probability $P\left(y^{(1)}, \ldots, y^{(n)} \mid \mathbf{x}^{(1)}, \ldots, \mathbf{x}^{(n)}, \mathbf{w}, \sigma\right)$ as a function of σ.]

Likelihood of our model

• Given some observed data:

$$D = \left\{ \left(\mathbf{x}^{(1)}, y^{(1)}\right), \left(\mathbf{x}^{(2)}, y^{(2)}\right), \ldots, \left(\mathbf{x}^{(n)}, y^{(n)}\right) \right\}$$

• and model structure:

$$y^{(i)} = y^{*(i)} + \varepsilon = \mathbf{w}^T \mathbf{x}^{(i)} + \varepsilon, \qquad \varepsilon \sim N(0, \sigma^2)$$

• Try to find the parameters w and σ that maximize the joint probability over the observed data:

$$P\left(y^{(1)}, y^{(2)}, \ldots, y^{(n)} \mid \mathbf{x}^{(1)}, \mathbf{x}^{(2)}, \ldots, \mathbf{x}^{(n)}, \mathbf{w}, \sigma\right)$$

The likelihood that the data came from a model with our specific parameter values is:

$$\begin{aligned}
L(\mathbf{w}, \sigma) &= P\left(y^{(1)}, y^{(2)}, \ldots, y^{(n)} \mid \mathbf{x}^{(1)}, \mathbf{x}^{(2)}, \ldots, \mathbf{x}^{(n)}, \mathbf{w}, \sigma\right) \\
&= \prod_{i=1}^{n} P\left(y = y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right) \\
&= \frac{1}{(2\pi\sigma^2)^{n/2}} \exp\left(-\frac{1}{2\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)}\right)^2\right)
\end{aligned}$$

Maximizing the likelihood

• It's easier to maximize the log of the likelihood function.
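Besides simplifying the algebra, working with the log has a numerical benefit: a product of many small densities quickly falls below the smallest representable floating-point number, while the sum of their logs stays well behaved. The sketch below illustrates this under assumed conditions (2000 simulated residuals with σ = 1, a fixed random seed); the function names are hypothetical.

```python
import math
import random

def normal_density(residual, sigma):
    """Gaussian density of a residual with mean zero and standard deviation sigma."""
    return math.exp(-residual ** 2 / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)

def normal_log_density(residual, sigma):
    """Log of the same density, computed without forming the density itself."""
    return -0.5 * math.log(2 * math.pi * sigma ** 2) - residual ** 2 / (2 * sigma ** 2)

random.seed(0)  # fixed seed so the sketch is repeatable
sigma = 1.0
residuals = [random.gauss(0.0, sigma) for _ in range(2000)]

# Direct product of densities: every factor is below 0.4, so the product underflows.
likelihood = 1.0
for r in residuals:
    likelihood *= normal_density(r, sigma)

# Sum of log densities: a finite negative number.
log_likelihood = sum(normal_log_density(r, sigma) for r in residuals)

print(likelihood)      # prints 0.0 because of floating-point underflow
print(log_likelihood)
```

Because the log is monotonic, the parameters that maximize the log-likelihood are the same ones that maximize the likelihood.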
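Log-likelihood:

$$\begin{aligned}
l(\mathbf{w}, \sigma) = \log L(\mathbf{w}, \sigma) &= \log \prod_{i=1}^{n} P\left(y = y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right) \\
&= \sum_{i=1}^{n} \log p\left(y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right) \\
&= \sum_{i=1}^{n} \log \left[ \frac{1}{(2\pi\sigma^2)^{1/2}} \exp\left(-\frac{1}{2\sigma^2} \left(y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)}\right)^2\right) \right] \\
&= -\frac{1}{2\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)}\right)^2 - \sum_{i=1}^{n} \log (2\pi\sigma^2)^{1/2}
\end{aligned}$$

Finding w to maximize the likelihood is equivalent to finding w to minimize the loss function:

$$\mathrm{Loss} = \sum_{i=1}^{n} \left(y^{(i)} - \hat{y}^{(i)}\right)^2 = \sum_{i=1}^{n} \left(y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)}\right)^2$$

Finding weights that maximize the likelihood

Log-likelihood, with w of size (m×1) and X of size (n×m):

$$\begin{aligned}
l(\mathbf{w}, \sigma) &= -\frac{1}{2\sigma^2} \sum_{i=1}^{n} \left(y^{(i)} - \mathbf{w}^T \mathbf{x}^{(i)}\right)^2 - \sum_{i=1}^{n} \log (2\pi\sigma^2)^{1/2} \\
&= -\frac{1}{2\sigma^2} (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w}) - \sum_{i=1}^{n} \log (2\pi\sigma^2)^{1/2} \\
&= -\frac{1}{2\sigma^2} \left( \mathbf{y}^T \mathbf{y} - \mathbf{y}^T X \mathbf{w} - \mathbf{w}^T X^T \mathbf{y} + \mathbf{w}^T X^T X \mathbf{w} \right) - \sum_{i=1}^{n} \log (2\pi\sigma^2)^{1/2} \\
&= -\frac{1}{2\sigma^2} \left( \mathbf{y}^T \mathbf{y} - \mathbf{w}^T X^T \mathbf{y} - \mathbf{w}^T X^T \mathbf{y} + \mathbf{w}^T X^T X \mathbf{w} \right) - \sum_{i=1}^{n} \log (2\pi\sigma^2)^{1/2}
\end{aligned}$$

(all remaining terms are scalars, so $\mathbf{y}^T X \mathbf{w} = \mathbf{w}^T X^T \mathbf{y}$)

$$\frac{dl}{d\mathbf{w}} = -\frac{1}{2\sigma^2} \left( -2 X^T \mathbf{y} + 2 X^T X \mathbf{w} \right) = 0$$

$$X^T X \mathbf{w} = X^T \mathbf{y}$$

$$\mathbf{w}_{ML} = \left(X^T X\right)^{-1} X^T \mathbf{y}$$

Above is the ML estimate of w, given the model:

$$y^{(i)} = \mathbf{w}^T \mathbf{x}^{(i)} + \varepsilon^{(i)}, \qquad \varepsilon \sim N(0, \sigma^2)$$

Finding the noise variance that maximizes the likelihood

$$\begin{aligned}
l(\mathbf{w}, \sigma) &= -\frac{1}{2\sigma^2} (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w}) - \sum_{i=1}^{n} \log (2\pi\sigma^2)^{1/2} \\
&= -\frac{1}{2\sigma^2} (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w}) - \sum_{i=1}^{n} \left[ \log (2\pi)^{1/2} + \log \sigma \right]
\end{aligned}$$

Using $\frac{d}{dx} \log(x) = \frac{1}{x}$:

$$\frac{dl}{d\sigma} = \frac{1}{\sigma^3} (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w}) - \sum_{i=1}^{n} \frac{1}{\sigma} = 0$$

$$\frac{1}{\sigma^2} (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w}) - n = 0$$

$$\sigma^2_{ML} = \frac{1}{n} (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w})$$

Above is the ML estimate of $\sigma^2$, given the model:

$$y^{(i)} = \mathbf{w}^T \mathbf{x}^{(i)} + \varepsilon^{(i)}, \qquad \varepsilon \sim N(0, \sigma^2)$$

The hiking in the woods problem: combining information from various sources

We have gone on a hiking trip and taken with us two GPS devices, one from a European manufacturer and the other from a US manufacturer. These devices use different satellites for positioning. Our objective is to figure out how to combine the information from the two sensors.

Let $\mathbf{x}$ be our position and let $\mathbf{y}_a$ and $\mathbf{y}_b$ be the readings of the two devices:

$$\mathbf{y} = \begin{bmatrix} \mathbf{y}_a \\ \mathbf{y}_b \end{bmatrix} \quad \text{(a 4×1 vector)}$$

$$\mathbf{y} = C\mathbf{x} + \boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} \sim N(\mathbf{0}, R), \qquad R = \begin{bmatrix} R_a & 0 \\ 0 & R_b \end{bmatrix}, \qquad C = \begin{bmatrix} I_{2 \times 2} \\ I_{2 \times 2} \end{bmatrix}$$

$$\mathbf{y} \sim N\left(C\,E[\mathbf{x}], \; C\,\mathrm{var}[\mathbf{x}]\,C^T + R\right)$$

$$p(\mathbf{y} \mid \mathbf{x}) = N(C\mathbf{x}, R) = \frac{1}{\sqrt{(2\pi)^4 |R|}} \exp\left(-\frac{1}{2} (\mathbf{y} - C\mathbf{x})^T R^{-1} (\mathbf{y} - C\mathbf{x})\right)$$

We want to find the position x that maximizes this likelihood.

Likelihood function

$$\ln p(\mathbf{y} \mid \mathbf{x}) = -2 \ln(2\pi) - \frac{1}{2} \ln |R| - \frac{1}{2} (\mathbf{y} - C\mathbf{x})^T R^{-1} (\mathbf{y} - C\mathbf{x})$$

$$\frac{d}{d\mathbf{x}} \ln p(\mathbf{y} \mid \mathbf{x}) = C^T R^{-1} \mathbf{y} - C^T R^{-1} C \mathbf{x} = 0$$

$$\hat{\mathbf{x}}_{ML} = \left(C^T R^{-1} C\right)^{-1} C^T R^{-1} \mathbf{y}$$

$$R^{-1} = \begin{bmatrix} R_a^{-1} & 0 \\ 0 & R_b^{-1} \end{bmatrix}, \qquad C^T R^{-1} = \begin{bmatrix} R_a^{-1} & R_b^{-1} \end{bmatrix}, \qquad C^T R^{-1} C = R_a^{-1} + R_b^{-1}$$

$$\hat{\mathbf{x}}_{ML} = \left(R_a^{-1} + R_b^{-1}\right)^{-1} \left(R_a^{-1} \mathbf{y}_a + R_b^{-1} \mathbf{y}_b\right)$$

Our most likely location is one that weights the reading from each device by the inverse of that device's noise covariance.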
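The inverse-covariance weighting of the two GPS readings above can be sketched for the 2-D case with plain Python lists. This is an illustrative example, not the author's implementation: the helper names (`inv2`, `add2`, `matvec2`, `fuse_readings`) and the covariance and reading values are assumptions made for the sketch.

```python
def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def add2(p, q):
    """Element-wise sum of two 2x2 matrices."""
    return [[p[i][j] + q[i][j] for j in range(2)] for i in range(2)]

def matvec2(m, v):
    """Product of a 2x2 matrix and a length-2 vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]

def fuse_readings(y_a, R_a, y_b, R_b):
    """x_ML = (Ra^-1 + Rb^-1)^-1 (Ra^-1 y_a + Rb^-1 y_b)."""
    P_a, P_b = inv2(R_a), inv2(R_b)          # inverse covariances
    weighted_a = matvec2(P_a, y_a)
    weighted_b = matvec2(P_b, y_b)
    total = [weighted_a[0] + weighted_b[0], weighted_a[1] + weighted_b[1]]
    return matvec2(inv2(add2(P_a, P_b)), total)

# Hypothetical devices: a is precise along the first axis, b along the second.
R_a = [[1.0, 0.0], [0.0, 4.0]]
R_b = [[4.0, 0.0], [0.0, 1.0]]
y_a = [0.0, 0.0]
y_b = [10.0, 10.0]

x_hat = fuse_readings(y_a, R_a, y_b, R_b)
print(x_hat)  # approximately [2.0, 8.0]
```

Along each axis the estimate sits closer to the reading of the device that is less noisy on that axis, rather than at the simple average of the two readings.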
In other words, we should discount the reading from each device according to that device's uncertainty.

If we stay still and do not move, the variance in our readings is simply due to noise in the devices:

$$\mathrm{var}[\mathbf{y}] = R$$

$$\begin{aligned}
\mathrm{var}\left[\hat{\mathbf{x}}_{ML}\right] &= \left(C^T R^{-1} C\right)^{-1} C^T R^{-1} \, \mathrm{var}[\mathbf{y}] \, R^{-T} C \left(C^T R^{-1} C\right)^{-T} \\
&= \left(C^T R^{-1} C\right)^{-1} C^T R^{-1} R \, R^{-T} C \left(C^T R^{-1} C\right)^{-T} \\
&= \left(C^T R^{-1} C\right)^{-1} \\
&= \left(R_a^{-1} + R_b^{-1}\right)^{-1}
\end{aligned}$$

By combining the information from the two devices, the variance of our estimate is less than the variance of each device.

[Figure: the ML estimate.]

Marc Ernst and Marty Banks (2002) were the first to demonstrate that when our brain makes a decision about a physical property of an object, it does so by combining various sensory information about that object in a way that is consistent with maximum likelihood state estimation.

Ernst and Banks began by considering a hypothetical situation in which one has to estimate the height of an object. Suppose that you use your index finger and thumb to hold an object. Your haptic system and your visual system report its height.

$$\mathbf{y} = \begin{bmatrix} y_h & y_v \end{bmatrix}^T, \qquad \mathbf{y} = \mathbf{c}\,x + \boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} \sim N(\mathbf{0}, R)$$

$$R = \begin{bmatrix} \sigma_h^2 & 0 \\ 0 & \sigma_v^2 \end{bmatrix}, \qquad \mathbf{c} = \begin{bmatrix} 1 & 1 \end{bmatrix}^T$$

$$\hat{x}_{ML} = \frac{1/\sigma_h^2}{1/\sigma_h^2 + 1/\sigma_v^2} \, y_h + \frac{1/\sigma_v^2}{1/\sigma_h^2 + 1/\sigma_v^2} \, y_v$$

$$\mathrm{var}\left[\hat{x}_{ML}\right] = \frac{1}{1/\sigma_h^2 + 1/\sigma_v^2}$$

If the noise in the two sensors is equal, then the weights that you apply to the sensors are equal as well. This case is illustrated in the left column of the next figure. On the other hand, if the noise is larger for proprioception, your uncertainty is greater for that sensor and so you apply a smaller weight to its reading (right column of the next figure).

[Figure: two columns of panels. Left: equal uncertainty in vision and proprioception. Right: more uncertain of proprioception.]

Measuring the noise in a biological sensor

If one were to ask you to report the height of the object, of course you would not report your belief as a probability distribution. To estimate this distribution, Ernst and Banks acquired a psychometric function, shown in the lower part of the graph. To acquire this function, they provided their subjects with a standard object of height 5.5 cm. They then presented a second object of variable height and asked whether it was taller than the first object.
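Returning to the haptic and visual weighting formulas above, the scalar case is easy to check numerically. The following is a minimal sketch; the function name `combine_cues`, the readings, and the variances are hypothetical values chosen for illustration, not measurements from the study.

```python
def combine_cues(y_h, var_h, y_v, var_v):
    """Maximum likelihood combination of a haptic and a visual reading.

    Each reading is weighted by its inverse noise variance, normalized so
    that the two weights sum to one.
    """
    precision_h = 1.0 / var_h
    precision_v = 1.0 / var_v
    w_h = precision_h / (precision_h + precision_v)
    w_v = precision_v / (precision_h + precision_v)
    x_hat = w_h * y_h + w_v * y_v
    var_hat = 1.0 / (precision_h + precision_v)
    return x_hat, var_hat, w_h, w_v

# Equal noise in both senses: equal weights, and the variance is halved.
print(combine_cues(5.5, 1.0, 6.0, 1.0))

# Haptic noise variance four times the visual one: vision dominates.
print(combine_cues(5.5, 4.0, 6.0, 1.0))
```

In both cases the variance of the combined estimate is smaller than the variance of either sensor alone, matching the expression for the variance of the ML estimate.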
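If the subject represented the height of the standard object with a maximum likelihood estimate, then the probability of classifying the second object as being taller is simply the cumulative probability distribution. This is called a psychometric function. The point of subjective equality (PSE) is the height at which the probability function is at 0.5.

$$y_1 \sim N\left(\mu_1, \sigma_h^2\right), \qquad y_2 \sim N\left(\mu_2, \sigma_h^2\right)$$

$$\hat{\Delta} = y_2 - y_1, \qquad \hat{\Delta} \sim N\left(\Delta, 2\sigma_h^2\right), \qquad \Delta = \mu_2 - \mu_1$$

$$\Pr(y_2 > y_1) = \Pr\left(\hat{\Delta} > 0\right) = \int_0^{\infty} N\left(x; \Delta, 2\sigma_h^2\right) dx$$

[Figure: the densities $p(y)$ of $y_1$ and $y_2$, and the density $p(\hat{\Delta})$ with the area corresponding to $\Pr(\hat{\Delta} > 0)$.]

$$\mathrm{erf}(x) = \frac{2}{\sqrt{\pi}} \int_0^x e^{-t^2} dt$$

$$\mathrm{cdf}\left(x; \mu, \sigma^2\right) = \int_{-\infty}^{x} N\left(t; \mu, \sigma^2\right) dt = \frac{1}{2} \left[ 1 + \mathrm{erf}\left(\frac{x - \mu}{\sigma\sqrt{2}}\right) \right]$$

$$\Pr\left(\hat{\Delta} > 0\right) = 1 - \mathrm{cdf}\left(0; \Delta, 2\sigma_h^2\right)$$

[Figure: $\Pr(\hat{\Delta} > 0)$ plotted for $\sigma_h^2 = 1$, $\sigma_h^2 = 2$, and $\sigma_h^2 = 3$.]

The authors estimated that the noise in the haptic sense was four times larger than the noise in the visual sense:

$$\sigma_h^2 = 4\sigma_v^2$$

This implies that in integrating visual and haptic information about an object, the brain should "weigh" the visual information 4 times as much as the haptic information. To test for this, subjects were presented with a standard object for which the haptic information indicated one height and the visual information indicated another. Subjects would assign a weight of around 0.8 to the visual information and around 0.2 to the haptic information. To estimate these weights, the authors presented a second object (for which the haptic and visual information agreed) and asked which one was taller.

Summary

The "true" underlying process:

$$y^{*(i)} = \mathbf{w}^{*T} \mathbf{x}^{(i)}$$

What we measured:

$$y^{(i)} = y^{*(i)} + \varepsilon, \qquad \varepsilon \sim N(0, \sigma^2)$$

$$D = \left\{ \left(\mathbf{x}^{(1)}, y^{(1)}\right), \left(\mathbf{x}^{(2)}, y^{(2)}\right), \ldots, \left(\mathbf{x}^{(n)}, y^{(n)}\right) \right\}$$

Our model of the process:

$$y^{(i)} = \mathbf{w}^T \mathbf{x}^{(i)} + \varepsilon$$

Log-likelihood:

$$l(\mathbf{w}, \sigma) = \sum_{i=1}^{n} \log P\left(y = y^{(i)} \mid \mathbf{x}^{(i)}, \mathbf{w}, \sigma\right)$$

ML estimate of model parameters, given X:

$$\mathbf{w}_{ML} = \left(X^T X\right)^{-1} X^T \mathbf{y}$$

$$\sigma^2_{ML} = \frac{1}{n} (\mathbf{y} - X\mathbf{w})^T (\mathbf{y} - X\mathbf{w})$$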
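The two ML estimates in the summary can be computed directly for a small case. The sketch below assumes a straight-line model with an offset, so each row of X is [1, x] and the 2×2 normal equations can be solved by hand; the function name `fit_line_ml` and the data are made up for illustration.

```python
def fit_line_ml(xs, ys):
    """ML estimates for y = w0 + w1*x + eps, eps ~ N(0, sigma^2).

    Solves X^T X w = X^T y for the design matrix X whose rows are [1, x],
    then sets sigma^2 to the mean squared residual.
    """
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))

    # X^T X = [[n, sx], [sx, sxx]] and X^T y = [sy, sxy]; invert the 2x2 matrix.
    det = n * sxx - sx * sx
    w0 = (sxx * sy - sx * sxy) / det
    w1 = (n * sxy - sx * sy) / det

    # The ML variance estimate divides by n, not n - 1.
    residuals = [y - (w0 + w1 * x) for x, y in zip(xs, ys)]
    sigma2 = sum(r * r for r in residuals) / n
    return (w0, w1), sigma2

# Made-up data, roughly y = 1 + 2x (an assumption for illustration).
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [-1.1, 0.2, 0.9, 2.1, 2.9]

w_ml, sigma2_ml = fit_line_ml(xs, ys)
print(w_ml, sigma2_ml)
```

For models with more parameters the same two formulas apply, with a general linear solver in place of the hand-written 2×2 inverse.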