Entropy Coding of Compressed Sensed Video


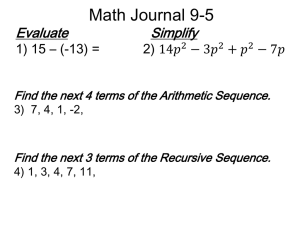

Entropy Coding of Video Encoded by Compressive Sensing

Yen-Ming Mark Lai, University of Maryland, College Park, MD (ylai@amsc.umd.edu)

Razi Haimi-Cohen, Alcatel-Lucent Bell Labs, Murray Hill, NJ (razi.haimi-cohen@alcatel-lucent.com)

August 11, 2011

Why is entropy coding important?

Transmission is digital.

[Figure: the bit string 101001001 passing through the channel, compared with the shorter string 1101 passing through the channel]

Encoder: Input video → Break video into blocks → Take compressed sensed measurements → Quantize measurements → Arithmetic encode → channel

Decoder: channel → Arithmetic decode → L1 minimization → Deblock → Output video

Statistics of Compressed Sensed blocks

[Figure: a row of + and - signs applied to input pixels (integers between 0 and 255) gives one CS measurement, 18 in the example; CS measurements are integers between -1275 and 1275]

Since CS measurements contain noise from pixel quantization, quantize at most to the standard deviation of this noise.

Standard deviation of noise from pixel quantization in CS measurements: $\sqrt{\frac{1}{12} N}$, where $N$ is the total number of pixels in the video block.

We call this minimal quantization step the “normalized” quantization step: $\sqrt{\frac{1}{12} N}$.

What to do with values outside range of quantizer?

[Figure: the quantizer range, with large values that rarely occur falling outside it]

CS measurements are “democratic” **

Each measurement carries the same amount of information, regardless of its magnitude.

** “Democracy in Action: Quantization, Saturation, and Compressive Sensing,” Jason N. Laska, Petros T. Boufounos, Mark A. Davenport, and Richard G. Baraniuk (Rice University, August 2009)
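The normalized quantization step described above can be checked numerically. The sketch below is not from the slides: it assumes a sensing row of random ±1 entries (Walsh-Hadamard rows also have ±1 entries), models each pixel's rounding error as uniform on [-0.5, 0.5), and uses the hypothetical helper names `normalized_step`, `cs_measurement`, and `simulated_noise_std`.

```python
import math
import random


def normalized_step(num_pixels: int) -> float:
    """Normalized quantization step sqrt(N / 12) for a block of N pixels."""
    return math.sqrt(num_pixels / 12.0)


def cs_measurement(signs, pixels):
    """One CS measurement: the signed sum of the block's pixel values."""
    return sum(s * p for s, p in zip(signs, pixels))


def simulated_noise_std(num_pixels: int, trials: int, rng: random.Random) -> float:
    """Empirical std of the pixel rounding noise carried into one measurement.

    Assumption: each pixel's rounding error is uniform on [-0.5, 0.5).
    """
    signs = [rng.choice((-1, 1)) for _ in range(num_pixels)]
    samples = []
    for _ in range(trials):
        noise = [rng.random() - 0.5 for _ in range(num_pixels)]
        samples.append(cs_measurement(signs, noise))
    mean = sum(samples) / trials
    var = sum((x - mean) ** 2 for x in samples) / (trials - 1)
    return math.sqrt(var)


if __name__ == "__main__":
    rng = random.Random(0)
    n = 1024  # small block so the simulation runs quickly
    print("predicted step:", normalized_step(n))
    print("simulated std: ", simulated_noise_std(n, 2000, rng))
```

With a few thousand trials the simulated standard deviation should land within a few percent of the predicted step, which is why quantizing more finely than this step mostly encodes noise.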
Values outside the quantizer range are discarded; the PSNR loss is small because such values are rare.

Simulations

2-second video broken into 8 blocks: 60 frames (2 seconds), 288 pixels × 352 pixels.

Parameters varied (PSNR and bit rate are measured for each):

• Ratio of CS measurements to number of input values: 0.15, 0.25, 0.35, 0.45
• “Normalized” quantization step multiplier: 1, 10, 100, 200, 500
• Range of quantizer (std dev of measured Gaussian distribution): 1.0, 1.5, 2.0, 3.0

Processing Time

• 6 cores, 100 GB RAM
• 80 simulations (5 ratios, 4 steps, 4 ranges)
• 22 hours total
  – 17 minutes per simulation
  – 8.25 minutes per second of video

Results

[Figure: Fraction of CS measurements outside quantizer range (2.7 million CS measurements)]

How often do large values occur theoretically?

[Figure: Gaussian density with the probability of each band on either side of the mean: 34.13% within one standard deviation, 13.59% between one and two, 2.14% between two and three, 0.135% beyond three]

How often do large values occur in practice?

2.7 million CS measurements: 0.037% (theoretical: 0.135%)

What to do with large values outside range of quantizer?

Discard values; small PSNR loss since occurrence is rare.

Discarding values comes at a bit rate cost

[Figure: rows of measurement bits, some marked “discard”]

[Figure: Bits Per Measurement, Bits Per Used Measurement; 9.4 bits marked]

Best Compression (Entropy) of Quantized Gaussian Variable X

$$H(\hat{X}) \approx h(X) - \log \sqrt{\tfrac{1}{12} N} \approx 9.4 \text{ bits}, \qquad h(X) = \tfrac{1}{2} \log 2 \pi e \sigma^2 \approx 17.4 \text{ bits}$$

where $\hat{X}$ is $X$ after uniform quantization.

Arithmetic coding is a viable option!

Fix quantization step, vary standard deviation

Faster arithmetic encoding, fewer measurements

[Figure: PSNR versus Bit Rate (10 x step size)]

Fixed bit rate, what should we choose?

[Figure annotations: 18.5 minutes, 121 bins; 2.1 minutes, 78 bins]

Fix standard deviation, vary quantization step

Increased arithmetic coding efficiency, more error

[Figure: PSNR versus Bit Rate (2 std dev)]

Fixed PSNR, which to choose?

[Figure labels: Master’s, Bachelor’s, PhD]

Demo

Future Work

• Tune decoder to take quantization noise into account.
• Make use of out-of-range measurements
• Improve computational efficiency of arithmetic coder

Questions?

Supplemental Slides (Overview of system)

Encoder: Input video → Break video into blocks → Take compressed sensed measurements → Quantize measurements → Arithmetic encode → channel

Decoder: channel → Arithmetic decode → L1 minimization → Deblock → Output video

For each block:

1) Output of arithmetic encoder
2) mean, variance
3) DC value
4) sensing matrix identifier

Supplemental Slides (Statistics of CS Measurements)

“News” Test Video Input

• Block specifications
  – 64 width, 64 height, 4 frames (16,384 pixels)
  – Input 288 width, 352 height, 4 frames (30 blocks)
• Sampling Matrix
  – Walsh Hadamard
• Compressed Sensed Measurements
  – 10% of total pixels = 1638 measurements

[Figures: Histograms of Compressed Sensed Samples (blocks 1-5, 6-10, 11-15, 16-20, 21-25, 26-30)]

[Figure: Histograms of Standard Deviation and Mean (all blocks)]

Supplemental Slides (How to Quantize)

Given a discrete random variable $X$, the fewest number of bits (entropy) needed to encode $X$ is given by:

$$H(X) = \sum_i p(x_i) \log \frac{1}{p(x_i)}$$

For a continuous random variable $X$, differential entropy is given by

$$h(X) = \int p(x) \log \frac{1}{p(x)} \, dx$$

Differential entropy of a Gaussian function of variance $\sigma^2$:

$$h(X) = \frac{1}{2} \log 2 \pi e \sigma^2$$

The Gaussian maximizes entropy for fixed variance, i.e. $h(X') \le h(X)$ for all $X'$ with fixed variance.

Approximate quantization noise as i.i.d. with uniform distribution

$$q \sim U\left(0, \frac{w^2}{12}\right)$$

where $w$ is the width of the quantization interval. Then,

$$\mathrm{Var}(M) = \sum_{i=1}^{N} \mathrm{Var}(\text{weighted } X_i) = \frac{w^2}{12} N$$

Variance from initial quantization noise

How much should we quantize?
$$\mathrm{Var}(M) = \sum_{i=1}^{N} \mathrm{Var}(\text{weighted } X_i) = \frac{w^2}{12} N$$

Since the input pixels are integers ($w = 1$) and the measurement matrix is Walsh-Hadamard (weights $\pm 1$):

$$\sum_{i=1}^{N} \mathrm{Var}(\text{weighted } X_i) = \frac{1}{12} N$$

Supplemental Slides (Entropy of arithmetic encoder)

What compression to expect from arithmetic coding?

Entropy of Quantized Random Variable

• $X$ - continuous random variable
• $\hat{X}$ - uniformly quantized $X$ (discrete)
• $\Delta$ - quantization step size

$$H(\hat{X}) \approx h(X) - \log \Delta$$

where $h(X)$ is the differential (continuous) entropy. For Gaussian $X$ and $\Delta = \sqrt{N}$:

$$H(\hat{X}) \approx h(X) - \log \sqrt{N} = \frac{1}{2} \log 2 \pi e \sigma^2 - \log \sqrt{N}$$

Entropy of Quantized Random Variable

• $\sigma^2$ - $5667^2$ (average variance of video blocks)
• $N$ - 16,384 pixels in video block

$$h(X) = \frac{1}{2} \log 2 \pi e \sigma^2 \approx 14.5 \text{ bits}$$

$$H(\hat{X}) \approx h(X) - \log \sqrt{N} \approx 7.5 \text{ bits}$$

7 bit savings from quantization

Supplemental Slides (Penalty for wrong distribution)

What is penalty for using wrong probability distribution?

Let $p$ and $q$ have normal distributions

$$p \sim N(\mu_p, \sigma_p^2), \qquad q \sim N(\mu_q, \sigma_q^2)$$

Then,

$$D(p \,\|\, q) = \frac{(\mu_p - \mu_q)^2 + \sigma_p^2 - \sigma_q^2}{2 \sigma_q^2} + \ln \frac{\sigma_q}{\sigma_p}$$

With the natural logarithm this value is in nats; dividing by $\ln 2$ gives bits.

Assume random variable $X$ has probability distribution $p$ but we encode with distribution $q$. Then

$$E\,L(X) < 1 + H(X) + D(p \,\|\, q)$$

where $E\,L(X)$ is the expected length of the wrong codeword, $H(X)$ is the entropy of the random variable, and $D(p \,\|\, q)$ is the Kullback-Leibler divergence (penalty).

Worst case scenario for video blocks of “News”

$$p \sim N(340, 2800^2), \qquad q \sim N(360, 11200^2)$$

$$D(p \,\|\, q) = \frac{(\mu_p - \mu_q)^2 + \sigma_p^2 - \sigma_q^2}{2 \sigma_q^2} + \ln \frac{\sigma_q}{\sigma_p} \approx 1.33 \text{ bits}$$

$$E\,L(X) < 1 + H(X) + D(p \,\|\, q) = H(X) + 2.33 \text{ bits}$$

Summary

What are statistics of measurements?
Gaussian with different means and variances

How much should we quantize?

By square root of total number of pixels in video block

What compression to expect from arithmetic coding?

• 14.5 bits/measurement (integer quantization)
• 7 bits/measurement (quantization)

What is penalty for using wrong probability distribution?

For “News”, must send extra 2 bits/measurement
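The figures quoted in the summary can be reproduced with a few lines of arithmetic. The sketch below is an illustration, not the authors' code: the function names are hypothetical, logarithms are taken base 2 so results are in bits, and the inputs are the values stated in the slides for the “News” video (σ = 5667, N = 16,384, worst-case p ~ N(340, 2800²) and q ~ N(360, 11200²)).

```python
import math


def gaussian_differential_entropy_bits(sigma: float) -> float:
    """h(X) = 0.5 * log2(2 * pi * e * sigma^2) for a Gaussian X."""
    return 0.5 * math.log2(2.0 * math.pi * math.e * sigma ** 2)


def quantized_entropy_bits(sigma: float, step: float) -> float:
    """Approximate entropy of a uniformly quantized Gaussian.

    Uses H ~= h(X) - log2(step), which is reasonable only when the
    step is small relative to sigma.
    """
    return gaussian_differential_entropy_bits(sigma) - math.log2(step)


def gaussian_kl_bits(mu_p: float, sigma_p: float, mu_q: float, sigma_q: float) -> float:
    """D(p || q) in bits for p = N(mu_p, sigma_p^2) and q = N(mu_q, sigma_q^2)."""
    nats = ((mu_p - mu_q) ** 2 + sigma_p ** 2 - sigma_q ** 2) / (2.0 * sigma_q ** 2)
    nats += math.log(sigma_q / sigma_p)
    return nats / math.log(2.0)  # convert nats to bits


if __name__ == "__main__":
    sigma, n = 5667.0, 16384
    h = gaussian_differential_entropy_bits(sigma)
    hq = quantized_entropy_bits(sigma, math.sqrt(n))
    kl = gaussian_kl_bits(340.0, 2800.0, 360.0, 11200.0)
    print(f"h(X)           = {h:.2f} bits")
    print(f"H(quantized X) = {hq:.2f} bits")
    print(f"D(p || q)      = {kl:.2f} bits")
```

Run as a script, this prints values of roughly 14.5, 7.5, and 1.3 bits, in line with the slides' 14.5 bits, 7.5 bits, and 1.33 bits; adding the 1-bit codeword overhead to the divergence gives the penalty of about 2 bits per measurement.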