Statistical analysis of

expression data:

Normalization, differential

expression and multiple testing

Jelle Goeman

Outline

Normalization

Expression variation

Modeling the log Fold change

Complex designs

Shrinkage and empirical Bayes (limma)

Multiple testing (False Discovery Rate)

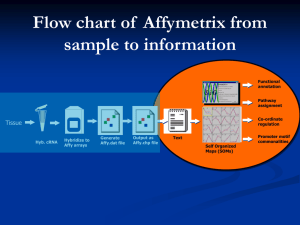

Measuring expression

Platforms

Microarrays

RNAseq

Common:

Need for normalization

Batch effects

Why normalization

Some experimental factors cannot be

completely controlled

Amount of material

Amount of degradation

Print tip differences

Quality of hybridization

Effects are systematic

Cause variation between samples and

between batches

What is normalization?

Normalization =

An attempt to get rid of unwanted systematic

variation by statistical means

Note 1: this will never completely succeed

Note 2: this may do more harm than good

Much better, but often impossible

Better control of the experimental conditions

How do normalization methods work?

General approach

1. Assume: data from an ideal experiment would

have characteristic A

E.g. mean expression is equal for each sample

Note: this is an assumption!

2. If the data do not have characteristic A, change

the data such that the data now do have

characteristic A

E.g. Multiply each sample’s expression by a factor

Example: quantile normalization

Assume:

“Most probes are not differentially expressed”

“As many probes are up and downregulated”

Reasonable consequence:

The distribution of the expression values is identical for

each sample

Normalization:

Make the distribution of expression values identical for

each sample

Quantile normalization in practice

Choose a target distribution

Typically the average of the measured distributions

All samples will get this distribution after normalization

Quantile normalization:

Replace the ith largest expression value in each sample by the

ith largest value in the target distribution

Consequence:

Distribution of expressions the same between samples

Expressions for specific genes may differ

Less radical forms of normalization

Make the means per sample the same

Make the medians the same

Make the variances the same

Loess curve smoothing

Same idea, but less change to the data

Overnormalizing

Normalizing can remove or reduce true

biological differences

Example: global increase in expression

Normalization can create differences that are not

there

Example: almost global increase in expression

Usually: normalization reduces unwanted

variation

Batch effects

Differences between batches are even stronger

than between samples in the same batch

Note: batch effects at several stages

Normalization is not sufficient to remove batcheffects

Methods available (comBat) but not perfect

Best: avoid batch effects if possible

Confounding by batch

Take care of batch-effects in experimental

design

Problem: confounding of effect of interest

by batch effects

Example: Golub data

Solution: balance or randomize

Expression variation

Differential expression

Two experimental conditions

Treated versus untreated

Two distinct phenotypes

Tumor versus normal tissue

Which genes can reliably be called differentially

expressed?

Also: continuous phenotypes

Which gene expressions are correlated with phenotype?

Variation in gene expression

Technical variation

Variation due to measurement technique

Variability of measured expression from experiment to

experiment on the same subject

Biological variation

Variation between subjects/samples

Variability of “true” expression between different

subjects

Total variation

Sum of technical and biological variation

Reliable assessment

Two samples always have different expression

Maybe even a high fold change

Due to random biological and technical variation

Reliable assessment of differential expression:

Show: fold change found cannot be explained by

random variation

Assessment of differential expression

Two interrelated aspects:

Fold change:

How large is the expression difference found?

P-value:

How sure are we that a true difference exists?

LIMMA:

Linear models for gene expression

Modeling variation

How does gene expression depend on

experimental conditions?

Can often be well modeled with linear models

Limma:

linear models for microarray analysis

Gordon Smyth, W. and E. Hall Institute, Australia

Multiplicative scale effects

Assumption: effects on gene expression work in

a multiplicative way (“fold change”)

Example: treatment increases gene expression

of gene MMP8 by a factor 2

“2-fold increase”

Treatment decreases gene expression of gene

MMP8 by a factor 2

“2-fold decrease”

Multiplicative scale errors

Assumption: variation on gene expression

works in a multiplicative way

A 2-fold increase by chance is just as

likely as a 2-fold decrease by chance

When true expression is 4, measuring 8 is

as likely as measuring 2

Working on the log scale

When effects are multiplicative, log-transform!

Usual in microarray analysis: log to base 2

Remember: log(ab) = log(a)+log(b)

2 fold increase = +1 to log expression

2 fold decrease = -1 to log expression

Log scale makes multiplicative effects symmetric

½ and 2 are not symmetric around 1 (= no change)

-1 and +1 are symmetric around 0 (= no change)

A simple linear model

Example: treated and untreated samples

Model separately for each gene

Log Expression of gene 1: E1

E1 = a + b * Treatment + error

a: intercept = average untreated logexpression

b: slope = treatment effect

Modeling all genes simultaneously

E1 = a1 + b1 * Treatment + error

E2 = a2 + b2 * Treatment + error

…

E20,000 = a20,000 + b20,000 * Treatment +

error

Same model, but

Separate intercept and slope for each gene

And separate sd sigma1, sigma2, … of error

Estimates and standard errors

Gene 1: Estimates for a1, b1 and sigma1

Estimate of treatment effect of gene 1

b1 is the estimated log fold change

standard error s.e.(b1) depends on sigma1

Regular t-test for H0: b1=0:

T = b1/s.e.(b1)

Can be used to calculate p-values.

Just like regular regression, only 20,000 times

Back to original scale

Log scale regression coefficient b1

Average log fold change

Back to a fold change: 2^b1

b1= 1 becomes fold change 2

b1 = -1 becomes fold change 1/2

Confounders

Other effects may influence gene

expression

Example: batch effects

Example: sex or age of patients

In a linear model we can adjust for such

confounders

Flexibility of the linear model

Earlier: E1 = a1 + b1 * Treatment + error

Generalize:

E1 = a1 + b1 * X + c1 * Y + d1 + Z + error

Add as many variables as you need.

Variance shrinkage

Empirical Bayes

So far: each gene on its own

20,000 unrelated models

Limma: exchange information between

genes

“Borrowing strength”

By empirical Bayes arguments

Estimating variance

For each gene a variance is estimated

Small sample size: variance estimate is

unreliable

Too small for some genes

Too large for others

Variance estimated too small: false positives

Variance estimated too large: low power

Large and small estimated variance

Gene with low variance estimate

Likely to have low true variance

But also: likely to have underestimated variance

Gene with high variance estimate

Likely to have high true variance

But also: likely to have overestimated variance

Limma’s idea:

Use information from other genes to assess whether

variance is over/underestimated

True and estimated variance

Variance model

Limma has a gene variance model

All gene’s variances are drawn at random

from an inverse gamma distribution

Based on this model:

Large variances are shrunk downwards

Small variances are shrunk upwards

Effect of variance shrinkage

Genes with large fold change and large

variance

More power

More likely to be significant

Genes with small fold change and small

variance

Less power

Less likely to be significant

Limma and sample size

Shrinkage of limma only effective for small

sample size (< 10 samples/group)

Added information of other genes

becomes negligeable if sample size gets

large

Large samples: Doing limma is the same

as doing regression per gene

Differential expression in RNAseq

RNAseq data: counts

Gene id

Y1

Y2

Y3

Y4

Y5

Y6

Y7

Y8

Y9

Y10

ENSG0000

0110514

69

178

101

58

101

31

165

108

70

1

ENSG0000

0086015

115

52

86

88

146

84

59

85

86

0

ENSG0000

0115808

285

190

467

295

345

532

369

473

423

5

ENSG0000

0169740

502

184

363

195

403

262

225

332

136

3

ENSG0000

0215869

0

7

0

0

0

0

0

2

0

0

ENSG0000

0261609

20

31

76

20

25

158

23

18

23

1

ENSG0000

0169744

488

529

470

505

1137

373

1392

3517

192

1

ENSG0000

0215864

1

0

0

0

0

0

0

0

0

0

Modelling count data

Distinguish three types of variation

Biological variation

Technical variation

Count variation

Count variation is important for lowexpressed genes

Generally biological variation most

important

Overdispersion

Modelling count data: two stages

1. Model how gene expression varies from

sample to sample

2. Model how the observed count varies by

repeated sequencing of the same sample

Stage 2 is specific for RNAseq

Two approaches

Approach 1: Model the count variation and the

between-sample variation

edgeR

Deseq

Approach 2: Normalize the count data and

model only the biological variation

Voom + limma

Approach 3: Model count variation only

Popular but very wrong!

Multiple testing

20,000 p-values

Fitting 20,000 linear models

Some variance shrinkage

Result:

20,000 fold changes

20,000 p-values

Which ones are truly differentially

expressed?

Multiple testing

Doing 20,000 tests: risk false positive

20,000 times

If 5% of null hypotheses is significant,

expect 1,000 significant by pure chance

How to make sure you can really trust the

results?

Bonferroni

Classical way of doing multiple testing

Call K the number of tests performed

Bonferroni: significant = p-value < 0.05/K

“Adjusted p-value”

Multiply all p-values by K, compare with 0.05

Advantages of Bonferroni

Familywise error control

=Probability of making any type I error < 0.05

With 95% chance, list of differentially

expressed genes has no errors

Very strict

Easy to do

Disadvantages of Bonferroni

Very strict

“No” false positives

Many false negatives

It is not a big problem to have a few false

positives

Do validation experiments later

False discovery rate

(Benjamini and Hochberg)

FDR = expected proportion of false

discoveries among all discoveries

Control of FDR at 0.05 means in the long

run experiments average about 5% type I

errors among the reported genes

Percentage: longer lists of genes are

allowed to have more errors

Benjamini and Hochberg by hand

1. Order the p-values small to large

Example: 0.0031, 0.0034, 0.02, 0.10, 0.65

2. Multiply the k-th p-value by m/k, where m is the number

of p-values, so

0.0031 * 5/1, 0.0034 * 5/2, 0.02 * 5/3, 0.10 * 5/4, 0.65 * 5/5

which becomes

0.0155, 0.0085, 0.033, 0.125, 0.65

3. If the p-values are no longer in increasing order, replace

each p-value by the smallest p-value that is later in the

list. In the example, we replace 0.0155 by 0.0085. The

final Benjamini-Hochberg adjusted p-values become

0.0085, 0.0085, 0.033, 0.125, 0.65

FDR warnings

FDR is susceptible to cheating

How to cheat with FDR?

Add many tests of known false null

hypotheses…

Result: reject more of the other null

hypotheses

Example limma results

Conclusion

Testing for differentially expressed genes

Repeated application of a linear model

Include all factors in the model that may

influence gene expression

Limma: additional step “borrowing strength”

Don’t forget to correct for multiple testing!