Slides - CARMA - Wayne State University

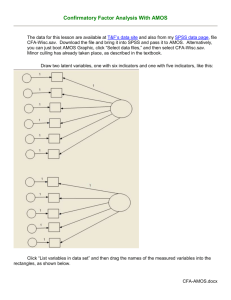

1 Causal Analysis and Model Confirmation
Dr. Larry J. Williams
Dean’s Research Chair and Professor of Management/Psychology
CARMA Director, Wayne State University
April 26, 2013: Honoring the Career Contributions of Professor Larry James

2 SEM: A Tool for Theory Testing
[Path diagram: exogenous latent variables LV1-LV3 (indicators x1-x9) and endogenous latent variables LV4-LV5 (indicators y1-y6)]
Figure 1. Example Composite Model (Includes Path and Measurement Models)

3
• Who is familiar with this type of model?
• Was not always the case
  - Larry’s workshops
  - His journal publications
• A cynical view of contemporary SEM practices
  - A parameter estimate represents a “causal” effect
  - A good fitting model is “the” model
• Fancy software/fast processors do NOT mean good science!
• Science would be improved by revisiting Larry’s work

4
• Key principles of model confirmation/disconfirmation
  - Models lead to predictions
  - Confirmation occurs if predictions match data
  - Confirmation implies support for a model, but does not prove it is THE model
• Today: towards improved causal analysis
  - Revisit key work on model comparisons and the role of the measurement model
  - Share results from two recent publications based on that work
  - Provide recommendations based on old and new

5 James, Mulaik, and Brett (1982)
• Conditions 1-7: appropriateness of theoretical models
  - Formal statement of theory via structural model
  - Theoretical rationale for causal hypotheses
  - Specification of causal order
  - Specification of causal direction
  - Self-contained functional equations
  - Specification of boundaries
  - Stability of structural model
• Condition 8: Operationalization of variables
• Conditions 9 and 10: Empirical confirmation of predictions
  - Not well understood by many management researchers:
  - Do models with good fit have significant paths?
  - Do models with bad fit have non-significant paths?

6
• Condition 9: Empirical support for functional equations
  - Tests whether causal parameters in model are different from zero
  - Implemented by comparing theoretical model to a restricted path (structural) model, with paths in the model forced to zero
  - Hoping for a significant chi-square difference test (rejecting null H0)
  - This is a global test; individual parameter tests are also important
  - *** Weighted in favor of confirming predictions (power)
• Condition 10: Fit of model to data
  - Tests whether paths left out of model are important
  - Implemented by comparing theoretical model to a less restricted model that adds omitted paths
  - Hoping to fail to reject the null that these paths are zero
  - Fit index: non-statistical assessment of model, practical judgment
  - *** The omitted parameter test saves considerable fuss, while the fit approach is more subtle

Mulaik, James, Van Alstine, Bennett, Lind, & Stilwell (1989)
• Proposed that goodness-of-fit indices are usually heavily influenced by the goodness of fit of the measurement model (foreshadowed in James, Mulaik, & Brett, 1982)
• And indices reflect, to a much lesser degree, the goodness of fit of the causal portion of the model
• Thus, it is possible to have models with overall GFI values that are high, but for which the causal portion and relations among latent variables are misspecified
• Introduced RNFI; demonstrated it with artificial data

8 Williams & O’Boyle (2011)
• Can the lack of focus of global fit indices like CFI and RMSEA on the path component lead to incorrect decisions about models, that is, models with adequate values but misspecified path components?
• Requires simulation analysis: know the “true model”, evaluate misspecified models, examine their CFI and RMSEA values
• Examined four representative composite models; two of these were examined using both 2 and 4 indicators per latent variable, which results in 6 examples
• Focus: what types of models have CFI and RMSEA values that meet the “gold standards”?

9 Ex. 1: Duncan et al. (1971)
[Path diagram: ξ1-ξ6, η1-η2]

Ex. 2: Ecob (1987)

Ex. 3: Mulaik et al. (1989)
[Path diagram: ξ1-ξ4, η1-η3]

Ex. 4: MacCallum (1986)
[Path diagram: ξ1-ξ3, η1-η2]

Results: Are there misspecified models with CFI > .95 or RMSEA < .08?
  - Example 1: MT-4
  - Example 2: MT-6
  - Example 3 (2 indicators): MT-5
  - Example 3 (4 indicators): MT-7
  - Example 4 (2 indicators): MT-2
  - Example 4 (4 indicators): MT-4
Conclusion: multiple indicator models with severe misspecifications can have CFI and RMSEA values that meet the standards used to indicate adequate fit. This creates problems for testing the path model component of a composite model and for evaluating theory.

14
• Also investigated two alternative indices based on previous work by James, Mulaik, & Brett (1982), Sobel and Bohrnstedt (1985), Williams & Holahan (1994), Mulaik et al. (1989), and McDonald & Ho (2002)
• Goal was to develop indices that more accurately reflect the adequacy of the path model component of a composite model
• NSCI-P is an extension of the RNFI of Mulaik et al. (1989); RMSEA-P was originally proposed by McDonald & Ho (2002)
• We calculated values for the correctly and incorrectly specified models presented earlier

15
• RMSEA-P: Remember that the null H0 is that MT is true; the hope is to not reject it
  - MT should have values < .08 (otherwise a Type I error); MT-x should have values > .08 (otherwise a Type II error)
  - Results: all MT had values < .08 (no Type I errors)
  - Misspecified models (MT-x): success (all values > .08) with 5 of 6 examples
  - Example 1: MT-2 and MT-1 retained (RMSEA-P < .08)
  - Other 5 examples: MT-1 and all other MT-x had RMSEA-P > .08

16 Notes:
  - Example 1 had an indicators per latent variable ratio of 1.25; all others > 2.0
  - Results with confidence intervals also supportive
• NSCI-P values for MT approached 1.0 in all examples (within rounding error)
  - Average drop in value in going from MT to MT-1 was .043

17 O’Boyle & Williams (2011)
• Of 91 articles in top journals, 45 samples had the information needed to do the RMSEA decomposition that yields RMSEA-P; these were used to examine with real data whether models with good composite fit have good path model fit
  - Mean value of RMSEA-P = .111
  - Only 3 < .05 and 15 < .08 (recommended values)
  - RMSEA-P confidence intervals (CI):
    - 19 (42%) have lower bound of CI > .08: reject close and reasonable fit, models bad
    - Only 5 (11%) have upper bound of CI < .08: fail to reject close fit, models good
• Possibilities/suspicions of James and colleagues supported

18
• What is the role of fit indices in the broader context of model evaluation?
• James, Mulaik, & Brett (1982) and Anderson & Gerbing (1988) stress MT comparison with MSS via χ² difference test
• Fit indices were supposed to supplement this model comparison as a test of constraints in the path model (former more subtle, latter saves considerable fuss)
  - Only 3 of 43 did this test
  - 30 were significant (MT includes significant misspecifications)
  - For 26 of these, CFI and RMSEA values met criteria: “good” fit conclusion, counter to χ² difference test
• Researchers need to save the fuss, be less subtle

19 Conclusions: Toward Improved Practices
• Use composite model fit indices (CFI, RMSEA), cutoffs?
• Use indicator residuals
• Include focus on path model relationships
  - Chi-square difference test: MSS - MT
  - Specialized fit indices (NSCI, RMSEA-P)
• Do not forget other model diagnostics
• Remember that a path left out is an H0 and should be supported theoretically; there are always alternative models

20
• And we should remember that scholars like Larry (and his collaborators) created the “path” for many of us who followed by: (a) helping create the discipline of organizational research methods, (b) providing educational leadership, (c) conducting exemplary scholarship to “model” our work after, and (d) supporting many others and helping them “fit” in as their careers developed
• On behalf of all of us, and CARMA, thanks!

21 Key References
• Anderson, J. C., & Gerbing, D. W. (1988). Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin, 103, 411-423.
• James, L. R., Mulaik, S. A., & Brett, J. (1982). Causal analysis: Models, assumptions, and data. Beverly Hills, CA: Sage Publications.
• McDonald, R. P., & Ho, M. H. R. (2002). Principles and practice in reporting structural equation analyses. Psychological Methods, 7, 64-82.
• Mulaik, S. A., James, L. R., Van Alstine, J., Bennett, N., Lind, S., & Stilwell, C. D. (1989). Evaluation of goodness-of-fit indices for structural equation models. Psychological Bulletin, 105, 430-445.
• O’Boyle & Williams (2011). Decomposing model fit: Measurement versus theory in organizational research using latent variables. Journal of Applied Psychology, 96(1), 1-12.
• Williams & O’Boyle (2011). The myth of global fit indices and alternatives for assessing latent variable relations. Organizational Research Methods, 14, 350-369.
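
Worked sketch: the two path-model checks recommended in the slides, the χ² difference test between MT and MSS and RMSEA-P, can be illustrated in a few lines of Python. This is a minimal sketch and not the authors' code. The slides do not state the RMSEA-P formula, so the form used here (the usual RMSEA expression applied to the χ² and df differences, with N - 1 in the denominator) is an assumption, and the function names and all input values are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variate with integer df.

    Uses the recurrence Q(a + 1, h) = Q(a, h) + h**a * exp(-h) / Gamma(a + 1)
    for the regularized upper incomplete gamma function, with h = x / 2.
    """
    if x <= 0:
        return 1.0
    h = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-h)              # Q(1, h) = exp(-h)
    else:
        a, q = 0.5, math.erfc(math.sqrt(h))   # Q(1/2, h) = erfc(sqrt(h))
    while a < df / 2.0 - 1e-9:
        q += h ** a * math.exp(-h) / math.gamma(a + 1.0)
        a += 1.0
    return q


def path_model_checks(chi2_t, df_t, chi2_ss, df_ss, n):
    """Compare theoretical model MT with saturated structural model MSS.

    ASSUMPTION: RMSEA-P is computed as
        sqrt(max(d_chi2 - d_df, 0) / (d_df * (n - 1)))
    which is not given in the slides.
    """
    d_chi2 = chi2_t - chi2_ss   # MT is more restricted, so its chi-square is larger
    d_df = df_t - df_ss
    if d_df <= 0:
        raise ValueError("MT must have more degrees of freedom than MSS")
    p_value = chi2_sf(d_chi2, d_df)
    rmsea_p = math.sqrt(max(d_chi2 - d_df, 0.0) / (d_df * (n - 1)))
    return {"d_chi2": d_chi2, "d_df": d_df, "p_value": p_value, "rmsea_p": rmsea_p}


# Hypothetical fit statistics, chosen only for illustration.
result = path_model_checks(chi2_t=250.0, df_t=100, chi2_ss=200.0, df_ss=95, n=301)
print(result)
```

With these made-up inputs the χ² difference is 50 on 5 df and the assumed RMSEA-P is well above .08, so both checks would flag the omitted paths even if the composite CFI and RMSEA looked acceptable.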