Probabilistic Robotics - Faculty of Computer Science

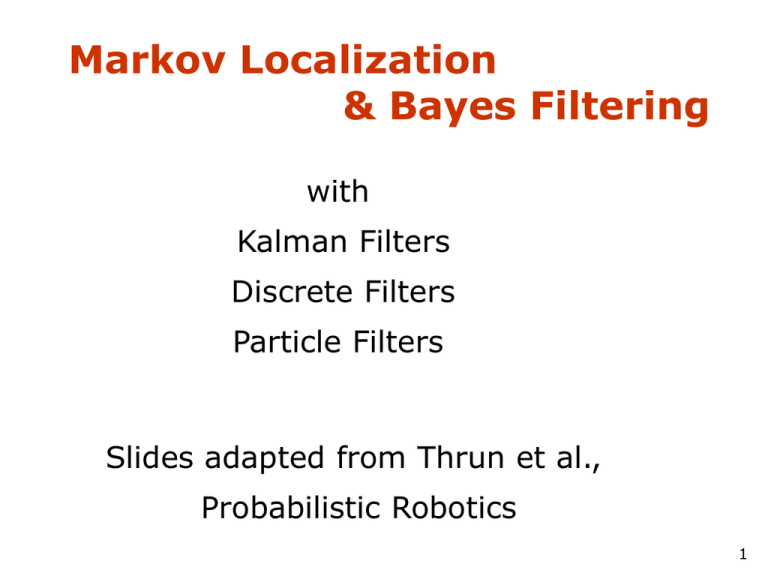

Markov Localization & Bayes Filtering
with Kalman Filters, Discrete Filters, and Particle Filters

Slides adapted from Thrun et al., Probabilistic Robotics

Control Scheme for Autonomous Mobile Robot

• Introduction
• Motion
• Perception
• Control
• Concluding Remarks
• LEGO Mindstorms

Dalhousie Fall 2011 / 2012 Academic Term
Autonomous Robotics, CSCI 6905 / Mech 6905 – Section 3
Faculties of Engineering & Computer Science

Control Scheme for Autonomous Mobile Robot – the plan

• Introduction
• Motion
• Perception
• Control
• Concluding Remarks
• LEGO Mindstorms

– Thomas will cover generalized Bayesian filters for localization next week
– Mae sets up the background for him, today, by discussing motion and sensor models as well as robot control
– Mae then follows up on Bayesian filters with a specific example, underwater SLAM


Markov Localization

The robot doesn’t know where it is. Thus, a reasonable initial belief about its position is a uniform distribution.

Markov Localization

A sensor reading is made (USE SENSOR MODEL) indicating a door at certain locations (USE MAP). This sensor reading should be integrated with the prior belief to update our belief (USE BAYES).

Markov Localization

The robot moves (USE MOTION MODEL), which adds noise to the belief.

Markov Localization

A new sensor reading (USE SENSOR MODEL) indicates a door at certain locations (USE MAP). This sensor reading should be integrated with the prior belief to update our belief (USE BAYES).

Markov Localization

The robot moves (USE MOTION MODEL), which adds noise to the belief. …

Bayes Formula

$$P(x, y) = P(x \mid y)\, P(y) = P(y \mid x)\, P(x)$$

$$\Rightarrow\quad P(x \mid y) = \frac{P(y \mid x)\, P(x)}{P(y)} = \frac{\text{likelihood} \cdot \text{prior}}{\text{evidence}}$$

Bayes Rule with Background Knowledge

$$P(x \mid y, z) = \frac{P(y \mid x, z)\, P(x \mid z)}{P(y \mid z)}$$

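Normalization

$$P(x \mid y) = \frac{P(y \mid x)\, P(x)}{P(y)} = \eta\, P(y \mid x)\, P(x)$$

$$\eta = P(y)^{-1} = \frac{1}{\sum_x P(y \mid x)\, P(x)}$$

Algorithm:

$$\forall x:\ \mathrm{aux}_{x|y} = P(y \mid x)\, P(x)$$

$$\eta = \frac{1}{\sum_x \mathrm{aux}_{x|y}}$$

$$\forall x:\ P(x \mid y) = \eta\, \mathrm{aux}_{x|y}$$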
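The three-step normalization algorithm can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: the function name `normalize_posterior` and the door example numbers are assumptions chosen for the demonstration.

```python
def normalize_posterior(prior, likelihood):
    """Return P(x | y) for every x, given P(x) and P(y | x) as dicts keyed by x."""
    # Step 1: unnormalized posterior aux[x] = P(y | x) * P(x)
    aux = {x: likelihood[x] * prior[x] for x in prior}
    # Step 2: eta = 1 / sum_x aux[x]; the sum is the evidence P(y)
    total = sum(aux.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under the prior")
    eta = 1.0 / total
    # Step 3: P(x | y) = eta * aux[x]
    return {x: eta * a for x, a in aux.items()}


# Hypothetical numbers: is a door open, given one sensor reading y?
prior = {"open": 0.5, "closed": 0.5}
likelihood = {"open": 0.6, "closed": 0.3}  # P(y | x)
posterior = normalize_posterior(prior, likelihood)
```

Note that P(y) is never specified separately; it falls out of the sum over the unnormalized terms, which is why the slides treat it as a normalizer.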

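Recursive Bayesian Updating

$$P(x \mid z_1, \ldots, z_n) = \frac{P(z_n \mid x, z_1, \ldots, z_{n-1})\, P(x \mid z_1, \ldots, z_{n-1})}{P(z_n \mid z_1, \ldots, z_{n-1})}$$

Markov assumption: $z_n$ is independent of $z_1, \ldots, z_{n-1}$ if we know $x$.

$$P(x \mid z_1, \ldots, z_n) = \frac{P(z_n \mid x)\, P(x \mid z_1, \ldots, z_{n-1})}{P(z_n \mid z_1, \ldots, z_{n-1})}$$

$$= \eta\, P(z_n \mid x)\, P(x \mid z_1, \ldots, z_{n-1})$$

$$= \eta_{1 \ldots n} \left[ \prod_{i=1 \ldots n} P(z_i \mid x) \right] P(x)$$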
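A small numerical check can make the recursion concrete: folding in one measurement at a time gives the same posterior as multiplying all likelihoods into the prior and normalizing once. The sketch below assumes a two-valued state and made-up likelihood values; the helper name `update` is hypothetical.

```python
def update(belief, likelihood):
    """One recursive step: P(x | z_1..z_n) = eta * P(z_n | x) * P(x | z_1..z_{n-1})."""
    aux = [l * b for l, b in zip(likelihood, belief)]
    eta = 1.0 / sum(aux)
    return [eta * a for a in aux]


prior = [0.5, 0.5]  # P(x) for x in (open, closed)
# Hypothetical per-measurement likelihoods P(z_i | x), one row per reading
readings = [[0.6, 0.3], [0.6, 0.3], [0.2, 0.7]]

# Recursive form: update the belief after every reading
belief = prior
for lik in readings:
    belief = update(belief, lik)

# Batch form: product of all likelihoods times the prior, normalized once
unnormalized = list(prior)
for lik in readings:
    unnormalized = [l * b for l, b in zip(lik, unnormalized)]
total = sum(unnormalized)
batch = [b / total for b in unnormalized]
```

Both `belief` and `batch` hold the same distribution up to floating-point rounding, which is the content of the last line of the derivation.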

Putting observations and actions together: Bayes Filters

• Given:
  • Stream of observations z and action data u: $d_t = \{u_1, z_1, \ldots, u_t, z_t\}$
  • Sensor model P(z|x).
  • Action model P(x|u,x’).
  • Prior probability of the system state P(x).
• Wanted:
  • Estimate of the state X of a dynamical system.
  • The posterior of the state is also called Belief: $Bel(x_t) = P(x_t \mid u_1, z_1, \ldots, u_t, z_t)$

Graphical Representation and Markov Assumption

$$p(z_t \mid x_{0:t}, z_{1:t-1}, u_{1:t}) = p(z_t \mid x_t)$$

$$p(x_t \mid x_{1:t-1}, z_{1:t-1}, u_{1:t}) = p(x_t \mid x_{t-1}, u_t)$$

Underlying Assumptions
• Static world
• Independent noise
• Perfect model, no approximation errors

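Bayes Filters

z = observation
u = action
x = state

$$Bel(x_t) = P(x_t \mid u_1, z_1, \ldots, u_t, z_t)$$

Bayes:
$$= \eta\, P(z_t \mid x_t, u_1, z_1, \ldots, u_t)\, P(x_t \mid u_1, z_1, \ldots, u_t)$$

Markov:
$$= \eta\, P(z_t \mid x_t)\, P(x_t \mid u_1, z_1, \ldots, u_t)$$

Total prob.:
$$= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_1, z_1, \ldots, u_t, x_{t-1})\, P(x_{t-1} \mid u_1, z_1, \ldots, u_t)\, dx_{t-1}$$

Markov:
$$= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, P(x_{t-1} \mid u_1, z_1, \ldots, u_t)\, dx_{t-1}$$

Markov:
$$= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, P(x_{t-1} \mid u_1, z_1, \ldots, z_{t-1})\, dx_{t-1}$$

$$= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$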

• Prediction

$$\overline{bel}(x_t) = \int p(x_t \mid u_t, x_{t-1})\, bel(x_{t-1})\, dx_{t-1}$$

• Correction

$$bel(x_t) = \eta\, p(z_t \mid x_t)\, \overline{bel}(x_t)$$

Bayes Filter Algorithm

$$Bel(x_t) = \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

1. Algorithm Bayes_filter( Bel(x), d ):
2.   $\eta = 0$
3.   If d is a perceptual data item z then
4.     For all x do
5.       $Bel'(x) = P(z \mid x)\, Bel(x)$
6.       $\eta = \eta + Bel'(x)$
7.     For all x do
8.       $Bel'(x) = \eta^{-1}\, Bel'(x)$
9.   Else if d is an action data item u then
10.    For all x do
11.      $Bel'(x) = \int P(x \mid u, x')\, Bel(x')\, dx'$
12.  Return Bel’(x)

Bayes Filters are Familiar!

$$Bel(x_t) = \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

• Kalman filters
• Particle filters
• Hidden Markov models
• Dynamic Bayesian networks
• Partially Observable Markov Decision Processes (POMDPs)

Probabilistic Robotics

Bayes Filter Implementations

Gaussian filters


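Gaussians

Univariate: $p(x) \sim N(\mu, \sigma^2)$:

$$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\!\left( -\frac{1}{2} \frac{(x - \mu)^2}{\sigma^2} \right)$$

Linear transform of Gaussians

$$X \sim N(\mu, \sigma^2),\quad Y = aX + b \quad\Rightarrow\quad Y \sim N(a\mu + b,\ a^2 \sigma^2)$$

Multivariate Gaussians

$$X \sim N(\mu, \Sigma),\quad Y = AX + B \quad\Rightarrow\quad Y \sim N(A\mu + B,\ A \Sigma A^T)$$

$$X_1 \sim N(\mu_1, \Sigma_1),\ X_2 \sim N(\mu_2, \Sigma_2) \quad\Rightarrow\quad p(X_1) \cdot p(X_2) \sim N\!\left( \Sigma_2 (\Sigma_1 + \Sigma_2)^{-1} \mu_1 + \Sigma_1 (\Sigma_1 + \Sigma_2)^{-1} \mu_2,\ \left( \Sigma_1^{-1} + \Sigma_2^{-1} \right)^{-1} \right)$$

• We stay in the “Gaussian world” as long as we start with Gaussians and perform only linear transformations.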
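For scalar Gaussians, the linear-transform rule and the product rule reduce to simple arithmetic. The following is an illustrative sketch with hypothetical function names (`linear_transform`, `fuse`) and made-up numbers; it covers only the one-dimensional case, where the covariance matrices become plain variances.

```python
def linear_transform(mu, var, a, b):
    """Mean and variance of Y = a*X + b when X ~ N(mu, var)."""
    return a * mu + b, a * a * var


def fuse(mu1, var1, mu2, var2):
    """Mean and variance of the normalized product of N(mu1, var1) and N(mu2, var2)."""
    # Each mean is weighted by the other Gaussian's variance
    mu = (var2 * mu1 + var1 * mu2) / (var1 + var2)
    # Precisions (inverse variances) add
    var = 1.0 / (1.0 / var1 + 1.0 / var2)
    return mu, var


# Hypothetical example: a vague estimate (variance 4) fused with a sharper one (variance 1)
fused_mu, fused_var = fuse(10.0, 4.0, 12.0, 1.0)
scaled_mu, scaled_var = linear_transform(fused_mu, fused_var, a=2.0, b=1.0)
```

The fused mean lies closer to the more certain estimate, and the fused variance is smaller than either input variance. The Kalman filter correction step relies on the same behavior.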

Discrete Kalman Filter

Estimates the state x of a discrete-time controlled process that is governed by the linear stochastic difference equation

$$x_t = A_t x_{t-1} + B_t u_t + \varepsilon_t$$

with a measurement

$$z_t = C_t x_t + \delta_t$$

Linear Gaussian Systems: Initialization

• Initial belief is normally distributed:

$$bel(x_0) = N(x_0;\ \mu_0, \Sigma_0)$$

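Linear Gaussian Systems: Dynamics

• Dynamics are a linear function of state and control plus additive noise:

$$x_t = A_t x_{t-1} + B_t u_t + \varepsilon_t$$

$$p(x_t \mid u_t, x_{t-1}) = N(x_t;\ A_t x_{t-1} + B_t u_t,\ R_t)$$

$$\overline{bel}(x_t) = \int \underbrace{p(x_t \mid u_t, x_{t-1})}_{\sim N(x_t;\ A_t x_{t-1} + B_t u_t,\ R_t)}\ \underbrace{bel(x_{t-1})}_{\sim N(x_{t-1};\ \mu_{t-1},\ \Sigma_{t-1})}\ dx_{t-1}$$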

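Linear Gaussian Systems: Observations

• Observations are a linear function of state plus additive noise:

$$z_t = C_t x_t + \delta_t$$

$$p(z_t \mid x_t) = N(z_t;\ C_t x_t,\ Q_t)$$

$$bel(x_t) = \eta\ \underbrace{p(z_t \mid x_t)}_{\sim N(z_t;\ C_t x_t,\ Q_t)}\ \underbrace{\overline{bel}(x_t)}_{\sim N(x_t;\ \bar{\mu}_t,\ \bar{\Sigma}_t)}$$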

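Kalman Filter Algorithm

1. Algorithm Kalman_filter( $\mu_{t-1}$, $\Sigma_{t-1}$, $u_t$, $z_t$ ):
2.   Prediction:
3.     $\bar{\mu}_t = A_t \mu_{t-1} + B_t u_t$
4.     $\bar{\Sigma}_t = A_t \Sigma_{t-1} A_t^T + R_t$
5.   Correction:
6.     $K_t = \bar{\Sigma}_t C_t^T (C_t \bar{\Sigma}_t C_t^T + Q_t)^{-1}$
7.     $\mu_t = \bar{\mu}_t + K_t (z_t - C_t \bar{\mu}_t)$
8.     $\Sigma_t = (I - K_t C_t)\, \bar{\Sigma}_t$
9.   Return $\mu_t$, $\Sigma_t$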
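The Kalman filter steps map almost line by line onto NumPy. The sketch below is one possible transcription, assuming NumPy is available. The argument order and the scalar example values are choices made for illustration, with R as the process noise covariance and Q as the measurement noise covariance, following the slides' notation.

```python
import numpy as np


def kalman_filter(mu, Sigma, u, z, A, B, C, R, Q):
    """One Kalman filter step; returns the updated mean and covariance."""
    # Prediction
    mu_bar = A @ mu + B @ u
    Sigma_bar = A @ Sigma @ A.T + R
    # Correction
    K = Sigma_bar @ C.T @ np.linalg.inv(C @ Sigma_bar @ C.T + Q)  # Kalman gain
    mu_new = mu_bar + K @ (z - C @ mu_bar)
    Sigma_new = (np.eye(len(mu)) - K @ C) @ Sigma_bar
    return mu_new, Sigma_new


# Hypothetical one-dimensional system written with 1x1 matrices
A = np.array([[1.0]])
B = np.array([[1.0]])
C = np.array([[1.0]])
R = np.array([[1.0]])  # process noise covariance
Q = np.array([[1.0]])  # measurement noise covariance

mu, Sigma = kalman_filter(
    mu=np.array([0.0]), Sigma=np.array([[1.0]]),
    u=np.array([1.0]), z=np.array([2.0]),
    A=A, B=B, C=C, R=R, Q=Q,
)
```

In this example the prediction moves the mean to 1 with variance 2, and the measurement at 2 pulls the mean two thirds of the way toward it while the variance shrinks. For larger systems, solving the linear system with `np.linalg.solve` is usually preferred over forming the explicit inverse.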

Kalman Filter Summary

• Highly efficient: Polynomial in measurement dimensionality k and state dimensionality n: $O(k^{2.376} + n^2)$
• Optimal for linear Gaussian systems!
• Most robotics systems are nonlinear!

Nonlinear Dynamic Systems

• Most realistic robotic problems involve nonlinear functions

$$x_t = g(u_t, x_{t-1})$$

$$z_t = h(x_t)$$

Linearity Assumption Revisited

Non-linear Function

EKF Linearization (1)

EKF Linearization (2)

EKF Linearization (3)

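EKF Linearization: First Order Taylor Series Expansion

• Prediction:

$$g(u_t, x_{t-1}) \approx g(u_t, \mu_{t-1}) + \frac{\partial g(u_t, \mu_{t-1})}{\partial x_{t-1}} (x_{t-1} - \mu_{t-1})$$

$$g(u_t, x_{t-1}) \approx g(u_t, \mu_{t-1}) + G_t\, (x_{t-1} - \mu_{t-1})$$

• Correction:

$$h(x_t) \approx h(\bar{\mu}_t) + \frac{\partial h(\bar{\mu}_t)}{\partial x_t} (x_t - \bar{\mu}_t)$$

$$h(x_t) \approx h(\bar{\mu}_t) + H_t\, (x_t - \bar{\mu}_t)$$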

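EKF Algorithm

1. Extended_Kalman_filter( $\mu_{t-1}$, $\Sigma_{t-1}$, $u_t$, $z_t$ ):
2.   Prediction:
3.     $\bar{\mu}_t = g(u_t, \mu_{t-1})$   (KF: $\bar{\mu}_t = A_t \mu_{t-1} + B_t u_t$)
4.     $\bar{\Sigma}_t = G_t \Sigma_{t-1} G_t^T + R_t$   (KF: $\bar{\Sigma}_t = A_t \Sigma_{t-1} A_t^T + R_t$)
5.   Correction:
6.     $K_t = \bar{\Sigma}_t H_t^T (H_t \bar{\Sigma}_t H_t^T + Q_t)^{-1}$   (KF: $K_t = \bar{\Sigma}_t C_t^T (C_t \bar{\Sigma}_t C_t^T + Q_t)^{-1}$)
7.     $\mu_t = \bar{\mu}_t + K_t (z_t - h(\bar{\mu}_t))$   (KF: $\mu_t = \bar{\mu}_t + K_t (z_t - C_t \bar{\mu}_t)$)
8.     $\Sigma_t = (I - K_t H_t)\, \bar{\Sigma}_t$   (KF: $\Sigma_t = (I - K_t C_t)\, \bar{\Sigma}_t$)
9.   Return $\mu_t$, $\Sigma_t$

with the Jacobians

$$H_t = \frac{\partial h(\bar{\mu}_t)}{\partial x_t}, \qquad G_t = \frac{\partial g(u_t, \mu_{t-1})}{\partial x_{t-1}}$$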
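The EKF differs from the Kalman filter only in where the nonlinear functions and their Jacobians enter. The sketch below assumes NumPy and passes `g`, `h` and their Jacobians `G`, `H` in as callables. The range-to-origin measurement model and all numbers are hypothetical, chosen only to exercise a nonlinear `h`.

```python
import numpy as np


def extended_kalman_filter(mu, Sigma, u, z, g, G, h, H, R, Q):
    """One EKF step; g/h are the nonlinear models, G/H return their Jacobians."""
    # Prediction: propagate the mean through g, the covariance through G
    mu_bar = g(u, mu)
    G_t = G(u, mu)
    Sigma_bar = G_t @ Sigma @ G_t.T + R
    # Correction: linearize h around the predicted mean
    H_t = H(mu_bar)
    K = Sigma_bar @ H_t.T @ np.linalg.inv(H_t @ Sigma_bar @ H_t.T + Q)
    mu_new = mu_bar + K @ (z - h(mu_bar))
    Sigma_new = (np.eye(len(mu)) - K @ H_t) @ Sigma_bar
    return mu_new, Sigma_new


# Hypothetical models: 2D position, additive motion, range measurement to the origin
def g(u, x):
    return x + u

def G(u, x):
    return np.eye(2)

def h(x):
    return np.array([np.hypot(x[0], x[1])])

def H(x):
    r = np.hypot(x[0], x[1])
    return np.array([[x[0] / r, x[1] / r]])

R = 0.01 * np.eye(2)    # process noise covariance
Q = np.array([[0.04]])  # measurement noise covariance

mu, Sigma = extended_kalman_filter(
    np.array([3.0, 4.0]), np.eye(2), np.array([1.0, 0.0]), np.array([5.5]),
    g, G, h, H, R, Q,
)
```

A single range reading only constrains the state along the line of sight to the origin, so the covariance shrinks in that direction and stays wide in the perpendicular one.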

Localization

“Using sensory information to locate the robot in its environment is the most fundamental problem to providing a mobile robot with autonomous capabilities.” [Cox ’91]

• Given
  • Map of the environment.
  • Sequence of sensor measurements.
• Wanted
  • Estimate of the robot’s position.
• Problem classes
  • Position tracking
  • Global localization
  • Kidnapped robot problem (recovery)

Landmark-based Localization

38

EKF Summary

• Highly efficient: Polynomial in measurement dimensionality k and state dimensionality n: $O(k^{2.376} + n^2)$
• Not optimal!
• Can diverge if nonlinearities are large!
• Works surprisingly well even when all assumptions are violated!

Kalman Filter-based System

• [Arras et al. 98]:

• Laser range-finder and vision

• High precision (<1cm accuracy)

[Courtesy of Kai Arras]

40

Multihypothesis Tracking

Localization With MHT

• Belief is represented by multiple hypotheses
• Each hypothesis is tracked by a Kalman filter
• Additional problems:
  • Data association: Which observation corresponds to which hypothesis?
  • Hypothesis management: When to add / delete hypotheses?
• Huge body of literature on target tracking, motion correspondence etc.

MHT: Implemented System (2)

Courtesy of P. Jensfelt and S. Kristensen

43

Probabilistic Robotics

Bayes Filter Implementations

Discrete filters

Piecewise Constant

Discrete Bayes Filter Algorithm

1. Algorithm Discrete_Bayes_filter( Bel(x), d ):
2.   $\eta = 0$
3.   If d is a perceptual data item z then
4.     For all x do
5.       $Bel'(x) = P(z \mid x)\, Bel(x)$
6.       $\eta = \eta + Bel'(x)$
7.     For all x do
8.       $Bel'(x) = \eta^{-1}\, Bel'(x)$
9.   Else if d is an action data item u then
10.    For all x do
11.      $Bel'(x) = \sum_{x'} P(x \mid u, x')\, Bel(x')$
12.  Return Bel’(x)
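The discrete filter can be tried out on a one-dimensional corridor with doors, in the spirit of the Markov localization example at the start of these slides. Everything specific below is an assumption made for illustration: the cyclic 10-cell map, the sensor probabilities `p_hit` and `p_miss`, and the motion noise `kernel`.

```python
def correct(bel, z, world, p_hit=0.8, p_miss=0.2):
    """Perceptual update: Bel'(x) = eta * P(z | x) * Bel(x)."""
    aux = [(p_hit if world[x] == z else p_miss) * bel[x] for x in range(len(bel))]
    eta = 1.0 / sum(aux)
    return [eta * a for a in aux]


def predict(bel, u, kernel):
    """Action update: Bel'(x) = sum over x' of P(x | u, x') * Bel(x') on a cyclic grid."""
    n = len(bel)
    new_bel = [0.0] * n
    for x_prev, b in enumerate(bel):
        for error, p in kernel.items():  # error = deviation from the commanded move
            new_bel[(x_prev + u + error) % n] += p * b
    return new_bel


def discrete_bayes_filter(bel, d, world, kernel):
    """Dispatch on the data item d = ("z", observation) or ("u", commanded cells)."""
    kind, value = d
    if kind == "z":
        return correct(bel, value, world)
    return predict(bel, value, kernel)


# Hypothetical corridor: doors at cells 0, 3 and 8
world = ["door", "wall", "wall", "door", "wall", "wall", "wall", "wall", "door", "wall"]
kernel = {-1: 0.1, 0: 0.8, 1: 0.1}  # undershoot, exact move, overshoot

bel = [1.0 / len(world)] * len(world)  # uniform initial belief
for d in [("z", "door"), ("u", 3), ("z", "door")]:
    bel = discrete_bayes_filter(bel, d, world, kernel)
best_cell = max(range(len(bel)), key=lambda x: bel[x])
```

After seeing a door, moving three cells, and seeing a door again, only a start at cell 0 is consistent with the map, so the belief peaks at cell 3.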

Grid-based Localization

47

Sonars and Occupancy Grid Map

Probabilistic Robotics

Bayes Filter Implementations

Particle filters


Sample-based Localization (sonar)

Particle Filters

• Represent belief by random samples
• Estimation of non-Gaussian, nonlinear processes
• Monte Carlo filter, Survival of the fittest, Condensation, Bootstrap filter, Particle filter
• Filtering: [Rubin, 88], [Gordon et al., 93], [Kitagawa 96]
• Computer vision: [Isard and Blake 96, 98]
• Dynamic Bayesian Networks: [Kanazawa et al., 95]

Importance Sampling

Weight samples: w = f / g

Importance Sampling with Resampling:
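As a rough sketch of the idea: samples are drawn from a proposal density g, weighted by w = f / g to account for the mismatch with the target density f, and then resampled in proportion to those weights. The Gaussian target, the uniform proposal, the sample counts and the function names below are all assumptions made for this illustration.

```python
import math
import random


def gaussian_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (math.sqrt(2.0 * math.pi) * sigma)


def importance_sample(n, rng, lo=-5.0, hi=5.0):
    """Draw n samples from a uniform proposal g and weight them by w = f / g."""
    samples = [rng.uniform(lo, hi) for _ in range(n)]
    g = 1.0 / (hi - lo)                                         # proposal density
    weights = [gaussian_pdf(x, 1.0, 1.0) / g for x in samples]  # target f = N(1, 1)
    total = sum(weights)
    return samples, [w / total for w in weights]                # normalized weights


rng = random.Random(0)
samples, weights = importance_sample(20000, rng)

# Weighted samples approximate expectations under the target f
mean_estimate = sum(w * x for x, w in zip(samples, weights))

# Resampling: draw with probability proportional to the weights,
# which yields (approximately) unweighted samples from f
resampled = rng.choices(samples, weights=weights, k=1000)
resampled_mean = sum(resampled) / len(resampled)
```

Both estimates should land near the target mean of 1, even though no sample was ever drawn from the target itself.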

Landmark Detection Example

Particle Filters

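Sensor Information: Importance Sampling

$$Bel(x) \leftarrow \alpha\, p(z \mid x)\, Bel^{-}(x)$$

$$w \leftarrow \frac{\alpha\, p(z \mid x)\, Bel^{-}(x)}{Bel^{-}(x)} = \alpha\, p(z \mid x)$$

Robot Motion

$$Bel^{-}(x) \leftarrow \int p(x \mid u, x')\, Bel(x')\, dx'$$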


Particle Filter Algorithm

1. Algorithm particle_filter( $S_{t-1}$, $u_{t-1}$, $z_t$ ):
2.   $S_t = \emptyset$, $\eta = 0$
3.   For $i = 1 \ldots n$   (Generate new samples)
4.     Sample index $j(i)$ from the discrete distribution given by $w_{t-1}$
5.     Sample $x_t^i$ from $p(x_t \mid x_{t-1}, u_{t-1})$ using $x_{t-1}^{j(i)}$ and $u_{t-1}$
6.     $w_t^i = p(z_t \mid x_t^i)$   (Compute importance weight)
7.     $\eta = \eta + w_t^i$   (Update normalization factor)
8.     $S_t = S_t \cup \{ \langle x_t^i, w_t^i \rangle \}$   (Insert)
9.   For $i = 1 \ldots n$
10.    $w_t^i = w_t^i / \eta$   (Normalize weights)
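The algorithm above can be sketched in plain Python for a one-dimensional robot. The motion and sensor models, their noise levels, the particle count and the test trajectory are hypothetical. The step-number comments refer to the numbered lines of the algorithm.

```python
import math
import random


def particle_filter(particles, weights, u, z, sample_motion, likelihood, rng):
    """One particle filter step; returns new particles and normalized weights."""
    n = len(particles)
    # Step 4: sample ancestors from the discrete distribution given by w_{t-1}
    ancestors = rng.choices(particles, weights=weights, k=n)
    new_particles, new_weights, eta = [], [], 0.0
    for x_prev in ancestors:
        x = sample_motion(x_prev, u, rng)  # step 5: sample from p(x_t | x_{t-1}, u)
        w = likelihood(z, x)               # step 6: importance weight p(z_t | x_t)
        eta += w                           # step 7: accumulate normalization factor
        new_particles.append(x)            # step 8: insert
        new_weights.append(w)
    if eta == 0.0:
        raise ValueError("all particles have zero likelihood")
    return new_particles, [w / eta for w in new_weights]  # steps 9-10


# Hypothetical models: noisy additive motion, Gaussian position sensor
def sample_motion(x_prev, u, rng):
    return x_prev + u + rng.gauss(0.0, 0.2)

def likelihood(z, x):
    return math.exp(-0.5 * ((z - x) / 0.5) ** 2)

rng = random.Random(0)
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # global uncertainty
weights = [1.0 / 500] * 500

true_x = 2.0
for _ in range(5):
    true_x += 1.0  # the robot moves one unit and measures its position
    particles, weights = particle_filter(
        particles, weights, 1.0, true_x, sample_motion, likelihood, rng)
estimate = sum(w * x for x, w in zip(particles, weights))
```

Drawing all ancestors with one `choices` call replaces the per-particle index sampling of step 4 and produces the same distribution over ancestors.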

Particle Filter Algorithm

$$Bel(x_t) = \eta\, p(z_t \mid x_t) \int p(x_t \mid x_{t-1}, u_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

draw $x_{t-1}^i$ from $Bel(x_{t-1})$
draw $x_t^i$ from $p(x_t \mid x_{t-1}^i, u_{t-1})$

Importance factor for $x_t^i$:

$$w_t^i = \frac{\text{target distribution}}{\text{proposal distribution}} = \frac{\eta\, p(z_t \mid x_t)\, p(x_t \mid x_{t-1}, u_{t-1})\, Bel(x_{t-1})}{p(x_t \mid x_{t-1}, u_{t-1})\, Bel(x_{t-1})} \propto p(z_t \mid x_t)$$

Motion Model Reminder

Proximity Sensor Model Reminder (laser sensor, sonar sensor)

Initial Distribution

After Incorporating Ten Ultrasound Scans

After Incorporating 65 Ultrasound Scans

Estimated Path

Localization for AIBO robots

Limitations

• The approach described so far is able to
  • track the pose of a mobile robot and to
  • globally localize the robot.
• How can we deal with localization errors (i.e., the kidnapped robot problem)?

Approaches

• Randomly insert samples (the robot can be teleported at any point in time).
• Insert random samples at a rate that depends on the average likelihood of the particles (the robot has been teleported with higher probability when the likelihood of its observations drops).
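One simple way to realize the second approach is sketched below. It is an assumption-laden illustration rather than the method used in the systems shown on these slides: the replacement probability `1 - avg_likelihood / expected_likelihood`, the fixed reference value `expected_likelihood`, and the helper names are all hypothetical.

```python
import random


def inject_random_particles(particles, avg_likelihood, expected_likelihood,
                            sample_random_pose, rng):
    """Replace particles by random poses more often as the average likelihood drops."""
    # Hypothetical rule: no injection while observations are as likely as expected,
    # full re-initialization when the average likelihood falls to zero
    p_random = max(0.0, 1.0 - avg_likelihood / expected_likelihood)
    return [sample_random_pose(rng) if rng.random() < p_random else x
            for x in particles]


def sample_random_pose(rng):
    """Hypothetical 1D map: any position between 0 and 10 is possible."""
    return rng.uniform(0.0, 10.0)


rng = random.Random(0)
tracked = [5.0] * 100  # particles concentrated on one pose

# Observations fit well: the particle set is left untouched
unchanged = inject_random_particles(tracked, 0.4, 0.4, sample_random_pose, rng)

# Observations no longer fit at all (e.g. after kidnapping): everything is re-drawn
relocalized = inject_random_particles(tracked, 0.0, 0.4, sample_random_pose, rng)
```

In practice the reference likelihood would itself have to be estimated, for example from a running average of past measurement likelihoods; the fixed value here only keeps the sketch short.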

Global Localization

70

Kidnapping the Robot

71

Summary

• Particle filters are an implementation of recursive Bayesian filtering.
• They represent the posterior by a set of weighted samples.
• In the context of localization, the particles are propagated according to the motion model.
• They are then weighted according to the likelihood of the observations.
• In a re-sampling step, new particles are drawn with a probability proportional to the likelihood of the observation.