csci5221-youtube-netflix

Large-scale Internet Video Delivery:

YouTube & Netflix Case Studies

• Rationale:

– Why Large Content Delivery System

– YouTube & Netflix as two case studies

• Reverse-engineering YouTube Delivery Cloud

– Briefly, Pre-Google Restructuring (up to circa 2008)

– Google’s New YouTube Architectural Design

• Unreeling Netflix Video Streaming Service

– Cloud-sourcing: Amazon Cloud Services & CDNs

CSci5221: YouTube and Netflix Case Studies

Content is the King!

• This lecture focuses primarily on video content

• Two large-scale online video services as case studies:

– YouTube: a globally popular (excluding China), “user-generated” (short) video sharing site

– Netflix: feature-length video streaming service (movies & TV shows), mostly in North America

Why YouTube?

World’s largest (mostly user-generated) global (excl. China) video sharing service

– More than 13 million hours of video were uploaded during 2010, and 35 hours of video are uploaded every minute

– More video is uploaded to YouTube in 60 days than the 3 major US networks created in 60 years

– 70% of YouTube traffic comes from outside the US

– YouTube reached over 700 billion playbacks in 2010

– YouTube mobile gets over 100 million views a day

• By some estimates, 5%-10% of global (inter-AS) Internet traffic (2007-2009 estimate)

– up to 20% of HTTP traffic (2007 estimate)

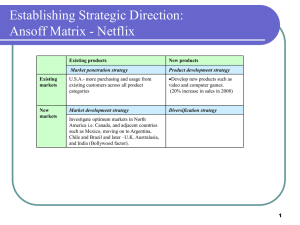

What Makes Netflix Interesting?

• Commercial, feature-length movies and TV shows

– and not free; subscription-based

• Nonetheless, Netflix is huge!

– ~25 million subscribers

– ~20,000 titles (and growing)

– consumes 30% of peak-time downstream bandwidth in North America

• Netflix has an interesting (cloud-sourced) architecture

Why Video Delivery Systems?

• Unlike other content, video exerts huge loads on networks!

• A video delivery system is a complex distributed system!

– Not only networks, but also streaming servers, storage, …

– Backend data centers, distributed cache/edge server infrastructure

– User interests and demand drive the system: socio-technical interplay, business models & economics

• Specifically, how does such a system scale?

– How complex is the system: which system components are involved?

– How does it handle geographically diverse user requests?

– Where are the caches? How does it handle cache misses?

– How does it perform load-balancing and handle/avoid hotspots?

Why Video Delivery Systems?

• Specifically, how does such a system scale?

– How does it adapt to network conditions, e.g., bandwidth changes?

– How does it meet user performance expectations such as quality of video or low latency?

• Of both socio-technical significance/impact and purely academic interest

– Interplay with ISPs: traffic engineering & routing policy issues, etc.

How does YouTube’s or Netflix’s huge traffic affect my traffic matrix?

How will my traffic matrix change if YouTube or Netflix traffic dynamics change?

– Network (or Internet) economics: cooperation and competition among various players involved

Why Video Delivery Systems …

• Of both socio-technical significance/impact and purely academic interest

– Network (or Internet) economics: cooperation and competition among various players involved

– Design of Internet-scale large content distribution

How does a "best-practice" system work? Key design principles?

What can be improved upon? Limitations of Internet architecture?

Insights into “future” content-centric network design?

– Shedding light on user interests and social behavior

• We will come back to some of these points in the latter part of this course

Part I:

“Reverse-Engineering”

YouTube Delivery Cloud

“Reverse-Engineering”

YouTube Delivery Cloud

• First: we will quickly give an overview of the original YouTube architecture

– Fairly simple: uses 7 data centers + CDN

– Questions: how to perform load balancing?

• Interplay with ISPs: impact on ISP traffic matrix

– See [A+:IMC10] for details

• Focus on: the current YouTube architecture after Google “re-structuring” (see [A+:YouTube])

– Active measurement platform based on PlanetLab, etc.

– Infer and deduce how Google re-structures YouTube

– Understand the YouTube delivery dynamics, e.g., how Google handles load-balancing, cache misses, etc.

YouTube Video Delivery Basics

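[Figure: message exchange over the Internet between the user, the front-end web servers, and the (front-end) video servers]

1. HTTP GET request for video URL (user to front-end web servers)

2. HTTP reply containing html to construct the web page and a link to stream the FLV file (front-end web servers to user)

3. HTTP GET request for FLV stream (user to video servers)

4. HTTP reply: FLV stream (video servers to user)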

www.youtube.com

Embedded Flash Video

Original YouTube

Video Delivery Architecture

Prior to Google Re-structuring

A Fairly Simple Architecture!

• Three (logical) web servers (IP addresses)

– located near San Jose, CA

• Seven data centers consisting of thousands of flash video servers

– located across continental US only

• In addition, uses Limelight CDN to help video distribution

YouTube Data Center Locations

(prior to Google Re-structuring)

[Figure: YouTube data center locations]

Traffic Dynamics and Interplay

between ISP and Content Provider (CP)

[Figure: traffic from YouTube to clients (via ISP) and traffic from clients (via ISP) to YouTube, shown across ISP-X’s PoPs (LA, DC, NYC), a peer ISP, and a customer ISP]

It was reported that by 2007 YouTube was paying $1 million/month or more to ISPs to carry its video traffic to users!

Load Balancing Strategies

• Random Data Center Selection

+ Pros: simple, no information is required

- Cons: uneven load distribution if data centers have different “capacities”

• Location-Based Approach

+ Pros: smaller latency, less traffic in ISP backbone

+ used by a large number of content providers and CDNs for serving content

- Cons: potentially unbalanced traffic distribution

• (Location-Agnostic) Resource-Based Approach

+ Pros: better division of traffic among multiple data centers based on the capacity of each data center

- Cons: latency

• Hybrid (location + resource) Approach

+ Pros: can balance servers and user latency (to some extent)

- Cons: more complex mechanisms
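
The four strategies above can be sketched in a few lines of Python. This is only an illustration: the data center names, capacities, latencies, and the 50 ms cutoff in the hybrid variant are all made up, and nothing here is taken from YouTube's actual implementation.

```python
import random

# Hypothetical data centers (names, capacities and latencies are invented).
DATA_CENTERS = {
    "dc-a": {"capacity": 100, "latency_ms": 10},
    "dc-b": {"capacity": 300, "latency_ms": 40},
    "dc-c": {"capacity": 600, "latency_ms": 80},
}

def pick_random(dcs, rng):
    """Random selection: needs no information about the data centers."""
    return rng.choice(sorted(dcs))

def pick_location_based(dcs):
    """Location-based: always the lowest-latency data center."""
    return min(dcs, key=lambda name: dcs[name]["latency_ms"])

def pick_resource_based(dcs, rng):
    """Resource-based: probability proportional to capacity, ignoring latency."""
    names = sorted(dcs)
    weights = [dcs[name]["capacity"] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]

def pick_hybrid(dcs, rng, max_latency_ms=50):
    """Hybrid: capacity-proportional choice among 'close enough' data centers."""
    nearby = {n: d for n, d in dcs.items() if d["latency_ms"] <= max_latency_ms}
    return pick_resource_based(nearby or dcs, rng)
```

The hybrid variant falls back to all data centers when none is within the latency cutoff, which is one of several reasonable design choices.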

YouTube Load Balancing Strategy

among Data Centers?

Looking at traffic from clients to YouTube video servers (or rather data centers)

• Group clients based on “locations”

– PoPs or IP prefixes

• Construct the “location” (PoP/IP prefix) to YouTube data center traffic matrix (M)

• The properties (e.g., rank) of this matrix can tell us what load-balancing strategy YouTube uses:

– if random load-balancing is used, what property does M have?

– if latency-aware (or location-aware) load-balancing is used, what property does M have?
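
One way to think about these questions, sketched in Python with invented numbers: if every client location splits its traffic across data centers in the same fixed proportions (as random or capacity-proportional selection would), each row of M is a multiple of the same vector, so M has rank (approximately) 1. Under location-aware selection, each location sends most traffic to its nearest data center, and M has higher rank. The demands and weights below are hypothetical.

```python
def proportional_matrix(client_demand, dc_weights):
    """M[i][j] = demand of location i times the fixed weight of data center j."""
    return [[demand * weight for weight in dc_weights] for demand in client_demand]

def is_rank_one(matrix, tol=1e-9):
    """A nonzero matrix has rank 1 exactly when every 2x2 minor vanishes."""
    rows, cols = len(matrix), len(matrix[0])
    for i in range(rows):
        for j in range(i + 1, rows):
            for a in range(cols):
                for b in range(a + 1, cols):
                    minor = matrix[i][a] * matrix[j][b] - matrix[i][b] * matrix[j][a]
                    if abs(minor) > tol:
                        return False
    return True

# Hypothetical demand from three client locations, split 1:3:6 across data centers.
M_PROPORTIONAL = proportional_matrix([100, 250, 40], [1, 3, 6])

# Hypothetical location-aware case: each location uses only its nearest data center.
M_LOCATION_AWARE = [[100, 0, 0], [0, 250, 0], [0, 0, 40]]
```

Measured matrices are noisy, so in practice one would look at how quickly the singular values decay rather than test for exact rank 1.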

YouTube Load-Balancing Strategy

Conclusion:

YouTube likely employs some form of proportional load-balancing => “resource-based” load-balancing

• Proportionality is approximately similar to # of video servers seen in each data center

Another implication about pre-Google architecture:

• YouTube videos are replicated at all 7 locations!

YouTube Video Delivery Architecture

after Google Re-structuring

How did Google engineers re-design YouTube to leverage

• Google’s huge infrastructure

– including globally distributed “edge servers & caches”

• and Google’s engineering talents

Especially, how to handle

• Enormous scale -- both in terms of #videos and #users

• Video delivery dynamics

– hot and cold videos, cache misses, etc.

– load-balancing among cache locations, servers, etc.

– relations with ISPs

Active Measurement Platform

• 470 PlanetLab nodes

– video playback using emulator

– DNS resolution

– proxy servers

– ping measurement

• 840 Open DNS resolvers

– DNS resolution

• 48-node local compute cluster

– video playback using standard browser

– data analysis

Google’s New YouTube

Video Delivery Architecture

Three components

• Videos and video id space

• Physical cache hierarchy

– three tiers: primary, secondary, & tertiary

– primary caches: “Google locations” vs. “ISP locations”

• Layered organization of namespaces representing “logical” video servers

– five “anycast” namespaces

– two “unicast” namespaces

Implications:

a) YouTube videos are not replicated at all locations!

b) only replicated at (5) tertiary cache locations

c) Google likely utilizes some form of location-aware load-balancing (among primary cache locations)

YouTube Video Id Space

• Each YouTube video is assigned a unique id

e.g., http://www.youtube.com/watch?v=tObjCw_WgKs

• Each video id is an 11-character string

• first 10 chars can be any alpha-numeric values [0-9, a-z, A-Z] plus “-” and “_”

• last char can be one of the 16 chars {0, 4, 8, ..., A, E, ...}

Video id space size: 64^11 (nominally; 64^10 × 16 = 2^64 if the last character is limited to 16 values)

Video ids are randomly distributed in the id space
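
The id format can be checked with a short Python sketch. One assumption is made loudly here: the 16 allowed last characters are taken to be every fourth symbol of the 64-character alphabet in the order A-Z, a-z, 0-9, “-”, “_”, which is consistent with the {0, 4, 8, ..., A, E, ...} listed on the slide but is not confirmed by it.

```python
import string

# 64-symbol alphabet; the ordering is an assumption made for this sketch.
ALPHABET = string.ascii_uppercase + string.ascii_lowercase + string.digits + "-_"

# Assumed set of 16 valid last characters: every fourth symbol (A, E, I, ..., 0, 4, 8).
LAST_CHARS = set(ALPHABET[::4])

def is_plausible_video_id(video_id):
    """Check length, alphabet of the first 10 chars, and the restricted last char."""
    return (
        len(video_id) == 11
        and all(ch in ALPHABET for ch in video_id[:10])
        and video_id[10] in LAST_CHARS
    )

NOMINAL_SPACE = 64 ** 11          # 11 unrestricted characters
RESTRICTED_SPACE = 64 ** 10 * 16  # last character limited to 16 values
```

With the restriction on the last character, the usable space is exactly 2^64, i.e. the ids behave like 64-bit numbers.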

Physical Cache Hierarchy & Locations

~ 50 cache locations

• ~40 primary locations

• including ~10 non-Google ISP locations

• 8 secondary locations

• 5 tertiary locations

Geo-locations using

• city codes in unicast hostnames, e.g., r1.sjc01g01.c.youtube.com

• low latency from PlanetLab nodes (< 3ms)

• clustering of IP addresses using latency matrix

[Figure legend: P: primary, S: secondary, T: tertiary]

Layered Namespace Organization

Two types of namespaces

– Five “anycast” namespaces

• lscache: “visible” primary ns

• each ns representing fixed

# of “logical” servers

• logical servers mapped to

physical servers via DNS

– 2 “unicast” namespaces

• rhost: google locations

• rhostisp: ISP locations

• mapped to a single server

Static Mappings between and among

Video Id Space and Namespaces

– Video id’s are mapped to anycast

namespaces via a fixed mapping

• video id space “divided” evenly

among hostnames in each

namespace

– Mappings among namespaces are

also fixed

• 1-to-1 between primary and

secondary (192 hostnames

each)

• 3-to-1 between primary or

secondary to tertiary (64

names)

– Advantages:

• URLs for videos can be generated w/o info about physical video servers

• thus web servers can operate “independently” of video servers
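
A minimal sketch of such a fixed mapping, in Python. The slides only state that the mapping is fixed, that ids are divided evenly among hostnames, and the 192/192/64 hostname counts; the SHA-1 based bucket function and the hostname labels below are hypothetical stand-ins, not the scheme Google uses.

```python
import hashlib

NUM_PRIMARY = 192    # primary and secondary namespaces: 192 hostnames each
NUM_TERTIARY = 64    # tertiary namespace: 64 hostnames (3-to-1 from primary)

def logical_index(video_id):
    """Map a video id to one of 192 logical servers (hypothetical hash)."""
    digest = hashlib.sha1(video_id.encode("ascii")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_PRIMARY

def hostnames_for(video_id):
    """Derive hostnames in every tier from the video id alone."""
    primary = logical_index(video_id)
    return {
        "primary": f"primary-{primary}.example",      # hypothetical label
        "secondary": f"secondary-{primary}.example",  # 1-to-1 with primary
        "tertiary": f"tertiary-{primary // 3}.example",  # 3-to-1
    }
```

Because the hostnames depend only on the video id, a web server can generate the video URL without knowing which physical server will answer; DNS resolves the logical name later.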

YouTube Video Delivery Dynamics:

Summary

• Locality-aware DNS resolution

• Handling load balancing & hotspots

– DNS change

– Dynamic HTTP redirection

– local vs. higher cache tier

• Handling cache misses

– Background fetch

– Dynamic HTTP redirection

Logical to Physical Server Mappings

via Location-Aware DNS Resolution

• Anycast hostnames

resolve to different

IPs from different

location

• YouTube DNS

generally returns

“closest” IP

– in terms of latency

Logical to Physical Server Mappings

via Location-Aware DNS Resolution

• YouTube may introduce

additional IPs at busy hours

• Can use multiple IPs

continuously per location

Cache Misses and Load Balancing

• Cache misses mostly handled via

“background fetch”

– 3 ms vs 30+ ms

• Sometimes via HTTP redirections

– also used for load-balancing

– redirections follow the cache hierarchy

• cache misses in an ISP primary cache location are re-directed to a Google primary cache location

– up to 9 re-directions

(tracked via a counter in URL)
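
The redirection path can be sketched as a walk up the cache hierarchy with a bounded counter. This Python sketch covers only the HTTP-redirection case (not background fetch), and the location names and cache contents are hypothetical; only the ordering of tiers and the limit of 9 redirections come from the slide.

```python
MAX_REDIRECTS = 9  # limit from the slide; tracked via a counter in the URL

# Hypothetical hierarchy: where a miss at each location is redirected.
PARENT = {
    "isp-primary": "google-primary",
    "google-primary": "secondary",
    "secondary": "tertiary",
}

def serve(video_id, start, caches):
    """Follow redirections until a location holding the video is found.

    Returns (serving_location, number_of_redirections).
    """
    location, redirects = start, 0
    while video_id not in caches[location]:
        next_location = PARENT.get(location)
        if next_location is None or redirects >= MAX_REDIRECTS:
            raise LookupError(f"{video_id} not found after {redirects} redirections")
        location, redirects = next_location, redirects + 1
    return location, redirects
```

For example, a video cached only at the tertiary tier and requested at an ISP primary location is served after three redirections in this model.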

HTTP Redirections

Cache misses vs Load Balancing

• Redirection probability for “hot” vs “cold” videos

• Redirections via unicast names are used mostly

among servers within same location:

– likely for load balancing

YouTube Study Summary

• YouTube: largest global video sharing site

• “Reverse-Engineering” YouTube Delivery Cloud

– comparative study of pre- vs. post-Google restructuring

• Google’s YouTube design provides an interesting case

study of large-scale content delivery system

– employs a combination of various “tricks” and mechanisms

to scale with YouTube size & handle video delivery

dynamics

– represents some “best practice” design principles?

• [Video quality adaptation: users have to select

manually!]

• Interplay with ISPs and socio-technical interplay

• Lessons for “future” content-centric network design

– shed light on limitations of today’s Internet architecture

Part II:

Unreeling Netflix Architecture

and Tales of Three CDNs

What Makes Netflix Interesting?

• Commercial, feature-length movies and TV shows

– and not free; subscription-based

• Nonetheless, Netflix is huge!

– 25 million subscribers and ~20,000 titles (and growing)

– consumes 30% of peak-time downstream bandwidth in North America

• A prime example of cloud-sourced architecture

– Maintains only a small “in-house” facility for key functions, e.g., subscriber management (account creation, payment, …)

– Majority of functions are sourced to Amazon cloud (EC2/S3): user authentication, video search, video storage, …

– DNS service is sourced to UltraDNS

– Leverages multiple CDNs for video delivery: Akamai, Level 3 and Limelight

• Can serve as a possible blue-print for future system design

– (nearly) “infrastructure-less” content delivery -- from Netflix’s POV

– minimizes capex/opex of infrastructure, but may lose some “control” in terms of system performance, …

What are the Key Questions?

• What is the overall Netflix architecture?

– which components are out/cloud-sourced?

– namespace management & interaction with CDNs

• How does it perform rate adaptation & utilize multiple CDNs?

– esp., CDN selection strategy

• Does Netflix offer the best user experience based upon the bandwidth constraints? If not, what can be done?

Challenges in conducting measurement & inference:

Unlike YouTube, Netflix is not a free, “open” system:

• only subscribers can access videos

• most communications are encrypted; authentication, DRM, …

Measurement Infrastructure

And Basic Measurement Methodology

•Create multiple user/subscriber accounts

•13 “client” computers located in different cities in US

5 different ISPs: residential/corporate networks

non-proxy mode and proxy mode (via PlanetLab)

play movies and capture network traces (via tcpdump)

e.g., to discover the communication entities and resources

involved

“tamper data” plugin to read manifest files

conduct experiments to study system behavior & dynamics

e.g., how Netflix selects CDNs & adapts to changes in network

bandwidth

Also conducted measurements/experiments via mobile

devices (iPhone/iPad, Nexus 1 Android Phone) over WiFi

Netflix Architecture

• Netflix has its own “data center” for certain crucial operations

(e.g., user registration, billing, …)

• Most web-based user-video interaction, computation/storage

operations are cloud-sourced to Amazon AWS

• Video delivery is out/cloud-sourced to 3 CDNs

• Users need to use MS Silverlight player for video streaming

Netflix Architecture: Video Streaming

• Manifest file comes from Netflix's control server

• Periodic updates are sent to the control server

• Frequent downloads in the beginning, becomes

periodic after buffer is filled

Netflix Videos and Video Chunks

• Netflix uses a numeric ID to identify each movie

– IDs are variable length (6-8 digits): 213530, 1001192,

70221086

– video IDs do not seem to be evenly distributed in the

ID space

– these video IDs are not used in playback operations

• Each movie is encoded in multiple quality levels, each is

identified by a numeric ID (9 digits)

– various numeric IDs associated with the same movie appear to

have no obvious relations

Netflix Videos and Video Chunks

• Videos are divided into “chunks” (of roughly 4 secs), specified using (byte) “range/xxx-xxx?” in the URL path:

Limelight:

http://netflix-094.vo.llnwd.net/s/stor3/384/534975384.ismv/range/0-57689?p=58&e=1311456547&h=2caca6fb4cc2c522e657006cf69d4ace

Akamai:

http://netflix094.as.nflximg.com.edgesuite.net/sa53/384/534975384.ismv/range/0-57689?token=1311456547_411862e41a33dc93ee71e2e3b3fd8534

Level3:

http://nflx.i.ad483241.x.lcdn.nflximg.com/384/534975384.ismv/range/0-57689?etime=20110723212907&movieHash=094&encoded=06847414df0656e697cbd

• Netflix uses a version of (MPEG-)DASH for video streaming
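
As an illustration of the “range/xxx-xxx?” convention, here is a small Python helper that builds a chunk URL from a base file URL, a byte range, and an opaque query string. The function name, the host `cdn.example`, and the token value are hypothetical; real URLs carry CDN-specific signed parameters that cannot be generated this way.

```python
def chunk_url(base_url, start_byte, end_byte, query):
    """Append a byte-range path segment and the (opaque) query string."""
    if not 0 <= start_byte <= end_byte:
        raise ValueError("byte range must satisfy 0 <= start <= end")
    return f"{base_url}/range/{start_byte}-{end_byte}?{query}"

# Hypothetical base URL and token, shaped like the examples above.
EXAMPLE = chunk_url("http://cdn.example/384/534975384.ismv", 0, 57689, "token=abc")
```

Since each chunk is just an HTTP GET for a byte range of a file, ordinary HTTP caches and CDNs can serve it without any streaming-specific server logic.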

DASH: dynamic adaptive streaming over HTTP

• Not really a protocol; it provides formats to enable efficient

and high-quality delivery of streaming services over the

Internet

– Enable HTTP-CDNs; reuse of existing technology (codec, DRM,…)

– Move “intelligence” to client: device capability, bandwidth

adaptation, …

• In particular, it specifies Media Presentation Description (MPD)

Ack & ©: Thomas Stockhammer

DASH Data Model and Manifest Files

• DASH MPD (each level expands one entry of the level above):

Media Presentation

– Period, start=0s …

– Period, start=100s …

– Period, start=295s …

Period (start=100s, baseURL=http://www.e.com/)

– Representation 1: 500kbit/s …

– Representation 2: 100kbit/s …

Representation 1 (bandwidth=500kbit/s, width 640, height 480)

– Segment Info …

Segment Info (duration=10s, Template: ./ahs-5-$Index$.3gs)

– Initialization Segment: http://www.e.com/ahs-5.3gp

– Media Segment 1, start=0s: http://www.e.com/ahs-5-1.3gs

– Media Segment 2, start=10s: http://www.e.com/ahs-5-2.3gs

– Media Segment 3, start=20s: http://www.e.com/ahs-5-3.3gs

– …

– Media Segment 20, start=190s: http://www.e.com/ahs-5-20.3gs

• Segment Indexing: MPD only; MPD+segment; segment only

Segment Index in MPD only

One file per segment (seg1.mp4, seg2.mp4, ...):

    <MPD>
    ...
    <URL sourceURL="seg1.mp4"/>
    <URL sourceURL="seg2.mp4"/>
    </MPD>

One file (seg.mp4) addressed by byte ranges:

    <MPD>
    ...
    <URL sourceURL="seg.mp4" range="0-499"/>
    <URL sourceURL="seg.mp4" range="500-999"/>
    </MPD>

Ack & ©: Thomas Stockhammer
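
The segment template in the example above can be expanded mechanically. The following Python sketch resolves the `$Index$` placeholder against the base URL and derives each segment's start time from the fixed duration; the function name is ours, and a real MPD parser handles many more template variables and addressing modes.

```python
from urllib.parse import urljoin

def expand_template(base_url, template, duration_s, count):
    """Return (start_time_s, url) for media segments 1..count."""
    segments = []
    for index in range(1, count + 1):
        relative = template.replace("$Index$", str(index))
        segments.append(((index - 1) * duration_s, urljoin(base_url, relative)))
    return segments

# Values taken from the slide's example MPD.
SEGMENTS = expand_template("http://www.e.com/", "./ahs-5-$Index$.3gs", 10, 20)
```

This is what lets the client fetch any segment directly, and switch representations between segments, using nothing but the manifest.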

Netflix Videos and CDN Namespaces

Netflix video streaming is handled directly by CDNs

• How are Netflix videos mapped to CDN namespaces & servers?

Limelight:

http://netflix-094.vo.llnwd.net/s/stor3/384/534975384.ismv/range/0-57689?p=58&e=1311456547&h=2caca6fb4cc2c522e657006cf69d4ace

Akamai:

http://netflix094.as.nflximg.com.edgesuite.net/sa53/384/534975384.ismv/range/0-57689?token=1311456547_411862e41a33dc93ee71e2e3b3fd8534

Level3:

http://nflx.i.ad483241.x.lcdn.nflximg.com/384/534975384.ismv/range/0-57689?etime=20110723212907&movieHash=094&encoded=06847414df0656e697cbd

Netflix Videos and CDN Namespaces

Netflix video streaming is handled directly by CDNs

• Netflix video to CDN namespace mapping is CDN-specific

– Limelight and Akamai use the last 3 digits of video IDs to

generate hostnames (up to 1000 hostnames)

– Level 3 uses some form of hash to generate the hostnames

• Each CDN resolves its own hostnames to IPs of DASH

servers

– Each CDN performs its own locality-aware mapping and load-balancing

• All CDN-specific hostnames (and part of URLs) are pushed

to Netflix control servers (in Amazon AWS), and

embedded in manifest files

Netflix Manifest Files

• A manifest file contains metadata

• Netflix manifest files contain a lot of information

o Available bitrates for audio, video and trickplay

o MPD and URLs pointing to CDNs

o CDNs and their "rankings"

<nccp:cdn>

<nccp:name>level3</nccp:name>

<nccp:cdnid>6</nccp:cdnid>

<nccp:rank>1</nccp:rank>

<nccp:weight>140</nccp:weight>

</nccp:cdn>

<nccp:cdn>

<nccp:name>limelight</nccp:name>

<nccp:cdnid>4</nccp:cdnid>

<nccp:rank>2</nccp:rank>

<nccp:weight>120</nccp:weight>

</nccp:cdn>

<nccp:cdn>

<nccp:name>akamai</nccp:name>

<nccp:cdnid>9</nccp:cdnid>

<nccp:rank>3</nccp:rank>

<nccp:weight>100</nccp:weight>

</nccp:cdn>
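
A fragment like the one above can be read with Python's standard `xml.etree.ElementTree`. The excerpt does not show where the `nccp` prefix is declared, so this sketch wraps the entries in a hypothetical `<nccp:cdns>` element with a placeholder namespace URI; both are assumptions made only so the snippet parses.

```python
import xml.etree.ElementTree as ET

NS = {"nccp": "urn:example:nccp"}  # placeholder URI, not the real namespace

# Entries copied from the slide, deliberately listed out of rank order.
SNIPPET = """
<nccp:cdns xmlns:nccp="urn:example:nccp">
  <nccp:cdn><nccp:name>akamai</nccp:name><nccp:cdnid>9</nccp:cdnid>
    <nccp:rank>3</nccp:rank><nccp:weight>100</nccp:weight></nccp:cdn>
  <nccp:cdn><nccp:name>level3</nccp:name><nccp:cdnid>6</nccp:cdnid>
    <nccp:rank>1</nccp:rank><nccp:weight>140</nccp:weight></nccp:cdn>
  <nccp:cdn><nccp:name>limelight</nccp:name><nccp:cdnid>4</nccp:cdnid>
    <nccp:rank>2</nccp:rank><nccp:weight>120</nccp:weight></nccp:cdn>
</nccp:cdns>
"""

def ranked_cdns(xml_text):
    """Return CDN names ordered by their <nccp:rank> (1 = most preferred)."""
    root = ET.fromstring(xml_text)
    entries = [
        (int(cdn.findtext("nccp:rank", namespaces=NS)),
         cdn.findtext("nccp:name", namespaces=NS))
        for cdn in root.findall("nccp:cdn", NS)
    ]
    return [name for _rank, name in sorted(entries)]
```

Collecting this list from many manifests, across accounts, locations and times, is what makes the ranking analysis in the following slides possible.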

Netflix Manifest Files …

A section of the manifest containing the base URLs, pointing to

CDNs

<nccp:downloadurls>

<nccp:downloadurl>

<nccp:expiration>1311456547</nccp:expiration>

<nccp:cdnid>9</nccp:cdnid>

<nccp:url>http://netflix094.as.nflximg.com.edgesuite.net/sa73/531/943233531.ismv?token=1311456547_e329d4271a7ff72019a550dec8ce3840</nccp:url>

</nccp:downloadurl>

<nccp:downloadurl>

<nccp:expiration>1311456547</nccp:expiration>

<nccp:cdnid>4</nccp:cdnid>

<nccp:url>http://netflix094.vo.llnwd.net/s/stor3/531/943233531.ismv?p=58&amp;e=1311456547&amp;h=8adaa52cd06db9219790bbdb323fc6b8</nccp:url>

</nccp:downloadurl>

<nccp:downloadurl>

<nccp:expiration>1311456547</nccp:expiration>

<nccp:cdnid>6</nccp:cdnid>

<nccp:url>http://nflx.i.ad483241.x.lcdn.nflximg.com/531/943233531.ismv?etime=20110723212907&amp;movieHash=094&amp;encoded=0473c433ff6dc2f7f2f4a</nccp:url>

</nccp:downloadurl>

</nccp:downloadurls>

Manifest File Analysis & CDN Ranking

We collected a large number of manifest files

• Our analysis reveals the following:

– CDN rankings differ across user accounts

– for a given account, the ranking does not change over time, geographical location or computer

•Why does Netflix use a static CDN ranking for each user?

Is it based upon performance?

Is there a single best CDN?

Does CDN performance remain unchanged over time?

Does this strategy give users the best possible experience?

Netflix: Adapting to Bandwidth Changes

• Two possible approaches

Increase/decrease quality level using DASH

Switch CDNs

• Experiments

Play a movie and systematically throttle available

bandwidth

Observe server addresses and video quality

• Bandwidth throttling using the “dummynet” tool

Throttling done on the client side by limiting how fast it

can download from any given CDN server

First throttle the most preferred CDN server, keep

throttling other servers as they get selected

Adapting to Bandwidth Changes

• Lower quality levels in response to lower bandwidth

• Switch CDN only when minimum quality level cannot be

supported

• Netflix seems to use multiple CDNs only for failover

purposes!
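
The observed behavior can be summarized as a small decision rule, sketched here in Python. This is our reading of the experiment's outcome, not Netflix's player code; the bitrate ladder and the function name are hypothetical.

```python
def next_action(bitrates_kbps, cdns_by_rank, current_cdn, measured_kbps):
    """Return (cdn, bitrate) for the next chunk.

    Stay on the current CDN and pick the highest bitrate the measured
    bandwidth can sustain; move to the next-ranked CDN only when even the
    lowest bitrate cannot be supported.
    """
    sustainable = [rate for rate in bitrates_kbps if rate <= measured_kbps]
    if sustainable:
        return current_cdn, max(sustainable)
    position = cdns_by_rank.index(current_cdn)
    if position + 1 < len(cdns_by_rank):
        return cdns_by_rank[position + 1], min(bitrates_kbps)
    return current_cdn, min(bitrates_kbps)  # no CDN left to fail over to

# Hypothetical bitrate ladder; ranking order as in the example manifest.
BITRATES = [500, 1000, 1600]
RANKING = ["level3", "limelight", "akamai"]
```

Under this rule the second and third CDNs are never tried while the first one can still deliver the lowest quality level, which matches the failover-only conclusion.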

CDN Bandwidth Measurement

• Use both local residential hosts and PlanetLab nodes

13 residential hosts and 100s of PlanetLab nodes are used

Each host downloads small chunks of Netflix videos from all

three CDN servers by replaying URLs from the manifest files

• Experiments are done for several hours every day for about

3 weeks

total experiment duration was divided into 16 second intervals

the clients downloaded chunks from CDN 1, 2 and 3 at the

beginning of seconds 0, 4 and 8.

at the beginning of the 12th second, the clients tried to

download the chunks from all three CDNs simultaneously

• Measure bandwidth to 3 CDNs separately as well as the

combined bandwidth

• Perform analysis at three time-scales

average over the entire period

daily averages

instantaneous bandwidth
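
The probing schedule described above (16-second intervals, one CDN at seconds 0, 4 and 8, all three together at second 12) can be written down as a short Python sketch. The CDN labels and function name are placeholders.

```python
def probe_schedule(interval_index, cdns=("cdn1", "cdn2", "cdn3"),
                   interval_s=16, slot_s=4):
    """Return [(start_time_s, cdns_probed_together), ...] for one interval."""
    base = interval_index * interval_s
    # One CDN at a time in consecutive 4-second slots ...
    probes = [(base + slot * slot_s, (cdn,)) for slot, cdn in enumerate(cdns)]
    # ... then all CDNs simultaneously in the last slot.
    probes.append((base + len(cdns) * slot_s, tuple(cdns)))
    return probes
```

Probing the CDNs one after another within the same 16 seconds keeps the per-CDN measurements close in time, so they can be compared with each other and with the combined measurement.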

There is no Single Best CDN

Bandwidth (and Order) Change over Days

[Figure panels: residential host; PlanetLab node]

Instantaneous Bandwidth Changes

[Figure panels: residential host; PlanetLab node]

Will “Intelligent” CDN Selection Help?

• How much can we improve if we have an oracle telling us the

optimal CDN to use at each instance?

• Oracle-based “upper bound” yields significant

improvement over "average" CDN

• Best CDN (based on initial measurements) is close to the “upper bound”

Selecting “Best” CDN via Initial Bandwidth Measurement Incurs Little Overhead!

Just 2 measurements are enough!

“Intelligent” CDN Selection …

We can improve user experiences by performing more

“intelligent” CDN selection

•One strategy: selecting “best” CDN using initial measurements!

•What about using multiple CDNs simultaneously?

Average improvement over the best CDN is 54% for residential hosts and 70% for PlanetLab nodes!
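
To make the comparison concrete, here is a toy Python sketch of the selection strategies discussed in this part: choosing a CDN from the first two bandwidth measurements, an oracle that always uses the instantaneously best CDN, and using all CDNs at once. The bandwidth samples are invented for illustration, and summing bandwidths assumes the CDN paths share no bottleneck.

```python
def mean(values):
    return sum(values) / len(values)

def pick_by_initial_probes(samples, probes=2):
    """Choose the CDN with the best average over the first few measurements."""
    return max(sorted(samples), key=lambda cdn: mean(samples[cdn][:probes]))

def oracle_mean(samples):
    """Upper bound: at every instant, use whichever CDN is fastest."""
    return mean([max(instant) for instant in zip(*samples.values())])

def combined_mean(samples):
    """All CDNs simultaneously (assumes bandwidths simply add up)."""
    return mean([sum(instant) for instant in zip(*samples.values())])

# Hypothetical bandwidth samples (Mbit/s) for three CDNs at four instants.
SAMPLES = {
    "a": [10, 12, 11, 13],
    "b": [20, 18, 5, 19],
    "c": [8, 9, 25, 7],
}
```

In this made-up trace the CDN picked from two initial probes averages 15.5, the oracle 20.5, and the combined strategy 39.25; the real measurements, of course, are the ones reported on the slides.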

Netflix Study Summary

• Netflix employs an interesting cloud-sourced architecture

Amazon AWS cloud + 3 CDNs for video streaming

• Netflix video streaming utilizes DASH

enables it to leverage CDNs

performs adaptive streaming for feature-length movies

allows DRM management (handled by Netflix + MS Silverlight)

• Load-balancing, cache misses or other video delivery

dynamics are handled internally by each CDN

• Netflix uses a static ranking of CDNs (per user)

multiple CDNs are used mostly for the fail-over purpose

how the static ranking is determined is still a mystery (to us!)

• More “intelligent” CDN selection is possible to further

improve user-perceived quality (and make them happier!)

As higher resolution/3D movies come into picture, these

improvements will have a considerable impact

A Few Words on Lessons Learned

YouTube vs. Netflix: two interesting & contrasting

video delivery architectures

•Likely many factors determine the choice of the

architecture and system design!

•Both architectures separate “user-video” interaction

from video delivery

user video search and other operations handled by “web

servers”

•DNS plays a crucial role in both systems

careful “namespace” design is required

both in terms of DNS hostnames and URLs

mapping videos to DNS hostnames (logical servers)

mapping DNS hostnames to IP addresses (physical

servers)

A Few Words on Lessons Learned

Google’s YouTube delivery architecture:

• for “user-generated” short videos; “open” system

– no “explicit” DRM management issues

– but still need to handle “unauthorized” content publishing

• employ a variety of mechanisms to handle load-balancing, cache misses, etc.: DNS resolution, HTTP re-direction, internal fetch

• mostly web browser and flash-based

• leverage its own in-house CDN infrastructure

• YouTube flash-based video delivery does not support “dynamic” adaptive streaming

– users need to explicitly & manually select video quality level

– each (short) video is delivered as a single Flash file

It’s interesting to see how Google will adapt the current YouTube architecture to support feature-length video on-demand service!

A Few Words on Lessons Learned

Netflix’s cloud-sourced video delivery architecture

subscription based; does not own most content

• DRM management is a must

DASH-compliant architecture – more flexible

requires separate client players

allow adaptive streaming; different codec, etc.

enable (third-party) CDNs

but require separate channels for “manifest” file delivery

Does not require huge investment in infrastructure

leverage “expertise” of other entities

but lose some level of control

Also need “profit-sharing” with the other entities

potential conflicts: e.g., Amazon has its own video

streaming service (Amazon Instant Video)!

Netflix OpenConnect initiative

Start building its own delivery infrastructure

Looking Forward …

• Making content “first-class” citizen on the Internet?

– currently, specified by a URL, thus tied to “hostname”

– DNS resolution is (generally) content (or server load) oblivious

e.g., Akamai employs sophisticated mechanisms for DNS resolution

– cache miss may occur and re-direction may be needed, done either internally or externally

– New content-centric network architectures?

• So far, both YouTube and Netflix scale well with the growth in videos and users

– scalability of either video delivery system is ultimately limited by the CDN infrastructure(s): whether Google’s own or big 3 CDNs

• Must account for or accommodate “business interests”

– Economic viability is critical to the success of any system architecture!
