Achmea - LIACS Data Mining Group

Data mining in Health Insurance

Introduction

• Rob Konijn, rob.konijn@achmea.nl

– VU University Amsterdam

– Leiden Institute of Advanced Computer Science (LIACS)

– Achmea Health Insurance

• Currently working here

• Delivering leads for other departments to follow up

– Fraud, abuse

• Research topic keywords: data mining / unsupervised learning / fraud detection

Outline

• Intro Application

– Health Insurance

– Fraud detection

• Part 1: Subgroup discovery

• Part 2: Anomaly detection (slides partly by Z. Slavik, VU)

Intro Application

• Health Insurance Data

• Health Insurance in NL

– Obligatory

– Only private insurance companies

– About 100 euro/month (everyone) + 170 euro (income)

– Premium increase of 5-12% each year

Achmea: about 6 million customers

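Funding of Health Insurance Costs in the Netherlands

• State contribution for insured persons under 18: 2 billion euro

• Income-dependent contribution (employers): 17 billion euro

• Equalisation contribution (vereveningsbijdrage): 18 billion euro

• Nominal premium paid by insured persons aged 18+:

– Calculation premium (rekenpremie, ~ € 947 per insured): 12 billion euro

– Surcharge (opslag, ~ € 150 per insured): 2 billion euro

• Health care expenditure: 30 billion euro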

Equalisation model (vereveningsmodel)

• By population characteristics

– Age

– Gender

– Income, social class

– Type of work

• Calculation afterwards

– High costs compensation (> 15,000 euro)

Values per age group and gender, as shown on the slide:

Age group        Men     Women
0 - 4          1,400     1,210
5 - 9          1,026       936
10 - 14          907       918
15 - 17          964     1,062
18 - 24          892     1,214
25 - 29          870     1,768
30 - 34          905     1,876
35 - 39          980     1,476
40 - 44        1,044     1,232
45 - 49        1,183     1,366
50 - 54        1,354     1,532
55 - 59        1,639     1,713
60 - 64        1,885     1,905
65 - 69        2,394     2,201
70 - 74        2,826     2,560
75 - 79        3,244     2,886
80 - 84        3,349     3,018
85 - 89        3,424     3,034
90 and over    3,464     3,014

Fraud in health care

Introduction Application: The Data

• Transactional data

– Records of an event

– Visit to a medical practitioner

• Charged directly by the medical practitioner

• Patient is not involved

• Risk of fraud

Transactional Data

• Transactions: facts

– Achmea: about 200 million transactions per year

• Info of customers and practitioners: dimensions

Different levels of hierarchy

• Records represent events

• However, for fraud detection, for example, we are interested in customers or medical practitioners

• See examples next pages

• Groups of records: Subgroup Discovery

• Individual patients/practitioners: outlier detection

Different types of fraud hierarchy

• On a patient level, or on a hospital level:

Handling different hierarchy

• Creating profiles from transactional data

• Aggregating costs over a time period

– Each record: patient

• Each attribute i = 1 to n: cost spent on treatment i

• Feature construction, for example

– The ratio of long/short consults (G.P.)

– The ratio of 3-way and 2-way fillings (Dentist)

– Usually used for one-way analysis
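The profile construction above can be sketched as follows. This is a minimal illustration, not the pipeline used at Achmea: the transaction layout `(entity_id, treatment_code, cost)`, the treatment codes and the amounts are all hypothetical. It aggregates transactions into one record per entity (a patient or a practitioner) and derives the long/short consult ratio as a constructed feature.

```python
from collections import defaultdict

# Hypothetical transactions: (entity_id, treatment_code, cost_in_euro).
transactions = [
    ("p1", "consult_short", 9.0),
    ("p1", "consult_long", 18.0),
    ("p1", "consult_short", 9.0),
    ("p2", "consult_long", 18.0),
    ("p2", "consult_long", 18.0),
    ("p2", "consult_short", 9.0),
]

def build_profiles(rows):
    """Aggregate transactions into one profile per entity:
    total cost and number of claims per treatment code."""
    cost = defaultdict(lambda: defaultdict(float))
    count = defaultdict(lambda: defaultdict(int))
    for entity, code, amount in rows:
        cost[entity][code] += amount
        count[entity][code] += 1
    return cost, count

def long_short_ratio(counts):
    """Constructed feature: long consults divided by short consults.
    Returns None when there are no short consults."""
    short = counts.get("consult_short", 0)
    return counts.get("consult_long", 0) / short if short else None

cost, count = build_profiles(transactions)
ratios = {entity: long_short_ratio(c) for entity, c in count.items()}
print(dict(cost["p1"]))
print(ratios)
```

Each profile is one row with one attribute per treatment code, which is the shape the subgroup discovery and outlier detection parts work on.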

Different types of fraud detection

• Supervised

– A labeled fraud set

– A labeled non-fraud set

– Credit cards, debit cards

• Unsupervised

– No labels

– Health Insurance, Cargo, telecom, tax etc.

Unsupervised learning in Health Insurance Data

• Anomaly Detection (outlier detection)

– Finding individual deviating points

• Subgroup Discovery

– Finding (descriptions of) deviating groups

• Focus on differences and uncommon behavior

– In contrast to other unsupervised learning methods

• Clustering

• Frequent Pattern mining

Subgroup Discovery

• Goal: Find differences in claim behavior of medical practitioners

• To detect inefficient claim behavior

– Actions:

• A visit from the account manager

• To include in contract negotiations

– In the extreme case: fraud

• Investigation by the fraud detection department

• By describing deviations of a practitioner from its peers

– Subgroups

Patient-level, Subgroup Discovery

• Subgroup (orange): group of patients

• Target (red)

– Indicates whether a patient visited a practitioner (1), or not (0)

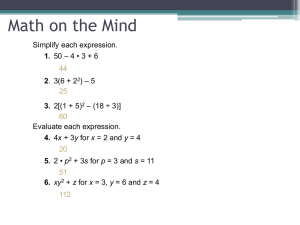

Subgroup Discovery: Quality Measures

• Target dentist: 1672 patients

– Compare with peer group, 100,000 patients in total

• Subgroup V11 > 42 euro : 10347 patients

– V11: one-sided filling

• Cross table

                  V11 >= 42     rest     total
target dentist          871      801      1672
rest                   9476    88852     98328
total                 10347    89653    100000

The cross table

• Cross table in data

                  V11 >= 42     rest     total
target dentist          871      801      1672
rest                   9476    88852     98328
total                 10347    89653    100000

• Cross table expected, assuming independence

                  V11 >= 42     rest     total
target dentist          173     1499      1672
rest                  10174    88154     98328
total                 10347    89653    100000

Calculating WRAcc and Lift

• Size subgroup = P(S) = 10347/100000 = 0.10347

• Size target dentist = P(T) = 1672/100000 = 0.01672

• Cell (V11 >= 42, target dentist): 871 patients observed, 173 expected assuming independence

• Weighted Relative ACCuracy (WRAcc) = P(ST) - P(S)P(T) = (871 - 173)/100000 = 698/100000

• Lift = P(ST)/(P(S)P(T)) = 871/173 = 5.03
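The arithmetic above can be checked with a few lines of Python. This is only a sketch; the function name and argument names are made up for illustration, and the counts are the ones from the dentistry cross table.

```python
def wracc_and_lift(n_subgroup_and_target, n_subgroup, n_target, n_total):
    """Return (expected count under independence, WRAcc, lift)."""
    p_s = n_subgroup / n_total                # P(S): size of the subgroup
    p_t = n_target / n_total                  # P(T): size of the target
    p_st = n_subgroup_and_target / n_total    # P(ST): observed joint share
    expected = p_s * p_t * n_total            # count expected if S and T were independent
    wracc = p_st - p_s * p_t
    lift = p_st / (p_s * p_t)
    return expected, wracc, lift

expected, wracc, lift = wracc_and_lift(871, 10347, 1672, 100000)
print(round(expected), round(wracc, 5), round(lift, 2))  # 173 0.00698 5.03
```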

Example dentistry, at depth 1, one target dentist

ROC analysis, target dentist

Making SD more useful: adding prior knowledge

• Adding prior knowledge

– Background variables patient (age, gender, etc.)

– Specialism practitioner

– For dentistry: choice of insurance

• Adding already known differences

– Already detected by domain experts themselves

– Already detected during a previous data mining run

Prior Knowledge, Motivation

Example, influence of prior knowledge

The idea: create an expected cross table using prior knowledge
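One plausible way to build such an expected table is to assume independence only within each stratum of the prior knowledge (for example an age group) and to sum the expected counts over the strata. The slides do not spell out the exact construction, so the sketch below is an assumption; the record layout and field names (`age`, `sub`, `tgt`) are hypothetical.

```python
from collections import Counter

def expected_count(records, in_subgroup, is_target, stratum):
    """Expected number of records that are in the subgroup AND the target,
    assuming independence within each prior-knowledge stratum."""
    n, n_sub, n_tgt = Counter(), Counter(), Counter()
    for r in records:
        key = stratum(r)
        n[key] += 1
        n_sub[key] += in_subgroup(r)
        n_tgt[key] += is_target(r)
    return sum(n_sub[k] * n_tgt[k] / n[k] for k in n)

# Toy data: the target practitioner mostly treats older patients,
# and older patients are more often in the subgroup anyway.
records = (
    [{"age": "young", "sub": True, "tgt": False},
     {"age": "young", "sub": False, "tgt": True}]
    + [{"age": "young", "sub": False, "tgt": False}] * 4
    + [{"age": "old", "sub": True, "tgt": True}] * 2
    + [{"age": "old", "sub": True, "tgt": False},
       {"age": "old", "sub": False, "tgt": True}]
)

def sub(r):
    return r["sub"]

def tgt(r):
    return r["tgt"]

naive = expected_count(records, sub, tgt, lambda r: "all")           # no prior knowledge
stratified = expected_count(records, sub, tgt, lambda r: r["age"])   # age as prior knowledge
observed = sum(r["sub"] and r["tgt"] for r in records)
print(observed, naive, stratified)
```

In this toy data the observed count exceeds the naive expectation but not the stratified one, so the apparent deviation is already explained by age.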

Quality Measures

• Ratio (Lift)

• Difference (WRAcc)

• Squared sum (Chi-square statistic)
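As a sketch, the three measures can be computed from an observed and an expected 2x2 cross table. The table layout (rows: target, rest; columns: subgroup, rest) and the function name are assumptions for illustration; the expected table may come from plain independence or from prior knowledge.

```python
def quality_measures(observed, expected):
    """observed, expected: 2x2 tables laid out as
    [[target & subgroup, target & rest], [rest & subgroup, rest & rest]]."""
    n = sum(sum(row) for row in observed)
    o, e = observed[0][0], expected[0][0]
    lift = o / e                      # ratio
    wracc = (o - e) / n               # difference
    chi2 = sum(                       # sum of squared deviations, scaled by the expectation
        (observed[i][j] - expected[i][j]) ** 2 / expected[i][j]
        for i in range(2) for j in range(2)
    )
    return lift, wracc, chi2

# Dentistry example: observed counts and the (rounded) expected counts.
observed = [[871, 801], [9476, 88852]]
expected = [[173, 1499], [10174, 88154]]
lift, wracc, chi2 = quality_measures(observed, expected)
print(round(lift, 2), round(wracc, 5), round(chi2))
```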

Example, iterative approach

• Idea: add subgroup to prior knowledge iteratively

• Target = single pharmacy

• Patients that visited the hospital in last 3 years removed from data

• Compare with peer group (400,000 patients), 2929 patients of target pharmacy

• Top subgroup: “B03XA01 (Erythropoietin) > 0 euro”

                   target pharmacy       rest
B03XA01 > 0: T                1297        224
B03XA01 > 0: F                1632    396,847

Next iteration

• Add “B03XA01 (EPO) >0 euro” to prior knowledge

• Next best subgroup: “N05AX08 (Risperdal) >= 500 euro”

Figure describing subgroup:

N05AX08 > 500

Left: target pharmacy, right: other pharmacies

Addition: adding costs to quality measure

– M55: dental cleaning

– V11: 1-way filling

– V21: polishing

• Cost of treatments in subgroup 370 euro (average)

• 791 more patients than expected

• Total quality: 791 * 370 ≈ 292,469 euro (370 is the rounded average cost)

Iterative approach, top 3 subgroups

V12: 2-sided filling

V21: polishing

V60: indirect pulpa covering

V21 and V60 are not allowed on the same day

Claim back (from all dentists): 1.3 million euro

3d isometrics, cost based QM

Other target types: double binary target

• Target 1: year: 2009 or 2008

• Target 2: target practitioner

• Pattern:

– M59: extensive (expensive) dental cleaning

– C12: second consult in one year

• Crosstable:

Other target types: Multiclass target

• Subgroup (orange): group of patients

• Target (red), now is a multi-value column, one value per dentist

Multiclass target, in ROC Space

Anomaly Detection

The example above contains a contextual anomaly...

Outline Anomaly Detection

• Anomalies

– Definition

– Types

– Technique categories

– Examples

• Lecture based on

– Chandola et al. (2009). Anomaly Detection: A Survey

– Paper in BB

Definition

• “Anomaly detection refers to the problem of finding patterns in data that do not conform to expected behavior”

• Anomalies, aka.

– Outliers

– Discordant observations

– Exceptions

– Aberrations

– Surprises

– Peculiarities

– Contaminants

Anomaly types

Point anomalies

– A data point is anomalous with respect to the rest of the data

Not covered today

• Other types of anomalies:

– Collective anomalies

– Contextual anomalies

• Other detection approaches:

– Supervised learning

– Semi supervised

• Assume training data is from normal class

• Use to detect anomalies in the future

We focus on outlier scores

• Scores

– You get a ranked list of anomalies

– “We investigate the top 10”

– “An anomaly has a score of at least 134”

– Leads followed by fraud investigators

• Labels

Detection method categorisation

1. Model based

2. Depth based

3. Distance Based

4. Information theory related (not covered)

5. Spectral theory related (not covered)

Model based

• Build a (statistical) model of the data

• Data instances occur in high probability regions of a stochastic model, while anomalies occur in low probability regions

• Or: data instances that have a high distance to the model are outliers

• Or: data instances that have a high influence on the model are outliers

Example: one way outlier detection

• Pharmacy records

• Records represent patients

• One attribute at a time:

– This example: attribute describing the costs spent on fertility medication (gonadotropin) in a year

• We could use such one way detection for each attribute in the data

Example, model = parametric probability density function

Example, model = non-parametric distribution

• Left: kernel density estimate

• Right: boxplot
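The boxplot rule can be written as a one-way outlier detector. The sketch below uses the common 1.5 * IQR whiskers, which is an assumption since the slide does not state the whisker length; the yearly cost values are made up.

```python
import statistics

def boxplot_outliers(values, whisker=1.5):
    """Return the values outside the boxplot whiskers (Tukey's rule)."""
    q1, _, q3 = statistics.quantiles(values, n=4)   # quartiles
    iqr = q3 - q1
    lower, upper = q1 - whisker * iqr, q3 + whisker * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical yearly costs (euro) for one medication, one value per patient.
costs = [0, 0, 120, 150, 180, 200, 210, 230, 250, 4000]
print(boxplot_outliers(costs))  # [4000]
```

The same function can be applied to each attribute in turn, one attribute at a time.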

Example: regression model

Other models possible

• Probabilistic

– Bayesian networks

• Regression models

– Regression trees/ random forests

– Neural networks

• Outlier score = prediction error (residual)
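A minimal sketch of the residual-as-score idea, using ordinary least squares with one predictor in place of the richer models listed above. The data are hypothetical; in practice one cost attribute could be predicted from other attributes.

```python
def residual_scores(xs, ys):
    """Fit y = intercept + slope * x by least squares and
    return the absolute residual of every point as its outlier score."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return [abs(y - (intercept + slope * x)) for x, y in zip(xs, ys)]

# Hypothetical data: y grows linearly with x, except for the fourth point.
xs = [1, 2, 3, 4, 5, 6, 7]
ys = [10, 20, 30, 120, 50, 60, 70]
scores = residual_scores(xs, ys)
print(scores.index(max(scores)))  # 3
```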

Depth based methods

• Applied on 1-4 dimensional datasets

– Or 1-4 attributes at a time

• Objects that have a high distance to the “center of the data” are considered outliers

• Example Pharmacy:

– Records represent patients

– 2 attributes:

• Costs spent on diabetes medication

• Costs spent on diabetes testing material

Example: bagplot, halfspace depth

Distance based (nearest neighbor based)

• Assumption:

– Normal data instances occur in dense neighbourhoods, while anomalies occur far from their closest neighbours

Similarity/distance

• You need a similarity measure between two data points

– Numeric attributes: Euclidean, etc.

– Nominal: simple match often enough

– Multivariate:

• Distance using all attributes

• Distance between attribute values, then combine

Example, dentistry data

• Records represent dentists

• Attributes are 14 cost categories

– Denote the percentage of patients that received a claim from the category

Option 1:

Distance to k-th neighbour as anomaly score
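A brute-force sketch of Option 1, assuming numeric attributes and Euclidean distance. The points are hypothetical and stand for dentists described by two cost-category percentages.

```python
import math

def kth_neighbour_scores(points, k):
    """Anomaly score of each point = Euclidean distance to its k-th nearest neighbour."""
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])
    return scores

# Hypothetical profiles: share of patients with a claim in two cost categories.
points = [(0.10, 0.20), (0.20, 0.10), (0.15, 0.15), (0.20, 0.20), (0.90, 0.90)]
scores = kth_neighbour_scores(points, k=2)
print(scores.index(max(scores)))  # 4
```

This compares every pair of points, which is fine for a sketch but scales quadratically with the number of records.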

Option 2:

Use relative densities of neighbourhoods

• Density of neighbourhood estimated for each instance

• Instances in the low density neighbourhoods are anomalous, others normal

• Note:

– Distance to k-th neighbour is an estimate for the inverse of density (large distance means low density)

– But this estimate handles outliers badly when the density of neighbourhoods varies

LOF

• Local Outlier Factor (LOF) = (average local density of the k nearest neighbours) / (local density of the instance)

• Local density:

– k divided by the volume of the smallest hypersphere centred around the instance, containing k neighbours

• Anomalous instance:

– Local density will be lower than that of the k nearest neighbours
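The definition above can be sketched directly. This follows the simplified density ratio on this slide, not the reachability-distance formulation of the original LOF paper. It assumes Euclidean distance and no duplicate points (a zero radius would divide by zero); the data are hypothetical.

```python
import math

def lof_scores(points, k):
    """Simplified LOF: average local density of the k nearest neighbours
    divided by the local density of the instance itself."""
    dim = len(points[0])
    neighbours, density = [], []
    for i, p in enumerate(points):
        ranked = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)[:k]
        neighbours.append([j for _, j in ranked])
        radius = ranked[-1][0]  # radius of the smallest hypersphere holding k neighbours
        # Hypersphere volume is proportional to radius**dim; the constant
        # factor cancels in the density ratio, so it is left out.
        density.append(k / radius ** dim)
    return [
        sum(density[j] for j in neighbours[i]) / k / density[i]
        for i in range(len(points))
    ]

points = [(0.10, 0.20), (0.20, 0.10), (0.15, 0.15), (0.20, 0.20), (0.90, 0.90)]
lof = lof_scores(points, k=2)
print(lof.index(max(lof)))  # 4
```

Scores well above 1 mean the instance sits in a sparser region than its neighbours.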

Example LOF outlier, dentistry

3. Clustering based anomaly detection techniques

• 3 possibilities:

1. Normal data instances belong to a cluster in the data, while anomalies do not belong to any cluster

– Use clustering methods that do not force all instances to belong to a cluster

• DBSCAN, ROCK, SNN

2. Distance to the cluster center = outlier score

3. Clusters with too few points are outlying clusters
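Possibilities 2 and 3 can be sketched together, assuming the cluster centres are already available from some clustering run (for example k-means). The centres, points and the `min_size` threshold below are hypothetical.

```python
import math
from collections import Counter

def cluster_outlier_scores(points, centres, min_size=2):
    """Assign each point to its nearest centre.
    Returns (distance to that centre, flag: point lies in a too-small cluster)."""
    assignment, scores = [], []
    for p in points:
        dists = [math.dist(p, c) for c in centres]
        nearest = dists.index(min(dists))
        assignment.append(nearest)
        scores.append(dists[nearest])        # possibility 2: distance = outlier score
    sizes = Counter(assignment)
    small = {c for c in range(len(centres)) if sizes[c] < min_size}
    in_small = [a in small for a in assignment]  # possibility 3: outlying clusters
    return scores, in_small

points = [(0.10, 0.10), (0.20, 0.10), (0.15, 0.20), (0.80, 0.80),
          (0.85, 0.90), (0.10, 0.95), (0.50, 0.45)]
centres = [(0.15, 0.13), (0.82, 0.85), (0.10, 0.95)]  # assumed given
scores, in_small = cluster_outlier_scores(points, centres)
print(scores.index(max(scores)), in_small)
```

The point far from every centre gets the highest distance score, while the point that forms a cluster of its own is flagged by the size rule even though its distance score is zero.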

K-means with 6 clusters, centers of the dentistry data set

• Attributes: percentage of patients that received a claim from the cost category

• Clusters correspond to specialism

1. Dentist

2. Orthodontist

3. Orthodontist (charged by dentist)

4. Dentist

5. Dentist

6. Dental hygienist

Combining Subgroup Discovery and Outlier Detection

• Describe regions with outliers using SD

• Identify suspicious medical practitioners

• 2 or 3 step approach to describe outliers:

1. Calculate outlier score

2. Use subgroup discovery to describe regions with outliers.

3. (optional) identify the involved medical practitioners
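Steps 1 and 2 can be sketched as follows, assuming the outlier scores have already been computed by any of the methods above. The search is a depth-1 subgroup discovery over conditions of the form "attribute > threshold", ranked by WRAcc, with "belongs to the top outliers" as the binary target. The records, scores and attribute names are hypothetical, and this is a simplification rather than the search used in the talk.

```python
def describe_outliers(records, scores, top_n):
    """Find the single condition 'attribute > threshold' whose subgroup best
    covers the top_n outliers, measured by WRAcc.
    Returns (wracc, attribute, threshold)."""
    n = len(records)
    cutoff = sorted(scores, reverse=True)[top_n - 1]
    target = [s >= cutoff for s in scores]      # binary target: is a top outlier
    p_t = sum(target) / n
    best = None
    for attr in records[0]:
        for threshold in sorted({r[attr] for r in records}):
            covered = [r[attr] > threshold for r in records]
            p_s = sum(covered) / n
            p_st = sum(c and t for c, t in zip(covered, target)) / n
            wracc = p_st - p_s * p_t
            if best is None or wracc > best[0]:
                best = (wracc, attr, threshold)
    return best

# Hypothetical patient profiles (costs in euro) and their outlier scores.
records = [
    {"P30": 0, "M55": 40}, {"P30": 100, "M55": 45}, {"P30": 1200, "M55": 50},
    {"P30": 1500, "M55": 35}, {"P30": 50, "M55": 60}, {"P30": 0, "M55": 55},
]
scores = [0.1, 0.2, 3.5, 4.0, 0.3, 0.2]
best = describe_outliers(records, scores, top_n=2)
print(best[1], ">", best[2])  # P30 > 100
```

Step 3 would then count, per practitioner, how many of the covered patients they treated.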

Example output:

• Look at patients with ‘P30>1050 euro’ for practitioner number 221

• Left: all data, right: practitioner 221

Descriptions of outliers:

LOCI outlier score

• 1. Calculate outlier score

– LOCI is a density based outlier score

• 2. Describe outlying regions

• Result top subgroup:

– Orthodontics (dentist) 0.044

^ Orthodontics 0.78

– Group of 9 dentists with an average score of 3.9

Conclusions

• Health insurance: Interesting application domain

– Very relevant

• Outlier Detection and Subgroup discovery are useful