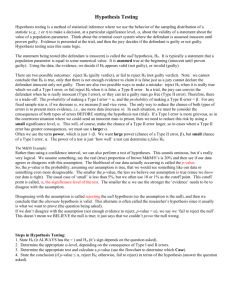

Type I and II Errors ppt

More About Type I and Type II Errors

O.J. Simpson trial: the situation
• O.J. is assumed innocent.
• Evidence collected: size 12 Bruno Magli bloody footprint, bloody glove, blood spots on white Ford Bronco, the knock on the wall, DNA evidence from above, motive(?), etc…

O.J. Simpson trial: jury decisions
• In criminal trial: The evidence does not warrant rejecting the assumption of innocence. Behave as if O.J. is innocent.
• In civil trial: The evidence warrants rejecting the assumption of innocence. Behave as if O.J. is guilty.
• Was an error made in either trial?

Errors in Trials

| Jury decision | Truth: Innocent | Truth: Guilty |
| --- | --- | --- |
| “Innocent” | OK | ERROR |
| Guilty | ERROR | OK |

If O.J. is innocent, then an error was made in the civil trial. If O.J. is guilty, then an error was made in the criminal trial.

Errors in Hypothesis Testing

| Truth | Decision: Do not reject null | Decision: Reject null |
| --- | --- | --- |
| Null hypothesis | OK | TYPE I ERROR |
| Alternative hypothesis | TYPE II ERROR | OK |

Definitions: Types of Errors
• Type I error: The null hypothesis is rejected when it is true.
• Type II error: The null hypothesis is not rejected when it is false.
• There is always a chance of making one of these errors. We’ll want to minimize the chance of doing so!

Example: Grade inflation?
Is there evidence to suggest the mean GPA of college undergraduate students exceeds 2.7?
H0: μ = 2.7
HA: μ > 2.7
Data: random sample of students, n = 36, s = 0.6; compute the sample mean X̄.

Decision Rule
Set significance level α = 0.05. If p-value < 0.05, reject null hypothesis.

Let’s consider what our conclusion would be for different observed sample means…
• If X̄ = 2.865: Reject null since p-value is (just barely!) smaller than 0.05.
• If X̄ = 2.95: Reject null since p-value is smaller than 0.05.
• If X̄ = 3.00: Reject null since p-value is smaller than 0.05.

Alternative Decision Rule
• “Reject if p-value < 0.05” is equivalent to “Reject if the sample average, X̄, is larger than 2.865.”
• X̄ > 2.865 is called the “rejection region.”

Type I Error
Minimize chance of Type I error...
• … by making significance level small.
• Common values are α = 0.01, 0.05, or 0.10.
• “How small” depends on seriousness of Type I error.
• Decision is not a statistical one but a practical one.

P(Type I Error) in trials
• Criminal trials: “Beyond a reasonable doubt.” 12 of 12 jurors must unanimously vote guilty. Significance level set at 0.001, say.
• Civil trials: “Preponderance of evidence.” 9 out of 12 jurors must vote guilty. Significance level set at 0.10, say.

Example: Serious Type I Error
• New Drug A is supposed to reduce diastolic blood pressure by more than 15 mm Hg.
• H0: μ = 15 versus HA: μ > 15
• Drug A can have serious side effects, so don’t want patients on it unless μ > 15.
• Implication of Type I error: Expose patients to serious side effects without other benefit.
• Set α = P(Type I error) to be small: 0.01.

Example: Not so serious Type I Error
• Grade inflation?
• H0: μ = 2.7 vs. HA: μ > 2.7
• Type I error: claim average GPA is more than 2.7 when it really isn’t.
• Implication: Instructors grade harder. Students get unhappy.
• Set α = P(Type I error) at, say, 0.10.

Type II Error and Power
• Type II Error is made when we fail to reject the null when the alternative is true.
• Want to minimize P(Type II Error).
• Now, if alternative HA is true:
  – P(reject | HA is true) + P(not reject | HA is true) = 1
  – “Power” + P(Type II error) = 1
  – “Power” = 1 − P(Type II error)

Type II Error and Power
• “Power” of a test is the probability of rejecting null when alternative is true.
• “Power” = 1 − P(Type II error)
• To minimize the P(Type II error), we equivalently want to maximize power.
• But power depends on the value under the alternative hypothesis ...

Type II Error and Power (Alternative is true)

Power
• Power is a probability, so a number between 0 and 1.
• 0 is bad!
• 1 is good!
• Need to make power as high as possible.

Maximizing Power …
• The farther apart the actual mean is from the mean specified in the null, the higher the power.
• The higher the significance level α, the higher the P(Type I error), the higher the power.
• The smaller the standard deviation, the higher the power.
• The larger the sample, the higher the power.

That is, factors affecting power...
• Difference between value under the null and the actual value
• P(Type I error) = α
• Standard deviation
• Sample size

Strategy for designing a good hypothesis test
• Use pilot study to estimate std. deviation.
• Specify α. Typically 0.01 to 0.10.
• Decide what a meaningful difference would be between the mean in the null and the actual mean.
• Decide power. Typically 0.80 to 0.99.
• Use software to determine sample size.

Using JMP to Determine Sample Size – DOE > Sample Size and Power

Using JMP to Determine Sample Size – One Sample Mean
• P(Type I Error) = α
• Error Std Dev = “guesstimate” for standard deviation (s or σ)
Enter values for one or two of the quantities:
1) Difference to detect δ = |H0 mean – HA mean| = |μ0 − μ|
2) Sample Size = n
3) Power = P(Reject H0 | HA true) = 1 − β

Using JMP to Determine Sample Size – DOE > Sample Size and Power
For α = 0.05, δ = 0.20, s = 0.60, and leaving Power and Sample Size empty, we obtain a plot of Power vs. Sample Size (n). Here we can see:
• Power = 0.80: n ≈ 75 students
• Power = 0.90: n ≈ 96 students
• Power = 0.95: n ≈ 120 students

Using JMP to Determine Sample Size – DOE > Sample Size and Power (JMP Demo)

If sample is too small ...
• … the power can be too low to identify even large meaningful differences between the null and alternative values.
  – Determine sample size in advance of conducting study.
  – Don’t believe the “fail-to-reject-results” of a study based on a small sample.

If sample is really large ...
• … the power can be extremely high for identifying even meaningless differences between the null and alternative values.
  – In addition to performing hypothesis tests, use a confidence interval to estimate the actual population value.
  – If a study reports a “reject result,” ask: how much different?
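
The factors affecting power listed above can be checked numerically. The following is a minimal sketch, assuming a one-sided z-test (normal approximation, with the standard deviation treated as known) applied to the grade-inflation setup (μ0 = 2.7, standard deviation 0.6, n = 36, α = 0.05). The function names `rejection_cutoff` and `power` and the "true" means used below are illustrative assumptions, not taken from the slides or from JMP.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def rejection_cutoff(mu0, sigma, n, alpha):
    """Boundary of the rejection region for H0: mu = mu0 vs HA: mu > mu0.

    Assumes a one-sided z-test: reject H0 when the sample mean exceeds this value.
    """
    return mu0 + STD_NORMAL.inv_cdf(1 - alpha) * sigma / sqrt(n)


def power(mu0, mu_true, sigma, n, alpha):
    """P(reject H0 | the true mean is mu_true) under the same z-test assumption."""
    standard_error = sigma / sqrt(n)
    cutoff = rejection_cutoff(mu0, sigma, n, alpha)
    # Probability that the sample mean falls in the rejection region.
    return 1 - STD_NORMAL.cdf((cutoff - mu_true) / standard_error)


# Grade-inflation setup from the slides; the true mean of 2.9 is hypothetical.
base = dict(mu0=2.7, mu_true=2.9, sigma=0.6, n=36, alpha=0.05)

print("cutoff for sample mean:", round(rejection_cutoff(2.7, 0.6, 36, 0.05), 4))
print("baseline power:        ", round(power(**base), 3))
print("larger difference:     ", round(power(**{**base, "mu_true": 3.0}), 3))
print("larger alpha:          ", round(power(**{**base, "alpha": 0.10}), 3))
print("smaller std deviation: ", round(power(**{**base, "sigma": 0.3}), 3))
print("larger sample:         ", round(power(**{**base, "n": 100}), 3))

# Tiny sample, large difference (hypothetical values): power stays modest.
print("n = 5, true mean 3.2:  ", round(power(2.7, 3.2, 0.6, 5, 0.05), 3))
# Huge sample, negligible difference (hypothetical values): power is near 1.
print("n = 100000, mean 2.71: ", round(power(2.7, 2.71, 0.6, 100_000, 0.05), 3))
```

Under these assumptions the cutoff comes out at about 2.8645, close to the 2.865 quoted in the slides, and each change (larger difference, larger α, smaller standard deviation, larger sample) raises the power relative to the baseline.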

The moral of the story as researcher
• Always determine how many measurements you need to take in order to have high enough power to achieve your study goals.
• If you don’t know how to determine sample size, ask a statistical consultant to help you.

The moral of the story as reviewer
• When interpreting the results of a study, always take into account the sample size.
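
Determining the number of measurements in advance can also be approximated without JMP. The following is a minimal sketch, assuming a two-sided z-test (normal approximation, with the standard deviation treated as known). The function `sample_size` is a hypothetical helper and does not reproduce JMP's own calculation.

```python
from math import ceil
from statistics import NormalDist


def sample_size(delta, sigma, alpha, power, two_sided=True):
    """Approximate n needed to detect a mean difference `delta`.

    Assumes a z-test with known standard deviation `sigma`:
    n = ((z_alpha + z_beta) * sigma / delta) ** 2, rounded up.
    """
    z = NormalDist()
    tail = alpha / 2 if two_sided else alpha
    z_alpha = z.inv_cdf(1 - tail)   # critical value for the significance level
    z_beta = z.inv_cdf(power)       # quantile matching the desired power
    return ceil(((z_alpha + z_beta) * sigma / delta) ** 2)


# Inputs taken from the JMP example: alpha = 0.05, difference 0.20, std dev 0.60.
for target_power in (0.80, 0.90, 0.95):
    n = sample_size(delta=0.20, sigma=0.60, alpha=0.05, power=target_power)
    print(f"power {target_power:.2f}: n = {n}")
```

With these inputs the approximation gives n = 71, 95, and 117, a few fewer than the roughly 75, 96, and 120 students read from the JMP plot. The gap is plausibly due to JMP using a t-based calculation and to reading values off a plot, though the slides do not say.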