Chapter 12

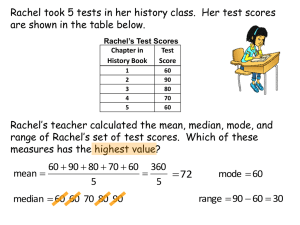

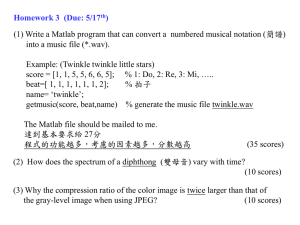

Understanding Research Results

Why Do We Use Statistics?
• Statistics are used to describe the data.
• When comparing groups in our experiment, we look for statistically significant differences between our conditions. This tells us whether or not our experimental condition has an effect.
• When statistics are analyzed, the test of statistical significance takes into account the number of subjects when evaluating differences.

Review of Scales and Measurement
• Nominal Scale Variables
  – Have no numerical or quantitative properties.
  – The levels are simply different categories or groups.
  – Many independent variables in experiments are nominal (e.g., gender, marital status, hand dominance).
  – A number can be assigned to each group as a code (male = 1, female = 2), but the number is only a label.
• Ordinal Scale Variables
  – These variables involve minimal quantitative distinctions: the levels of the variable can be rank ordered from lowest to highest.
  – Example: rank the stressors in your life from lowest to highest.
    • Exams
    • Grades
    • Significant other
    • Parental approval
    • Scholarship
  – All we know is the order; the strength of each stressor (how far apart the ranks are) is not known.
• Interval and Ratio Scale Variables
  – With interval scale variables, the intervals between the levels are equal in size.
  – There is no zero point indicating the absence of the variable; for example, a mood scale has no score that means an absence of mood.
  – Ratio scale variables have both equal intervals and an absolute zero point that indicates the absence of the variable being measured. Time, weight, length, and other physical measures are the best examples of ratio scales.
  – Interval and ratio data are conceptually different, but the statistical procedures used to analyze the data are exactly the same.
  – With both interval and ratio scales, the data can be summarized using the mean, e.g., "The average mood of the group was 6.5."
Analyzing the Results of Research Investigations
• Comparing group percentages
• Correlating scores of individuals on two variables
• Comparing group means

Comparing Group Percentages
• This type of analysis is used when you are comparing two distinct groups or populations and the variable is nominal, for example, whether males vs. females helped (yes vs. no).
• To analyze whether males and females differ in helping, you would count the number of females who helped and the number of males who helped, and convert each count to a percentage of its group.
• Next, you would run a statistical analysis on the difference between the percentages to see whether the groups are significantly different.

Interpreting Statistical Analysis
• For example: suppose the current study had 30 females and 30 males, and 24 females helped, as opposed to 21 males.
• We would be able to say that 80% of the females (24/30) elected to help, compared to 70% of the males (21/30). Next, we would run a statistical analysis on 80% vs. 70% using a simple t-test.
• The t-test takes into consideration the number of participants in each group. Suppose the test yields t(58) = 1.56.
• With 60 participants (58 degrees of freedom), the t value would have to be at least 2.00 for the difference to be significant at the .05 level (according to the tables at the back of the book, p. 361).
• Therefore, there was no significant difference between males and females in the helping situation.

Correlating Individual Scores
• Used when you don't have distinct groups of subjects.
• Instead, individual subjects are measured on two variables, and each variable has a range of numerical values (e.g., a correlation between personality type and profession).
• Each subject has a score on both variables. The variables may already have numerical values (e.g., test performance and blood pressure).
• Correlation does NOT imply causation, only a relationship.
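The helping example on the Interpreting Statistical Analysis slide can be worked through in a few lines of Python. This is a minimal sketch that assumes each participant's response is coded 1 (helped) or 0 (did not help) and that a pooled-variance independent-samples t-test is run on those codes; the function names are hypothetical. Computed from the counts themselves (24 of 30 females, 21 of 30 males), this version of the test gives t(58) of about 0.89. That is below the critical value of about 2.00, which is the same conclusion the slide reaches with its value of t(58) = 1.56.

```python
# Sketch: percentages and a t-test for the helping example.
# Assumption: helped = 1, did not help = 0 for every participant, and a
# pooled-variance independent-samples t-test is run on those 0/1 codes.
# The function names are illustrative, not from the text.
from math import sqrt

def percent(helped: int, n: int) -> float:
    """Percentage of a group that helped."""
    return 100 * helped / n

def pooled_t_from_counts(x1: int, n1: int, x2: int, n2: int) -> tuple[float, int]:
    """Independent-samples t for two groups of 0/1 scores, given the counts of 1s."""
    p1, p2 = x1 / n1, x2 / n2
    # Sample variance (n - 1 denominator) of a group of 0/1 scores
    var1 = n1 * p1 * (1 - p1) / (n1 - 1)
    var2 = n2 * p2 * (1 - p2) / (n2 - 1)
    # Pool the two variances, weighting each by its degrees of freedom
    pooled = ((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2)
    se = sqrt(pooled * (1 / n1 + 1 / n2))
    # t = group difference / within-group variability; df = N1 + N2 - 2
    return (p1 - p2) / se, n1 + n2 - 2

female_pct = percent(24, 30)
male_pct = percent(21, 30)
t, df = pooled_t_from_counts(24, 30, 21, 30)
print(f"Females: {female_pct:.0f}%  Males: {male_pct:.0f}%")
print(f"t({df}) = {t:.2f}; reaches critical value 2.00? {abs(t) >= 2.00}")
```

For a yes/no outcome, a chi-square test or a two-proportion z-test is a common alternative; on these counts it also gives a nonsignificant result.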
Comparing Group Means
• Compare the mean of one group to the mean of a second group to determine whether there are any differences between the two groups.
• Again, a statistical analysis is performed, taking into account the number of groups and the number of participants, to determine whether the difference is statistically significant. Conclusions can then be drawn about the treatment and control groups and about whether or not the hypothesis was supported.

Which Statistics to Use
• t-statistic
  – Statistical measure for assessing whether or not there is a difference between two groups.
  – Formula: $t = \dfrac{\text{group difference}}{\text{within-group variability}}$
• F-statistic (ANOVA)
  – Statistical measure for assessing whether or not the means of two or more groups are equal.
  – A t-test cannot be used with more than two groups.
  – Also used to analyze an interaction.
  – It is a ratio of two types of variance.
  – Formula: $F = \dfrac{\text{systematic variance (variability between groups)}}{\text{error variance (variability within groups)}}$

Degrees of Freedom
• The critical values for both t and F are based on degrees of freedom, which are the number of participants minus the number of conditions. (For F, this is the within-groups degrees of freedom; F also has between-groups degrees of freedom, equal to the number of groups minus 1.)
• $df = N_1 + N_2 - \text{number of groups}$
• For example: $30 + 30 - 2 = 58$
• The critical value for 58 degrees of freedom can be looked up in the table. The t or F value for the experiment must exceed the critical value in order for the result to be statistically significant.
• Reporting: suppose the result is t(58) = 1.67. If the critical value for 58 degrees of freedom is 2.00, the t value is not significant. This means there is no significant difference between the treatment and the control group, and therefore the hypothesis was not supported.

Descriptive Statistics
• Descriptive statistics allow researchers to make precise statements about the data. Two statistics are needed to describe the data:
  – a single number that describes the central tendency of the data (usually the mean)
  – a number that describes the variability, or how widely the distribution of scores is spread
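The t and F formulas above can be made concrete with a small hand calculation. The sketch below computes a one-way ANOVA F ratio (between-groups variance over within-groups variance) and a pooled independent-samples t, together with their degrees of freedom. The three groups of scores and their names are made-up illustration data, and the function names are hypothetical.

```python
# Sketch: F ratio and t statistic computed by hand on made-up scores.
from statistics import mean

def one_way_anova(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    k, n_total = len(groups), len(all_scores)
    group_means = [mean(g) for g in groups]
    # Systematic variance: how far each group mean lies from the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Error variance: how far each score lies from its own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = k - 1
    df_within = n_total - k  # participants minus conditions
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

def pooled_t(g1: list[float], g2: list[float]) -> tuple[float, int]:
    """Independent-samples t: group difference over within-group variability."""
    n1, n2 = len(g1), len(g2)
    ss1 = sum((x - mean(g1)) ** 2 for x in g1)
    ss2 = sum((x - mean(g2)) ** 2 for x in g2)
    df = n1 + n2 - 2  # N1 + N2 - number of groups
    pooled_var = (ss1 + ss2) / df
    se = (pooled_var * (1 / n1 + 1 / n2)) ** 0.5
    return (mean(g1) - mean(g2)) / se, df

# Hypothetical scores for three conditions
control = [4, 5, 6, 5, 5]
treatment_a = [6, 7, 8, 7, 7]
treatment_b = [5, 6, 7, 6, 6]

f, df_b, df_w = one_way_anova([control, treatment_a, treatment_b])
print(f"F({df_b}, {df_w}) = {f:.2f}")  # F(2, 12) = 10.00
t, df = pooled_t(control, treatment_a)
print(f"t({df}) = {t:.2f}")            # t(8) = -4.47
```

With only two groups, the ANOVA F equals the square of the pooled t, which is why the t-test is the usual choice for two groups and ANOVA takes over beyond that.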
Central Tendency
• Mean: appropriate only when the scores are measured on an interval or ratio scale.
  – Obtained by adding all the scores and dividing by the number of scores.
• Median: the middle score in an ordered distribution, i.e., the score that divides the group in half (50% of scores above and 50% below the median). It is appropriate when using an ordinal scale because it takes into account only the rank order of the scores, and it can also be useful for interval and ratio scales.
  – Order your scores from lowest to highest (or vice versa).
  – Count through the distribution and find the score in the middle. This is the median. (With an even number of scores, the median is the average of the two middle scores.)
  – The median gives you more information than the mode, but it doesn't take into account the magnitude of the scores above and below the median.
  – Two distributions can have the same median and yet be very different.
  – The median is usually used when the mean is not a good choice, for example, when a few extreme scores would distort the mean.
• Mode: the most frequent score in the distribution.
  – It is the only measure of central tendency that can be used for nominal data.
  – It is simple to calculate, but the only information it gives is which score occurs most often. If we gave a test to 15 people and 75 was the score we saw most often (6 people got a 75), the mode would be 75; 6 is the frequency of that score, not the mode.
  – The mode does not use the actual values on the scale; it just indicates the most frequently occurring value.

Variability
• A number that shows the amount of spread in the entire distribution. First calculate the variance, which is based on the deviations of the individual scores from the mean.
• Variance ($s^2$): indicates the average squared deviation of scores from the mean.
$$s^2 = \frac{\sum (X - \bar{X})^2}{n - 1}$$
where $X$ = each individual score, $\bar{X}$ = the mean of the distribution, and $n$ = the number of scores.
• Standard Deviation
  – To calculate the standard deviation (SD), simply take the square root of the variance: $s = \sqrt{s^2}$.
  – Appropriate only for interval and ratio scales, because it uses the actual values of the scores.
  – Tells us how closely the scores cluster around the mean.

Correlation Coefficients
• It is important to know whether a relationship between variables is relatively weak or strong.
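The measures of central tendency and variability above can be checked on a small data set. This sketch uses made-up test scores for 15 people, six of whom scored 75 (matching the shape of the slide's mode example), and Python's standard `statistics` module; the variance is also written out by hand from the $s^2$ formula so the $n - 1$ denominator is visible. The helper name `sample_variance` is hypothetical.

```python
# Sketch: mean, median, mode, variance, and SD for hypothetical test scores.
import statistics
from math import sqrt

# Made-up scores for 15 people; six of them scored 75
scores = [60, 65, 70, 75, 75, 75, 75, 75, 75, 80, 80, 85, 90, 95, 100]

def sample_variance(xs: list[float]) -> float:
    """s^2 = sum((X - mean)^2) / (n - 1), written out step by step."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

mean = statistics.mean(scores)      # sum of scores / number of scores
median = statistics.median(scores)  # middle score of the ordered list
mode = statistics.mode(scores)      # most frequent score value, not its count
variance = sample_variance(scores)
sd = sqrt(variance)                 # standard deviation = square root of variance

# The standard library's sample variance uses the same n - 1 denominator
assert abs(variance - statistics.variance(scores)) < 1e-9

print(f"mean={mean:.2f} median={median} mode={mode} (frequency {scores.count(mode)})")
print(f"variance={variance:.2f} SD={sd:.2f}")

# With an even number of scores, the median averages the two middle scores
print(statistics.median([70, 75, 80, 90]))  # 77.5
```

Note that the mode is 75 (a score on the scale) while `scores.count(mode)` is 6 (how many people got it); these are easy to confuse.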
• A correlation coefficient is a statistic that describes how strongly variables are related to one another.
• To do this, you must use a measure of association.

The Pearson Product-Moment Correlation Coefficient
  – Pearson r provides an index of the direction and magnitude of the relationship between two sets of scores.
  – It is generally used when your measures are on an interval or ratio scale; it can also be used when numbers are assigned to variables (e.g., Myers-Briggs scores and scores for professions).
  – The value of Pearson r can range from -1.00 through 0 to +1.00.
  – The sign of the coefficient tells you the direction of the relationship.
  – The magnitude of the correlation coefficient tells you the degree of linear relationship between your two variables.
  – The sign is unrelated to the magnitude of the relationship; it simply indicates the direction of the relationship.
  – Correlation does not imply causation.

Factors That Affect the Pearson Correlation Coefficient
• Outliers
• Restricted range
  – E.g., the correlation between IQ and GPA is likely to be weaker among college students than among high school students. College students are a more homogeneous group, because IQ must be above a certain level to get into college, so the range of IQ scores is restricted.
• Shape of the distribution

Regression Equations and Predictions
• Simple correlation techniques can establish the direction and degree of the relationship between two variables.
• Linear regression can estimate values of one variable based on knowledge of the values of other variables.
• Equation: $Y = a + bX$
  – Y = predicted score (what we are looking for)
  – b = slope of the regression line (weighting value)
  – X = known score
  – a = constant (y-intercept)

Multiple Correlation
• Used when you want to focus on more than two variables at a time (symbolized as R instead of r).
• Used to combine a number of predictor variables to increase the accuracy of prediction of a given criterion.
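Pearson r and the regression equation $Y = a + bX$ can both be computed from the same sums of deviations. The sketch below uses made-up paired scores (hypothetical hours studied as X and quiz score as Y) and the standard least-squares formulas: $b$ is the sum of cross-products over the sum of squared X deviations, and $a = \bar{Y} - b\bar{X}$. All names are illustrative.

```python
# Sketch: Pearson r and a least-squares regression line Y = a + bX.
from math import sqrt

# Hypothetical paired scores: hours studied (X) and quiz score (Y)
hours = [1, 2, 3, 4, 5]
scores = [2, 4, 5, 4, 5]

def _deviation_sums(xs, ys):
    """Means, plus sums of cross-products and squares of deviations from the means."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return mx, my, sxy, sxx, syy

def pearson_r(xs, ys):
    """Pearson r: ranges from -1.00 to +1.00; the sign gives the direction."""
    _, _, sxy, sxx, syy = _deviation_sums(xs, ys)
    return sxy / sqrt(sxx * syy)

def regression_line(xs, ys):
    """Least-squares intercept a and slope b for the equation Y = a + bX."""
    mx, my, sxy, sxx, _ = _deviation_sums(xs, ys)
    b = sxy / sxx      # slope (weighting value)
    a = my - b * mx    # constant (y-intercept)
    return a, b

r = pearson_r(hours, scores)
a, b = regression_line(hours, scores)
predicted = a + b * 6  # predicted Y for a hypothetical new X of 6 hours
print(f"r = {r:.2f}; Y = {a:.2f} + {b:.2f}X; predicted Y at X = 6: {predicted:.2f}")

# Reversing the direction of Y flips the sign of r but not its magnitude
print(f"{pearson_r(hours, [-y for y in scores]):.2f}")
```

On Python 3.10 or later, `statistics.correlation` and `statistics.linear_regression` compute the same quantities; the hand-written version is shown so each term of the formulas is visible.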
• Using multiple predictor variables usually results in greater accuracy of prediction than using a single predictor alone, as in the simple regression equation $Y = a + bX$.
• $Y = a + b_1X_1 + b_2X_2 + b_3X_3 + b_4X_4$

Frequency Distribution
• Indicates the number of individuals who receive each possible score.
• Graphing
  – Pie charts
  – Bar graphs
  – Frequency polygons
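A frequency distribution counts how many individuals received each possible score. The sketch below builds one with `collections.Counter` from made-up ratings, assuming a 1 to 7 rating scale, and prints a simple text bar graph; the function name `frequency_distribution` is hypothetical. Passing the full range of possible scores keeps values that nobody received in the table with a count of 0, which a plain `Counter` would leave out.

```python
# Sketch: a frequency distribution and a text bar graph for made-up ratings.
from collections import Counter

# Hypothetical ratings from 12 participants on an assumed 1-7 scale
ratings = [3, 4, 4, 5, 2, 3, 4, 3, 5, 4, 1, 4]

def frequency_distribution(scores, possible_scores):
    """Map every possible score to the number of individuals who received it."""
    counts = Counter(scores)
    return {value: counts[value] for value in possible_scores}  # missing -> 0

freq = frequency_distribution(ratings, range(1, 8))
for value, count in freq.items():
    # One '*' per individual, like a horizontal bar graph
    print(f"{value}: {'*' * count} ({count})")
```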