Chapter 8 Part A PowerPoint


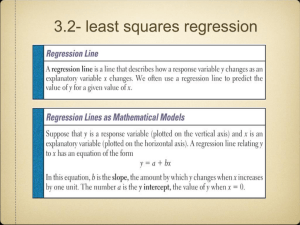

Chapter 8: Linear Regression, Part A
A.P. Statistics

Linear Model
• Making a scatterplot allows you to describe the relationship between two quantitative variables.
• However, it is often more useful to use that linear relationship to predict or estimate values based on the relationship in the real data.
• We use the linear model to make those predictions and estimations.

Normal Model vs. Linear Model
Normal Model
• Allows us to make predictions and estimations about the population and future events.
• It is a model of real data, as long as the data have a nearly symmetric distribution.
Linear Model
• Allows us to make predictions and estimations about the population and future events.
• It is a model of real data, as long as the data show a linear relationship between two quantitative variables.

Linear Model and the Least Squares Regression Line
• To make this model, we need to find a line of best fit.
• This line of best fit is the "predictor line": we use it to predict or estimate the response variable, given the explanatory variable.
• The line is chosen according to how well it minimizes the residuals.

Residuals and the Least Squares Regression Line
• The residual is the difference between the observed value and the predicted value: residual $= y - \hat{y}$.
• It tells us how far off the model's prediction is at that point.
• Negative residual: the predicted value is too big (overestimation).
• Positive residual: the predicted value is too small (underestimation).

[Figure: Residuals]

Least Squares Regression Line
• The LSRL is the line for which the sum of the squared residuals is as small as possible.
• Why not just find a line where the sum of the residuals is the smallest?
– Positive and negative residuals cancel: the residuals of the LSRL (and of any line through $(\bar{x}, \bar{y})$) always sum to zero, so the plain sum cannot pick out a best line.
– Squaring makes every residual non-negative, so the squares can be added without canceling.
– Squaring also emphasizes the large residuals, which have a big impact on the correlation and the regression line.

[Figure: Scatterplot of Math and Verbal SAT scores]
[Figure: Scatterplot of Math and Verbal SAT scores with an incorrect LSRL]
[Figure: Scatterplot of Math and Verbal SAT scores with the correct LSRL]

Simple Regression Model of Collection 1
Response attribute (numeric): Verbal_SAT
Predictor attribute (numeric): Math_SAT
Sample count: 6
Equation of least-squares regression line: Verbal_SAT = 1.11024 Math_SAT + 75.424
Correlation coefficient, r = 0.954082
r-squared = 0.91027, indicating that 91.027% of the variation in Verbal_SAT is accounted for by Math_SAT.
The best estimate for the slope is 1.11024 +/- 0.4839 at a 95% confidence level. (The standard error of the slope is 0.174288.)
When Math_SAT = 0, the predicted value for a future observation of Verbal_SAT is 75.4244 +/- 288.073.

Correlation and the Line (Standardized Data)
• The LSRL passes through $(\bar{z}_x, \bar{z}_y) = (0, 0)$.
• The LSRL equation is $\hat{z}_y = r z_x$: "moving one standard deviation from the mean in x, we can expect to move about r standard deviations from the mean in y."

Interpreting the Standardized Slope of the LSRL
LSRL of the scatterplot: $\hat{z}_{\text{fat}} = 0.83\, z_{\text{protein}}$
For every standard deviation above (below) the mean a sandwich is in protein, we predict that its fat content is 0.83 standard deviations above (below) the mean.

LSRL That Models Data in Real Units
• $\hat{y} = b_0 + b_1 x$, where $b_0$ is the y-intercept and $b_1$ is the slope.
• $b_1 = r \dfrac{s_y}{s_x}$ and $b_0 = \bar{y} - b_1 \bar{x}$.
Protein (x): $\bar{x} = 17.2$ g, $s_x = 14.0$ g
Fat (y): $\bar{y} = 23.5$ g, $s_y = 16.4$ g
$r = 0.83$
LSRL equation: $\widehat{\text{fat}} = 6.8 + 0.97\,\text{protein}$, since $b_1 = 0.83 \times \frac{16.4}{14.0} \approx 0.97$ and $b_0 = 23.5 - 0.97 \times 17.2 \approx 6.8$.

Interpreting the LSRL
• Slope: One additional gram of protein is associated with an additional 0.97 grams of fat, on average.
• y-intercept: An item that has zero grams of protein is predicted to have 6.8 grams of fat.
ALWAYS CHECK TO SEE IF THE Y-INTERCEPT MAKES SENSE IN THE CONTEXT OF THE PROBLEM AND DATA.

Properties of the LSRL
Because the Sum of Squared Errors (SSE, the same as the least squares sum) is as small as possible, this line has the following properties:
• The sum and the mean of the residuals are 0.
• The variation in the residuals is as small as possible.
• The line contains the point of averages $(\bar{x}, \bar{y})$.

Assumptions and Conditions for Using the LSRL
• Quantitative Variable Condition
• Straight Enough Condition: if not, re-express the data.
• Outlier Condition: if there are outliers, fit the model with and without them and compare.

Residuals and the LSRL
• Residuals should be used to check whether a linear model, and the LSRL that was calculated, is appropriate.
• Residuals are the part of the data that has not been modeled by our linear model.

Residuals and the LSRL: What to Look for in a Residual Plot to Satisfy the Straight Enough Condition
• A scatterplot of the residuals vs. the x-values is a good way to check the Straight Enough Condition, which determines whether a linear model is appropriate.
• The plot should show no patterns and no interesting features (like direction or shape); it should stretch horizontally with about the same scatter throughout, with no bends or outliers.
• The distribution of residuals should be symmetric if the original data are straight enough.
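The properties of the LSRL listed above can be checked numerically. The following is a minimal Python sketch, not the method used in the slides: it fits the line with the closed-form least squares formulas, then confirms that the residuals sum to zero, that the line passes through $(\bar{x}, \bar{y})$, and that the slope equals $r\,s_y/s_x$. The data values and the helper names `lsrl`, `correlation`, `residuals`, and `sse` are hypothetical, chosen only for illustration. It also shows why the plain sum of residuals is not used: a different line through the means also has residuals summing to zero, but a larger SSE.

```python
from statistics import mean, stdev


def lsrl(xs, ys):
    """Least squares intercept and slope for y-hat = b0 + b1 * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar  # forces the line through (x-bar, y-bar)
    return b0, b1


def correlation(xs, ys):
    """Correlation r: the sum of z_x * z_y divided by n - 1."""
    x_bar, y_bar = mean(xs), mean(ys)
    sx, sy = stdev(xs), stdev(ys)
    return sum((x - x_bar) / sx * (y - y_bar) / sy for x, y in zip(xs, ys)) / (len(xs) - 1)


def residuals(xs, ys, b0, b1):
    """Residual = observed y minus predicted y-hat, for each point."""
    return [y - (b0 + b1 * x) for x, y in zip(xs, ys)]


def sse(xs, ys, b0, b1):
    """Sum of squared residuals for the line y-hat = b0 + b1 * x."""
    return sum(e ** 2 for e in residuals(xs, ys, b0, b1))


# Hypothetical data for illustration only (not from the slides).
xs = [2, 4, 5, 7, 9, 11]
ys = [3, 6, 6, 9, 10, 14]

b0, b1 = lsrl(xs, ys)
res = residuals(xs, ys, b0, b1)
r = correlation(xs, ys)

# The residuals sum (and therefore average) to zero, up to rounding error.
print("sum of residuals ~ 0:", abs(sum(res)) < 1e-9)
# The line contains the point of averages (x-bar, y-bar).
print("passes through means:", abs(b0 + b1 * mean(xs) - mean(ys)) < 1e-9)
# The slope agrees with b1 = r * s_y / s_x.
print("b1 = r*sy/sx:", abs(b1 - r * stdev(ys) / stdev(xs)) < 1e-9)

# Another line through (x-bar, y-bar) with a slightly steeper slope:
# its residuals also sum to zero, but its SSE is larger than the LSRL's.
alt_b1 = b1 + 0.1
alt_b0 = mean(ys) - alt_b1 * mean(xs)
print("alt residual sum ~ 0:", abs(sum(residuals(xs, ys, alt_b0, alt_b1))) < 1e-9)
print("LSRL has smaller SSE:", sse(xs, ys, b0, b1) < sse(xs, ys, alt_b0, alt_b1))
```

Plotting `res` against `xs` would give the residual plot used for the Straight Enough Condition.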
Residuals, Again
When analyzing the relationship between two variables (thus far), ALWAYS:
• Plot the data and describe the relationship.*
• Check the three regression assumptions/conditions: Quantitative Data, Straight Enough, and Outlier.
• Compute the correlation coefficient.
• Compute the least squares regression line.
• Check the residual plot (again).
• Interpret the relationship (intercept, slope, correlation, and general conclusion).
* Calculate the mean and standard deviation for each variable, if possible.

Summary statistics for Collection 1 (S1 = mean, S2 = s):
• Final: S1 = 84.933333, S2 = 6.540715
• Midterm: S1 = 83.466667, S2 = 7.4437574

Simple Regression Model of Collection 1
Response attribute (numeric): Final
Predictor attribute (numeric): Midterm
Sample count: 15
Equation of least-squares regression line: Final = 0.752149 Midterm + 22.154
Correlation coefficient, r = 0.855994
r-squared = 0.73273, indicating that 73.273% of the variation in Final is accounted for by Midterm.
The best estimate for the slope is 0.752149 +/- 0.272187 at a 95% confidence level. (The standard error of the slope is 0.125991.)
When Midterm = 0, the predicted value for a future observation of Final is 22.154 +/- 24.0299.
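As a cross-check, the slope, intercept, and r-squared in this output can be recovered from the summary statistics alone, using $b_1 = r\,s_y/s_x$, $b_0 = \bar{y} - b_1\bar{x}$, and $r^2$. A minimal Python sketch; the helper name `lsrl_from_summary` is hypothetical, and the same function reproduces the protein/fat line from the sandwich example.

```python
def lsrl_from_summary(x_bar, s_x, y_bar, s_y, r):
    """Intercept and slope of y-hat = b0 + b1 * x from summary statistics."""
    b1 = r * s_y / s_x        # slope: b1 = r * s_y / s_x
    b0 = y_bar - b1 * x_bar   # intercept: b0 = y-bar - b1 * x-bar
    return b0, b1


# Final (y) on Midterm (x): means, SDs, and r copied from the Collection 1 output.
b0_final, b1_final = lsrl_from_summary(83.466667, 7.4437574, 84.933333, 6.540715, 0.855994)
r_squared = 0.855994 ** 2

# Fat (y) on protein (x): summary statistics from the sandwich example.
b0_fat, b1_fat = lsrl_from_summary(17.2, 14.0, 23.5, 16.4, 0.83)

print(f"Final-hat = {b0_final:.3f} + {b1_final:.6f} Midterm, r^2 = {r_squared:.5f}")
# prints: Final-hat = 22.154 + 0.752149 Midterm, r^2 = 0.73273
print(f"fat-hat = {b0_fat:.1f} + {b1_fat:.2f} protein")
# prints: fat-hat = 6.8 + 0.97 protein
```

The 95% interval for the slope also needs a t critical value with $n - 2$ degrees of freedom, so it is not reproduced in this sketch.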