Bayes Rule

Rev. Thomas Bayes

(1702-1761)

• The product rule gives us two ways to factor a joint probability:

$$P(A, B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$$

• Therefore,

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

• Why is this useful?

– Can get diagnostic probability P(Cavity | Toothache) from causal

probability P(Toothache | Cavity)

– Can update our beliefs based on evidence

– Key tool for probabilistic inference

Bayes Rule example

• Marie is getting married tomorrow, at an outdoor ceremony

in the desert. In recent years, it has rained only 5 days each

year (5/365 = 0.014). Unfortunately, the weatherman has

predicted rain for tomorrow. When it actually rains, the

weatherman correctly forecasts rain 90% of the time. When

it doesn't rain, he incorrectly forecasts rain 10% of the time.

What is the probability that it will rain on Marie's wedding?

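$$P(\text{Rain} \mid \text{Predict}) = \frac{P(\text{Predict} \mid \text{Rain})\,P(\text{Rain})}{P(\text{Predict})}$$

$$= \frac{P(\text{Predict} \mid \text{Rain})\,P(\text{Rain})}{P(\text{Predict} \mid \text{Rain})\,P(\text{Rain}) + P(\text{Predict} \mid \neg\text{Rain})\,P(\neg\text{Rain})}$$

$$= \frac{0.9 \times 0.014}{0.9 \times 0.014 + 0.1 \times 0.986} = \frac{0.0126}{0.0126 + 0.0986} \approx 0.111$$

(The final value 0.111 uses the unrounded prior 5/365; with the prior rounded to 0.014, the ratio comes out to about 0.113.)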

Bayes rule: Another example

• 1% of women at age forty who participate in routine

screening have breast cancer. 80% of women with

breast cancer will get positive mammographies.

9.6% of women without breast cancer will also get

positive mammographies. A woman in this age

group had a positive mammography in a routine

screening. What is the probability that she actually

has breast cancer?

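$$P(\text{Cancer} \mid \text{Positive}) = \frac{P(\text{Positive} \mid \text{Cancer})\,P(\text{Cancer})}{P(\text{Positive})}$$

$$= \frac{P(\text{Positive} \mid \text{Cancer})\,P(\text{Cancer})}{P(\text{Positive} \mid \text{Cancer})\,P(\text{Cancer}) + P(\text{Positive} \mid \neg\text{Cancer})\,P(\neg\text{Cancer})}$$

$$= \frac{0.8 \times 0.01}{0.8 \times 0.01 + 0.096 \times 0.99} = \frac{0.008}{0.008 + 0.09504} \approx 0.0776$$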
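The two worked examples above follow the same pattern, so they can be checked with one small function. This is a minimal sketch for a binary hypothesis; the function name `posterior` and its parameter names are illustrative choices, not something from the slides.

```python
def posterior(prior, p_evidence_given_h, p_evidence_given_not_h):
    """Return P(H | evidence) for a binary hypothesis H using Bayes' rule."""
    # Numerator: P(evidence | H) P(H)
    joint_h = p_evidence_given_h * prior
    # Other term of the denominator: P(evidence | not H) P(not H)
    joint_not_h = p_evidence_given_not_h * (1.0 - prior)
    return joint_h / (joint_h + joint_not_h)


# Wedding example: prior 5/365, forecast hit rate 0.9, false alarm rate 0.1
rain = posterior(prior=5 / 365, p_evidence_given_h=0.9, p_evidence_given_not_h=0.1)

# Mammography example: prior 0.01, true positive rate 0.8, false positive rate 0.096
cancer = posterior(prior=0.01, p_evidence_given_h=0.8, p_evidence_given_not_h=0.096)

print(f"P(Rain | Predict)    = {rain:.3f}")
print(f"P(Cancer | Positive) = {cancer:.4f}")
```

In both cases the posterior stays low even though the test is fairly accurate, because the prior is small.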

Independence

• Two events A and B are independent if and only if

$P(A \wedge B) = P(A)\,P(B)$

– In other words, P(A | B) = P(A) and P(B | A) = P(B)

– This is an important simplifying assumption for

modeling, e.g., Toothache and Weather can be

assumed to be independent

• Are two mutually exclusive events independent?

– No, but for mutually exclusive events we have

$P(A \vee B) = P(A) + P(B)$

• Conditional independence: A and B are conditionally independent given C iff $P(A \wedge B \mid C) = P(A \mid C)\,P(B \mid C)$
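The definitions above can be checked numerically on a tiny sample space. The sketch below assumes a single roll of a fair six-sided die, which is an illustrative setup not taken from the slides; events are represented as sets of outcomes.

```python
from fractions import Fraction

OUTCOMES = frozenset(range(1, 7))  # assumed sample space: one fair die roll


def prob(event):
    """Probability of an event given as a set of die outcomes."""
    return Fraction(len(event & OUTCOMES), len(OUTCOMES))


def independent(a, b):
    """True iff P(A and B) == P(A) P(B)."""
    return prob(a & b) == prob(a) * prob(b)


even = {2, 4, 6}
at_most_two = {1, 2}
odd = {1, 3, 5}

# Independent pair: P(even and <=2) = 1/6 = (1/2)(1/3)
print(independent(even, at_most_two))

# Mutually exclusive pair: P(even and odd) = 0, but P(even) P(odd) = 1/4
print(independent(even, odd))

# For mutually exclusive events the probabilities add instead
print(prob(even | odd) == prob(even) + prob(odd))
```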

Conditional independence: Example

• Toothache: boolean variable indicating whether the patient has a

toothache

• Cavity: boolean variable indicating whether the patient has a cavity

• Catch: whether the dentist’s probe catches in the cavity

• If the patient has a cavity, the probability that the probe catches in it

doesn't depend on whether he/she has a toothache

P(Catch | Toothache, Cavity) = P(Catch | Cavity)

• Therefore, Catch is conditionally independent of Toothache given Cavity

• Likewise, Toothache is conditionally independent of Catch given Cavity

P(Toothache | Catch, Cavity) = P(Toothache | Cavity)

• Equivalent statement:

P(Toothache, Catch | Cavity) = P(Toothache | Cavity) P(Catch | Cavity)

Conditional independence: Example

• How many numbers do we need to represent the joint probability table P(Toothache, Cavity, Catch)?

$2^3 - 1 = 7$ independent entries

• Write out the joint distribution using chain rule:

P(Toothache, Catch, Cavity)

= P(Cavity) P(Catch | Cavity) P(Toothache | Catch, Cavity)

= P(Cavity) P(Catch | Cavity) P(Toothache | Cavity)

• How many numbers do we need to represent these

distributions?

1 + 2 + 2 = 5 independent numbers

• In most cases, the use of conditional independence

reduces the size of the representation of the joint

distribution from exponential in n to linear in n
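As a rough illustration of the factorization above, the sketch below rebuilds the full 8-entry joint table from just five numbers and confirms that Catch is conditionally independent of Toothache given Cavity. The five probability values are made up for the example and do not come from the slides.

```python
from itertools import product

# Five hypothetical parameters (1 + 2 + 2), chosen only for illustration
p_cavity = 0.2                               # P(Cavity = true)
p_catch_given = {True: 0.9, False: 0.2}      # P(Catch = true | Cavity)
p_toothache_given = {True: 0.6, False: 0.1}  # P(Toothache = true | Cavity)


def bernoulli(p_true, value):
    """P(V = value) for a boolean variable with P(V = true) = p_true."""
    return p_true if value else 1.0 - p_true


def joint(toothache, catch, cavity):
    """P(Toothache, Catch, Cavity) = P(Cavity) P(Catch | Cavity) P(Toothache | Cavity)."""
    return (bernoulli(p_cavity, cavity)
            * bernoulli(p_catch_given[cavity], catch)
            * bernoulli(p_toothache_given[cavity], toothache))


# Full joint table, keyed by (toothache, catch, cavity)
table = {key: joint(*key) for key in product([True, False], repeat=3)}
total = sum(table.values())


def p_catch_given_toothache_cavity(toothache, cavity):
    """P(Catch = true | Toothache, Cavity), computed from the joint table."""
    caught = table[(toothache, True, cavity)]
    not_caught = table[(toothache, False, cavity)]
    return caught / (caught + not_caught)


print(len(table), round(total, 10))
print(p_catch_given_toothache_cavity(True, True),
      p_catch_given_toothache_cavity(False, True))
```

The last line prints the same value for both settings of Toothache, which is what P(Catch | Toothache, Cavity) = P(Catch | Cavity) requires.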

Probabilistic inference

• Suppose the agent has to make a decision about

the value of an unobserved query variable X given

some observed evidence variable(s) E = e

– Partially observable, stochastic, episodic environment

– Examples: X = {spam, not spam}, e = email message

X = {zebra, giraffe, hippo}, e = image features

• Bayes decision theory:

– The agent has a loss function, which is 0 if the value of

X is guessed correctly and 1 otherwise

– The estimate of X that minimizes expected loss is the one

that has the greatest posterior probability P(X = x | e)

– This is the Maximum a Posteriori (MAP) decision

MAP decision

• Value x of X that has the highest posterior

probability given the evidence E = e:

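$$x^* = \arg\max_x P(X = x \mid E = e) = \arg\max_x \frac{P(E = e \mid X = x)\,P(X = x)}{P(E = e)} = \arg\max_x P(E = e \mid X = x)\,P(X = x)$$

$$\underbrace{P(x \mid e)}_{\text{posterior}} \propto \underbrace{P(e \mid x)}_{\text{likelihood}}\;\underbrace{P(x)}_{\text{prior}}$$

• Maximum likelihood (ML) decision:

$$x^* = \arg\max_x P(e \mid x)$$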
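The difference between the two decision rules shows up clearly when the prior is lopsided. The sketch below reuses the numbers from the wedding forecast example earlier in the slides (prior 5/365, forecast accuracy 0.9, false alarm rate 0.1); the dictionary layout and variable names are illustrative.

```python
# Hypotheses x and their prior probabilities P(x)
prior = {"rain": 5 / 365, "no rain": 360 / 365}

# Likelihood of the observed evidence e = "forecast says rain": P(e | x)
likelihood = {"rain": 0.9, "no rain": 0.1}

# ML decision: maximize P(e | x), ignoring the prior
ml_decision = max(likelihood, key=lambda x: likelihood[x])

# MAP decision: maximize P(e | x) P(x); P(e) is the same for every x, so it is dropped
map_decision = max(prior, key=lambda x: likelihood[x] * prior[x])

print("ML: ", ml_decision)
print("MAP:", map_decision)
```

ML picks "rain" because the forecast is more likely under rain, while MAP picks "no rain" because rain is so rare in the desert that the prior outweighs the forecast.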

Naïve Bayes model

• Suppose we have many different types of observations

(symptoms, features) E1, …, En that we want to use to

obtain evidence about an underlying hypothesis X

• MAP decision involves estimating

$$P(X \mid E_1, \ldots, E_n) \propto P(E_1, \ldots, E_n \mid X)\,P(X)$$

– If each feature $E_i$ can take on k values, how many entries are in the (conditional) joint probability table $P(E_1, \ldots, E_n \mid X = x)$?

• We can make the simplifying assumption that the

different features are conditionally independent

given the hypothesis:

$$P(E_1, \ldots, E_n \mid X) = \prod_{i=1}^{n} P(E_i \mid X)$$

– If each feature can take on k values, what is the complexity of

storing the resulting distributions?
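One way to get a feel for the storage question is simply to count table entries for each value x of the hypothesis. The sketch below counts raw entries: a full table over n features with k values each has k^n entries, while n separate per-feature tables have n·k entries in total. The function names are illustrative.

```python
def full_table_entries(n, k):
    """Entries in P(E1, ..., En | X = x): one per joint assignment of n k-valued features."""
    return k ** n


def naive_bayes_entries(n, k):
    """Entries in the n separate tables P(Ei | X = x), each with k values."""
    return n * k


for n in (3, 10, 20):
    print(n, full_table_entries(n, 2), naive_bayes_entries(n, 2))
```

The first count grows exponentially in n and the second linearly, which is the reduction promised by the conditional independence assumption.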

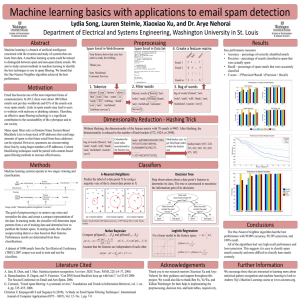

Naïve Bayes Spam Filter

• MAP decision: to minimize the probability of error, we should

classify a message as spam if

P(spam | message) > P(¬spam | message)

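• We have $P(\text{spam} \mid \text{message}) \propto P(\text{message} \mid \text{spam})\,P(\text{spam})$ and $P(\neg\text{spam} \mid \text{message}) \propto P(\text{message} \mid \neg\text{spam})\,P(\neg\text{spam})$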

Naïve Bayes Spam Filter

• We need to find P(message | spam) P(spam) and

P(message | ¬spam) P(¬spam)

• The message is a sequence of words (w1, …, wn)

• Bag of words representation

– The order of the words in the message is not important

– Each word is conditionally independent of the others given

message class (spam or not spam)

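$$P(\text{message} \mid \text{spam}) = P(w_1, \ldots, w_n \mid \text{spam}) = \prod_{i=1}^{n} P(w_i \mid \text{spam})$$

• Our filter will classify the message as spam if

$$P(\text{spam}) \prod_{i=1}^{n} P(w_i \mid \text{spam}) > P(\neg\text{spam}) \prod_{i=1}^{n} P(w_i \mid \neg\text{spam})$$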
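The decision rule above can be sketched in a few lines. Two things here are assumptions rather than part of the slides: the parameter values are invented for illustration, and the comparison is done on sums of logarithms instead of raw products, a common substitution that gives the same decision (the logarithm is monotonic) while avoiding numerical underflow on long messages.

```python
import math

# Hypothetical parameters, chosen only for illustration
p_spam = 0.33
p_word_given_spam = {"free": 0.05, "money": 0.04, "meeting": 0.001}
p_word_given_not_spam = {"free": 0.005, "money": 0.004, "meeting": 0.02}


def log_score(words, class_prior, word_likelihoods):
    """log P(class) + sum_i log P(w_i | class) for a bag of words."""
    return math.log(class_prior) + sum(math.log(word_likelihoods[w]) for w in words)


def is_spam(words):
    """MAP decision: spam iff the spam score exceeds the not-spam score."""
    spam_score = log_score(words, p_spam, p_word_given_spam)
    not_spam_score = log_score(words, 1.0 - p_spam, p_word_given_not_spam)
    return spam_score > not_spam_score


print(is_spam(["free", "money"]))
print(is_spam(["meeting"]))
```

Because the score is a sum over words, reordering the message does not change the result, which is the bag-of-words assumption in action. A word missing from the tables raises a `KeyError` in this sketch.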

Bag of words illustration

US Presidential Speeches Tag Cloud

http://chir.ag/projects/preztags/

Naïve Bayes Spam Filter

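$$\underbrace{P(\text{spam} \mid w_1, \ldots, w_n)}_{\text{posterior}} \propto \underbrace{P(\text{spam})}_{\text{prior}} \; \underbrace{\prod_{i=1}^{n} P(w_i \mid \text{spam})}_{\text{likelihood}}$$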

Parameter estimation

• In order to classify a message, we need to know the prior

P(spam) and the likelihoods P(word | spam) and

P(word | ¬spam)

– These are the parameters of the probabilistic model

– How do we obtain the values of these parameters?

[Figure: example parameters. Prior: P(spam) = 0.33, P(¬spam) = 0.67. Likelihoods: P(word | spam) and P(word | ¬spam).]

Parameter estimation

• How do we obtain the prior P(spam) and the likelihoods

P(word | spam) and P(word | ¬spam)?

– Empirically: use training data

$$P(\text{word} \mid \text{spam}) = \frac{\text{\# of word occurrences in spam messages}}{\text{total \# of words in spam messages}}$$

– This is the maximum likelihood (ML) estimate, or estimate that maximizes the likelihood of the training data:

$$\prod_{d=1}^{D} \prod_{i=1}^{n_d} P(w_{d,i} \mid \text{class}_{d,i})$$

d: index of training document, i: index of a word

• Parameter smoothing: dealing with words that were never

seen or seen too few times

– Laplacian smoothing: pretend you have seen every vocabulary word

one more time than you actually did

$$P(\text{word} \mid \text{spam}) = \frac{\text{\# of word occurrences in spam messages} + 1}{\text{total \# of words in spam messages} + V}$$

(V: total number of unique words)
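The smoothed estimate above can be sketched as a counting routine. The toy training messages and the function name `estimate_likelihoods` are made up for illustration, and the vocabulary is assumed to be the set of unique words across all training messages.

```python
from collections import Counter


def estimate_likelihoods(messages, vocabulary):
    """Laplace-smoothed P(word | class) from the tokenized messages of one class."""
    counts = Counter(w for message in messages for w in message if w in vocabulary)
    total_words = sum(counts.values())
    v = len(vocabulary)
    # Add 1 to every count, and V to the denominator so the estimates sum to 1
    return {w: (counts[w] + 1) / (total_words + v) for w in vocabulary}


# Hypothetical training data
spam_messages = [["free", "money", "free"], ["money", "now"]]
not_spam_messages = [["meeting", "now"], ["lunch", "meeting", "tomorrow"]]

vocabulary = {w for message in spam_messages + not_spam_messages for w in message}
p_word_given_spam = estimate_likelihoods(spam_messages, vocabulary)

print(p_word_given_spam["free"])     # seen twice in spam: (2 + 1) / (5 + 6)
print(p_word_given_spam["meeting"])  # never seen in spam: (0 + 1) / (5 + 6)
```

Without the added counts, "meeting" would get probability zero under spam, and a single occurrence would force the whole product for that class to zero.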

Summary of model and parameters

• Naïve Bayes model:

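$$P(\text{spam} \mid \text{message}) \propto P(\text{spam}) \prod_{i=1}^{n} P(w_i \mid \text{spam})$$

$$P(\neg\text{spam} \mid \text{message}) \propto P(\neg\text{spam}) \prod_{i=1}^{n} P(w_i \mid \neg\text{spam})$$

• Model parameters:

| prior | Likelihood of spam | Likelihood of ¬spam |
|---|---|---|
| $P(\text{spam})$ | $P(w_1 \mid \text{spam})$ | $P(w_1 \mid \neg\text{spam})$ |
| $P(\neg\text{spam})$ | $P(w_2 \mid \text{spam})$ | $P(w_2 \mid \neg\text{spam})$ |
| | … | … |
| | $P(w_n \mid \text{spam})$ | $P(w_n \mid \neg\text{spam})$ |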

Bag-of-word models for images

Csurka et al. (2004), Willamowski et al. (2005), Grauman & Darrell (2005), Sivic et al. (2003, 2005)

1. Extract image features

2. Learn “visual vocabulary”

3. Map image features to visual words
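Step 3 can be sketched as follows, under two assumptions that the slides do not spell out: the visual vocabulary is a list of representative feature vectors, and each image feature is mapped to the nearest vocabulary vector by Euclidean distance. The vocabulary and feature values are invented, and how the vocabulary is learned (step 2) is left out.

```python
from collections import Counter


def nearest_word(feature, vocabulary):
    """Index of the vocabulary vector closest to `feature` (squared Euclidean distance)."""
    def squared_distance(center):
        return sum((f - c) ** 2 for f, c in zip(feature, center))
    return min(range(len(vocabulary)), key=lambda j: squared_distance(vocabulary[j]))


def bag_of_visual_words(features, vocabulary):
    """Histogram counting how many features map to each visual word."""
    counts = Counter(nearest_word(f, vocabulary) for f in features)
    return [counts[j] for j in range(len(vocabulary))]


# Hypothetical 2-D visual vocabulary and image features
vocabulary = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
features = [(0.1, 0.0), (0.9, 1.1), (0.2, 0.1), (0.1, 0.8)]

histogram = bag_of_visual_words(features, vocabulary)
print(histogram)
```

The resulting histogram plays the same role for an image that word counts play for a message.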

Bayesian decision making: Summary

• Suppose the agent has to make decisions about

the value of an unobserved query variable X

based on the values of an observed evidence

variable E

• Inference problem: given some evidence E = e,

what is P(X | e)?

• Learning problem: estimate the parameters of

the probabilistic model P(X | E) given a training

sample {(x1,e1), …, (xn,en)}