Research Methods lecture 6 and 7 Slides


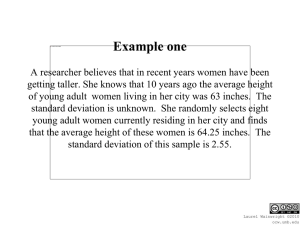

Session 6: Basic Statistics Part 1 (and how not to be frightened by the word)

Prof Neville Yeomans
Director of Research, Austin LifeSciences

So now we’ve got some results. How can we make sense of them?

What I will cover (over 2 sessions, August 28 and September 25)
• Sampling populations
• Describing the data in the samples
• How accurately do those data reflect the ‘real’ population the samples were taken from?
• We’ve compared two groups. Are they really from different populations, or are they samples from the same population, with the measured differences just due to chance?
• Tests to answer the question ‘Are the differences likely to be just due to chance?’
  – Data consisting of values (e.g. hemoglobin concentration) (‘continuous variables’)
  – Data consisting of whole numbers: frequencies, proportions (percentages)
  – Tests for just two groups; tests for multiple groups
• Tests that examine relationships between two or more variables (correlation, regression analysis, life-table)
• How many subjects should I study to find the answer to my question? (Power calculations)
• Statistical packages and other resources

We’ve got some numbers. How are we going to describe them to others?

Suppose we’ve measured the heights of a number of females (‘a sample’) picked off the street in Heidelberg.

| Subject # | Height (cm) | Subject # | Height (cm) | Subject # | Height (cm) |
|---|---|---|---|---|---|
| 1 | 179 | 9 | 156 | 17 | 167 |
| 2 | 162 | 10 | 157 | 18 | 173 |
| 3 | 150 | 11 | 161 | 19 | 159 |
| 4 | 155 | 12 | 164 | 20 | 170 |
| 5 | 168 | 13 | 165 | 21 | 168 |
| 6 | 175 | 14 | 159 | 22 | 162 |
| 7 | 159 | 15 | 161 | 23 | 171 |
| 8 | 152 | 16 | 163 | 24 | 164 |

How could we more concisely describe these data, using just one or two numbers that would give us useful information about the sample as a whole?
1. A measure of ‘central tendency’
2. A measure of how widely the values are spread

[Figure: Heights of (sample of) Heidelberg women. x-axis: Person number; y-axis: Height (cm)]

[Figure: Frequency of women with each height in sample. x-axis: Height; y-axis: Frequency]
• The median (middle value) = 162.5
• The range (150-179): a poor measure for describing the whole population because it depends on sample size; the range is likely to be wider with larger samples
• Interquartile range (25th percentile to 75th percentile of values: 159-168): what we should always use with the median; it’s largely independent of sample size

[Figure: Frequency distribution of height of Heidelberg women (amalgamated into 3 cm ranges, e.g. 141-143, 144-146 etc.). x-axis: Height; y-axis: Frequency]
• The mean (average) (Σx/N) = 163.3 cm
• The Standard Deviation* = ± 7.2 cm
  – doesn’t vary much with sample size (except very small samples)
  – approx. 68% of values will lie within ± 1 SD either side of the mean**
  – approx. 95% of values will lie within ± 2 SD either side of the mean**
*In Excel, enter formula ‘=STDEV(range of cells)’
**Provided the population is ‘normally distributed’

The ‘Normal distribution’
Mean = Median in a ‘perfect’ normal distribution
[Figure: normal curve. x-axis: Standard deviations away from mean]

We measured the mean height of our sample of 25 women ... (it was 163.3 cm)
• But what is the average height of the whole population, of ALL Heidelberg women?
• We didn’t have time or resources to track them all down; that’s why we just took what we hoped was a representative sample.
• What I’m asking is: how good an estimate of the true population mean is our sample mean?
• This is where the Standard Error of the Mean* (or just Standard Error, SE) comes in.
*It’s sometimes called the Standard ESTIMATE of the Error of the mean
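The descriptive measures quoted for the height sample (mean, median, range, interquartile range and SD) can be checked directly from the 24 tabulated heights. The following is a minimal sketch in Python using only the standard `statistics` module; the variable names are illustrative, and the quartile method is the module’s default, which is an assumption because the slides do not say how the percentiles were obtained.

```python
import statistics

# Heights (cm) of the 24 subjects listed in the table, in subject order.
heights = [179, 162, 150, 155, 168, 175, 159, 152,
           156, 157, 161, 164, 165, 159, 161, 163,
           167, 173, 159, 170, 168, 162, 171, 164]

# Measures of central tendency.
mean_height = statistics.mean(heights)
median_height = statistics.median(heights)

# Measures of spread.
height_range = (min(heights), max(heights))
# quantiles(n=4) returns the three quartile cut points (Q1, median, Q3);
# the default interpolation method is an assumption, not the slides' method.
q1, _, q3 = statistics.quantiles(heights, n=4)
# Sample SD (n - 1 denominator), the same convention as Excel's STDEV.
sd_height = statistics.stdev(heights)

print(f"mean   = {mean_height:.1f} cm")
print(f"median = {median_height} cm")
print(f"range  = {height_range[0]}-{height_range[1]} cm")
print(f"IQR    = {q1:.0f}-{q3:.0f} cm")
print(f"SD     = {sd_height:.1f} cm")
```

Rounded to one decimal place, these agree with the values quoted on the slides for the median, range, interquartile range, mean and SD.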
• The mean height of our sample of 25 women was 163.3 cm
• We calculated the Standard Deviation (SD) of the sample to be 7.3 cm (that value, on either side of the mean, that should contain about 2/3 of those measured)
• Standard Error of the mean = SD/√N, i.e. 7.3/√25 = 7.3/5 = 1.46
• So now we can express our results for the height of our sample as 163.3 ± 1.5 (Mean ± SEM)

But what does this really tell us?
• The actual true mean height of the whole population of women has a 68% likelihood of lying within 1.5 cm (i.e. 1 SEM) either side of the mean we found in our sample, and a 95% likelihood of lying within 3 cm (i.e. 2 × SEM) either side of that sample mean. (It’s actually 1.96 × SEM for a reasonably large sample, e.g. roughly 30, and wider for small samples, but let’s keep it simple.)

The concept of the standard error of the mean (SEM), e.g. serum sodium values
• True population mean: 142 mmol/L (SD = 4.0)
• Random sample of 10 normal individuals: sample mean = 142.8 mmol/L (SD of sample = 3.4)
• 1 × SEM = 3.4/√10 = 1.1 mmol/L
• 2 × SEM (~‘95% confidence interval’) = 2.2 mmol/L
That means: ‘There is a 95% chance (19 chances out of 20) that the actual population mean, estimated from our random sample, lies between 140.6 and 145.0 mmol/L.’
[Figure: serum sodium values of the sample against the population distribution. x-axis: mmol/L]

Why does Standard Error depend on population SD and sample size? SE = SD/√N
A: Narrow population spread (i.e. small SD)
B: Wide population spread (i.e. large SD)
Increasing N decreases the SE of the mean, i.e. it increases the accuracy of our estimate of the population mean based on the results of our sample.

Testing significance of differences
[Figure: Samples of Heidelberg men and women, with 95% confidence intervals. x-axis: Height (cm); y-axis: Frequency]
Women: Mean = 163.3, SE = 1.46
Men: Mean = 176.5, SE = 1.43
On a quick, rough check we can see that:
(a) the 95% confidence interval for our estimate of the height of women is 160.3-166.4 cm (approximately mean ± 2SE).
(b) our estimate of the mean height of the men sampled is well outside the 95% confidence interval (range) for the women, so it looks improbable that they are from the same population.

Testing significance of differences: how likely is it that the two random samples came from the same population?
Student’s t-test
[Figure: Composite frequency distribution, created by pooling data from both samples (women and men). Mean: 170.7; SD: 10.0 cm. x-axis: Standard deviations either side of mean]
How likely is it that these two samples (the pink and the blue) were taken from the SAME population? [This is called the NULL HYPOTHESIS.]
Tested for statistical significance of difference: p<0.001, i.e. there is less than 1 chance in 1000 that these two samples came from the same population.

In fact, though, running a Student’s t-test on the two samples of height in the Heidelberg men and women slide gives this error message:
[Figure: error message]

Assumptions to be met before testing significance of differences with PARAMETRIC TESTS*, i.e. tests that use the mathematics of the normal curve distribution
• The combined data should approximate a normal curve distribution. In this instance the male data were skewed (not evenly distributed around the mean) and spread a bit too far out into the tails of the frequency-distribution curve.
• The variances (= SD²) of the groups should not differ significantly from each other.
*Student’s t-test, Paired t-test, Analysis of Variance

An example of data where groups have different variance (spread), and one group is skewed
[Figure: Airway obstruction, controls vs smokers. y-axis: FEV1/FVC; means, medians and the lower limit of the ‘normal’ range are marked]
The equal variance test failed (P<0.05), and the normality test almost failed (P=0.08), so we should not use a parametric test such as the t-test.

So what do we do if we can’t use a parametric test to check for significance of differences?
• Use a non-parametric test
• These tests, instead of using the actual numerical values of the data, put the data from all groups into one ascending order and assign each value a rank number for its place in the combined groups.
• The maths of the test is then done on these ranks
• Examples: Rank sum test, Wilcoxon Rank Test, Mann-Whitney rank test, etc.
  – (the P value for our slide of heights of Heidelberg men and women was calculated using the Wilcoxon test)

Example of how a rank-sum (nonparametric) test is constructed manually*

| Group 1 data | Rank when groups combined | Group 2 data | Rank when groups combined |
|---|---|---|---|
| 12 | 1 | 16 | 5 |
| 13 | 2.5 | 19 | 6.5 |
| 13 | 2.5 | 26 | 8 |
| 15 | 4 | 40 | 10 |
| 19 | 6.5 | 78 | 11 |
| 27 | 9 | 101 | 12 |
| Sum of ranks: | 25.5 | | 52.5 |

Mann-Whitney test: P = 0.026
*In reality, these days you’ll just feed the raw data into a program to do it for you

Tests to examine significance of differences between 3 or more groups
[strictly this should read ... ‘tests to decide how likely are data from 3 or more samples to come from the same population’]
• Parametric tests (tests based on the mathematics of the ‘normal curve’)
  – Analysis of Variance (1-way, 2-way, factorial, etc.)
• Non-parametric tests (rank-sum tests)
  – Kruskal-Wallis test

[Figure: Parallel group trial of placebo versus Normopress treatment for hypertension. x-axis: Normopress dose (0 mg, 0.5 mg, 2.0 mg); y-axis: Mean 24 hour blood pressure (mean ± SE)]
One variable (dose), compared across 3 groups ....
So this gets tested with one-way ANOVA.

This tells us that it is very unlikely the three groups belong to the same population. But which differ from which?

One Way Analysis of Variance (Thursday, June 28, 2012, 3:39:25 PM)
Normality Test: Passed (P = 0.786)
Equal Variance Test: Passed (P = 0.694)

| Group Name | N | Missing | Mean | Std Dev | SEM |
|---|---|---|---|---|---|
| Control | 12 | 0 | 126.500 | 7.243 | 2.091 |
| Normopress 0.5 mg | 12 | 0 | 125.083 | 5.551 | 1.602 |
| Normopress 2.0 mg | 12 | 0 | 114.000 | 5.625 | 1.624 |

| Source of Variation | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Between Groups | 2 | 1124.389 | 562.194 | 14.679 | <0.001 |
| Residual | 33 | 1263.917 | 38.301 | | |
| Total | 35 | 2388.306 | | | |

The differences in the mean values among the treatment groups are greater than would be expected by chance; there is a statistically significant difference (P < 0.001).

All Pairwise Multiple Comparison Procedures (Holm-Sidak method): Overall significance level = 0.05

| Comparison | Diff of Means | t | Unadjusted P | Critical Level | Significant? |
|---|---|---|---|---|---|
| Control vs. Normopress 2.0 mg | 12.500 | 4.947 | <0.001 | 0.017 | Yes |
| Normopress 0.5 mg vs. Normopress 2.0 mg | 11.083 | 4.387 | <0.001 | 0.025 | Yes |
| Control vs. Normopress 0.5 mg | 1.417 | 0.561 | 0.579 | 0.050 | No |

Before and after data: paired tests
• Create a paired analysis of length of hair after going to hairdresser.
• Hypothesis: cutting hair makes it shorter

Two independent groups
Difference between means tested for significance with Student’s t test
[Figure: Hair length (cm) in Group 1 and Group 2. Group means 9.7 ± 1.9* and 8.2 ± 1.7; P = 0.58. *mean ± SE]

Our actual data
Difference between means tested for significance with paired Student’s t test
[Figure: Hair length (cm) of each individual, before (1) and after (2); P = 0.025]
The variation within each individual is much less than between individuals.
The paired t-test examines the mean and standard error of the changes in each individual, and tests how likely it is that the changes are due to chance.

So far we have been dealing with ‘CONTINUOUS VARIABLES’: numbers such as heights, laboratory values, velocities, temperatures etc. that could have any value (e.g. many decimal places) if we could measure accurately enough.
Now we’ll look at ‘DISCONTINUOUS VARIABLES’: whole numbers, most often as proportions or percentages.

Rates and proportions
• In 1969, a home for retired pirates has 93 inmates, 42 of whom have only one leg.
• In 2004, a subsequent survey finds there are now 62 inmates, 6 of whom have only one leg.
Has there been a ‘real’ change (i.e. a change unlikely to be due to chance) in the proportion of one-legged pirates in the home between the two surveys?

| Year | Pirates with 1 leg (%) | Expected with 1 leg | Pirates with 2 legs (%) | Total pirates |
|---|---|---|---|---|
| 1969 | 42 (45.2) | 29 | 51 (54.8) | 93 |
| 2004 | 6 (9.7) | 19 | 56 (90.3) | 62 |
| Totals | 48 | | 107 | 155 |

Chi-square = 20.282 with 1 degree of freedom (P < 0.001), i.e. the likelihood that the difference in proportions of 1-legged inmates between 1969 and 2004 is due to chance is less than 1:1000.

One trap with chi-square tests and small numbers ....
Penicillin treatment for pneumonia

| Treatment | No. dead | No. surviving | Totals |
|---|---|---|---|
| Placebo | 9 | 5 | 14 |
| Penicillin | 1 | 8 | 9 |
| Totals | 10 | 13 | 23 |

Fisher’s exact test: the probability of the observed table is

$$\frac{10!\,\times\,13!\,\times\,14!\,\times\,9!}{9!\,\times\,5!\,\times\,1!\,\times\,8!\,\times\,23!} \approx 0.016$$

and adding the probabilities of every other possible table that is no more likely than the observed one gives the two-sided P = 0.029.

Correlation
• Fairly straightforward concept of how likely two variables are to be related to each other
• Examples:
  – Do children’s heights vary with their age, and if so is the relation direct (i.e. they get bigger as they get older) or inverse (they get smaller as they get older)?
  – Does respiratory rate increase as pulse rate increases during exertion?
• The correlation coefficient, R, tells us how closely the two variables ‘travel together’
• A P value is calculated to tell us how likely the relationship is to be ‘only’ by chance

Examples of regression (correlation) data
[Figure: two scatter plots, MW generated vs Hours of sunlight and Y Data vs X Data; one shows R = 0.965 (P<0.0001), the other R = -0.37 (P = 0.30)]

Some other common statistical analyses
• Life-table analyses
  – Observing and comparing events developing over time; allows us to compensate for dropouts at varying times during the study
• Multiple linear and multivariate regression analyses
  – Looking for relationships between multiple variables

Life table analyses
Advanced lung cancer. Trial compared motesanib + 2 conventional chemo drugs ... with placebo plus the two other drugs
Scagliotti et al. J Clin Oncol 2012; 30: 2829

Multiple regression analysis
• Examines the possible effect of more than one variable on the thing we are measuring (the ‘dependent variable’)
Perret JL et al. The Interplay between the Effects of Lifetime Asthma, Smoking, and Atopy on Fixed Airflow Obstruction in Middle Age. Am J Respir Crit Care Med 2013; 187: 42-8.
...from Institute of Breathing and Sleep (Austin), University of Melbourne, Monash University, Alfred Hospital, and others
[Figure: Perret et al. 2013]

Sample Size Calculations
How many patients, subjects, mice etc. do we need to study to reliably* find the answer to our research question?
*We can never be certain to do this, but should aim to be considerably more likely than not to find out the truth about the question

Sample size calculations (1)
First we need to grapple with two types of ‘error’ in interpreting differences between means and/or medians of groups:
• Type 1 (or α) error: ... that we think the difference is ‘real’ (data are from 2 or more different populations) when it is not
  – This is what we’ve dealt with so far, and the P values assess how likely the differences are to be due to chance
• Type 2 (or β) error: ... that our experiment, and the stats test we’ll apply to the results, will FAIL to show a significant difference when there REALLY IS ONE

Sample size calculations (2)
• If we end up with a Type 2 (β) error, it will be because our sample size(s) was too small to persuade us that the actual difference between means was unlikely to be due to chance (i.e. P<0.05)
• The smaller the real difference between population means, the larger the sample size needs to be to detect it as being statistically significant

Sample size calculations (3)
How do we go about it? Most of the good statistical packages have a function for calculating sample sizes.
1. Decide what statistical test will be appropriate to apply to the primary endpoint when the study completes
2. Estimate the likely size of the difference between groups, if the hypothesis is correct
3. Decide how confident you want to be that the difference(s) you observe is unlikely to be due to chance
4. Decide how much you want to risk missing a true difference (i.e. what power you want the study to have)
Note: We really should have done a sample size calculation before we started our experiments, but for this course we needed to deal with the basics of stats tests first

Sample size calculations (4): A worked example (i)
• We want to see whether drug X will reduce the incidence of peptic ulcer in patients taking aspirin for 6 months
  1. Decide what statistical test: chi-square, to compare differences in frequencies in 2 groups
• We expect a 10% incidence of ulcers in the controls
• We hypothesize that a 50% reduction (i.e. to 5%) in those treated with X would be clinically worthwhile
  2. We’ve now decided the size of the difference between groups we are interested to look for
• We decide to be happy with a likelihood of only 1:20 that the difference observed is due to chance
  3. That is to say, we want to set P = 0.05 as the level of α (alpha) risk (the risk of concluding the difference is real when it’s actually due to chance)
• We would like to have at least an 80% chance of finding that 50% reduction (a 20% chance of missing it, i.e. of β risk)
  4. That is, set the Power of the study (1-β) to ≥80% to detect such a difference (if it exists)

Summary of sample size calculation setting:
• Estimated ulcer incidence in controls = 10%
• Estimated incidence in group receiving drug X = 5%
• For P(α) = 0.05, and Power (1-β) ≥80%
• Data tested by chi-square
Calculated required sample size = 449 in each group

Sample size calculations: A worked example (ii)
• We hypothesize that removing the spleen in rats will result in an increase in haemoglobin (Hb) from the normal mean of 14.0 g/dL to 15.0 g/dL
• We already know that the SD (Standard Deviation) of Hb values in normal rats is 1.2 g/dL (if we don’t know we’ll have to guess!)
• Testing will be with Student’s t test
• We’ll set α (likelihood that the observed difference is due to chance) at 0.05
• We want a power (1-β) of at least 80% to minimize the risk of missing such a difference if it’s real*
*More correctly, we should say if the samples really are from different populations

Summary of sample size calculation setting:
• Control mean = 14.0 g/dL; Operated mean = 15.0 g/dL
• Estimated SD in both groups = 1.2 g/dL
• For P(α) = 0.05, and Power (1-β) ≥80%
• Data tested by Student t
Calculated required sample size = 24 in each group

Summary of the most common statistical tests in biomedicine (1. Parametric tests)

| Test | Purpose | Comments |
|---|---|---|
| Student t-test | Compare 2 groups of ‘continuous’ data* | Only use if data are ‘normally distributed’ and variances of groups similar |
| Paired Student t-test | Compare before-after data on the same individuals | The differences (between before and after) need to be ‘normally distributed’. More powerful than ‘unpaired’ t-test because there is less variability within individuals than between them |
| 1-way analysis of variance | Compare 3 or more groups of continuous data | Same requirements as for Student t-test |
| 2-way analysis of variance | Compare 3 or more groups, stratified for at least 2 variables | As above |

*For measured values, not numbers of events (frequencies)

Summary of the most common statistical tests in biomedicine (2. Non-parametric tests)

| Test | Purpose | Comments |
|---|---|---|
| Rank-sum or Mann-Whitney test | Compare 2 groups of ‘continuous’ data, using their ranks rather than actual values | Use if t-test invalid because data not ‘normally distributed’ and/or variances of groups significantly different |
| Signed rank test | Rank test to use instead of paired t-test | Use instead of paired t-test if the differences (between before and after) are not ‘normally distributed’ |
| Non-parametric analysis of variance | Compare 3 or more groups of continuous data | As above (it’s the generalised form of the Mann-Whitney test when there are >2 groups) |

Some tools for statistical analyses
• Excel spreadsheets, e.g. if column A contains data in the 8 cells A3 through A10:
  – Mean: =average(a3:a10)
  – SD: =stdev(a3:a10)
  – SEM: =(stdev(a3:a10))/sqrt(8)
• Common statistical packages for significance testing
  – Sigmaplot
  – SPSS (licence can be downloaded from Unimelb)
  – STATA

Other resources
• Armitage P, Berry G, Matthews JNS. Statistical methods in medical research. 4th edn. Oxford: Blackwell Science, 2002.
• Dawson B, Trapp R. Basic and clinical biostatistics. 4th edn. New York: McGraw Hill, 2004. (Electronic book in Unimelb electronic collection)
• Rumsey DJ. Statistics for Dummies. 2nd edn. Oxford: Wiley & Sons, 2011.
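
Returning to worked example (ii) of the sample size calculations (control mean 14.0, operated mean 15.0, SD 1.2, α = 0.05, power 80%): the sketch below shows roughly how such a figure can be obtained without a statistics package. It uses the common normal-approximation formula for comparing two means, n per group = 2 × ((z for α/2 + z for power) × SD / difference)², which is an assumption here and not necessarily the method behind the slides. The function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Approximate subjects per group for a two-sided comparison of two means.

    Normal approximation only (an assumption); packages that base the
    calculation on the t distribution give slightly larger numbers.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided alpha risk
    z_beta = NormalDist().inv_cdf(power)           # power = 1 - beta
    return math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


# Worked example (ii): detect a rise in mean Hb from 14.0 to 15.0 with SD 1.2.
n_example_ii = n_per_group(delta=15.0 - 14.0, sd=1.2)
print(n_example_ii)
```

The approximation gives 23 per group, close to the 24 per group quoted in the worked example; the small gap is what one would expect from a package that uses the t distribution rather than the normal curve.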