CrossValidation_v2


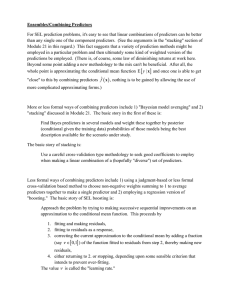

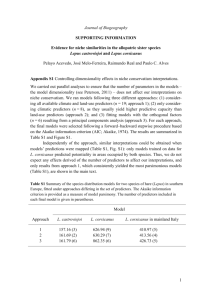

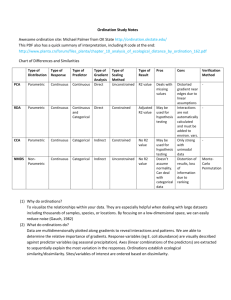

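Manu Chandran

Outline
- Background and motivation
- Overview of techniques
  - Cross-validation
  - Bootstrap method
- Setting up the problem
- Comparing AIC, BIC, cross-validation and bootstrap
  - For a small data set
  - For a large data set
  - Iris data set
  - Ellipse data set
- Finding the number of relevant parameters: cancer data set (from class text)
- Conclusion

Background and Motivation
- Model selection
- Parameters to change

Overview of error measures and when each is used
- AIC: low data count; strives for less complexity
- BIC: high data count; less complexity
- Cross-validation
- Bootstrap methods

Motivation for cross-validation
- Small data sets
- Enables reuse of data

Basic idea of cross-validation
K-fold cross-validation, with K = 5 in this example.
Simple enough! What more is there?

Points to consider
- Why is it important? Finding the test error.
- Selection of K: what K is good enough for a given data set? Why it matters: bias and variance.
- Selection of features in the "low data, high feature" problem: important do's and don'ts in feature selection when using cross-validation. This finds application in bioinformatics, where the number of parameters is more often than not far larger than the number of data points.

Overview of error terms (recap from last class)
- In-sample error: $\mathrm{Err}_{\mathrm{in}}$
- Expected error: $\mathrm{Err}$
- Training error: $\overline{\mathrm{err}}$
- True error: $\mathrm{Err}_{\mathcal{T}}$ (the test error conditional on the training set $\mathcal{T}$)

AIC and BIC attempt to estimate $\mathrm{Err}_{\mathrm{in}}$. Cross-validation attempts to estimate the average (expected) error $\mathrm{Err}$.

Selection of K
- K = N (N-fold CV, or leave-one-out): approximately unbiased, but high variance
- K = 5 (5-fold CV): lower variance, but higher bias

Subset size p means the best subset of p linear predictors.

Selection of features using CV
This often finds application in bioinformatics. One way of selecting predictors:
- Screen the predictors that show high correlation with the class labels.
- Build a multivariate classifier using these predictors.
- Use CV to find the tuning parameter.
- Estimate the prediction error of the final model.

The problem with this method: the CV is done after feature selection. This means the test samples had already influenced which predictors were selected, so the CV error estimate is optimistic.

Right way to do cross-validation
- Divide the samples into K cross-validation folds at random, say K = 5.
- Find the predictors using only the 4 training folds.
- Using these predictors, tune the classifier on the same 4 folds.
- Test on the left-out 5th fold.
- Repeat so that each fold is left out once.

Correlation of predictors with outcome

Bootstrapping
Explanation of bootstrapping.

Probability of having the ith sample in a bootstrap sample:

$$\Pr\{i \in \text{bootstrap sample}\} = 1 - \left(1 - \frac{1}{N}\right)^{N} \approx 1 - e^{-1} = 0.632$$

For large N, the number of times sample i is drawn follows approximately a Poisson distribution with $\lambda = 1$, so sample i is absent with probability $e^{-1} \approx 0.368$.

Consider a two-class problem where the labels are independent of the features, so the true error rate is 0.5, and a 1-nearest-neighbour classifier fit to each bootstrap sample and evaluated on the original training set. A sample that appears in the bootstrap sample is its own nearest neighbour and is classified correctly; only when it is absent does it incur error 0.5. So the expected bootstrap error estimate is 0.5 × 0.368 = 0.184, far below 0.5.

To avoid this, the leave-one-out bootstrap is suggested: each sample is predicted only by models fit to bootstrap samples that do not contain it.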
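The wrong way and the right way of combining feature screening with cross-validation can be compared in a small simulation. This is a minimal NumPy sketch, not the author's code: the sample size (N = 50), the number of features (p = 5000), keeping the 100 most correlated features, and the nearest-centroid classifier are illustrative assumptions, and all function names are hypothetical. The nearest-centroid classifier has no tuning parameter, so only the screening step moves inside the folds. Labels are drawn independently of the features, so an honest error estimate should be near 0.5.

```python
import numpy as np


def top_correlated(X, y, n_keep):
    """Indices of the n_keep columns of X with the largest |correlation| with y."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    # Pearson correlation of every column with y, computed in one pass.
    corr = (Xc.T @ yc) / np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    return np.argsort(-np.abs(corr))[:n_keep]


def nearest_centroid_predict(X_train, y_train, X_test):
    """Predict the class (0 or 1) whose training centroid is closest."""
    c0 = X_train[y_train == 0].mean(axis=0)
    c1 = X_train[y_train == 1].mean(axis=0)
    d0 = ((X_test - c0) ** 2).sum(axis=1)
    d1 = ((X_test - c1) ** 2).sum(axis=1)
    return (d1 < d0).astype(int)


def kfold_error(X, y, k, n_keep, rng, screen_inside_folds):
    """K-fold CV error of 'screen top features, then nearest centroid'."""
    n = len(y)
    folds = np.array_split(rng.permutation(n), k)
    # WRONG way: screen once on ALL samples, so held-out folds influence the choice.
    keep_all = None if screen_inside_folds else top_correlated(X, y, n_keep)
    errors = 0
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(n), test_idx)
        if screen_inside_folds:
            # RIGHT way: screen using the K-1 training folds only.
            keep = top_correlated(X[train_idx], y[train_idx], n_keep)
        else:
            keep = keep_all
        X_tr = X[np.ix_(train_idx, keep)]
        X_te = X[np.ix_(test_idx, keep)]
        pred = nearest_centroid_predict(X_tr, y[train_idx], X_te)
        errors += int(np.sum(pred != y[test_idx]))
    return errors / n


def simulate_cv(seed, n=50, p=5000, n_keep=100, k=5):
    """Return (wrong-way, right-way) CV error on data with pure-noise labels."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, p))
    # Labels independent of X: the true error rate of any classifier is 0.5.
    y = rng.permutation(np.repeat([0, 1], n // 2))
    wrong = kfold_error(X, y, k, n_keep, rng, screen_inside_folds=False)
    right = kfold_error(X, y, k, n_keep, rng, screen_inside_folds=True)
    return wrong, right


wrong, right = simulate_cv(seed=0)
print(f"screen before CV (wrong): {wrong:.2f}")
print(f"screen inside CV (right): {right:.2f}")
```

Screening on all samples before CV typically reports an error far below 0.5, while screening inside each fold stays near 0.5; the exact numbers depend on the seed.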
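The 0.632 inclusion probability and the Poisson approximation with $\lambda = 1$ can be checked numerically. A minimal sketch; the helper names and the simulation sizes (N = 100, 10,000 bootstrap samples) are illustrative choices, not from the slides.

```python
import math

import numpy as np


def prob_included(n):
    """Exact P(a given observation appears in a bootstrap sample of size n)."""
    return 1.0 - (1.0 - 1.0 / n) ** n


def inclusion_counts(n, n_boot, rng):
    """How many times observation 0 is drawn in each of n_boot bootstrap samples."""
    draws = rng.integers(0, n, size=(n_boot, n))  # sampling with replacement
    return (draws == 0).sum(axis=1)


def poisson_pmf(k, lam=1.0):
    """Poisson probability mass function, the large-n limit of the counts above."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


for n in (10, 100, 1000):
    print(f"N = {n:4d}: P(included) = {prob_included(n):.4f}")
print(f"limit 1 - 1/e = {1 - math.exp(-1):.4f}")

counts = inclusion_counts(100, 10_000, np.random.default_rng(0))
for k in range(4):
    print(f"drawn {k} times: simulated {np.mean(counts == k):.3f}, "
          f"Poisson(1) {poisson_pmf(k):.3f}")
```

The count for a single observation is Binomial(N, 1/N), which approaches Poisson(1) as N grows; the "drawn 0 times" row is the 0.368 used in the error calculation.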
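The 0.5 × 0.368 = 0.184 figure matches the standard example of a 1-nearest-neighbour classifier on a two-class problem whose labels are independent of the features; assuming that is the setting the slides refer to, the sketch below compares the naive bootstrap estimate with the leave-one-out bootstrap. Assumptions that are not from the slides: two-dimensional Gaussian features, N = 200, B = 200 bootstrap samples, 0-1 loss, and the hypothetical function names.

```python
import numpy as np


def one_nn_predict(X_train, y_train, X_test):
    """1-nearest-neighbour prediction using squared Euclidean distance."""
    d = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=2)
    return y_train[np.argmin(d, axis=1)]


def bootstrap_error_estimates(X, y, n_boot, rng):
    """Return (naive bootstrap error, leave-one-out bootstrap error), 0-1 loss."""
    n = len(y)
    loss_all = np.zeros(n)  # loss summed over every bootstrap fit
    loss_oob = np.zeros(n)  # loss summed over fits whose sample excludes i
    n_oob = np.zeros(n)     # number of bootstrap samples that exclude i
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # bootstrap sample, with replacement
        loss = (one_nn_predict(X[idx], y[idx], X) != y).astype(float)
        loss_all += loss
        out = np.ones(n, dtype=bool)
        out[idx] = False  # True where observation i was not drawn
        loss_oob[out] += loss[out]
        n_oob[out] += 1
    naive = loss_all.mean() / n_boot
    seen = n_oob > 0  # drop i if every bootstrap sample happened to contain it
    loo = np.mean(loss_oob[seen] / n_oob[seen])
    return naive, loo


def simulate_bootstrap(seed, n=200, n_boot=200):
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, 2))
    # Labels independent of X, so the true error rate is 0.5.
    y = rng.integers(0, 2, size=n)
    return bootstrap_error_estimates(X, y, n_boot, rng)


naive, loo = simulate_bootstrap(seed=0)
print(f"naive bootstrap: {naive:.3f}, leave-one-out bootstrap: {loo:.3f}")
```

The naive estimate lands near 0.18 because in-bag points are their own nearest neighbours, while the leave-one-out bootstrap only scores each point on fits that never saw it and so stays near the true 0.5.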