Bayes Inference for Surveys - American Statistical Association

The Calibrated Bayes Approach to Sample Survey Inference

Roderick Little

Department of Biostatistics, University of Michigan

Associate Director for Research & Methodology, Bureau of Census

Learning Objectives

1. Understand basic features of alternative modes of inference for sample survey data.

2. Understand the mechanics of Bayesian inference for finite population quantities under simple random sampling.

3. Understand the role of the sampling mechanism in sample surveys and how it is incorporated in a Calibrated Bayesian analysis.

4. More specifically, understand how survey design features, such as weighting, stratification, post-stratification and clustering, enter into a Bayesian analysis of sample survey data.

5. Introduction to Bayesian tools for computing posterior distributions of finite population quantities.

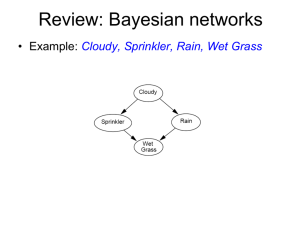

Models for complex surveys 1: introduction


Acknowledgement and Disclaimer

• These slides are based in part on a short course on Bayesian methods in surveys presented by Dr. Trivellore Raghunathan and me at the 2010 Joint Statistical Meetings.

• While taking responsibility for errors, I’d like to acknowledge Dr. Raghunathan’s major contributions to this material.

• Opinions are my own and not the official position of the U.S. Census Bureau.


Module 1: Introduction

• Distinguishing features of survey sample inference

• Alternative modes of survey inference

– Design-based, superpopulation models, Bayes

• Calibrated Bayes


Distinctive features of survey inference

1. Primary focus on descriptive finite population quantities, like overall or subgroup means or totals

– Bayes, which naturally concerns predictive distributions, is particularly suited to inference about such quantities, since they require predicting the values of variables for non-sampled items

– This finite population perspective is useful even for analytic model parameters:

$\theta$ = model parameter (meaningful only in context of the model)

$\tilde{\theta}(Y)$ = "estimate" of $\theta$ from fitting model to whole population $Y$ (a finite population quantity, exists regardless of validity of model)

A good estimate of $\theta$ should be a good estimate of $\tilde{\theta}$ (if not, then what's being estimated?)


Distinctive features of survey inference

2. Analysis needs to account for "complex" sampling

design features such as stratification, differential

probabilities of selection, multistage sampling.

• Samplers reject theoretical arguments suggesting such

design features can be ignored if the model is correctly

specified.

• Models are always misspecified, and model answers are suspect even when model misspecification is not easily detected by model checks (Kish & Frankel 1974, Holt, Smith & Winter 1980, Hansen, Madow & Tepping 1983, Pfeffermann & Holmes 1985).

• Design features like clustering and stratification can

and should be explicitly incorporated in the model to

avoid sensitivity of inference to model misspecification.


Distinctive features of survey inference

3. A production environment that precludes detailed

modeling.

• Careful modeling is often perceived as "too much

work" in a production environment (e.g. Efron 1986).

• Some attention to model fit is needed to do any good statistics.

• "Off-the-shelf" Bayesian models can be developed that incorporate survey sample design features, and for a given problem the computation of the posterior distribution is prescriptive, via Bayes Theorem.

• This aspect would be aided by a Bayesian software

package focused on survey applications.


Distinctive features of survey inference

4. Antipathy towards methods/models that involve

strong subjective elements or assumptions.

• Government agencies need to be viewed as objective

and shielded from policy biases.

• Addressed by using models that make relatively weak

assumptions, and noninformative priors that are

dominated by the likelihood.

• The latter yields Bayesian inferences that are often

similar to superpopulation modeling, with the usual

differences of interpretation of probability statements.

• Bayes provides superior inference in small samples

(e.g. small area estimation)


Distinctive features of survey inference

5. Concern about repeated sampling (frequentist)

properties of the inference.

• Calibrated Bayes: models should be chosen to have

good frequentist properties

• This requires incorporating design features in the model

(Little 2004, 2006).


Approaches to Survey Inference

• Design-based (Randomization) inference

• Superpopulation Modeling

– Specifies model conditional on fixed parameters

– Frequentist inference based on repeated samples from

superpopulation and finite population (hybrid approach)

• Bayesian modeling

– Specifies full probability model (prior distributions on

fixed parameters)

– Bayesian inference based on posterior distribution of

finite population quantities

– We argue that this is the most satisfying approach


Design-Based Survey Inference

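$Z = (Z_1, \ldots, Z_N)$ = design variables, known for population

$I = (I_1, \ldots, I_N)$ = sample inclusion indicators, with

$$I_i = \begin{cases} 1, & \text{unit included in sample} \\ 0, & \text{otherwise} \end{cases}$$

$Y = (Y_1, \ldots, Y_N)$ = population values, recorded only for sample

$Y_{inc} = Y_{inc}(I)$ = part of $Y$ included in the survey

Note: here $I$ is random variable, $(Y, Z)$ are fixed

$Q = Q(Y, Z)$ = target finite population quantity

$\hat{q} = \hat{q}(I, Y_{inc}, Z)$ = sample estimate of $Q$

$\hat{V}(I, Y_{inc}, Z)$ = sample estimate of $V$ (the sampling variance of $\hat{q}$)

$\left(\hat{q} - 1.96\sqrt{\hat{V}},\; \hat{q} + 1.96\sqrt{\hat{V}}\right)$ = 95% confidence interval for $Q$

[Figure: schematic of the population data with columns I, Z, Y; rows with I = 1 hold the observed Y_inc, rows with I = 0 hold the unobserved Y_exc.]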


Random Sampling

• Random (probability) sampling characterized by:

– Every possible sample has known chance of being selected

– Every unit in the population has a non-zero chance of being selected

– In particular, for simple random sampling without replacement: “All possible samples of size n have same chance of being selected”

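$Z = \{1, \ldots, N\}$ = set of units in the sample frame

$$\Pr(I \mid Z) = \begin{cases} 1 \Big/ \binom{N}{n}, & \sum_{i=1}^{N} I_i = n \\ 0, & \text{otherwise} \end{cases} \qquad \binom{N}{n} = \frac{N!}{n!\,(N-n)!}$$

$$E(I_i \mid Z) = \Pr(I_i = 1 \mid Z) = n/N$$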


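Example 1: Mean for Simple Random Sample

$$Q = \bar{Y} = \frac{1}{N} \sum_{i=1}^{N} y_i, \text{ population mean}$$

$$\hat{q}(I) = \bar{y} = \sum_{i=1}^{N} I_i y_i / n, \text{ the sample mean}$$

Here $I_i$ is the random variable; $y_i$ is a fixed quantity, not modeled.

Unbiased for $\bar{Y}$:

$$E_I\left(\sum_{i=1}^{N} I_i y_i / n\right) = \sum_{i=1}^{N} E_I(I_i)\, y_i / n = \sum_{i=1}^{N} (n/N)\, y_i / n = \bar{Y}$$

$$\mathrm{Var}_I(\bar{y}) = V = (1 - n/N)\, S^2 / n, \qquad S^2 = \frac{1}{N-1} \sum_{i=1}^{N} (y_i - \bar{Y})^2$$

$(1 - n/N)$ = finite population correction

$$\hat{V} = (1 - n/N)\, s^2 / n, \qquad s^2 = \text{sample variance} = \frac{1}{n-1} \sum_{i=1}^{N} I_i (y_i - \bar{y})^2$$

95% confidence interval for $\bar{Y}$ = $\left(\bar{y} - 1.96\sqrt{\hat{V}},\; \bar{y} + 1.96\sqrt{\hat{V}}\right)$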
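The design-based calculation for the sample mean can be sketched in a few lines of Python. This is only an illustration: the function name `srs_mean_ci` and the simulated population are assumptions made for the example, not part of the course material.

```python
import math
import random

def srs_mean_ci(sample, N, z=1.96):
    """Design-based estimate of a population mean from a simple random sample.

    Returns the sample mean, the variance estimate with the finite
    population correction (1 - n/N), and the normal-theory interval.
    """
    n = len(sample)
    ybar = sum(sample) / n
    s2 = sum((y - ybar) ** 2 for y in sample) / (n - 1)  # sample variance
    v_hat = (1 - n / N) * s2 / n                          # fpc * s^2 / n
    half = z * math.sqrt(v_hat)
    return ybar, v_hat, (ybar - half, ybar + half)

# Hypothetical population, used only to show the call.
rng = random.Random(1)
population = [rng.gauss(50, 10) for _ in range(1000)]
sample = rng.sample(population, 100)  # SRS without replacement
ybar, v_hat, ci = srs_mean_ci(sample, N=len(population))
```

Note that only the inclusion indicators are random here; the population values are treated as fixed, which is why no model for `population` enters the calculation.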


Example 2: Horvitz-Thompson estimator

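$Q(Y) = T = Y_1 + \ldots + Y_N$

$\pi_i = E(I_i \mid Y)$ = inclusion probability $> 0$

$$\hat{t}_{HT} = \sum_{i=1}^{N} I_i Y_i / \pi_i, \qquad E_I(\hat{t}_{HT}) = \sum_{i=1}^{N} E(I_i)\, Y_i / \pi_i = \sum_{i=1}^{N} \pi_i Y_i / \pi_i = T$$

$\hat{v}_{HT}$ = variance estimate, depends on sample design

$\left(\hat{t}_{HT} - 1.96\sqrt{\hat{v}_{HT}},\; \hat{t}_{HT} + 1.96\sqrt{\hat{v}_{HT}}\right)$ = 95% CI for $T$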

• Pro: unbiased under minimal assumptions

• Cons:

– variance estimator problematic for some designs (e.g.

systematic sampling)

– can have poor confidence coverage and inefficiency
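The unbiasedness of the Horvitz-Thompson estimator can be checked exactly for a tiny population by enumerating every possible sample. The sketch below assumes Poisson sampling (each unit included independently with its own probability), which is a choice made for this illustration only; the values in `y` and `pi` are hypothetical.

```python
from itertools import product

def ht_total(y, pi, included):
    """Horvitz-Thompson estimate of the total: sum of y_i / pi_i over sampled units."""
    return sum(yi / p for yi, p, inc in zip(y, pi, included) if inc)

def expected_ht_total(y, pi):
    """Exact design expectation under Poisson sampling (independent inclusions)."""
    expectation = 0.0
    for included in product([0, 1], repeat=len(y)):
        prob = 1.0
        for inc, p in zip(included, pi):
            prob *= p if inc else (1 - p)
        expectation += prob * ht_total(y, pi, included)
    return expectation

# Hypothetical population values and inclusion probabilities.
y = [3.0, 8.0, 1.0, 12.0]
pi = [0.2, 0.5, 0.1, 0.9]
```

Averaged over all 16 possible samples, `expected_ht_total(y, pi)` reproduces the true total `sum(y)`, although individual samples can give estimates far from it, which is the inefficiency noted in the list of cons.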


Role of Models in Classical Approach

• Inference not based on model, but models are often

used to motivate the choice of estimator. E.g.:

– Regression model → regression estimator

– Ratio model → ratio estimator

– Generalized Regression estimation: model estimates adjusted to protect against misspecification, e.g. HT estimation applied to residuals from the regression estimator (Cassel, Särndal and Wretman book).

• Estimates of standard error are then based on the

randomization distribution

• This approach is design-based, model-assisted


Model-Based Approaches

• In our approach models are used as the basis for the entire

inference: estimator, standard error, interval estimation

• This approach is more unified, but models need to be

carefully tailored to features of the sample design such as

stratification, clustering.

• One might call this model-based, design-assisted

• Two variants:

– Superpopulation Modeling

– Bayesian (full probability) modeling

• Common theme is “Infer” or “predict” about non-sampled

portion of the population conditional on the sample and

model


Superpopulation Modeling

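• Model distribution M:

$Y \sim f(Y \mid Z, \theta)$, $Z$ = design variables, $\theta$ = fixed parameters

• Predict non-sampled values $\hat{Y}_{exc}$:

$\hat{y}_i = E(y_i \mid z_i, \hat{\theta})$, $\hat{\theta}$ = model estimate of $\theta$

$$\tilde{q} = Q(\tilde{Y}), \qquad \tilde{y}_i = \begin{cases} y_i, & \text{if unit sampled;} \\ \hat{y}_i, & \text{if unit not sampled} \end{cases}$$

$\hat{v} = \widehat{\mathrm{mse}}(\tilde{q})$, over distribution of $I$ and $M$

$\left(\tilde{q} - 1.96\sqrt{\hat{v}},\; \tilde{q} + 1.96\sqrt{\hat{v}}\right)$ = 95% CI for $Q$

[Figure: schematic of the population data with columns I, Z, Y; rows with I = 1 hold the observed Y_inc, rows with I = 0 hold the predicted values Ŷ_exc.]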

In the modeling approach, prediction of nonsampled values

is central

In the design-based approach, weighting is central: “sample

represents … units in the population”


Bayesian Modeling

• Bayesian model adds a prior distribution for the parameters:

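$(Y, \theta) \sim \pi(\theta \mid Z)\, f(Y \mid Z, \theta)$, $\pi(\theta \mid Z)$ = prior distribution

Inference about $\theta$ is based on posterior distribution from Bayes Theorem:

$$p(\theta \mid Z, Y_{inc}) \propto \pi(\theta \mid Z)\, L(\theta \mid Z, Y_{inc}), \quad L = \text{likelihood}$$

Inference about finite population quantity $Q(Y)$ is based on $p(Q(Y) \mid Y_{inc})$ = posterior predictive distribution of $Q$ given sample values $Y_{inc}$:

$$p(Q(Y) \mid Z, Y_{inc}) = \int p(Q(Y) \mid Z, Y_{inc}, \theta)\, p(\theta \mid Z, Y_{inc})\, d\theta$$

(Integrates out nuisance parameters $\theta$)

[Figure: schematic of the population data with columns I, Z, Y; rows with I = 1 hold the observed Y_inc, rows with I = 0 hold the predicted values Ŷ_exc.]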

In the super-population modeling approach, parameters are

considered fixed and estimated

In the Bayesian approach, parameters are random and integrated out

of posterior distribution – leads to better small-sample inference


Bayesian Point Estimates

• Point estimate is often used as a single summary

“best” value for the unknown Q

• Some choices are the mean, mode or the median of

the posterior distribution of Q

• For symmetrical distributions an intuitive choice is

the center of symmetry

• For asymmetrical distributions the choice is not

clear. It depends upon the “loss” function.

Models for complex surveys: simple random sampling


Bayesian Interval Estimation

• Bayesian analog of confidence interval is posterior

probability or credibility interval

– Large sample: posterior mean +/- z * posterior se

– Interval based on lower and upper percentiles of

posterior distribution – 2.5% to 97.5% for 95% interval

– Optimal: fix the coverage rate $1-\alpha$ in advance and determine the highest posterior density region C to include most likely values of Q totaling $1-\alpha$ posterior probability


Bayes for population quantities Q

• Inferences about Q are conveniently obtained by first conditioning on $\theta$ and then averaging over posterior of $\theta$. In particular, the posterior mean is:

$$E(Q \mid Y_{inc}) = E\left[ E(Q \mid Y_{inc}, \theta) \mid Y_{inc} \right]$$

and the posterior variance is:

$$\mathrm{Var}(Q \mid Y_{inc}) = E\left[ \mathrm{Var}(Q \mid Y_{inc}, \theta) \mid Y_{inc} \right] + \mathrm{Var}\left[ E(Q \mid Y_{inc}, \theta) \mid Y_{inc} \right]$$

• Value of this technique will become clear in applications

• Finite population corrections are automatically obtained as differences in the posterior variances of $Q$ and $\theta$

• Inferences based on full posterior distribution useful in

small samples (e.g. provides “t corrections”)


Simulating Draws from Posterior Distribution

• For many problems, particularly with high-dimensional $\theta$, it is often easier to draw values from the posterior distribution, and base inferences on these draws

• For example, if $(\theta_1^{(d)} : d = 1, \ldots, D)$ is a set of draws from the posterior distribution for a scalar parameter $\theta_1$, then

$\bar{\theta}_1 = D^{-1} \sum_{d=1}^{D} \theta_1^{(d)}$ approximates posterior mean

$s_\theta^2 = (D-1)^{-1} \sum_{d=1}^{D} (\theta_1^{(d)} - \bar{\theta}_1)^2$ approximates posterior variance

$(\bar{\theta}_1 \pm 1.96\, s_\theta)$ or 2.5th to 97.5th percentiles of draws approximates 95% posterior credibility interval for $\theta_1$

Given a draw $\theta^{(d)}$ of $\theta$, usually easy to draw non-sampled values of data, and hence finite population quantities
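As a rough illustration of these summaries, the following Python sketch turns a list of posterior draws of a scalar parameter into a posterior mean, a posterior variance, and the two kinds of 95% interval. The function name `summarize_draws` and the simple order-statistic percentile rule are assumptions made for the example.

```python
import statistics

def summarize_draws(draws):
    """Posterior mean, variance and two 95% intervals from a list of draws."""
    d = sorted(draws)
    D = len(d)
    mean = statistics.fmean(d)
    var = statistics.variance(d)  # divisor D - 1
    sd = var ** 0.5
    normal_interval = (mean - 1.96 * sd, mean + 1.96 * sd)
    # Simple order-statistic percentiles, without interpolation.
    percentile_interval = (d[int(0.025 * (D - 1))], d[int(0.975 * (D - 1))])
    return mean, var, normal_interval, percentile_interval
```

The percentile interval does not rely on the posterior being roughly normal, so it is usually the safer summary when the draws are visibly skewed.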


Calibrated Bayes

• Any approach (including Bayes) has properties in

repeated sampling

• We can study the properties of Bayes credibility

intervals in repeated sampling – do 95%

credibility intervals have 95% coverage?

• A Calibrated Bayes approach yields credibility

intervals with close to nominal coverage

• Frequentist methods are useful for forming and

assessing model, but the inference remains

Bayesian

• See Little (2004) for more discussion


Summary of approaches

• Design-based:

– Avoids need for models for survey outcomes

– Robust approach for large probability samples

– Less suited to small samples – inference basically

assumes large samples

– Models needed for nonresponse, response errors, small

areas – this leads to “inferential schizophrenia”


Summary of approaches

• Superpopulation/Bayes models:

– Familiar: similar to modeling approaches to statistics in

general

– Models needs to reflect the survey design

– Unified approach for large and small samples,

nonresponse and response errors.

– Frequentist superpopulation modeling has the limitation

that uncertainty in predicting parameters is not reflected

in prediction inferences:

– Bayes propagates uncertainty about parameters, making

it preferable for small samples – but needs specification

of a prior distribution


Module 2: Bayesian models for

simple random samples

2.1 Continuous outcome: normal model

2.2 Difference of two means

2.3 Regression models

2.4 Binary outcome: beta-binomial model

2.5 Nonparametric Bayes


Models for simple random samples

• Consider Bayesian predictive inference for population

quantities

• Focus here on the population mean, but posterior distributions of more complex finite population quantities Q can also be derived

• Key is to compute the posterior distribution of Q

conditional on the data and model

– Summarize the posterior distribution using posterior mean,

variance, HPD interval etc

• Modern Bayesian analysis uses simulation techniques to study the posterior distribution

• Here consider simple random sampling: Module 3

considers complex design features


Diffuse priors

• In much practical analysis the prior information is diffuse,

and the likelihood dominates the prior information.

• Jeffreys (1961) developed “noninformative priors” based

on the notion of very little prior information relative to the

information provided by the data.

• Jeffreys derived the noninformative prior requiring

invariance under parameter transformation.

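• In general,

$$\pi(\theta) \propto |J(\theta)|^{1/2}, \quad \text{where} \quad J(\theta) = -E\left[ \frac{\partial^2 \log f(y \mid \theta)}{\partial \theta\, \partial \theta^{T}} \right]$$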


Examples of noninformative priors

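Normal: $\pi(\mu, \sigma^2) \propto \sigma^{-2}$

Binomial: $\pi(\theta) \propto \theta^{-1/2} (1 - \theta)^{-1/2}$

Poisson: $\pi(\lambda) \propto \lambda^{-1/2}$

Normal regression with slopes $\beta$: $\pi(\beta, \sigma^2) \propto \sigma^{-2}$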

In simple cases these noninformative

priors result in numerically same answers

as standard frequentist procedures


2.1 Normal simple random sample

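$Y_i \sim \text{iid } N(\mu, \sigma^2); \; i = 1, 2, \ldots, N$

$\pi(\mu, \sigma^2) \propto \sigma^{-2}$

Simple random sample results in $Y_{inc} = (y_1, \ldots, y_n)$

$$Q = \bar{Y} = \frac{n \bar{y} + (N - n) \bar{Y}_{exc}}{N} = f \bar{y} + (1 - f)\, \bar{Y}_{exc}, \qquad f = n/N$$

Derive posterior distribution of Q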


2.1 Normal Example

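Posterior distribution of $(\mu, \sigma^2)$:

$$p(\mu, \sigma^2 \mid Y_{inc}) \propto (\sigma^2)^{-n/2-1} \exp\left\{ -\frac{1}{2} \sum_{i \in inc} \frac{(y_i - \mu)^2}{\sigma^2} \right\}$$

$$= (\sigma^2)^{-n/2-1} \exp\left\{ -\frac{1}{2} \left[ \sum_{i \in inc} (y_i - \bar{y})^2 / \sigma^2 + n (\bar{y} - \mu)^2 / \sigma^2 \right] \right\}$$

The above expressions imply that

(1) $\sigma^2 \mid Y_{inc} \sim \sum_{i \in inc} (y_i - \bar{y})^2 \big/ \chi^2_{n-1}$

(2) $\mu \mid Y_{inc}, \sigma^2 \sim N(\bar{y}, \sigma^2 / n)$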


2.1 Posterior Distribution of Q

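$$\bar{Y}_{exc} \mid \mu, \sigma^2 \sim N\left( \mu, \frac{\sigma^2}{N - n} \right)$$

$$\bar{Y}_{exc} \mid \sigma^2, Y_{inc} \sim N\left( \bar{y}, \frac{\sigma^2}{N - n} + \frac{\sigma^2}{n} \right) = N\left( \bar{y}, \frac{\sigma^2}{(1 - f)\, n} \right)$$

$$Q = f \bar{y} + (1 - f)\, \bar{Y}_{exc}$$

$$Q \mid \sigma^2, Y_{inc} \sim N\left( \bar{y}, \frac{(1 - f)\, \sigma^2}{n} \right)$$

$$\bar{Y}_{exc} \mid Y_{inc} \sim t_{n-1}\left( \bar{y}, \frac{s^2}{(1 - f)\, n} \right)$$

$$Q \mid Y_{inc} \sim t_{n-1}\left( \bar{y}, \frac{(1 - f)\, s^2}{n} \right)$$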


2.1 HPD Interval for Q

Note the posterior t distribution of Q is symmetric

and unimodal -- values in the center of the

distribution are more likely than those in the tails.

Thus a $(1-\alpha)100\%$ HPD interval is:

$$\bar{y} \pm t_{n-1,\, 1-\alpha/2} \sqrt{\frac{(1 - f)\, s^2}{n}}$$

Like frequentist confidence interval, but

recovers the t correction
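The same posterior for Q can be approximated by simulation, which is a useful check on the closed-form t result. The sketch below follows the normal model with prior proportional to $1/\sigma^2$: draw $\sigma^2$, then $\mu$, then the mean of the non-sampled values, and combine. The function name `draw_Q` and the data in the usage note are hypothetical.

```python
import math
import random

def draw_Q(sample, N, D, rng):
    """Draw D values of the population mean Q from its posterior
    under the normal model with prior proportional to 1/sigma^2."""
    n = len(sample)
    f = n / N
    ybar = sum(sample) / n
    ss = sum((y - ybar) ** 2 for y in sample)
    draws = []
    for _ in range(D):
        chi2 = rng.gammavariate((n - 1) / 2, 2.0)   # chi-squared, n - 1 df
        sigma2 = ss / chi2                          # sigma^2 | Y_inc
        mu = rng.gauss(ybar, math.sqrt(sigma2 / n)) # mu | sigma^2, Y_inc
        # Mean of the N - n non-sampled values given mu and sigma^2.
        ybar_exc = rng.gauss(mu, math.sqrt(sigma2 / (N - n)))
        draws.append(f * ybar + (1 - f) * ybar_exc)
    return draws
```

For example, `draw_Q([10, 12, 9, 14, 11, 13, 8, 15, 10, 12], N=100, D=20000, rng=random.Random(0))` gives draws whose mean is close to the sample mean and whose variance is close to the variance of the corresponding t distribution, $\frac{(1-f)s^2}{n} \cdot \frac{n-1}{n-3}$.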


2.1 Some other Estimands

• Suppose Q=Median or some other percentile

• One is better off inferring about all non-sampled values

• As we will see later, simulating values of Yexc adds enormous

flexibility for drawing inferences about any finite population

quantity

• Modern Bayesian methods heavily rely on simulating values

from the posterior distribution of the model parameters and

predictive-posterior distribution of the nonsampled values

• Computationally, if the population size, N, is too large then

choose any arbitrary value K large relative to n, the sample

size

– National sample of size 2000

– US population size 306 million

– For numerical approximation, we can choose K=2000/f, for some

small f=0.01 or 0.001.
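A minimal sketch of this simulation strategy for the population median is given below. It assumes the normal model of this section and a working population size K chosen by the analyst; the function name `draw_population_median` is hypothetical.

```python
import math
import random
import statistics

def draw_population_median(sample, K, D, rng):
    """Posterior draws of the median of a population of size K,
    obtained by simulating the K - n non-sampled values."""
    n = len(sample)
    ybar = sum(sample) / n
    ss = sum((y - ybar) ** 2 for y in sample)
    medians = []
    for _ in range(D):
        sigma2 = ss / rng.gammavariate((n - 1) / 2, 2.0)  # sigma^2 | Y_inc
        mu = rng.gauss(ybar, math.sqrt(sigma2 / n))       # mu | sigma^2, Y_inc
        sd = math.sqrt(sigma2)
        y_exc = [rng.gauss(mu, sd) for _ in range(K - n)] # non-sampled values
        medians.append(statistics.median(list(sample) + y_exc))
    return medians
```

Replacing `statistics.median` with any other function of the completed population gives posterior draws of that quantity, which is the flexibility the bullets above refer to.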


2.1 Comments

• Even in this simple normal problem, Bayes is

useful:

– t-inference is recovered for small samples by putting a

prior distribution on the unknown variance

– Inference for other quantities, like Q=Median or some

other percentile, is achieved very easily by simulating

the nonsampled values (more on this below)

• Bayes is even more attractive for more complex

problems, as discussed later.


2.2 Comparison of Two Means

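• Population 1

Population size $N_1$, sample size $n_1$

$Y_{1i} \sim \text{ind } N(\mu_1, \sigma_1^2)$

$\pi(\mu_1, \sigma_1^2) \propto \sigma_1^{-2}$

Sample statistics: $(\bar{y}_1, s_1^2)$

Posterior distributions:

$(n_1 - 1)\, s_1^2 / \sigma_1^2 \sim \chi^2_{n_1 - 1}$

$\mu_1 \sim N(\bar{y}_1, \sigma_1^2 / n_1)$

$Y_{1i} \sim N(\mu_1, \sigma_1^2), \; i \in exc$

• Population 2

Population size $N_2$, sample size $n_2$

$Y_{2i} \sim \text{ind } N(\mu_2, \sigma_2^2)$

$\pi(\mu_2, \sigma_2^2) \propto \sigma_2^{-2}$

Sample statistics: $(\bar{y}_2, s_2^2)$

Posterior distributions:

$(n_2 - 1)\, s_2^2 / \sigma_2^2 \sim \chi^2_{n_2 - 1}$

$\mu_2 \sim N(\bar{y}_2, \sigma_2^2 / n_2)$

$Y_{2i} \sim N(\mu_2, \sigma_2^2), \; i \in exc$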


2.2 Estimands

• Examples

– $\bar{Y}_1 - \bar{Y}_2$ (Finite sample version of Behrens-Fisher Problem)

– Difference Pr(Y1 c) Pr(Y2 c)

– Difference in the population medians

– Ratio of the means or medians

– Ratio of Variances

• It is possible to analytically compute the posterior distribution of some of these quantities

• It is a whole lot easier to simulate values of the non-sampled $Y_1$'s in Population 1 and $Y_2$'s in Population 2


2.3 Ratio and Regression Estimates

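• Population: $(y_i, x_i;\; i = 1, 2, \ldots, N)$

• Sample: $(y_i,\; i \in inc;\; x_i,\; i = 1, 2, \ldots, N)$.

For now assume SRS

Objective: Infer about the population mean (equivalently, since $N$ is known, the total $Q = \sum_{i=1}^{N} y_i$)

Excluded Y’s are missing values

Data layout: both $y_i$ and $x_i$ are observed for the sampled units $i = 1, \ldots, n$; only $x_i$ is observed for the non-sampled units $i = n+1, \ldots, N$.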

2.3 Model Specification

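$(Y_i \mid x_i, \beta, \sigma^2) \sim \text{ind } N(\beta x_i, \sigma^2 x_i^{2g}), \quad i = 1, 2, \ldots, N, \quad g \text{ known}$

Prior distribution: $\pi(\beta, \sigma^2) \propto \sigma^{-2}$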

g=1/2: Classical Ratio estimator. Posterior variance equals

randomization variance for large samples

g=0: Regression through origin. The posterior variance is nearly the

same as the randomization variance.

g=1: HT model. Posterior variance equals randomization variance

for large samples.

Note that no asymptotic arguments have been used in deriving Bayesian inferences. The Bayesian approach makes small-sample corrections and uses t distributions.


2.3 Posterior Draws for Normal Linear Regression, g = 0

$(\hat{\beta}, s^2)$ = ls estimates of slopes and resid variance

$\sigma^{(d)2} = (n - p - 1)\, s^2 / \chi^2_{n-p-1}$

$\beta^{(d)} = \hat{\beta} + A^T z\, \sigma^{(d)}$

$\chi^2_{n-p-1}$ = chi-squared deviate with $n - p - 1$ df

$z = (z_1, \ldots, z_{p+1})^T, \; z_i \sim N(0, 1)$

$A$ = upper triangular Cholesky factor of $(X^T X)^{-1}$: $A^T A = (X^T X)^{-1}$

Nonsampled values $y_i \mid \beta^{(d)}, \sigma^{(d)} \sim N(\beta^{(d)} x_i, \sigma^{(d)2})$

• Easily extends to weighted regression
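The draw scheme can be sketched for the simplest case of an intercept and one slope (p = 1), where the 2×2 Cholesky factor can be written out by hand. This is an illustrative sketch using only the Python standard library; the function names `ols_simple` and `draw_beta_sigma2` are assumptions for the example, and a linear algebra library would normally handle general p.

```python
import math
import random

def ols_simple(x, y):
    """Least squares fit of y on (1, x): estimates, s^2 and (X^T X)^{-1}."""
    n = len(x)
    sx, sxx_raw = sum(x), sum(xi * xi for xi in x)
    xbar, ybar = sx / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b1 = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    b0 = ybar - b1 * xbar
    rss = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    s2 = rss / (n - 2)                       # n - p - 1 with p = 1
    det = n * sxx                            # determinant of X^T X
    V = (sxx_raw / det, -sx / det, n / det)  # (v00, v01, v11) of (X^T X)^{-1}
    return (b0, b1), s2, V

def draw_beta_sigma2(beta_hat, s2, V, n, rng):
    """One posterior draw of (beta, sigma^2) under the prior 1/sigma^2."""
    df = n - 2
    sigma2 = df * s2 / rng.gammavariate(df / 2, 2.0)  # df * s^2 / chi-squared
    # Lower-triangular L = A^T with L L^T = (X^T X)^{-1}.
    l00 = math.sqrt(V[0])
    l10 = V[1] / l00
    l11 = math.sqrt(V[2] - l10 ** 2)
    z0, z1 = rng.gauss(0, 1), rng.gauss(0, 1)
    sig = math.sqrt(sigma2)
    b0 = beta_hat[0] + sig * l00 * z0
    b1 = beta_hat[1] + sig * (l10 * z0 + l11 * z1)
    return (b0, b1), sigma2
```

Given a draw `(b0, b1), sigma2`, a non-sampled value with covariate `x_new` can then be drawn as `rng.gauss(b0 + b1 * x_new, math.sqrt(sigma2))`, and the completed population yields a draw of any finite population quantity.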


2.4 Binary outcome: consulting

example

• In India, any person possessing a radio, transistor

or television has to pay a license fee.

• In a densely populated area with mostly makeshift

houses practically no one was paying these fees.

• Target enforcement in areas where the proportion

of households possessing one or more of these

devices exceeds 0.3, with high probability.


2.4 Consulting example (continued)

$N$ = Population Size in particular area

$$Y_i = \begin{cases} 1, & \text{if household } i \text{ has a device} \\ 0, & \text{otherwise} \end{cases}$$

$Q = \sum_{i=1}^{N} Y_i / N$ = Proportion of households with a device

Question of Interest: $\Pr(Q > 0.3)$

• Conduct a small scale survey to answer the question of

interest

• Note that question only makes sense under Bayes paradigm


2.4 Consulting example

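srs of size $n$, $Y_{inc} = \{Y_1, \ldots, Y_n\}$, $Y_{exc} = \{Y_{n+1}, \ldots, Y_N\}$

Model for observable:

$Y_i \mid \theta \sim \text{iid Bernoulli}(\theta)$

$x = \sum_{i=1}^{n} Y_i$

$f(x \mid \theta) = \binom{n}{x} \theta^x (1 - \theta)^{n - x}$

Prior distribution:

$\pi(\theta) = 1, \; \theta \in (0, 1)$

Estimand:

$$Q = \sum_{i=1}^{N} Y_i / N = \left( x + \sum_{i=n+1}^{N} Y_i \right) \Big/ N$$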

2.4 Beta Binomial model

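The posterior distribution is

$$p(\theta \mid x) = \frac{f(x \mid \theta)\, \pi(\theta)}{\int f(x \mid \theta)\, \pi(\theta)\, d\theta} = \frac{\binom{n}{x} \theta^x (1 - \theta)^{n - x} \times 1}{\int \binom{n}{x} \theta^x (1 - \theta)^{n - x}\, d\theta}$$

$$\theta \mid x \sim \text{Beta}(x + 1, n - x + 1)$$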


2.4 Infinite Population

For $N \to \infty$, $\bar{Y}_N \to \theta$

$\Pr(\bar{Y}_N > 0.3 \mid x) = \Pr(\theta > 0.3 \mid x)$

Compute using cumulative distribution function

of a beta distribution which is a standard function

in most software such as SAS, R

What is the maximum proportion of households in

the population with devices that can be said with

great certainty?

$\Pr(\theta > \,?\, \mid x) = 0.9$

Inverse CDF of Beta Distribution
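Both calculations can be sketched without special software by using a standard identity: for integer parameters, the Beta(x + 1, n − x + 1) tail probability equals a binomial sum. The function names and the survey counts in the usage note are hypothetical; in practice a library beta CDF and inverse CDF would do the same job.

```python
from math import comb

def post_prob_exceeds(x, n, t):
    """Pr(theta > t | x) for theta | x ~ Beta(x + 1, n - x + 1).

    Uses the identity Pr(theta > t) = Pr(Binomial(n + 1, t) <= x),
    valid because both Beta parameters are integers.
    """
    m = n + 1
    return sum(comb(m, j) * t ** j * (1 - t) ** (m - j) for j in range(x + 1))

def lower_bound(x, n, prob=0.9, tol=1e-10):
    """Value c with Pr(theta > c | x) = prob, found by bisection."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if post_prob_exceeds(x, n, mid) >= prob:
            lo = mid   # still at least `prob` posterior mass above mid
        else:
            hi = mid
    return lo
```

For a hypothetical survey with 20 of 50 households owning a device, `post_prob_exceeds(20, 50, 0.3)` answers the enforcement question and `lower_bound(20, 50)` gives the proportion that is exceeded with posterior probability 0.9.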


2.5 Bayesian Nonparametric

Inference

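• Population: $Y_1, Y_2, Y_3, \ldots, Y_N$

• All possible distinct values: $d_1, d_2, \ldots, d_K$

• Model: $\Pr(Y_i = d_k) = \theta_k$

• Prior: $\pi(\theta_1, \theta_2, \ldots, \theta_K) \propto \prod_k \theta_k^{-1}$ if $\sum_k \theta_k = 1$

• Mean and Variance:

$E(Y_i \mid \theta) = \mu = \sum_k d_k \theta_k$

$\mathrm{Var}(Y_i \mid \theta) = \sigma^2 = \sum_k d_k^2 \theta_k - \mu^2$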


2.5 Bayesian Nonparametric Inference

• SRS of size n with nk equal to number

of dk in the sample

• Objective is to draw inference about the population mean: $Q = f \bar{y} + (1 - f)\, \bar{Y}_{exc}$

• As before we need the posterior distribution of $\mu$ and $\sigma^2$


2.5 Nonparametric Inference

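• Posterior distribution of $\theta$ is Dirichlet:

$$\pi(\theta \mid Y_{inc}) \propto \prod_k \theta_k^{n_k - 1} \quad \text{if } \sum_k \theta_k = 1 \text{ and } \sum_k n_k = n$$

• Posterior mean, variance and covariance of $\theta$:

$$E(\theta_k \mid Y_{inc}) = \frac{n_k}{n}, \qquad \mathrm{Var}(\theta_k \mid Y_{inc}) = \frac{n_k (n - n_k)}{n^2 (n + 1)}$$

$$\mathrm{Cov}(\theta_k, \theta_l \mid Y_{inc}) = -\frac{n_k n_l}{n^2 (n + 1)}$$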


2.5 Inference for Q

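$$E(\mu \mid Y_{inc}) = \sum_k d_k \frac{n_k}{n} = \bar{y}$$

$$\mathrm{Var}(\mu \mid Y_{inc}) = \frac{s^2}{n} \cdot \frac{n - 1}{n + 1}; \qquad s^2 = \frac{1}{n - 1} \sum_{i \in inc} (y_i - \bar{y})^2$$

$$E(\sigma^2 \mid Y_{inc}) = s^2\, \frac{n - 1}{n + 1}$$

Hence posterior mean and variance of Q are:

$$E(Q \mid Y_{inc}) = f \bar{y} + (1 - f)\, E(\mu \mid Y_{inc}) = \bar{y}$$

$$\mathrm{Var}(Q \mid Y_{inc}) = (1 - f)\, \frac{s^2}{n} \cdot \frac{n - 1}{n + 1}$$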
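The posterior moments of $\mu$ can be checked by simulation. The sketch below draws $\theta$ from the Dirichlet posterior with parameters equal to the observed counts (by normalizing independent gamma variates) and computes $\mu = \sum_k d_k \theta_k$ for each draw. The function name `draw_mu` and the example data are hypothetical.

```python
import random
from collections import Counter

def draw_mu(sample, D, rng):
    """Posterior draws of mu = sum_k d_k * theta_k, where theta follows the
    Dirichlet posterior with parameters n_k (the observed counts)."""
    counts = Counter(sample)        # n_k for each distinct observed value d_k
    values = list(counts)
    draws = []
    for _ in range(D):
        # A Dirichlet draw is a vector of gamma variates divided by their sum.
        g = [rng.gammavariate(counts[v], 1.0) for v in values]
        total = sum(g)
        draws.append(sum(v * gi for v, gi in zip(values, g)) / total)
    return draws
```

With the hypothetical sample `[2, 2, 3, 5, 5, 5, 7]`, the mean of the draws should be close to the sample mean 29/7 and their variance close to $\frac{s^2}{n} \cdot \frac{n-1}{n+1}$. Values not seen in the sample receive zero posterior weight under this prior, so only observed values enter.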


Module 3: complex sample designs

• Considered Bayesian predictive inference for population

quantities

• Focused here on the population mean, but posterior distributions of more complex finite population quantities Q can also be derived

• Key is to compute the posterior distribution of Q

conditional on the data and model

– Summarize the posterior distribution using posterior mean,

variance, HPD interval etc

• Modern Bayesian analysis uses simulation techniques to study the posterior distribution

• Models need to incorporate complex design features like

unequal selection, stratification and clustering


Modeling sample selection

• Role of sample design in model-based (Bayesian)

inference

• Key to understanding the role is to include the sample

selection process as part of the model

• Modeling the sample selection process

– Simple and stratified random sampling

– Cluster sampling, other mechanisms

– See Chapter 7 of Bayesian Data Analysis (Gelman, Carlin,

Stern and Rubin 1995)

Models for complex sample designs


Full model for Y and I

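$$p(Y, I \mid Z, \theta, \phi) = \underbrace{p(Y \mid Z, \theta)}_{\text{Model for Population}} \times \underbrace{p(I \mid Y, Z, \phi)}_{\text{Model for Inclusion}}$$

• Observed data: $(Y_{inc}, Z, I)$ (No missing values)

• Observed-data likelihood:

$$L(\theta, \phi \mid Y_{inc}, Z, I) \propto p(Y_{inc}, I \mid Z, \theta, \phi) = \int p(Y, I \mid Z, \theta, \phi)\, dY_{exc}$$

• Posterior distribution of parameters:

$$p(\theta, \phi \mid Y_{inc}, Z, I) \propto p(\theta, \phi \mid Z)\, L(\theta, \phi \mid Y_{inc}, Z, I)$$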


Ignoring the data collection process

• The likelihood ignoring the data-collection process is

based on the model for Y alone with likelihood:

$$L(\theta \mid Y_{inc}, Z) \propto p(Y_{inc} \mid Z, \theta) = \int p(Y \mid Z, \theta)\, dY_{exc}$$

• The corresponding posteriors for $\theta$ and $Y_{exc}$ are:

$$p(\theta \mid Y_{inc}, Z) \propto p(\theta \mid Z)\, L(\theta \mid Y_{inc}, Z)$$

$$p(Y_{exc} \mid Y_{inc}, Z) = \int p(Y_{exc} \mid Y_{inc}, Z, \theta)\, p(\theta \mid Y_{inc}, Z)\, d\theta \quad \text{(posterior predictive distribution of } Y_{exc})$$

• When the full posterior reduces to this simpler posterior, the data collection mechanism is called ignorable for Bayesian inference about $\theta, Y_{exc}$.


Bayes inference for probability samples

• A sufficient condition for ignoring the selection mechanism is

that selection does not depend on values of Y, that is:

$$p(I \mid Y, Z, \phi) = p(I \mid Z, \phi) \text{ for all } Y.$$

• This holds for probability sampling with design variables Z

• But the model needs to appropriately account for relationship of

survey outcomes Y with the design variables Z.

• Consider how to do this for (a) unequal probability samples, and

(b) clustered (multistage) samples


Ex 1: stratified random sampling

• Population divided into J strata

• Z is set of stratum indicators:

$$z_i = \begin{cases} 1, & \text{if unit } i \text{ is in stratum } j; \\ 0, & \text{otherwise.} \end{cases}$$

[Figure: schematic of sample and population data; Z and Y are observed for the sample, only Z for the rest of the population.]

• Stratified random sampling: simple random sample of $n_j$ units selected from population of $N_j$ units in stratum $j$.

• This design is ignorable providing model for outcomes conditions on the stratum variables Z.

• Same approach (conditioning on Z) works for post-stratification, with extensions to more than one margin.


Inference for a mean from a stratified sample

• Consider a model that includes stratum effects:

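$$[y_i \mid z_i = j] \sim \text{ind } N(\mu_j, \sigma_j^2)$$

• For simplicity assume $\sigma_j^2$ is known and the flat prior:

$$p(\mu_j \mid Z) \propto \text{const.}$$

• Standard Bayesian calculations lead to

$$[\bar{Y} \mid Y_{inc}, Z, \{\sigma_j^2\}] \sim N(\bar{y}_{st}, \sigma_{st}^2)$$

where:

$$\bar{y}_{st} = \sum_{j=1}^{J} P_j \bar{y}_j, \quad P_j = N_j / N, \quad \bar{y}_j = \text{sample mean in stratum } j,$$

$$\sigma_{st}^2 = \sum_{j=1}^{J} P_j^2 (1 - f_j)\, \sigma_j^2 / n_j, \quad f_j = n_j / N_j$$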
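These two formulas are simple enough to compute directly. The sketch below evaluates the posterior mean and variance of the population mean under the stratified normal model with known variances, and also the unweighted sample mean that results if stratum effects are left out of the model. Function names and the two-stratum numbers are hypothetical.

```python
def stratified_posterior(N_j, n_j, ybar_j, sigma2_j):
    """Posterior mean and variance of the population mean under the
    stratified normal model with known variances and flat priors."""
    N = sum(N_j)
    P = [Nj / N for Nj in N_j]                        # population shares
    mean = sum(p * yb for p, yb in zip(P, ybar_j))
    var = sum(p ** 2 * (1 - n / Nj) * s2 / n
              for p, n, Nj, s2 in zip(P, n_j, N_j, sigma2_j))
    return mean, var

def unweighted_mean(n_j, ybar_j):
    """Sample mean ignoring strata: the posterior mean when the model
    omits stratum effects."""
    return sum(n * yb for n, yb in zip(n_j, ybar_j)) / sum(n_j)

# Hypothetical design: the small stratum is heavily oversampled.
N_j, n_j = [100, 900], [10, 10]
ybar_j, sigma2_j = [20.0, 10.0], [4.0, 9.0]
post_mean, post_var = stratified_posterior(N_j, n_j, ybar_j, sigma2_j)
naive = unweighted_mean(n_j, ybar_j)
```

In this example the stratified posterior mean is 11 while the unweighted mean is 15, because the oversampled stratum has the larger mean. The gap illustrates the sensitivity to stratum effects when selection rates differ.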


Bayes for stratified normal model

• Bayes inference for this model is equivalent to

standard classical inference for the population mean

from a stratified random sample

• The posterior mean weights each case by the inverse of its inclusion probability:

$$\bar{y}_{st} = N^{-1} \sum_{j=1}^{J} N_j \bar{y}_j = N^{-1} \sum_{j=1}^{J} \sum_{i \in inc:\, z_i = j} y_i / \pi_j,$$

where $\pi_j = n_j / N_j$ = selection probability in stratum $j$.

• With unknown variances, Bayes for this model with flat prior on log(variances) yields useful t-like corrections for small samples


Suppose we ignore stratum effects?

• Suppose we assume instead that:

$$[y_i \mid z_i = j] \sim \text{ind } N(\mu, \sigma^2),$$

the previous model with no stratum effects.

• With a flat prior on the mean, the posterior mean of $\bar{Y}$ is then the unweighted mean

$$E(\bar{Y} \mid Y_{inc}, Z, \sigma^2) = \bar{y} = \sum_{j=1}^{J} p_j \bar{y}_j, \quad p_j = n_j / n$$

• This is potentially a very biased estimator if the selection rates $\pi_j = n_j / N_j$ vary across the strata

– The problem is that results from this model are highly sensitive to violations of the assumption of no stratum effects … and stratum effects are likely in most realistic settings.

– Hence prudence dictates a model that allows for stratum effects, such as the model in the previous slide.


Design consistency

• Loosely speaking, an estimator is design-consistent if

(irrespective of the truth of the model) it converges to the true

population quantity as the sample size increases, holding design

features constant.

• For stratified sampling, the posterior mean $\bar{y}_{st}$ based on the stratified normal model converges to $\bar{Y}$, and hence is design-consistent

• For the normal model that ignores stratum effects, the posterior mean $\bar{y}$ converges to

$$\bar{Y}_\pi = \sum_{j=1}^{J} \pi_j N_j \bar{Y}_j \Big/ \sum_{j=1}^{J} \pi_j N_j$$

and hence is not design consistent unless $\pi_j = \text{const}$.

• We generally advocate Bayesian models that yield design-consistent estimates, to limit effects of model misspecification


Ex 2. A continuous (post)stratifier Z

Consider PPS sampling, Z = measure of size

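Standard design-based estimator is weighted Horvitz-Thompson estimate

$$\bar{y}_{HT} = \frac{1}{N} \sum_{i=1}^{n} y_i / \pi_i; \quad \pi_i = \text{selection prob (HT)}$$

$\bar{y}_{HT}$ = model-based prediction estimate for

$$y_i \sim \text{Nor}(\beta \pi_i, \sigma^2 \pi_i^2) \quad \text{("HT model")}$$

[Figure: schematic of sample and population data; Z and Y are observed for the sample, only Z for the rest of the population.]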

When the relationship between Y and Z deviates a lot from the

HT model, HT estimate is inefficient and CI’s can have poor

coverage


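Ex 2 (continued). One continuous (post)stratifier Z

$$\bar{y}_{wt} = \frac{1}{N} \sum_{i=1}^{n} y_i / \pi_i; \quad \pi_i = \text{selection prob (HT)}$$

A modeling alternative to the HT estimator is to create predictions from a more robust model relating Y to Z:

$$\bar{y}_{mod} = \frac{1}{N} \left( \sum_{i=1}^{n} y_i + \sum_{i=n+1}^{N} \hat{y}_i \right), \quad \hat{y}_i \text{ predictions from:}$$

$$y_i \sim \text{Nor}(S(\pi_i), \sigma^2 \pi_i^k); \quad S(\pi_i) = \text{penalized spline of Y on Z}$$

(Zheng and Little 2003, 2005)

[Figure: schematic of sample and population data; Z and Y are observed for the sample, only Z for the rest of the population.]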


Ex 3. Two stage sampling

• Most practical sample designs involve selecting a cluster of units and measuring a subset of units within the selected cluster

• Two stage sample is very efficient and cost

effective

• But outcome on subjects within a cluster may be

correlated (typically, positively).

• Models can easily incorporate the correlation

among observations


Two-stage samples

• Sample design:

– Stage 1: Sample c clusters from C clusters

– Stage 2: Sample $k_i$ units from the selected cluster $i = 1, 2, \ldots, c$

$K_i$ = Population size of cluster $i$

$N = \sum_{i=1}^{C} K_i$

• Estimand of interest: Population mean Q

• Infer about excluded clusters and excluded units within

the selected clusters


Models for two-stage samples

• Model for observables

$Y_{ij} \sim N(\mu_i, \sigma^2); \; i = 1, \ldots, C; \; j = 1, 2, \ldots, K_i$

$\mu_i \sim \text{iid } N(\mu, \tau^2)$

Assume $\sigma$ and $\tau$ are known

• Prior distribution

$\pi(\mu) \propto 1$


Estimand of interest and inference strategy

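• The population mean can be decomposed as

$$NQ = \sum_{i=1}^{c} \left[ k_i \bar{y}_i + (K_i - k_i)\, \bar{Y}_{i,exc} \right] + \sum_{i=c+1}^{C} K_i \bar{Y}_i$$

• Posterior mean given $Y_{inc}$

$$E(NQ \mid Y_{inc}, \mu_i, i = 1, 2, \ldots, c; \mu) = \sum_{i=1}^{c} \left[ k_i \bar{y}_i + (K_i - k_i)\, \mu_i \right] + \sum_{i=c+1}^{C} K_i\, \mu$$

$$E(NQ \mid Y_{inc}) = \sum_{i=1}^{c} \left[ k_i \bar{y}_i + (K_i - k_i)\, E(\mu_i \mid Y_{inc}) \right] + \sum_{i=c+1}^{C} K_i\, E(\mu \mid Y_{inc})$$

where

$$E(\mu_i \mid Y_{inc}) = \frac{\bar{y}_i\, (k_i / \sigma^2) + \hat{\mu}\, (1 / \tau^2)}{k_i / \sigma^2 + 1 / \tau^2}$$

$$\hat{\mu} \equiv E(\mu \mid Y_{inc}) = \frac{\sum_i \bar{y}_i / (\tau^2 + \sigma^2 / k_i)}{\sum_i 1 / (\tau^2 + \sigma^2 / k_i)}$$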
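The posterior mean for the two-stage model, with $\sigma^2$ and $\tau^2$ treated as known, can be sketched as follows. Each sampled cluster mean is shrunk toward the precision-weighted overall mean, and non-sampled clusters are predicted by that overall mean. Function names and the numbers in the usage note are hypothetical.

```python
def cluster_posterior_means(ybars, ks, sigma2, tau2):
    """Posterior means of mu and of each sampled cluster mean mu_i,
    for known within-cluster (sigma2) and between-cluster (tau2) variances."""
    w = [1.0 / (tau2 + sigma2 / k) for k in ks]      # precision of each ybar_i
    mu_hat = sum(wi * yb for wi, yb in zip(w, ybars)) / sum(w)
    mu_i = [(yb * k / sigma2 + mu_hat / tau2) / (k / sigma2 + 1 / tau2)
            for yb, k in zip(ybars, ks)]
    return mu_hat, mu_i

def posterior_mean_Q(ybars, ks, K_sampled, K_unsampled, sigma2, tau2):
    """Posterior mean of the population mean Q for a two-stage sample."""
    mu_hat, mu_i = cluster_posterior_means(ybars, ks, sigma2, tau2)
    total = sum(k * yb + (K - k) * m
                for yb, k, K, m in zip(ybars, ks, K_sampled, mu_i))
    total += sum(K_unsampled) * mu_hat               # non-sampled clusters
    return total / (sum(K_sampled) + sum(K_unsampled))
```

For instance, with cluster means 10 and 20 from 5 units each, `sigma2 = 5` and `tau2 = 1`, the overall mean is 15 and the cluster means are shrunk to 12.5 and 17.5. A larger `tau2` or larger `ks` would reduce the shrinkage.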

Posterior Variance

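• Posterior variance can be easily computed

$$\mathrm{Var}(NQ \mid Y_{inc}) = \sum_{i=1}^{c} (K_i - k_i) \left( \sigma^2 + (K_i - k_i) \tau^2 \right) + \sum_{i=c+1}^{C} K_i \left( \sigma^2 + K_i \tau^2 \right)$$

$$\mathrm{Var}(\bar{Y}_{i,exc} \mid Y_{inc}) = E[\mathrm{Var}(\bar{Y}_{i,exc} \mid Y_{inc}, \mu_i) \mid Y_{inc}] + \mathrm{Var}[E(\bar{Y}_{i,exc} \mid Y_{inc}, \mu_i) \mid Y_{inc}] = \frac{\sigma^2}{K_i - k_i} + \tau^2, \quad i = 1, 2, \ldots, c$$

$$\mathrm{Var}(\bar{Y}_i \mid Y_{inc}) = E[\mathrm{Var}(\bar{Y}_i \mid Y_{inc}, \mu_i) \mid Y_{inc}] + \mathrm{Var}[E(\bar{Y}_i \mid Y_{inc}, \mu_i) \mid Y_{inc}] = \frac{\sigma^2}{K_i} + \tau^2, \quad i = c+1, c+2, \ldots, C$$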


Inference with unknown $\sigma$ and $\tau$

• For unknown $\sigma$ and $\tau$

– Option 1: Plug in maximum likelihood estimates. These can be obtained using PROC MIXED in SAS. PROC MIXED actually gives estimates of $\mu$, $\sigma$, $\tau$ and $E(\mu_i \mid Y_{inc})$ (Empirical Bayes)

– Option 2: Fully Bayes with additional prior

$$\pi(\mu, \sigma^2, \tau^2) \propto \sigma^{-2} \tau^{-2v} \exp\left\{ -b / (2 \tau^2) \right\}$$

where b and v are small positive numbers


Extensions and Applications

• Relaxing equal variance assumption

$$Y_{il} \sim N(\mu_i, \sigma_i^2)$$

$$(\mu_i, \log \sigma_i) \sim \text{iid } \mathrm{BVN}(\theta, \Sigma)$$

• Incorporating covariates (generalization of ratio

and regression estimates)

$$Y_{il} \sim N(x_{il} \beta_i, \sigma_i^2)$$

$$(\beta_i, \log \sigma_i) \sim \text{iid } \mathrm{MVN}(\beta, \Sigma)$$

• Small Area estimation. An application of the

hierarchical model. Here the quantity of interest is

$$E(\bar{Y}_i \mid Y_{inc}) = \left( k_i \bar{y}_i + (K_i - k_i) E(\bar{Y}_{i,exc} \mid Y_{inc}) \right) / K_i$$


Extensions

• Relaxing normal assumptions

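$$Y_{il} \mid \beta_i \sim \mathrm{Glim}\left( \mu_i = h(x_{il} \beta_i), \; \sigma^2 v(\mu_i) \right)$$

$v$ : a known function

$$\beta_i \sim \text{iid } \mathrm{MVN}(\beta, \Sigma)$$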

• Incorporate design features such as stratification

and weighting by modeling explicitly the sampling

mechanism.


Summary

• Bayes inference for surveys must incorporate design

features appropriately

• Stratification and clustering can be incorporated in

Bayes inference through design variables

• Unlike design-based inference, Bayes inference is not

asymptotic, and delivers good frequentist properties

in small samples


Module 4: Short introduction to

Bayesian computation

• A Bayesian analysis uses the entire posterior distribution of the

parameter of interest.

• Summaries of the posterior distribution are used for statistical

inferences

– Mean, median, mode, or other measures of central tendency

– Standard deviation, mean absolute deviation, or other measures of spread

– Percentiles or intervals

• Conceptually, all these quantities can be expressed analytically as integrals of functions of the parameter with respect to its posterior distribution

• Computations

– Numerical integration routines

– Simulation techniques – outline here

Models for Complex Surveys: Bayesian Computation


Types of Simulation

• Direct simulation (as for normal sample, regression)

• Approximate direct simulation

– Discrete approximation of the posterior density

– Rejection sampling

– Sampling Importance Resampling

• Iterative simulation techniques

– Metropolis Algorithm

– Gibbs sampler

– Software: WinBUGS


Approximate Direct Simulation

• Approximating the posterior distribution by a normal

distribution by matching the posterior mean and

variance.

– Posterior mean and variance computed using numerical

integration techniques

• An alternative is to use the mode and a measure of

curvature at the mode

– Mode and the curvature can be computed using many

different methods

• Approximate the posterior distribution on a grid of parameter values: compute the posterior density at each grid point, then draw values from the grid with probability proportional to the posterior density
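The grid approach in the last bullet can be sketched as below. The target is a hypothetical example (a binomial proportion with a uniform prior and 7 successes in 10 trials, so the posterior is Beta(8, 4)); the function names and the grid size are assumptions made for illustration.

```python
import math
import random


def log_post(theta, y=7, n=10):
    # Unnormalized log posterior: binomial likelihood times a uniform prior
    # (hypothetical data: y successes in n trials).
    return y * math.log(theta) + (n - y) * math.log(1.0 - theta)


def grid_approximation(log_post, grid):
    """Return normalized posterior probabilities on the grid points."""
    logp = [log_post(t) for t in grid]
    m = max(logp)  # subtract the maximum to avoid underflow
    dens = [math.exp(lp - m) for lp in logp]
    total = sum(dens)
    return [d / total for d in dens]


rng = random.Random(1)
# Midpoints of 1000 equal-width cells on (0, 1), so log(0) is never evaluated.
grid = [(j + 0.5) / 1000 for j in range(1000)]
probs = grid_approximation(log_post, grid)

# Draw grid values with probability proportional to the posterior density.
draws = rng.choices(grid, weights=probs, k=5000)

grid_mean = sum(t * p for t, p in zip(grid, probs))
draw_mean = sum(draws) / len(draws)
print(grid_mean, draw_mean)  # both should be near the Beta(8, 4) mean of 2/3
```

A finer grid reduces discretization error, but the cost grows quickly with the number of parameters, which is one reason the later methods are needed.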


Normal Approximation

Posterior density: $\pi(\theta \mid x)$

Easy to work with log-posterior density

$$l(\theta) = \log(\pi(\theta \mid x))$$

At the mode, $f(\theta) = l'(\theta) = 0$

Curvature: $f'(\theta) = l''(\theta)$

For the logarithm of the normal density, the mode is the mean and the curvature at the mode is the negative of the precision

(Precision: reciprocal of variance)
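A minimal sketch of the mode-and-curvature approximation follows, again using a hypothetical Beta(8, 4) posterior. Newton's method with finite-difference derivatives is one of many ways to find the mode; it is chosen here only for brevity, and the step size `h` and starting value are assumptions.

```python
import math


def log_post(theta, y=7, n=10):
    # Unnormalized log posterior l(theta) for a hypothetical binomial example.
    return y * math.log(theta) + (n - y) * math.log(1.0 - theta)


def d1(f, t, h=1e-4):
    # Central-difference approximation to l'(t)
    return (f(t + h) - f(t - h)) / (2.0 * h)


def d2(f, t, h=1e-4):
    # Central-difference approximation to l''(t)
    return (f(t + h) - 2.0 * f(t) + f(t - h)) / (h * h)


def normal_approximation(f, start, tol=1e-8, max_iter=50):
    """Find the mode by Newton's method; return (mode, approximate variance)."""
    t = start
    for _ in range(max_iter):
        step = d1(f, t) / d2(f, t)
        t -= step
        if abs(step) < tol:
            break
    # Curvature at the mode is minus the precision, so variance = -1 / l''(mode).
    return t, -1.0 / d2(f, t)


mode, var = normal_approximation(log_post, start=0.5)
print(mode, var)  # analytic values for this example: mode 0.7, variance 0.021
```

For this example the exact posterior variance of Beta(8, 4) is about 0.017, so the curvature-based value is an approximation rather than a match.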


Rejection Sampling

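• Actual density from which to draw: $\pi(\theta \mid \text{data})$

• Candidate density from which it is easy to draw: $g(\theta)$, with $g(\theta) > 0$ for all $\theta$ with $\pi(\theta \mid \text{data}) > 0$

• The importance ratio is bounded:

$$\frac{\pi(\theta \mid \text{data})}{g(\theta)} \le M$$

• Sample $\theta$ from $g$, accept with probability $p$, otherwise redraw from $g$:

$$p = \frac{\pi(\theta \mid \text{data})}{M \, g(\theta)}$$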
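The accept/redraw rule above can be sketched as follows. The target is a hypothetical unnormalized Beta(8, 4) density and the candidate is Uniform(0, 1); using an unnormalized target is fine as long as M bounds the ratio of that same unnormalized target to g. All names are illustrative.

```python
import random


def target(theta):
    # Unnormalized target density (hypothetical Beta(8, 4) posterior)
    return theta ** 7 * (1.0 - theta) ** 3


def g_density(theta):
    # Candidate density: Uniform(0, 1)
    return 1.0


# Bound on target / g: the target is maximized at its mode, theta = 0.7.
M = target(0.7) / g_density(0.7)


def rejection_sample(n, rng):
    """Draw n values from the target by rejection sampling."""
    draws = []
    proposals = 0
    while len(draws) < n:
        theta = rng.random()                      # sample from g
        p = target(theta) / (M * g_density(theta))  # acceptance probability
        proposals += 1
        if rng.random() <= p:
            draws.append(theta)                   # accept
        # otherwise redraw from g on the next pass
    return draws, proposals


rng = random.Random(2)
draws, proposals = rejection_sample(5000, rng)
accept_rate = len(draws) / proposals
sample_mean = sum(draws) / len(draws)
print(accept_rate, sample_mean)
```

The acceptance rate falls as g departs from the target, so a candidate that roughly matches the posterior shape wastes fewer draws.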


Sampling Importance Resampling

• Target density from which to draw: $\pi(\theta \mid \text{data})$

• Candidate density from which it is easy to draw: $g(\theta)$, such that $g(\theta) > 0$ for all $\theta$ with $\pi(\theta \mid \text{data}) > 0$

• The importance ratio

$$w(\theta) = \frac{\pi(\theta \mid \text{data})}{g(\theta)}$$

• Sample $M$ values of $\theta$ from $g$: $\theta_1^*, \theta_2^*, \ldots, \theta_M^*$

• Compute the $M$ importance ratios $w(\theta_i^*)$, $i = 1, 2, \ldots, M$, and resample with probability proportional to the importance ratios.
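A minimal sketch of these steps is given below, with the same hypothetical Beta(8, 4) target and a Uniform(0, 1) candidate. Resampling with replacement and the sizes M = 20000 and 2000 are assumptions for illustration; the slide does not specify them.

```python
import random


def target(theta):
    # Unnormalized target density (hypothetical Beta(8, 4) posterior)
    return theta ** 7 * (1.0 - theta) ** 3


def g_density(theta):
    # Candidate density: Uniform(0, 1)
    return 1.0


rng = random.Random(3)

# Step 1: sample M values from the candidate density g.
M = 20000
candidates = [rng.random() for _ in range(M)]

# Step 2: compute the importance ratios w(theta*) = target / g.
weights = [target(t) / g_density(t) for t in candidates]

# Step 3: resample with probability proportional to the importance ratios
# (with replacement here, which is an assumption of this sketch).
resampled = rng.choices(candidates, weights=weights, k=2000)

sir_mean = sum(resampled) / len(resampled)
print(sir_mean)  # should be near the Beta(8, 4) mean of 2/3
```

Unlike rejection sampling, no bound on the importance ratio is required, but the draws are only approximately from the target, with the approximation improving as M grows relative to the resample size.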


Markov Chain Simulation

• In real problems it may be hard to apply direct or

approximate direct simulation techniques.

• The Markov chain methods involve a random walk in the

parameter space which converges to a stationary

distribution that is the target posterior distribution.

– Metropolis-Hastings algorithms

– Gibbs sampling


Metropolis-Hastings algorithm

• Try to find a Markov Chain whose stationary distribution is

the desired posterior distribution.

• Metropolis et al. (1953) showed how to construct such a chain, and the procedure was later generalized by Hastings (1970). The result is called the Metropolis-Hastings algorithm.

• Algorithm:

– Step 1: At iteration t, draw a candidate point y from the candidate density p:

$$y \sim p(y \mid x^{(t)})$$


– Step 2: Compute the ratio

$$w = \min\left\{ 1, \; \frac{f(y) / p(y \mid x^{(t)})}{f(x^{(t)}) / p(x^{(t)} \mid y)} \right\}$$

– Step 3: Generate a uniform random number u and set

$$X^{(t+1)} = \begin{cases} y & \text{if } u \le w \\ X^{(t)} & \text{otherwise} \end{cases}$$

– This Markov chain has stationary distribution f(x).

– Any p(y|x) that has the same support as f(x) will work.

– If p(y|x) = f(y), then we have independent samples.

– The closer the proposal density p(y|x) is to the target density f, the faster the convergence.
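Steps 1 to 3 can be sketched as below for a hypothetical target, an unnormalized standard normal density. The candidate density is a normal random walk centred at the current value, which is an assumption of this sketch; because that proposal is symmetric the p terms cancel, but they are kept so the code mirrors the general ratio.

```python
import math
import random


def log_f(x):
    # Log of the unnormalized target density (hypothetical: standard normal)
    return -0.5 * x * x


def log_p(y, x, step):
    # Log candidate density p(y | x): normal with mean x and sd `step`
    z = (y - x) / step
    return -0.5 * z * z - math.log(step) - 0.5 * math.log(2.0 * math.pi)


def metropolis_hastings(log_f, n_iter, x0, step, rng):
    x = x0
    chain = []
    for _ in range(n_iter):
        # Step 1: draw a candidate point y ~ p(y | x)
        y = rng.gauss(x, step)
        # Step 2: ratio w = min(1, [f(y)/p(y|x)] / [f(x)/p(x|y)]), on the log scale
        log_ratio = (log_f(y) - log_p(y, x, step)) - (log_f(x) - log_p(x, y, step))
        w = math.exp(min(0.0, log_ratio))
        # Step 3: accept the candidate if u <= w, otherwise keep the current value
        if rng.random() <= w:
            x = y
        chain.append(x)
    return chain


rng = random.Random(4)
chain = metropolis_hastings(log_f, n_iter=20000, x0=3.0, step=2.4, rng=rng)
kept = chain[2000:]  # discard an initial burn-in period
mh_mean = sum(kept) / len(kept)
mh_var = sum((v - mh_mean) ** 2 for v in kept) / len(kept)
print(mh_mean, mh_var)  # should be near 0 and 1 for this target
```

Successive values are correlated, so the effective number of independent draws is smaller than the chain length.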


Gibbs sampling

• Gibbs sampling is a particular case of the Markov chain Monte Carlo method, suitable for multivariate problems

$$x = (x_1, x_2, \ldots, x_p) \sim f(x)$$

Full conditional densities:

$$f(x_i \mid x_1, x_2, \ldots, x_{i-1}, x_{i+1}, \ldots, x_p)$$

Gibbs sequence:

$$x_1^{(t+1)} \sim f(x_1 \mid x_2^{(t)}, x_3^{(t)}, \ldots, x_p^{(t)})$$

$$x_2^{(t+1)} \sim f(x_2 \mid x_1^{(t+1)}, x_3^{(t)}, \ldots, x_p^{(t)})$$

$$x_i^{(t+1)} \sim f(x_i \mid x_1^{(t+1)}, \ldots, x_{i-1}^{(t+1)}, x_{i+1}^{(t)}, \ldots, x_p^{(t)})$$

$$x_p^{(t+1)} \sim f(x_p \mid x_1^{(t+1)}, \ldots, x_{p-1}^{(t+1)})$$

1. This is also a Markov chain whose stationary distribution is f(x)

2. This is an easier algorithm if the conditional densities are easy to work with

3. If the conditionals are harder to sample from, then use MH or rejection sampling within the Gibbs sequence
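As a minimal sketch of the Gibbs sequence, the example below samples a hypothetical bivariate normal with zero means, unit variances, and correlation 0.6. That target is chosen because both full conditionals are normal and easy to draw from; it is an illustration, not a survey model from these slides.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_iter, rng, x1=0.0, x2=0.0):
    """Gibbs sampler for a standard bivariate normal with correlation rho.

    Full conditionals: x1 | x2 ~ N(rho * x2, 1 - rho^2), and symmetrically for x2.
    """
    cond_sd = math.sqrt(1.0 - rho * rho)
    chain = []
    for _ in range(n_iter):
        x1 = rng.gauss(rho * x2, cond_sd)  # draw x1 given the current x2
        x2 = rng.gauss(rho * x1, cond_sd)  # draw x2 given the updated x1
        chain.append((x1, x2))
    return chain


rng = random.Random(5)
rho = 0.6
chain = gibbs_bivariate_normal(rho, n_iter=20000, rng=rng, x1=5.0, x2=-5.0)
kept = chain[1000:]  # discard an initial burn-in period

n = len(kept)
m1 = sum(a for a, _ in kept) / n
m2 = sum(b for _, b in kept) / n
s11 = sum((a - m1) ** 2 for a, _ in kept) / n
s22 = sum((b - m2) ** 2 for _, b in kept) / n
s12 = sum((a - m1) * (b - m2) for a, b in kept) / n
sample_corr = s12 / math.sqrt(s11 * s22)
print(m1, m2, sample_corr)  # means near 0, correlation near rho
```

Each component is updated using the most recent values of the others, which is the defining feature of the sequence; a highly correlated target would make the chain move more slowly.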


Conclusion

• Design-based: limited, asymptotic

• Bayesian inference for surveys: flexible, unified,

now feasible using modern computational

methods

• Calibrated Bayes: build models that yield

inferences with good frequentist properties –

diffuse priors, strata and post-strata as covariates,

clustering with mixed effects models

• Software: WinBUGS, but software targeted to surveys would help.

• The future may be Calibrated Bayes!
