chapter_4part2


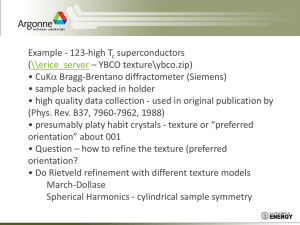

BIT 3193 MULTIMEDIA DATABASE
CHAPTER 4 : QUERYING MULTIMEDIA DATABASES

• Compared with text, the structure of an image is much less explicit, so techniques are needed to identify a structure in it.
• Characterizing the content of visual objects is therefore much more complex and uncertain.
• Visual content is characterized by feature vectors.
  • A feature is an attribute derived by transforming the original visual object with an image analysis algorithm.
• The visual query mode matches the input image against pre-extracted features of real objects.
  • The pre-extracted features are held in the database.
• Purpose of feature extraction:
  • To obtain a set of numerical features that removes redundancy from the image and reduces its dimensionality.
• The most commonly used features for content-based image retrieval are shape, color and texture.
• A content-based image retrieval (CBIR) system uses visual content features to retrieve relevant images from an image database.
  • CBIR systems retrieve images according to the features the user is interested in.
  • Features such as texture, color, shape and location properties reflect the contents of an image.

Example: Trainable System for Object Detection
• A set of positive examples of the object class under consideration (e.g., images of frontal faces) and a set of negative examples (e.g., any non-face image) are collected.
• The images are transformed into (feature) vectors in a chosen representation.
  • For example, a vector the size of the image holding the value at each pixel location; this is called the "pixel" representation.
• The vectors (examples) are used to train a pattern classifier, the Support Vector Machine (SVM), to learn to separate positive from negative examples.
• To detect objects in out-of-sample images, the system slides a fixed-size window over the image and uses the trained classifier to decide which windows show the object of interest.
• At each window position, the system extracts the same set of features as in the training step and feeds them into the classifier; the classifier output determines whether or not the window contains an object of interest.

Representation Techniques for Face and People Detection
• Pixel representation
• Eigenvector representation
• Wavelet representation

• Multimedia data such as images or video are typically represented or stored as very high-dimensional vectors.
• This high dimensionality strongly affects the processing time for searching and for other operations on such data.
• It is therefore practically important to find compact representations of multimedia data that do not significantly degrade the performance of systems such as detectors.

Image retrieval can be based on:
• Color: using color histograms and color invariants
• Texture: variation in intensity and topography of surfaces
• Shape: aspect ratio, circularity and moments for global features; boundary segments for local features
• Position: using spatial indexing
• Image transformations: using transforms
• Appearance: using a combination of color, texture and intensity surfaces

Table 4.1 : Features used in retrieval

| Feature | Measures | Theory | Main use | Problems |
|---|---|---|---|---|
| Color | Histogram | Swain and Ballard | Color indexing | Lighting variations |
| Texture | Pixel intensity (illumination, topography); degrees of directionality, regularity, periodicity | Gabor filters; fractals | Indexing; texture thesaurus | |
| Shape | Global features: aspect ratio, circularity, moments; local features: boundary segments | Active contours | Shape indexing; object recognition | |
| Appearance | Global features: curvature, orientation; local features: local curvatures and orientation | Transforms | Image classification | |
| Position | Spatial relationships | Tessellations (Voronoi) | Object recognition | Spikes and holes in objects cause errors in indexing |

Table 4.2 : Advantages and Disadvantages of feature methods of retrieval

| Feature | Advantage | Disadvantage |
|---|---|---|
| Color | Can be applied to all colored images, 2D and 3D | |
| Texture | Distinguishes between image regions with similar color, e.g. sea and sky | Large feature vectors, each containing 4000 elements, have been used |
| Shape | Important in image segmentation; can classify images as stick-like, plate-like or blob-like | Representation is difficult; a change of viewpoint changes an object's shape; spikes and holes; 3D is very difficult |
| Appearance | Important way of judging similarity; can generate invariant measures; describes an image at varying levels of detail | |
| Position | Can be applied to 2D and 3D images | Images must contain objects in defined spatial relationships; spatial indexing is not useful unless combined with color and texture |

• There are two alternative approaches:
  • Use a query image: the user can provide an image, or compose a target image by selecting and clicking color palettes and texture patterns.
  • Use user-defined features.
• Query process:
  • The user selects a sample image.
  • The distribution of image objects is then recomputed in terms of their distance from the sample image.

• Use automatic methods for generating content-dependent metadata.
• Speech recognition techniques are used to identify both the speakers and the spoken words.
• Factors that influence the complexity of the identification problem include:
  • Isolated words (easier to recognize than continuous speech)
  • Single speaker (one speaker is easier)
  • Vocabulary size (smaller is easier)
  • Grammar (tightly constrained is easier)
• Users can use query by example (QBE).
• The technologies used to achieve this have to be integrated; they include:
  • Large-vocabulary speech recognition
  • Speaker segmentation
  • Speaker clustering
  • Speaker identification
  • Name spotting
  • Topic classification
  • Story segmentation

• Videos are far more complex.
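Returning to images, the query-by-example process described earlier (extract a feature from the sample image, then rank stored images by their distance from it) can be sketched with the color histogram and Swain and Ballard's histogram intersection from Table 4.1. This is a minimal sketch that assumes images are given as flat lists of (R, G, B) pixels and that each channel is quantized into 4 levels. The function names and the synthetic data are hypothetical and are not part of any SQL/MM or Oracle API.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]      # (R, G, B), each channel 0..255
Bin = Tuple[int, ...]             # quantized (R, G, B) bin index
Histogram = Dict[Bin, float]


def color_histogram(pixels: List[Pixel], bins: int = 4) -> Histogram:
    """Quantize each channel into `bins` levels; return the relative frequency of each bin."""
    step = 256 // bins
    counts = Counter(
        tuple(min(c // step, bins - 1) for c in pixel) for pixel in pixels
    )
    return {b: n / len(pixels) for b, n in counts.items()}


def intersection(h1: Histogram, h2: Histogram) -> float:
    """Histogram intersection (Swain and Ballard): 1.0 for identical normalized histograms."""
    return sum(min(v, h2.get(b, 0.0)) for b, v in h1.items())


def rank_by_example(query: List[Pixel], database: Dict[str, List[Pixel]],
                    bins: int = 4) -> List[Tuple[str, float]]:
    """Score every stored image against the sample image, most similar first."""
    q = color_histogram(query, bins)
    scores = [(name, intersection(q, color_histogram(px, bins)))
              for name, px in database.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)


# Tiny synthetic "images" given as flat pixel lists (values invented for the demo).
database = {
    "sea": [(10, 60, 200)] * 90 + [(240, 240, 240)] * 10,
    "sky": [(120, 180, 250)] * 100,
    "forest": [(20, 120, 30)] * 100,
}
query = [(15, 50, 210)] * 80 + [(250, 250, 250)] * 20

for name, score in rank_by_example(query, database):
    print(f"{name}: {score:.2f}")
```

A higher intersection means a more similar image. A histogram records only how much of each color occurs, not where it occurs. That makes it sensitive to lighting and unable to separate regions of similar color. Tables 4.1 and 4.2 give these as the reasons for combining color with texture or position.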
• Role of video feature extraction:
  • Image-based features
  • Motion-based features (e.g. motion of the camera)
  • Object detection and tracking
  • Speech recognition
  • Speaker identification
  • Word spotting
  • Audio classification

[Figure: hierarchical index of a video. A clip (category, title, date, source, duration, theme) contains scenes / story segments 1..m (frame start, frame end, number of shots, event, keywords, theme, duration); each scene contains shots 1..k, a shot being captured between a record and a stop camera operation (frame start, frame end, duration, camera, audio level); each shot contains frames (frame number).]

• Clip
  • A digital video document that can last from a few seconds to a few hours.
• Scene
  • A sequential collection of shots unified by a common event or locale (background).
  • A clip has one or more scenes.
• Shot
  • The fundamental unit.
  • Much research has focused on segmenting video by detecting the boundaries between camera shots.
  • Defined as a sequence of frames captured by a single camera in a single continuous action in time and space.
  • Example: two people having a conversation.
  • The low-level syntactic building blocks of a video sequence.
• The video operations are:
  • Create
  • Concatenate, union and intersection (based on temporal and spatial conditions)
  • Output
• Query example: "Show the details of movies where a character said 'I am not interested in a semantic argument, I just need the protein'."

[Figure A : Video retrieval system. Components: user, query inputs, query processing, query results, query presentation (visual summaries), video processing and annotation, indexes, digital video collection, content delivery, access control and rights management.]

ISO/IEC 13249 (SQL/MM)
• SQL Multimedia and Application Packages
• Standardized in 2001 by the ISO subcommittee SC32 working group
• Provides structured object types and methods to store and manipulate image data by content
• Supports the object-relational (OR) data model
• Parts:
  • Part 1: Framework
  • Part 2: Full-Text
  • Part 3: Spatial
  • Part 5: Still Image
  • Part 6: Data Mining

Object types that comply with the first edition of the ISO/IEC 13249-5:2001 SQL/MM Part 5: StillImage standard:

| Object type | Description |
|---|---|
| SI_AverageColor | Describes the average color feature of an image. |
| SI_Color | Encapsulates color values of a digitized image. |
| SI_ColorHistogram | Describes the relative frequencies of the colors exhibited by samples of an image. |
| SI_FeatureList | Describes an image that is represented by a composite feature. The composite feature is based on up to four basic image features (SI_AverageColor, SI_ColorHistogram, SI_PositionalColor and SI_Texture) and their associated feature weights. |
| SI_StillImage | Represents digital images with inherent image characteristics such as height, width and format. |
| SI_PositionalColor | Describes the positional color feature of an image. Assuming that an image is divided into n by m rectangles, the positional color feature characterizes the image by the n by m most significant colors of the rectangles. |
| SI_Texture | Describes the texture feature of the image, characterized by the size of repeating items (coarseness), brightness variations (contrast) and predominant direction (directionality). |

See the following website for further information on the Oracle implementation of SQL/MM Still Image: http://download.oracle.com/docs/cd/B19306_01/appdev.102/b14297/ch_stimgref.htm#CHDBAGID

Example of a media table for still images defined according to the SQL/MM standard

Given the following PM.SI_MEDIA table definition in the Oracle implementation:

```sql
CREATE TABLE PM.SI_MEDIA (
  PRODUCT_ID        NUMBER(6),
  PRODUCT_PHOTO     SI_StillImage,
  AVERAGE_COLOR     SI_AverageColor,
  COLOR_HISTOGRAM   SI_ColorHistogram,
  FEATURE_LIST      SI_FeatureList,
  POSITIONAL_COLOR  SI_PositionalColor,
  TEXTURE           SI_Texture,
  CONSTRAINT id_pk PRIMARY KEY (PRODUCT_ID));
```

Example 1:
• Construct an SI_AverageColor object from a specified color using the SI_AverageColor(averageColorSpec) constructor.
Solution:

```sql
-- Build an SI_Color, set its RGB value, wrap it in an SI_AverageColor and store it.
DECLARE
  myColor    SI_Color;
  myAvgColor SI_AverageColor;
BEGIN
  myColor := NEW SI_COLOR(null, null, null);   -- empty color object
  myColor.SI_RGBColor(10, 100, 200);           -- set red, green and blue values
  myAvgColor := NEW SI_AverageColor(myColor);  -- averageColorSpec constructor
  INSERT INTO PM.SI_MEDIA (product_id, average_color)
    VALUES (75, myAvgColor);
  COMMIT;
END;
/
```

Example 2:
• Derive an SI_AverageColor value using the SI_AverageColor(sourceImage) constructor.

Solution:

```sql
-- Read the stored image and compute its average color from the image content.
DECLARE
  myimage    SI_StillImage;
  myAvgColor SI_AverageColor;
BEGIN
  SELECT product_photo INTO myimage
    FROM PM.SI_MEDIA
   WHERE product_id = 1;
  myAvgColor := NEW SI_AverageColor(myimage);  -- sourceImage constructor
END;
/
```

Example 3:
• Insert into the PM.SI_MEDIA table an object with PRODUCT_ID = 1 and an average color of RED = 20, GREEN = 30 and BLUE = 50.

Solution:

```sql
-- Same pattern as Example 1, with the required RGB values and product ID.
DECLARE
  myColor    SI_Color;
  myAvgColor SI_AverageColor;
BEGIN
  myColor := NEW SI_COLOR(null, null, null);
  myColor.SI_RGBColor(20, 30, 50);             -- RED = 20, GREEN = 30, BLUE = 50
  myAvgColor := NEW SI_AverageColor(myColor);
  INSERT INTO PM.SI_MEDIA (product_id, average_color)
    VALUES (1, myAvgColor);
  COMMIT;
END;
/
```

Example 4:
• Derive an SI_AverageColor object for the image with PRODUCT_ID = 13 using the SI_FindAvgClr() function.
Solution:

```sql
-- Compute the average color of a stored image with the SI_FindAvgClr function.
DECLARE
  myimage    SI_StillImage;
  myAvgColor SI_AverageColor;
BEGIN
  SELECT product_photo INTO myimage
    FROM PM.SI_MEDIA
   WHERE product_id = 13;
  myAvgColor := SI_FindAvgClr(myimage);
END;
/
```

• In 2002, the ISO/IEC Moving Picture Experts Group (MPEG) published a standard: MPEG-7.
  • Formally named Multimedia Content Description Interface.
• MPEG-4 was the first multimedia representation standard based on object-based coding.
• MPEG-7 is currently the most complete description standard for multimedia data.
  • Any audio/visual material associated with multimedia data can be indexed and searched.
• Provides:
  • A set of Descriptors (D): quantitative measures of audio/visual features
  • Description Schemes (DS): the structure of, and relationships between, descriptors
• MPEG-7 descriptions can be associated with:
  • Still pictures, graphics, 3D models, audio, speech and video
  • Composition information about how these elements are combined in a multimedia presentation (scenarios)
• MPEG-7 descriptions do not depend on the way the described content is coded or stored.
  • It is possible to create an MPEG-7 description of an analogue movie, or of a picture printed on paper, in the same way as for digitized content.
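A few Python data classes can model two ideas from the list above: Descriptors are grouped by Description Schemes, and a description is kept separate from the coded content. This is a toy sketch only. It does not follow the MPEG-7 DDL or XML Schema. The class and field names (including `media_locator`) are hypothetical, and the descriptor values are invented. The names DominantColor, EdgeHistogram and StillRegion only echo MPEG-7 visual tools.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Descriptor:
    """Toy stand-in for an MPEG-7 Descriptor (D): one quantitative feature measure."""
    name: str
    values: List[float]


@dataclass
class DescriptionScheme:
    """Toy stand-in for a Description Scheme (DS): structure over Ds and nested DSs."""
    name: str
    children: List[Union[Descriptor, "DescriptionScheme"]] = field(default_factory=list)

    def descriptors(self) -> List[Descriptor]:
        """Collect every Descriptor in this scheme, depth first."""
        found: List[Descriptor] = []
        for child in self.children:
            if isinstance(child, Descriptor):
                found.append(child)
            else:
                found.extend(child.descriptors())
        return found


@dataclass
class Description:
    """A description stored apart from the content it describes.

    `media_locator` (hypothetical) is just a string, so it can point at a digital
    file or identify non-digital material such as a printed picture.
    """
    media_locator: str
    root: DescriptionScheme


# Illustrative values only; the nesting and numbers are invented for this example.
photo = Description(
    media_locator="printed photo, archive box 12",
    root=DescriptionScheme("StillRegion", [
        Descriptor("DominantColor", [0.55, 0.30, 0.15]),
        DescriptionScheme("TextureInfo", [
            Descriptor("EdgeHistogram", [0.2, 0.1, 0.4, 0.1, 0.2]),
        ]),
    ]),
)
print([d.name for d in photo.root.descriptors()])  # ['DominantColor', 'EdgeHistogram']
```

`Description` holds only a locator string and never the media itself. The same structure can therefore describe a digital file or, as in the demo, a printed photo.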
• MPEG-7 can exploit the advantages provided by MPEG-4-coded content.
  • MPEG-4 encodes audio-visual material as objects that have certain relations in time (synchronization) and space (on the screen for video, or in the room for audio).
  • This makes it possible to attach descriptions to elements (objects) within the scene, such as audio and visual objects.
• The same material can be described using different types of features, tuned to the area of application. For example, for visual material:
  • A lower abstraction level would be a description of shape, size, texture, color, movement (trajectory) and position ("where in the scene can the object be found?").
  • The highest level would give semantic information: "This is a scene with a barking brown dog on the left and a blue ball that falls down on the right, with the sound of passing cars in the background."
• Apart from describing what is depicted in the content, a description can carry the following additional information about the multimedia data:
  • The form (e.g. JPEG, MPEG-2)
  • The overall data size (helps determine whether the material can be "read" by the user terminal)
  • Conditions for accessing the material (includes links to a registry with intellectual property rights information, and price)
  • Classification (includes parental rating, and content classification into a number of predefined categories)
  • Links to other relevant material (helps the user speed up the search)
  • The context (the occasion of the recording, e.g. Olympic Games 1996, final of 200 meter hurdles, men)
• Main elements of the MPEG-7 standard:
  • Description Tools: Descriptors (D) and Description Schemes (DS)
  • A Description Definition Language (DDL), which defines the syntax of the MPEG-7 Description Tools and allows the creation of new Description Schemes
  • System tools, which support a binary coded representation for efficient storage and transmission, transmission mechanisms (for both textual and binary formats), multiplexing of descriptions, synchronization of descriptions with content, and management and protection of intellectual property in MPEG-7 descriptions
• The key information captured by the description tools includes:
  • Structural information on spatial, temporal or spatio-temporal components of the content (scene cuts, segmentation into regions, region motion tracking)
  • Low-level features in the content (colors, textures, sound timbres, melody description)
  • Conceptual information about the reality captured by the content (objects and events, interactions among objects)
  • Information about how to browse the content efficiently (summaries, variations, spatial and frequency subbands)
  • Information about collections of objects
  • Information about the interaction of the user with the content (user preferences, usage history)

[Figures: Scope of MPEG-7; MPEG-7 Main Elements; Abstract representation of possible applications using MPEG-7]

Integration of MPEG-7 into MMDBMS
• MPEG-7 relies on XML Schema, so the strategy for mapping XML to the database data model is an open issue.
• SQL/MM querying:
  • Because MPEG-7 provides such rich descriptions, SQL/MM needs enhancements.
  • Operations that manipulate XML, or produce XML as their result, are one option.
• Indexing methods for multidimensional data can be used to index multimedia data.
• MPEG-7 provides methods for semantic indexing.

More on MPEG-7 can be found in ISO/IEC JTC1/SC29/WG11, Coding of Moving Pictures and Audio, "MPEG-7 Overview": http://www.chiariglione.org/mpeg/standards/mpeg-7/mpeg-7.htm
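One way to handle the XML-to-database mapping issue noted above is to "shred" each description into relational rows that ordinary SQL can query. The sketch below does this with Python's standard `xml.etree.ElementTree` and `sqlite3` modules. The XML is a simplified, hypothetical fragment; real MPEG-7 documents use the namespaced schema defined by the DDL. The table layout is one possible mapping, not a standard one.

```python
import sqlite3
import xml.etree.ElementTree as ET

# Simplified, hypothetical MPEG-7-style fragment (not valid MPEG-7).
DOC = """
<Description>
  <Image id="img1" uri="photos/beach.jpg">
    <Descriptor name="AverageColor">20 30 50</Descriptor>
  </Image>
  <Image id="img2" uri="photos/sunset.jpg">
    <Descriptor name="AverageColor">200 90 40</Descriptor>
  </Image>
</Description>
"""


def shred(xml_text: str, conn: sqlite3.Connection) -> None:
    """Map each <Image> to a row and each descriptor component to its own row."""
    conn.execute("CREATE TABLE image (id TEXT PRIMARY KEY, uri TEXT)")
    conn.execute(
        "CREATE TABLE descriptor (image_id TEXT REFERENCES image(id), "
        "name TEXT, idx INTEGER, val REAL)"
    )
    root = ET.fromstring(xml_text.strip())
    for img in root.iter("Image"):
        conn.execute("INSERT INTO image VALUES (?, ?)", (img.get("id"), img.get("uri")))
        for desc in img.iter("Descriptor"):
            # One row per vector component, e.g. idx 0 = red for AverageColor.
            for idx, value in enumerate(desc.text.split()):
                conn.execute(
                    "INSERT INTO descriptor VALUES (?, ?, ?, ?)",
                    (img.get("id"), desc.get("name"), idx, float(value)),
                )
    conn.commit()


conn = sqlite3.connect(":memory:")
shred(DOC, conn)

# Ordinary SQL over the shredded description: images whose average red exceeds 150.
reddish = conn.execute(
    "SELECT i.uri FROM image i JOIN descriptor d ON d.image_id = i.id "
    "WHERE d.name = 'AverageColor' AND d.idx = 0 AND d.val > 150"
).fetchall()
print(reddish)  # [('photos/sunset.jpg',)]
```

Storing one row per vector component keeps the schema generic. The cost is that any query over a whole feature vector needs joins or aggregation. This is one reason multidimensional indexing methods are suggested for multimedia data.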