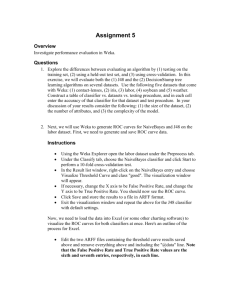

Automatic Transformation of Raw Clinical Data into Clean Data Using Decision Tree Learning
Jian Zhang
Supervised by: Karen Petrie

Background
• Cancer research has become an extremely data-rich environment.
• Plenty of analysis packages can be used to analyze the data.
• Data preprocessing

Rich data environment
• Some factors relating to breast cancer

Raw clinical data sample
Yes-No data:
• yes: yes, Yes, Ye, yed, yef …
• no: No, n, not …
• null: don't know, no data, waiting for lab
Positive-Negative data:
• Positive: +, ++, p, p++ …
• Negative: -, n, neg, n--- …
• Null: no data, ruined sample, waiting for lab

Basic version

Question
Could we automate the process?

Introduction
• Decision tree learning
• Weka

Decision Tree Learning
Decision tree learning is a method for approximating discrete-valued functions and is one of the most popular inductive learning algorithms.

Decision tree sample

Weka
Weka (Waikato Environment for Knowledge Analysis) is a popular suite of machine learning software written in Java. It contains a collection of algorithms for data analysis and predictive modeling.
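
The slides do not show how a learned tree would map raw values to clean labels. As a minimal sketch under stated assumptions, the Python code below trains an ID3-style decision tree (entropy and information gain, the idea that C4.5, implemented in Weka as J48, builds on) on the Yes-No examples from the raw clinical data sample. The two features (`first_char`, `has_space`) and every function name are hypothetical choices for illustration; they are not the features or the Weka configuration used in this project.

```python
import math
from collections import Counter

# Labelled examples copied from the "Raw clinical data sample" slide (Yes-No data).
TRAIN = [
    ("yes", "yes"), ("Yes", "yes"), ("Ye", "yes"), ("yed", "yes"), ("yef", "yes"),
    ("No", "no"), ("n", "no"), ("not", "no"),
    ("don't know", "null"), ("no data", "null"), ("waiting for lab", "null"),
]

# Hypothetical features: first character, and whether the value contains a space.
ATTRIBUTES = ["first_char", "has_space"]


def features(raw):
    """Map a raw cell value to a dict of discrete (hypothetical) features."""
    s = raw.strip().lower()
    return {"first_char": s[:1] or "<empty>", "has_space": " " in s}


def entropy(labels):
    """Shannon entropy, in bits, of a non-empty list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, attr):
    """Reduction in entropy obtained by splitting the rows on attr."""
    remainder = 0.0
    for value in {row[attr] for row in rows}:
        subset = [lab for row, lab in zip(rows, labels) if row[attr] == value]
        remainder += len(subset) / len(labels) * entropy(subset)
    return entropy(labels) - remainder


def id3(rows, labels, attributes):
    """Build an ID3 tree: either a leaf label or (attr, branches, majority label)."""
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or not attributes:
        return majority
    # Greedily pick the attribute with the highest information gain.
    attr = max(attributes, key=lambda a: information_gain(rows, labels, a))
    rest = [a for a in attributes if a != attr]
    branches = {}
    for value in {row[attr] for row in rows}:
        idx = [i for i, row in enumerate(rows) if row[attr] == value]
        branches[value] = id3([rows[i] for i in idx], [labels[i] for i in idx], rest)
    return (attr, branches, majority)


def predict(tree, row):
    """Walk the tree; a value never seen in training falls back to the node's majority label."""
    while isinstance(tree, tuple):
        attr, branches, majority = tree
        if row[attr] not in branches:
            return majority
        tree = branches[row[attr]]
    return tree


tree = id3([features(raw) for raw, _ in TRAIN], [lab for _, lab in TRAIN], ATTRIBUTES)

for raw in ["YES", "nope", "no result", "pending"]:
    print(f"{raw!r} -> {predict(tree, features(raw))}")
```

On these eleven examples the tree splits first on the first character and then, for values starting with `n`, on whether a space is present, so `no result` maps to null while `nope` maps to no. A value such as `pending`, whose first character never occurred in training, simply falls back to the majority training label (`yes`): a small-scale illustration of why unseen entries are harder to transform than existing ones.
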

Experiment
Data:
• Training dataset with 100 instances
• Test dataset with 100 instances, containing 17 values that differ from those in the training dataset
Tool: Weka

Experiment
• Experiment 1: training dataset
• Experiment 2: training dataset and test dataset

Experiment 1

| Name of tree | Correctly Classified Instances (%) | Testing (%) | Root mean squared error |
|---|---|---|---|
| BFTree | 89 | 99 | 0.0588 |
| DecisionStump | 47 | 55 | 0.422 |
| FT | 87 | 98 | 0.1698 |
| J48 | 82 | 98 | 0.0976 |
| J48graft | 82 | 98 | 0.0976 |
| LADTree | 81 | 90 | 0.2317 |
| LMT | 84 | 91 | 0.2344 |
| NBTree | 80 | 98 | 0.2326 |
| RandomForest | 83 | 100 | 0.0781 |
| RandomTree | 83 | 100 | 0.0447 |
| REPTree | 82 | 98 | 0.0985 |
| SimpleCart | 89 | 96 | 0.1511 |

Experiment 2

| Name of tree | Correctly Classified Instances (%) | Testing (%) | Root mean squared error |
|---|---|---|---|
| BFTree | 89 | 88 | 0.2813 |
| DecisionStump | 47 | 49 | 0.4318 |
| FT | 87 | 90 | 0.2194 |
| J48 | 82 | 88 | 0.2098 |
| J48graft | 82 | 88 | 0.2098 |
| LADTree | 81 | 89 | 0.2494 |
| LMT | 84 | 89 | 0.234 |
| NBTree | 80 | 88 | 0.2569 |
| RandomForest | 83 | 88 | 0.2095 |
| RandomTree | 83 | 88 | 0.209 |
| REPTree | 82 | 88 | 0.2098 |
| SimpleCart | 89 | 87 | 0.2848 |

Result
The results show that the decision trees classify and predict existing entries well, but their predictions for unknown entries are not as good as expected.

Future work
• Find and correct incorrect predictions in the process
• Automate the transformation of unknown entries

Thank you!