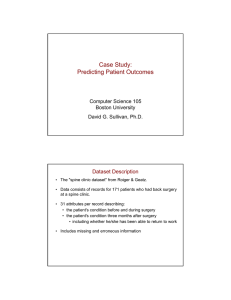



Case Study: Predicting Patient Outcomes
Computer Science 105
Boston University, Spring 2012
David G. Sullivan, Ph.D.

Dataset Description
• The "spine clinic dataset" from Roiger & Geatz.
• Data consists of records for 171 patients who had back surgery at a spine clinic.
• 31 attributes per record describing:
  • the patient's condition before and during surgery
  • the patient's condition three months after surgery
    • including whether he/she has been able to return to work
• Includes missing and erroneous information

Overview of the Data-Mining Task
• Goal: to develop insights into factors that influence patient outcomes, in particular whether the patient can return to work.
• What type of data mining should we perform?
• What will the data mining produce?

input attributes → model → return to work?

Review: Preparing the Data
• Possible steps include:
  • denormalization: several records for a given entity → single training example
  • discretization: numeric → nominal
  • nominal → numeric
  • force Weka to realize that a seemingly numeric attribute is really nominal
  • remove ID attributes and other problematic attributes
  • perform additional attribute selection

Preparing the Data (cont.)
• We begin by loading the dataset (a CSV file) into Weka Explorer.
• It's helpful to examine each attribute by highlighting its name in the Attribute portion of the Preprocess tab.
  • helps us to identify missing/anomalous values
  • can also help us to discover formatting issues that should be addressed

Preparing the Data (cont.)
• Things worth noting about the attributes in this dataset:
• Steps we may want to take:

Review: Dividing Up the Data
• To allow us to validate the model(s) we learn, we'll divide the examples into two files:
  • n% for training
  • (100 - n)% for testing
    • don't touch these until you've finalized your model(s)
• You can use Weka to split the dataset:
  1) filters/unsupervised/instance/Randomize
  2) save the shuffled examples in ARFF format
  3) filters/unsupervised/instance/RemoveRange
     • specify the instanceIndices parameter to remove (100 - n)%
  4) save the remaining examples
  5) load the full file of shuffled examples back into Weka
  6) use RemoveRange to remove the other n%
  7) save the remaining examples

Experimenting with Different Techniques
• Use Weka to try different techniques on the training data.
• For each technique, examine:
  • the resulting model
  • the validation results
    • for classification models: overall accuracy, confusion matrix
    • for numeric estimation models: correlation coefficient, errors
    • for association-rule models: support, confidence
• If the model is something you can interpret, make sure it seems reasonable.
• Try to improve the validation results by:
  • changing the algorithm used
  • changing the algorithm's parameters

Consider Starting with 1R
• If you're performing classification learning, it can be helpful to start with 1R.
  • to establish a baseline for accuracy
  • to see which individual attributes best predict the class

Cross Validation
• When validating classification/estimation models, Weka performs 10-fold cross validation by default:
  1) divides the training data into 10 subsets
  2) repeatedly does the following:
     a) holds out one of the 10 subsets
     b) builds a model using the other 9 subsets
     c) tests the model using the held-out subset
  3) reports results that average the 10 models together
• Note: the model reported in the output window is learned from all of the training examples.
  • the cross-validation results do not actually evaluate it
• To see how well the reported model does on the training data, select Use training set in the Test options box of the Classify tab.

Summary of Experiments
• Summary of experiments:

Using the Test Examples
• You can use Weka to apply the learned model(s) to the separate test examples.
  • wait until you've settled on your final models (the ones you intend to include in the final report)
• Then, in the Test options box of the Classify tab:
  • select Supplied test set
  • click the Set button to specify the file
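
The workflow in these slides (shuffle and split, 10-fold cross validation on the training examples, then one pass over the held-out test examples) can also be sketched outside Weka in plain Python. This is a minimal sketch under stated assumptions, not Weka's implementation. The records are made up and are not the spine clinic data, the attribute names `heavy_lifting`, `smoker`, and `return_to_work` are hypothetical, the 70/30 split is an arbitrary choice of n, the learner is a bare-bones 1R for nominal attributes only, and the folds are taken by position without stratification.

```python
import random
from collections import Counter, defaultdict

CLASS_ATTR = "return_to_work"  # hypothetical name for the class attribute


def shuffle_and_split(examples, train_percent, seed=1):
    """Shuffle a copy of the examples, then cut it into train and test lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_percent / 100)
    return shuffled[:cut], shuffled[cut:]


def learn_one_r(examples):
    """1R: keep the one attribute whose value -> majority-class rules
    make the fewest errors on the given examples."""
    default = Counter(ex[CLASS_ATTR] for ex in examples).most_common(1)[0][0]
    best = None
    for attr in examples[0]:
        if attr == CLASS_ATTR:
            continue
        counts = defaultdict(Counter)
        for ex in examples:
            counts[ex[attr]][ex[CLASS_ATTR]] += 1
        rules = {value: c.most_common(1)[0][0] for value, c in counts.items()}
        errors = sum(rules[ex[attr]] != ex[CLASS_ATTR] for ex in examples)
        if best is None or errors < best[0]:
            best = (errors, attr, rules)
    return best[1], best[2], default


def predict(model, example):
    attr, rules, default = model
    # Fall back to the overall majority class for values unseen in training.
    return rules.get(example[attr], default)


def accuracy(model, examples):
    return sum(predict(model, ex) == ex[CLASS_ATTR] for ex in examples) / len(examples)


def cross_validate(examples, folds=10):
    """Hold out each fold once, train on the other folds, and pool the
    held-out predictions into a single accuracy figure."""
    correct = 0
    for i in range(folds):
        held_out = examples[i::folds]
        rest = [ex for j, ex in enumerate(examples) if j % folds != i]
        model = learn_one_r(rest)
        correct += sum(predict(model, ex) == ex[CLASS_ATTR] for ex in held_out)
    return correct / len(examples)


# Made-up records, NOT the spine clinic data: the outcome mostly follows
# heavy_lifting, and every 10th record is flipped to act as noise.
examples = []
for i in range(100):
    heavy = "yes" if i % 2 == 0 else "no"
    outcome = "no" if heavy == "yes" else "yes"
    if i % 10 == 0:
        outcome = "yes"
    examples.append({"heavy_lifting": heavy,
                     "smoker": "yes" if i % 3 == 0 else "no",
                     CLASS_ATTR: outcome})

train, test = shuffle_and_split(examples, train_percent=70)
cv_accuracy = cross_validate(train)            # estimate from training data only
final_model = learn_one_r(train)               # learned from all training examples
train_accuracy = accuracy(final_model, train)  # analogue of testing on the training set
test_accuracy = accuracy(final_model, test)    # computed once, after the model is final

print("1R attribute:", final_model[0])
print("cross-validation accuracy:", cv_accuracy)
print("training-set accuracy:", train_accuracy)
print("test-set accuracy:", test_accuracy)
```

Note that `final_model` is built from all of the training examples, so `cv_accuracy` does not evaluate it directly; it estimates how models built this way tend to perform. The test examples are touched only in the last step.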