CRAWLING THE HIDDEN WEB



Authors: S. Raghavan & H. Garcia-Molina

Presenter: Nga Chung

OUTLINE

Introduction

Challenges

Approach

Experimental Results

Contributions

Pros and Cons

Related Work

INTRODUCTION

Hidden Web

Content stored in databases that can be retrieved only through user queries, such as medical research databases, flight schedules, product listings, and news archives

Social media blog posts and comments

So why should we care?

The estimated scale of the web (55 to 60 billion pages) does not include the deep web or pages behind security walls [2]

A 2001 estimate put the Hidden Web at 500 times the size of the publicly indexed web

Mike Bergman: “The long-term impact of Deep Web search had more to do with transforming business than with satisfying the whims of Web surfers.” [5]

CHALLENGES

From a search engine’s perspective

Locate the hidden databases

Identify which databases to search for a given user query

From a crawler’s perspective

Interact with a search form

Search interfaces can be form-based, faceted/guided navigation, or free text, and all are intended for human users [3]

Decide what keywords to put into the form fields

Filter the search results returned by queries

Define metrics to measure the crawler’s performance

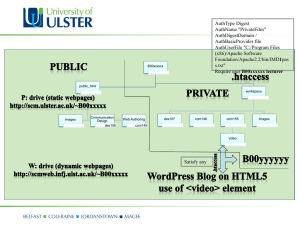

HIDDEN WEB EXPOSER ARCHITECTURE (HiWE)

[Figure: HiWE architecture. Components: URL List, Crawl Manager, Parser, Form Analyzer, Form Processor, Response Analyzer, LVS Manager, Label Value Set (LVS) table, Task Specific Database, Data Sources (…). Arrows labeled Form Submission, Response, and Feedback connect the crawler to the WWW.]

FORM ANALYSIS

How does a crawler interact with a search form?

Crawler builds an “Internal Form Representation”

F = ({E1, E2, …, En}, S, M), where

{E1, …, En} is the set of n form elements

S is the submission information, e.g. the submission URL

M is meta-information, e.g. the URL of the form page, the web site hosting the form, and links to the form

Label(Ei) is descriptive text describing the field, e.g. “Date”

Domain(Ei) is the set of possible values for the field, which can be finite (select box) or infinite (text box)


Label(E1) = Make

Domain(E1) = {Acura, Lexus…}

Label(E5) = Your ZIP

Domain(E5) = {s | s is a text string}
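A minimal Python sketch of the internal form representation, assuming a finite domain is stored as a set of strings and an infinite (free-text) domain as `None`. The class and field names (`FormElement`, `Form`, `submission`, `meta`) and the example URLs are hypothetical, not taken from HiWE.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class FormElement:
    """A form element E_i, described by Label(E_i) and Domain(E_i)."""
    label: Optional[str]               # None when no label could be extracted
    domain: Optional[Set[str]] = None  # finite set for a select box; None = infinite (text box)

    def is_finite(self) -> bool:
        return self.domain is not None


@dataclass
class Form:
    """Internal form representation F = ({E_1, ..., E_n}, S, M)."""
    elements: List[FormElement]                         # the n form elements
    submission: Dict[str, str]                          # S, e.g. {"url": ...}
    meta: Dict[str, str] = field(default_factory=dict)  # M, e.g. URL of the form page


# Two elements from the slide's example; the remaining car makes are elided there.
make = FormElement(label="Make", domain={"Acura", "Lexus"})
zip_code = FormElement(label="Your ZIP")  # free text: Domain = {s | s is a text string}
form = Form(
    elements=[make, zip_code],
    submission={"url": "https://example.com/search"},  # hypothetical URL
    meta={"form_page": "https://example.com/"},        # hypothetical URL
)
```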

TASK-SPECIFIC DATABASE

How does a crawler know what keywords to put into the form fields?

The crawler has a “task-specific database”

For instance, if the task is to search archives pertaining to the automobile industry, the database will contain lists of all car makes and models.

The database has a Label Value Set (LVS) table

Each row contains

L – a label, e.g. “Car Make”

V = {v1, …, vn} – a graded set of values, e.g. {‘Toyota’, ‘Honda’, ‘Mercedes-Benz’, …}

A membership function Mv assigns a weight to each member of the set V
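One way to hold an LVS row in Python is a label plus a dict from each value to its weight, so the dict itself plays the role of the membership function Mv. This is a sketch under the assumption that weights lie in [0, 1] and that non-members get weight 0; the `LVSEntry` name and the weights below are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LVSEntry:
    """One LVS row (L, V): a label plus a graded set of values.

    The graded set is a dict from each value v to its weight Mv(v), assumed to lie in [0, 1].
    """
    label: str
    values: Dict[str, float] = field(default_factory=dict)

    def membership(self, v: str) -> float:
        """Mv(v): the weight of v, or 0.0 if v is not in V."""
        return self.values.get(v, 0.0)


# Hypothetical table; the weights are invented for illustration only.
lvs_table: List[LVSEntry] = [
    LVSEntry("Car Make", {"Toyota": 1.0, "Honda": 1.0, "Mercedes-Benz": 0.8}),
    LVSEntry("Year", {"2009": 1.0, "2010": 1.0}),
]
```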


The LVS table can be populated through

Explicit initialization by human intervention

Built-in entries for commonly used categories, e.g. dates

Querying external data sources, e.g. Open Directory Project categories such as Regional: North America: United States

The crawler’s encounters with forms that have finite-domain fields


Computing the weights Mv(vi)

Case 1: Precomputed

Case 2: Computed by the respective data source wrapper

Case 3: Computed from crawling experience, as follows, for each finite-domain form element E:

Extract Label(E)

If a label is extracted, look it up in the LVS table. If an entry (L, V) is found, replace it with (L, V ∪ Domain(E)); if not, add a new entry to the LVS table

If no label is extracted, find the entry that most closely resembles Domain(E) and add Domain(E) to its value set
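The Case 3 flow can be sketched in Python. Several choices here are assumptions not stated in the slides: LVS rows are `(label, {value: weight})` pairs, a label counts as "found" when it matches an existing row case-insensitively, "closely resembles" is measured by Jaccard overlap of value sets, and newly learned values get a placeholder `DEFAULT_WEIGHT` because the slides do not say how those weights are set.

```python
from typing import Dict, List, Optional, Set, Tuple

LVSRow = Tuple[str, Dict[str, float]]  # (L, V) with V mapping value -> weight

DEFAULT_WEIGHT = 0.5  # hypothetical weight for values learned from a form


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two value sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def learn_from_element(table: List[LVSRow], label: Optional[str], domain: Set[str]) -> None:
    """Update the LVS table after seeing a finite-domain element E.

    label is the extracted Label(E) (None if extraction failed); domain is Domain(E).
    """
    def merge(values: Dict[str, float]) -> None:
        # V := V ∪ Domain(E); existing weights are kept, new values get DEFAULT_WEIGHT
        for v in domain:
            values.setdefault(v, DEFAULT_WEIGHT)

    if label is not None:
        for row_label, values in table:
            if row_label.lower() == label.lower():  # found: (L, V) becomes (L, V ∪ Domain(E))
                merge(values)
                return
        table.append((label, {v: DEFAULT_WEIGHT for v in domain}))  # not found: new entry
        return

    # No label extracted: add Domain(E) to the row whose value set resembles it most.
    if table:
        _, best = max(table, key=lambda row: jaccard(set(row[1]), domain))
        if jaccard(set(best), domain) > 0:  # skip if nothing overlaps at all
            merge(best)
```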

MATCHING FUNCTION

A “matching function” maps values from the database to form fields

Example: E1 = Car Make is matched with v1 = Toyota, and E2 = Car Model with v2 = Prius

Step 1: Label matching

Normalize the form label and use a string matching algorithm to compute the minimum edit distance between the form label and every LVS label
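A sketch of Step 1 in Python. The normalization rules, the use of plain Levenshtein distance, and the conversion of distance into a similarity score (1 minus distance over the longer length) are assumptions; the default threshold of 0.75 borrows the experiment's label matching threshold, but the slides do not say how that threshold is defined.

```python
import re
from typing import Iterable, Optional


def normalize(label: str) -> str:
    """Lower-case, replace punctuation with spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9 ]", " ", label.lower()).split())


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance via dynamic programming, one row at a time."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution (free if equal)
        prev = cur
    return prev[-1]


def match_label(form_label: str, lvs_labels: Iterable[str],
                threshold: float = 0.75) -> Optional[str]:
    """Return the LVS label closest to form_label, or None if none is similar enough."""
    target = normalize(form_label)
    best, best_sim = None, 0.0
    for label in lvs_labels:
        cand = normalize(label)
        longest = max(len(target), len(cand)) or 1
        sim = 1 - edit_distance(target, cand) / longest
        if sim > best_sim:
            best, best_sim = label, sim
    return best if best_sim >= threshold else None
```

For instance, `match_label("Car Makes", ["Car Make", "Year"])` returns `"Car Make"` (one edit over nine characters), while a label like `"Zip code"` matches nothing in that list.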


Step 2: Value assignment

Take all possible combinations of value assignments, rank them, and choose the best set to use for form submission

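There are three ranking functions for a value assignment [E1 ← v1, …, En ← vn]:

Fuzzy conjunction

$$\rho_{\text{fuz}}([E_1 \leftarrow v_1, \ldots, E_n \leftarrow v_n]) = \min_{i = 1, \ldots, n} M_{V_i}(v_i)$$

Average

$$\rho_{\text{avg}}([E_1 \leftarrow v_1, \ldots, E_n \leftarrow v_n]) = \frac{1}{n} \sum_{i=1}^{n} M_{V_i}(v_i)$$

Probabilistic

$$\rho_{\text{prob}}([E_1 \leftarrow v_1, \ldots, E_n \leftarrow v_n]) = 1 - \prod_{i=1}^{n} \bigl(1 - M_{V_i}(v_i)\bigr)$$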

Example: a form with two fields, car make and year

Jaguar, 2009, where Mv1(Jaguar) = 0.5 and Mv2(2009) = 1

ρfuz = 0.5

ρavg = ½ (0.5 + 1) = 0.75

ρprob = 1 – [(1 – 0.5) * (1 – 1)] = 1

Toyota, 2010, where Mv1(Toyota) = 1 and Mv2(2010) = 1

ρfuz = 1

ρavg = ½ (1 + 1) = 1

ρprob = 1 – [(1 – 1) * (1 – 1)] = 1
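The three ranking functions and the exhaustive enumeration of Step 2 fit in a short Python sketch. `rank_assignments` and its `min_rank` cut-off (defaulting to the experiment's minimum acceptable rank of 0.6) are illustrative names, not HiWE's actual code; the first print reproduces the Jaguar, 2009 numbers above.

```python
import math
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple

Ranker = Callable[[Sequence[float]], float]


def rho_fuz(weights: Sequence[float]) -> float:
    """Fuzzy conjunction: min_i Mvi(vi)."""
    return min(weights)


def rho_avg(weights: Sequence[float]) -> float:
    """Average: (1/n) * sum_i Mvi(vi)."""
    return sum(weights) / len(weights)


def rho_prob(weights: Sequence[float]) -> float:
    """Probabilistic: 1 - prod_i (1 - Mvi(vi))."""
    return 1 - math.prod(1 - w for w in weights)


def rank_assignments(candidates: List[Dict[str, float]], rho: Ranker,
                     min_rank: float = 0.6) -> List[Tuple[float, Tuple[str, ...]]]:
    """Rank every assignment [E1 <- v1, ..., En <- vn], best first.

    candidates[i] maps each candidate value for E_i to its weight Mvi(v).
    Assignments ranked below min_rank are dropped.
    """
    ranked = []
    for combo in product(*(c.items() for c in candidates)):
        values = tuple(v for v, _ in combo)
        score = rho([w for _, w in combo])
        if score >= min_rank:
            ranked.append((score, values))
    ranked.sort(key=lambda item: item[0], reverse=True)  # stable: ties keep enumeration order
    return ranked


# The slide's example: car make and year.
jaguar_2009 = [0.5, 1.0]
print(rho_fuz(jaguar_2009), rho_avg(jaguar_2009), rho_prob(jaguar_2009))  # 0.5 0.75 1.0

candidates = [{"Jaguar": 0.5, "Toyota": 1.0}, {"2009": 1.0, "2010": 1.0}]
print(rank_assignments(candidates, rho_fuz))
```

The number of combinations is the product of the candidate-set sizes, so exhaustive enumeration is only practical for small forms or short candidate lists. With ρfuz and a cut-off of 0.6, both Jaguar assignments are dropped; with ρavg they survive at 0.75.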

LAYOUT-BASED INFORMATION EXTRACTION (LITE)

Label Extraction Method

Lay out and prune the form page using a custom layout engine

Identify the pieces of text (candidates) physically closest to the form element

Rank the candidates based on position, font size, etc., and choose the highest-ranked candidate as the label

Results

| Method | Accuracy |
|---|---|
| LITE | 93% |
| Textual Analysis | 72% |
| Common Form Layout | 83% |
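A toy version of LITE's candidate-ranking step, assuming a layout engine has already produced a bounding box and font size for every text piece and form element (the pruning step is omitted). The `Box` type, the scoring weights, the left-of/above bonus, and the top-`k` cut are hypothetical choices for illustration, not LITE's published ranking.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Box:
    """A rendered text piece or form element: top-left corner (x, y) and size, in pixels."""
    text: str
    x: float
    y: float
    width: float
    height: float
    font_size: float = 12.0


def gap(a: Box, b: Box) -> float:
    """Euclidean gap between two rectangles (0 if they overlap)."""
    dx = max(b.x - (a.x + a.width), a.x - (b.x + b.width), 0)
    dy = max(b.y - (a.y + a.height), a.y - (b.y + b.height), 0)
    return math.hypot(dx, dy)


def pick_label(element: Box, texts: List[Box], k: int = 3) -> Optional[str]:
    """Keep the k texts physically closest to the element, rank them, return the best."""
    candidates = sorted(texts, key=lambda t: gap(t, element))[:k]
    if not candidates:
        return None

    def score(t: Box) -> float:
        s = -gap(t, element)  # closer is better
        if t.x + t.width <= element.x or t.y + t.height <= element.y:
            s += 20           # hypothetical bonus: text ends left of or above the field
        s += t.font_size      # hypothetical: larger fonts count in a candidate's favor
        return s

    return max(candidates, key=score).text
```

For a text box at (100, 50), a "Make:" caption 10 px to its left outranks a larger heading 26 px above it under these weights.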

RESPONSE ANALYSIS

How does the crawler determine whether a response page contains results or an error message?

Identify the significant portion of the response page by removing the header, footer, etc., and finding the content in the middle of the page

Check whether that content matches predefined error messages, e.g. “No results” or “No matches”

Store a hash of the significant portion; if a hash occurs very often, assume it is the hash of an error page
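Both heuristics can be combined in a short Python sketch. Stripping `<header>`, `<footer>` and `<nav>` blocks with regular expressions is a crude stand-in for finding the significant portion of the page, and the `frequent` count at which a repeated hash is treated as an error page is a hypothetical parameter; the class name is illustrative.

```python
import hashlib
import re
from collections import Counter

ERROR_PHRASES = ("no results", "no matches")  # predefined error messages from the slide


class ResponseAnalyzer:
    def __init__(self, frequent: int = 5):
        self.frequent = frequent  # hypothetical: hash count at which a page counts as an error page
        self.seen = Counter()     # how often each significant-portion hash has occurred

    @staticmethod
    def significant_portion(html: str) -> str:
        """Drop header/footer/nav blocks, strip tags, and normalize whitespace and case."""
        html = re.sub(r"(?is)<(header|footer|nav)\b.*?</\1>", " ", html)
        text = re.sub(r"(?s)<[^>]+>", " ", html)
        return " ".join(text.split()).lower()

    def is_error_page(self, html: str) -> bool:
        core = self.significant_portion(html)
        digest = hashlib.sha1(core.encode("utf-8")).hexdigest()
        self.seen[digest] += 1
        if any(p in core for p in ERROR_PHRASES):
            return True
        return self.seen[digest] >= self.frequent
```

A page whose middle content is identical across many different queries (for example, a generic "please refine your search" notice) is eventually flagged even though it contains none of the predefined phrases.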

METRICS

How can we measure the efficiency of the hidden web crawler?

Define submission efficiency (SE)

Ntotal = total number of forms submitted

Nsuccess = total number of submissions that resulted in a response page containing search results

Nvalid = number of semantically correct submissions (e.g. entering “Orange” into a form element labeled “Vegetable” is semantically incorrect)

$$SE_{\text{strict}} = \frac{N_{\text{success}}}{N_{\text{total}}}$$

$$SE_{\text{lenient}} = \frac{N_{\text{valid}}}{N_{\text{total}}}$$
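Both metrics are simple ratios; this Python sketch adds a guard against an empty run (an assumption, since the slides don't discuss Ntotal = 0). The example uses the ρfuz run reported in the experiment, 3214 forms submitted with 2853 successes.

```python
def _ratio(count: int, n_total: int) -> float:
    if n_total <= 0:
        raise ValueError("Ntotal must be positive")
    return count / n_total


def se_strict(n_success: int, n_total: int) -> float:
    """SE_strict = Nsuccess / Ntotal: share of submissions that returned search results."""
    return _ratio(n_success, n_total)


def se_lenient(n_valid: int, n_total: int) -> float:
    """SE_lenient = Nvalid / Ntotal: share of submissions that were semantically correct."""
    return _ratio(n_valid, n_total)


print(f"{se_strict(2853, 3214):.1%}")  # 88.8%
```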

EXPERIMENT

Task: a market analyst wants to build an archive of information about the semiconductor industry over the past 10 years

The LVS table was populated from online sources such as the Semiconductor Research Corporation and Lycos Companies Online

| Parameter | Value |
|---|---|
| Number of sites visited | 50 |
| Number of forms encountered | 218 |
| Number of forms chosen for submission | 94 |
| Label matching threshold | 0.75 |
| Minimum form size | 3 |
| Value assignment ranking function | ρfuz |
| Minimum acceptable value assignment rank | 0.6 |

EXPERIMENTAL RESULTS – RANKING FUNCTION

The crawler was executed 3 times, once with each ranking function

Task 1 Performance with Different Ranking Functions (number of form submissions)

| Ranking function | Total (Ntotal) | Successes (Nsuccess) | Submission efficiency |
|---|---|---|---|
| ρfuz | 3214 | 2853 | 88.8% |
| ρavg | 3760 | 3126 | 83.1% |
| ρprob | 4316 | 2810 | 65.1% |

ρfuz and ρavg both achieve submission efficiency above 80%

ρfuz does better, but fewer forms are submitted than with ρavg

EXPERIMENTAL RESULTS – MINIMUM FORM SIZE

Effect of minimum form size: the crawler performs better on larger forms

Task 1 Performance with Different Minimum Form Size (number of form submissions)

| Minimum form size | Total (Ntotal) | Successes | Submission efficiency |
|---|---|---|---|
| 2 | 3735 | 2950 | 78.9% |
| 3 | 3214 | 2853 | 88.77% |
| 4 | 2800 | 2491 | 88.96% |
| 5 | 1560 | 1404 | 90% |

CONTRIBUTIONS

Introduces HiWE, one of the first publicly available techniques for crawling the hidden web

Introduces LITE, a technique that extracts form data by incorporating the physical layout of the HTML page; prior techniques were based on pattern recognition over the underlying HTML

PROS

Defines a clear performance metric for analyzing the crawler’s efficiency

Points out known limitations of the technique, on which future work can build

Directs readers to a technical report that provides a more detailed explanation of the HiWE implementation

CONS

Not an automatic approach; requires human intervention

Task-specific

Requires creation of an LVS table per task

The technique has many limitations

Can only retrieve search results from HTML-based forms

Cannot support forms that are driven by JavaScript events, e.g. onclick, onselect

No mention of whether results of forms submitted through HTTP POST were stored/indexed

RELATED WORK

USC ISI: extracting data from the Web (1999-2001) [7, 8]

Describe the relevant information on a web page with a formal grammar and automatically adapt to web page changes

Research at UCLA (2005) [4]

Adaptive approach: automatically generate queries by examining the results of previous queries

Google’s Deep-Web Crawler (2008) [1]

Selects only a small number of input combinations that provide good coverage of the content in the underlying database, and adds the resulting HTML pages to a search engine index

DeepPeep [6]

Tracks 45,000 forms across 7 domains and allows users to search for these forms

Q&A

REFERENCES

[1] J. Madhavan, D. Ko, Ł. Kot, V. Ganapathy, A. Rasmussen, & A. Halevy, “Google’s Deep-Web Crawl,” Proceedings of the VLDB Endowment, 2008. Available: http://www.cs.cornell.edu/~lucja/Publications/I03.pdf. [Accessed June 13, 2010]

[2] C. Mattmann, “Characterizing the Web,” Available: http://sunset.usc.edu/classes/cs572_2010/Characterizing_the_Web.ppt. [Accessed May 19, 2010]

[3] C. Mattmann, “Query Models,” Available: http://sunset.usc.edu/classes/cs572_2010/Query_Models.ppt. [Accessed June 10, 2010]

[4] A. Ntoulas, P. Zerfos, & J. Cho, “Downloading Textual Hidden Web Content by Keyword Queries,” Proceedings of the Joint Conference on Digital Libraries, June 2005. Available: http://oak.cs.ucla.edu/~cho/papers/ntoulas-hidden.pdf. [Accessed June 13, 2010]

[5] A. Wright, “Exploring a ‘Deep Web’ That Google Can’t Grasp,” The New York Times, February 22, 2009. Available: http://www.nytimes.com/2009/02/23/technology/internet/23search.html?_r=1&th&emc=th. [Accessed June 1, 2010]

[6] DeepPeep beta. Available: http://www.deeppeep.org/index.jsp

[7] C. A. Knoblock, K. Lerman, S. Minton, & I. Muslea, “Accurately and Reliably Extracting Data from the Web: A Machine Learning Approach,” IEEE Data Engineering Bulletin, 1999. Available: http://www.isi.edu/~muslea/PS/deb-2k.pdf. [Accessed June 28, 2010]

[8] C. A. Knoblock, S. Minton, & I. Muslea, “Hierarchical Wrapper Induction for Semistructured Information Sources,” Journal of Autonomous Agents and Multi-Agent Systems, 2001. Available: http://www.isi.edu/~muslea/PS/jaamas-2k.pdf. [Accessed June 28, 2010]