Document

advertisement

Fundamentals of Embedded

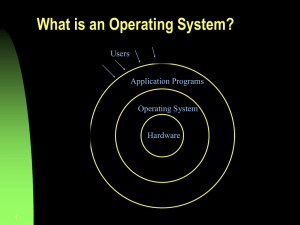

Operating Systems

Operating System

Exploits the hardware resources of one

or more processors

Provides a set of services to system

users

Manages memory (primary and

secondary) and I/O devices

Foreground/Background

Systems

Small, simple systems usually don't have an

OS

Instead, an application consists of an infinite

loop that calls modules (functions) to perform

various actions in the “Background”.

Interrupt Service Routines (ISRs) handle

asynchronous events in the “Foreground”

Foreground/Background System

“Foreground”

ISR

while

loop

ISR

ISR

Code

Execution

“Background”

time

Why Embedded Operating

System?

For complex applications with a

multitude of functionality

mechanisms are required to implement

each functionality independent of the

others, but still make them work cooperatively

Embedded systems need

Multitasking

Multitasking - The ability to execute

more than one task or program at the

same time

CPU switches from one program to

another so quickly that it gives the

appearance of executing all of the

programs at the same time.

Example Multitasking

Telephone Answering Machine

Recording a

phone call

Operating the user’s

control panel

User has picked up

receiver

Nature of Multitasking

Cooperative multitasking -each program can

control the CPU for as long as it needs it. It

allows other programs to use it at times when

it does not use the CPU

Preemptive multitasking - Operating system

parcels out CPU time slices to each program

Complexities of multitasking

Multi-rate tasks

Periodic tasks

Concurrent tasks

Synchronous and asynchronous tasks

EXAMPLE: Complex Timing

Requirements of a compression unit

Uncompressed

Data

Character

Compressor

Compression

Table

Compressed

Data

Bit Queue

Cooperating Tasks

Data may be received and sent at different

rates - example one byte may be compressed to 2 bits

while another may be compressed to 6 bits

Data should be stored in input and output

queues to be read in a specific order

Time for packaging and emitting output

characters should not be so high that input

characters are lost

Fundamentals

How a System works

Wake Up Call -On Power_ON

Execute BIOS - instructions kept in Flash

Memory - type of read-only memory

(ROM) examines system hardware

Power-on self test (POST) checks the CPU,

memory and basic input-output systems

(BIOS) for errors and stores the result in a

special memory location

Processing Sequence

Power Up

BSP/OS

Initialization

Decompression

/Bootloading

Application code begins

Hardware Init

Board Support Package

BSP is a component that provides

board/hardware-specific details to OS

for the OS to provide hardwareabstractions to the tasks that use its

services

BSP is specific to both the board and

the OS for which it is written

BSP startup code

Initializes processor

Sets various parameters required by

processor for

Memory initialization

Clock setup

Setting up various components like cache

BSP also contains drivers for peripherals

Kernel

Most frequently used portion of OS

Resides permanently in main memory

Runs in privileged mode

Responds to calls from processes and

interrupts from devices

Kernel’s responsibility

Managing Processes

Context switching: alternating between the

different processes or tasks

Scheduling: deciding which task/process to

run next

Various scheduling algorithms

Critical sections = providing adequate

memory-protection when multiple

tasks/processes run concurrently

Various solutions to dealing with critical sections

Process

A process is a unique sequential execution of

a program

Execution context of the program

All information the operating system needs to manage

the process

Process Characteristics

Period - Time between successive executions

Rate - Inverse of Period

In a multi-rate system each process executes at

its own rate

Process Control Block (PCB)

Process Control Block

OS structure which holds the pieces of information

associated with a process

Process state: new, ready, running, waited,

halted, etc.

Program counter: contents of the PC

CPU registers: contents of the CPU registers

CPU scheduling information: information on

priority and scheduling parameters

PCB

Memorymanagement information:

Pointers to page or segment tables

Accounting information: CPU and real

time used, time limits, etc.

I/O status information: which I/O

devices (if any) this process has

allocated to it, list of open files, etc.

Process states

Executing

scheduled

Needs

Preempted data

Obtains data,

allocated CPU

Received data

Ready

Waiting

Needs data

Multithreading

Operating system supports multiple

threads of execution within a single

process

An executing process is divided into

threads that run concurrently

Thread – a dispatchable unit of work

Multi-threaded Process Model

Threads in Embedded

Applications

Lightweight processes are ideal for

embedded computing systems since

these platforms typically run only a few

programs

Concurrency within programs better

managed using threads

Context Switch

The CPU’s replacement of the currently

running task with a new one is called a

“context switch”

Simply saves the old context and “restores”

the new one

Actions:

Current task is interrupted

Processor’s registers for that particular task are

saved in a task-specific table

Task is placed on the “ready” list to await the next

time-slice

Context switch

Actions (more)

Task control block stores memory usage,

priority level, etc.

New task’s registers and status are loaded

into the processor

New task starts to run

Involves changing the stack pointer, the PC and

the PSR (program status register)

ARM instructions: Saving the context

STMIA

r13, {r0-r14}^ ; save all user registers

in space pointed to

by r13 in ascending

order

MRS

r0, SPSR

STMDB

r13, {r0, r15}

; get status register and put

it in r0

; save status register

and PC into context

block

ARM Instructions: Loading a new

process

ADR r0, NEWPROC ;

get address for pointer

LDR r13, [r0];

Load next context block in r13

LDMDB r13, {r0, r14};

Get status register and PC

MSR SPSR, r0;

Set status register

LDMIA r13, {r0-r14}^;

Get the registers

MOVS pc, r14;

Restore status register

When A Context-Switch Occur?

Time-slicing

Context switches

Time-slice: period of time a task can run before a

context-switch can replace it

Driven by periodic hardware interrupts from the

system timer

During a clock interrupt, the kernel’s scheduler

can determine if another process should run and

perform a context-switch

However, this doesn’t mean that there is a

context-switch at every time-slice!

Pre-emption

Preemption

Currently running task can be halted and

switched out by a higher-priority active

task

No need to wait until the end of the timeslice

Context Switch Overhead

Frequency of context switch depends upon

application

Overhead for Processor context-switch is the

amount of time required for the CPU to save

the current task’s context and restore the

next task’s context

Overhead for System context-switch is the

amount of time from the point that the task

was ready for context-switching to when it

was actually swapped in

Context switch overhead

How long does a system context-switch

take?

System context-switch time is a measure of

responsiveness

Time-slicing: a time-slice period + processor

context-switch time

Preemption is mostly preferred because it is

more responsive (system context-switch =

processor context-switch)

Process Scheduling

What is the scheduler?

Part of the operating system that decides

which process/task to run next

Uses a scheduling algorithm that enforces

some kind of policy that is designed to

meet some criteria

Scheduling

Criteria may vary

CPU utilization keep the CPU as busy as possible

Throughput maximize the number of processes

completed per time unit

Turnaround time minimize a process’ latency (run

time), i.e., time between task submission and

termination

Response time minimize the wait time for

interactive processes

Real-time must meet specific deadlines to prevent

“bad things” from happening

Scheduling Policies

Firstcome, firstserved (FCFS)

The first task that arrives at the request queue is

executed first, the second task is executed second

and so on

FCFS can make the wait time for a process very

long

Shortest Job First: Schedule processes

according to their run-times

Generally difficult to know the run-time of a

process

Priority Scheduling

ShortestJobFirst is a special case of

priority scheduling

Priority scheduling assigns a priority to

each process. Those with higher

priorities are run first.

Real Time Scheduling

Characteristics of Real-Time

Systems

Event-driven, reactive.

High cost of failure.

Concurrency/multiprogramming.

Stand-alone/continuous operation.

Reliability/fault-tolerance requirements.

Predictable behavior.

Example Real-Time Applications

Many real-time systems are control systems.

Example 1: A simple one-sensor, one-actuator control

system.

reference

input r(t)

A/D

A/D

rk

yk

control-law uk

D/A

computation

y(t)

sensor

u(t)

plant

actuator

The system

being controlled

Simple Control System

Basic Operation

set timer to interrupt periodically with period T;

at each timer interrupt do

do analog-to-digital conversion to get y;

compute control output u;

output u and do digital-to-analog conversion;

end do

T is called the sampling period. T is a key design choice.

Typical range for T: seconds to milliseconds.

Multi-rate Control Systems

More complicated control systems have

multiple sensors and actuators and must

support control loops of different rates.

Example:Helicopter flight controller

Do the following in each 1/180-sec. cycle:

validate sensor data and select data source;

if failure, reconfigure the system

Helicopter Controller

Every sixth cycle do:

keyboard input and mode selection;

data normalization and coordinate

transformation;

tracking reference update

control laws of the outer pitch-control loop;

control laws of the outer roll-control loop;

control laws of the outer yaw- and

collective-control loop

Hierarchical Control Systems

commands sampling

operator-system

rates may

interface

be minutes

or even

state

air traffic

hours

estimator

control

responses

Air traffic-flight

control hierarchy.

from sensors

virtual plant

navigation

flight

management

state

estimator

virtual plant

state

estimator

air data

flight

control

sampling

rates may

be secs.

physical plant or msecs.

Signal-Processing Systems

Signal-processing systems transform

data from one form to another.

Examples:

Digital filtering.

Video and voice

compression/decompression.

Radar signal processing.

Response times range from a few

milliseconds to a few seconds.

Example: Radar System

radar

sampled

digitized

data

memory

track

records

control

status

DSP

DSP

DSP

data

processor

track

records

signal

processors

signal

processing

parameters

Other Real-Time

Real-time

databases.

Applications

Transactions must complete by deadlines.

Main dilemma: Transaction scheduling algorithms and

real-time scheduling algorithms often have conflicting

goals.

Data may be subject to absolute and relative

temporal consistency requirements.

Multimedia.

Want to process audio and video frames at steady rates.

TV video rate is 30 frames/sec. HDTV is 60 frames/sec.

Telephone audio is 16 Kbits/sec. CD audio is 128 Kbits/sec.

Other requirements: Lip synchronization, low jitter,

low end-to-end response times (if interactive).

Are All Systems Real-Time

Systems?

Question: Is a payroll processing

system a real-time system?

It has a time constraint: Print the pay

checks every two weeks.

Perhaps it is a real-time system in a

definitional sense, but it doesn’t pay us

to view it as such.

We are interested in systems for which

it is not a priori obvious how to meet

The “Window of Scarcity”

Resources may be categorized as:

Abundant: Virtually any system design

methodology can be used to realize the timing

requirements of the application.

Insufficient: The application is ahead of the

technology curve; no design methodology can

be used to realize the timing requirements of

the application.

Sufficient but scarce: It is possible to realize

the timing requirements of the application, but

Example: Interactive/Multimedia

Applications

Requirements

(performance, scale)

Interactive

Video

The interesting

real-time

applications

are here

sufficient

but scarce

resources

insufficient

resources

High-quality

Audio

Network

File Access

abundant

resources

Remote

Login

1980

1990

2000

Hardware resources in year X

Hard vs. Soft Real Time

Task: A sequential piece of code.

Job: Instance of a task.

Jobs require resources to execute.

Example resources: CPU, network, disk, critical

section.

We will simply call all hardware resources

“processors”.

Release time of a job: The time instant

the job becomes ready to execute.

Absolute Deadline of a job: The time

instant by which the job must complete

execution.

Relative deadline of a job: “Deadline

Example

0

1

2

3 4

5

6

7

8

9 10 11 12 13 14 15

= job release

= job deadline

• Job is released at time 3.

• Its (absolute) deadline is at time 10.

• Its relative deadline is 7.

• Its response time is 6.

Hard Real-Time Systems

A hard deadline must be met.

If any hard deadline is ever missed, then

the system is incorrect.

Requires a means for validating that

deadlines are met.

Hard real-time system: A real-time

system in which all deadlines are hard.

We mostly consider hard real-time

systems in this course.

Examples: Nuclear power plant

Soft Real-Time Systems

A soft deadline may occasionally be

missed.

Question: How to define “occasionally”?

Soft real-time system: A real-time

system in which some deadlines are

soft.

Defining Use

“Occasionally”

One Approach:

probabilistic

requirements.

For example, 99% of deadlines will be met.

Another Approach: Define a “usefulness”

function for1 each job:

0

relative

deadline

Reference Model

Each job Ji is characterized by its release time

ri, absolute deadline di, relative deadline Di,

and execution time ei.

Sometimes a range of release times is specified: [ri,

ri+]. This range is called release-time jitter.

Likewise, sometimes instead of ei, execution

time is specified to range over [ei, ei+].

Note: It can be difficult to get a precise estimate of

ei (more on this later).

Periodic, Sporadic, Aperiodic Tasks

Periodic task:

We associate a period pi with each task Ti.

pi is the interval between job releases.

Sporadic and Aperiodic tasks: Released

at arbitrary times.

Sporadic: Has a hard deadline.

Aperiodic: Has no deadline or a soft deadline.

Examples

A periodic task Ti with ri = 2, pi = 5, ei = 2, Di =5 executes like this:

0

1

2

3 4

5

6

7

8

9 10 11 12 13 14 15 16 17 18

= job release

= job deadline

Classification of Scheduling

Algorithms

All scheduling

algorithms

static scheduling

(or offline, or clock driven)

dynamic scheduling

(or online, or priority driven)

static-priority

scheduling

dynamic-priority

scheduling

Summary of Lecture So Far

Real-time Systems

characteristics and mis-conceptions

the “window of scarcity”

Example real-time systems

simple control systems

multi-rate control systems

hierarchical control systems

signal processing systems

Terminology

Scheduling algorithms

Real Time Systems and You

Embedded real time systems enable us

to:

manage the vast power generation and

distribution networks,

control industrial processes for chemicals,

fuel, medicine, and manufactured products,

control automobiles, ships, trains and

airplanes,

conduct video conferencing over the

Internet and interactive electronic

commerce, and

Real-Time Systems

Timing requirements

meeting deadlines

Periodic and aperiodic tasks

Shared resources

Interrupts

What’s Important

Real-Time

Time- in Real-Time

Sharing

Systems

Metrics for real-time

systems differ

from that for

Systems

time-sharing systems.

Capacity

High

Schedulabilit

throughput

y

Responsiv Fast average

eness

response

Overload

Fairness

Ensured

worst-case

response

Stability

Scheduling Policies

CPU scheduling policy: a rule to select task to

run next

cyclic executive

rate monotonic/deadline monotonic

earliest deadline first

least laxity first

Assume preemptive, priority scheduling of

tasks

analyze effects of non-preemption later

Rate Monotonic Scheduling

(RMS)

Priorities of periodic tasks are based on their

rates: highest rate gets highest priority.

Theoretical basis

optimal fixed scheduling policy (when deadlines

are at end of period)

analytic formulas to check schedulability

Must distinguish between scheduling and

analysis

rate monotonic scheduling forms the basis for rate

monotonic analysis

however, we consider later how to analyze

systems in which rate monotonic scheduling is not

Rate Monotonic Analysis (RMA)

Rate-monotonic analysis is a set of

mathematical techniques for analyzing sets of

real-time tasks.

Basic theory applies only to independent,

periodic tasks, but has been extended to

address

priority inversion

task interactions

aperiodic tasks

Focus is on RMA, not RMS

Why Are Deadlines Missed?

For a given task, consider

preemption: time waiting for higher priority

tasks

execution: time to do its own work

blocking: time delayed by lower priority tasks

The task is schedulable if the sum of its

preemption, execution, and blocking is less

than its deadline.

Focus: identify the biggest hits among the

three and reduce, as needed, to achieve

schedulability

Example of Priority Inversion

Collision check: {... P ( ) ... V ( ) ...}

Update location: {... P ( ) ... V ( ) ...}

Attempts to lock data

resource (blocked)

Collision

check

Refresh

screen

Update

location

B

Rate Monotonic Theory Experience

Supported by several standards

POSIX Real-time Extensions

Various real-time versions of Linux

Java (Real-Time Specification for Java and

Distributed Real-Time Specification for Java)

Real-Time CORBA

Real-Time UML

Ada 83 and Ada 95

Windows 95/98

…

Summary

Real-time goals are:

fast response,

guaranteed deadlines, and

stability in overload.

Any scheduling approach may be used, but all

real-time systems should be analyzed for

timing.

Rate monotonic analysis

based on rate monotonic scheduling theory

analytic formulas to determine schedulability

framework for reasoning about system timing

behavior

Plan for Lectures

Present basic theory for periodic task sets

Extend basic theory to include

context switch overhead

preperiod deadlines

interrupts

Consider task interactions:

priority inversion

synchronization protocols (time allowing)

Extend theory to aperiodic tasks:

sporadic servers (time allowing)

A

Sample

Problem

Emergency

100 msec

Periodics

t1

Servers

50 msec

20 msec

Data Server

2 msec

150 msec

t2

20 msec

5 msec

Deadline 6 msec

after arrival

40 msec

Comm Server

350 msec

t3

Aperiodics

Routine

40 msec

10 msec

10 msec

2 msec

100 msec

Desired response

20 msec average

t2’s deadline is 20 msec before the end of each period

Rate Monotonic Analysis

Introduction

Periodic tasks

Extending basic theory

Synchronization and priority inversion

Aperiodic servers

A Sample Problem - Periodics

Emergency

Periodics

Servers

Aperiodics

100 msec

t1

50 msec

20 msec

Data Server

2 msec

150 msec

t2

20 msec

Deadline 6 msec

after arrival

40 msec

Comm Server

350 msec

t3

5 msec

Routine

40 msec

10 msec

10 msec

2 msec

100 msec

Desired response

20 msec average

t2’s deadline is 20 msec before the end of each period

Example of Priority

Semantics-Based Priority Assignment

Assignment

1

UIP = 10 = 0.10

11

VIP: UVIP = 25 = 0.44

IP:

VIP:

0

25

misses deadline

IP:

0

10

20

30

Policy-Based Priority Assignment

IP:

0

10

20

30

VIP:

0

25

Schedulability: UB Test

Utilization bound (UB) test: a set of n

independent periodic tasks scheduled by the

rate monotonic algorithm will always meet its

deadlines, for all task phasings, if

U(1)

U(2)

0.724

U(3)

0.720

=C1.0

U(4)

= 0.728

Cn = 0.756

1/ U(7)

n

1

--- + .... + --- < U(n) = n(2 - 1)

Tn U(5) = 0.743

= T0.828

U(8) =

1

= 0.779

U(6) = 0.734

U(9) =

Concepts and Definitions Periodics

Ci

Periodic task

Ui =

Ti

initiated at fixed intervals

must finish before start of next cycle

Task’s CPU utilization:

Ci = worst-case compute time (execution time) for

task ti

Ti = period of task

ti

CPU utilization for a set of tasks

U = U1 + U2 +...+ Un

Sample Problem: Applying UB

C

T

U

Test

Task t

20

100

0.200

1

Task t2

40

150

0.267

Task t3

100

350

0.286

Total utilization is .200 + .267 + .286 = .753

< U(3) = .779

The periodic tasks in the sample problem are

schedulable according to the UB test

Timeline for Sample Problem

0

100

200

t1

t2

t3

Scheduling Points

300

400

Exercise: Applying the UB Test

Given:

Task

t

t2

t

C

1

2

1

T

4

6

10

U

a. What is the total utilization?

b. Is the task set schedulable?

c. Draw the timeline.

d. What is the total utilization if C3 = 2 ?

Solution: Applying the UB Test

a. What is the total utilization? .25 + .34

+ .10 =0 .69 5

20

10

15

Task

t task set schedulable? Yes: .69

b. Is

the

< U(3) = .779

Task t2

c. Draw the timeline.

Task t

d. What is the total utilization if C3 = 2 ?

.25 + .34 + .20 = .79 > U(3)

Toward a More Precise Test

UB test has three possible outcomes:

0 < U < U(n)

Success

U(n) < U < 1.00

Inconclusive

1.00 < U Overload

UB test is conservative.

A more precise test can be applied.

Theorem: The worst-case phasing of a task

occurs when it arrives simultaneously with all its

RT Test

higherSchedulability:

priority tasks.

Theorem: for a set of independent, periodic tasks,

if each task meets its first deadline, with worsti 1 the deadline will always

i

case task phasing,

be

an

where a 0 = C j

met. a n+1 = C i + T C j

j = 1

j = 1

j

Response time (RT) or Completion Time test:

let an = response time of task i. an of task I

may be computed by the following iterative

formula:

• This

test must be repeated for every task ti if required

• i.e. the value of i will change depending upon the task you are looking at

• Stop test once current iteration yields a value of an+1 beyond the deadline (else,

you may never terminate).

• The ‘square bracketish’ thingies represent the ‘ceiling’ function, NOT brackets

Example: Applying RT Test -1

Taking the sample problem, we increase the

compute time of t1 from 20 to 40; is the task

C

T

U

set still schedulable?

40

0.4

Task t:

Task t2:

Task t:

20

40

100

100

150

350

0.200

0.267

0.286

Utilization of first two tasks: 0.667 < U(2)

= 0.828

first two tasks are schedulable by UB test

Utilization of all three tasks: 0.953 > U(3)

= 0.779

Example: Applying RT Test -2

3

Use RT

test to determine if t3 meets its

a = C = C + C + C = 40 + 40 + 100 = 180

j

1 i 2= 3

3

first0 deadline:

j=1

i 1

a1 = C i +

j = 1

a0

Tj

2

Cj = C3 +

j = 1

a0

Tj

Cj

= 100 + 180 ( 40 ) + 180 ( 40 ) = 100 + 80 + 80 = 260

100

150

Example: Applying the RT Test

2 a1

260 (40) + 260 (40) = 00

-3

C = 100

a =C +

+

j

2

3

j = 1 Tj

100

150

2 a2

a =C +

C = 100 + 300 (40) + 300 (40) = 00

3

3

j

T

100

150

j =1 j

a3 = a2 = 300 Done!

Task t3 is schedulable using RT test

a 3 = 300 < T = 350

0

t1

100

200

Timeline for Example

300

t2

t3

t 3 completes its work at t = 300

Exercise: Applying RT Test

Task t1: C1 = 1

Task t2: C2 = 2

Task t3: C3 = 2

T1 = 4

T2 = 6

T3 = 10

a) Apply the UB test

b) Draw timeline

c) Apply RT test

a) UBSolution:

test

Applying RT Test

t and t2 OK -- no change from previous exercise

.25 + .34 + .20 = .79 > .779 ==> Test inconclusive for t

b) RT test and timeline

0

5

10

15

Task t

Task t2

Task t

All work completed at t = 6

20

Solution: Applying RT Test

c) RT test

(cont.)

3

a

0

=

Cj =

C +C +C

1

2

3

= 1 +2 +2

= 5

j=1

2

a 1 = C3 +

j = 1

2

a 2 = C3 +

j = 1

a0

Tj

a1

Tj

Cj = 2 +

5

Cj = 2 +

6

1 +

2 = 2+2+2 = 6

6

4

4

5

1 +

6

2 = 2+2+2 = 6

6

Done

Summary

UB test is simple but conservative.

RT test is more exact but also more

complicated.

To this point, UB and RT tests share the same

limitations:

all tasks run on a single processor

all tasks are periodic and noninteracting

deadlines are always at the end of the period

there are no interrupts

Rate-monotonic priorities are assigned

there is zero context switch overhead

Scheduler

Scheduler saves state of calling process

- copies procedure call state and

registers to memory

Determines next process to be executed

Preemptive Multitasking

Context switch caused by Interrupt

On Interrupt - CPU passes control to

Operating System (OS)

OS tasks

Interrupt Handler saves context of executing

process

OS schedules next process

Context of scheduled process is loaded

On returning from Interrupt - new process

starts executing from where it was

interrupted earlier

Job parameters for Embedded

applications

Temporal parameters - timing constraints

Functional parameters - Intrinsic

properties

Interconnection parameters dependencies on other jobs

Resource parameters - resource

requirements of memory, sequence numbers,

mutexes, database locks etc.

Characterization of an

application

Release time of tasks

Absolute deadline of each task

Relative deadline

Laxity

Execution time - may be varying [ei-, ei+]

Preemptivity - whether a task is preemptive

Resource requirements

Radar System

Example

to

illustrate

the

functioning of a complex system

as a subset of tasks and jobs and

their dependencies

A Radar Signal Processing and

Tracking application

I/O Subsystem - samples and digitizes the

echo signal from the radar and places the

sampled values in shared memory

Array of digital signal processors process the sampled values and produce data

Data processors - analyze data, interface

with display devices, generate commands to

control radar, select parameters to be used by

signal processors for next cycle of data

collection and analysis

Working principle for a

Radar system

If there is an object at distance x from

antenna, echo signal returns to antenna 2x/c

seconds after transmitted pulse

Echo signal collected should be stronger than

when there is no reflected signal

Frequency of reflected signal changes when

object is moving

Analysis of strength and frequency identifies

the position and velocities of objects

Keeping track of objects

Time taken by antenna to collect echo signal

from distance d is divided into interval ranges

of d/c

The echo signals collected in each interval is

placed in a buffer

Fourier transform of each segment performed

Characteristics of the transform determines

the object’s characteristics

A track record for each calculated position

and velocity of object is kept in memory

Calculating temporal and

resource constraints

Time for signal processing dominated

by Fourier Transform computation deterministic O(n log n)

Stored Track records are analyzed for

false returns since there can be noise

Tracking an object

Tracker assigns each measured value to

a trajectory if it is within a threshold

(track gate)

If the trajectory is an existing one, the

object’s position and velocity is updated

If the trajectory is new - the measured

value gives the position and velocity of

a new object

Data association

X1

X3

T1

X2

T2

X1 is assigned to T1 - defines T1

X3 initially assigned to both T1 and T2, then

deleted from T1, assigned to T2, defines T2

X2 initiates new trajectory

Types

of

real

time

tasks

Jittered - When actual release time of a task

is not known but the range of release time is

known [ri-, ri+]

Aperiodic jobs - Released at random time

instants

Sporadic jobs - Inter-release times of these

tasks can be arbitrarily small

Periodic - each task is executed repeatedly at

regular or semiregular time intervals

Periodic tasks

Let T1, T2, .., Tn be a set of tasks

Let Ji,1, Ji,2, ….. Ji,k be individual jobs in Ti

Phase Φi - Release time ri,1 of first job of Ti

H = LCM of Φi, i = 1,..,n

H is called the hyperperiod of the tasks

Period pi of task Ti is the minimum length of

all time intervals between release times of

consecutive jobs in Ti

Hard vs. Soft time constraints

Hard deadline - imposed on a job if

the results produced after the deadline

are disastrous

example - signaling a train

Soft deadline - when a few misses of

deadlines does not cause a disaster

example - transmission of movie

Laxity - specification of constraint type

Nature of tasks

Aperiodic tasks - usually do not have

hard deadlines

Sporadic tasks - have hard deadlines

Periodic tasks - usually have hard

deadlines

Responding to external events

Execute aperiodic or sporadic jobs

Example - pilot changes the autopilot

from cruise mode to stand-by mode system responds by reconfiguring

but continues to execute the control

tasks to fly the airplane

System modeling assumptions

If task is aperiodic or sporadic probability distribution of inter-arrival

times have to be assumed for system

analysis

If execution time is not deterministic maximum time may be assumed - but

may lead to under-utilization of CPU

and unacceptably large designs

Precedence constraints -

Task graph

A partial order relation (< ) may exist

among a set of jobs

(6,13]

(4,11]

(0,7]

(2,9]

(2,5]

(0,5]

(5,8]

(4,8]

(8,11]

(11,14]

(5,20]

(conditional block]

Temporal Dependencies

Temporal dependency between two jobs - if

the jobs are constrained to complete within a

certain amount of time relative to one

another

Temporal distance - difference in

completion times of two jobs

example - for lip synchronization time

between display of each frame and the

generation of the corresponding audio

segment must be no more than 160 msec.

AND/OR precedence

constraints

An AND job is one which has more

than one predecessor and can begin

execution after all its predecessors have

completed

An (m/n) OR job can begin execution as

soon as any m out of its n predecessors

have completed

Other dependencies

Data dependency - shared data

Pipeline - producer-consumer

relationship

Functional parameters

Preemptivity of jobs

Criticality of jobs

Optional jobs - if an optional job

completes late or is not executed,

system performance degrades but

remains satisfactory

Resource requirements for

data association

Data dependent

Memory requirements can be quite high

for multiple-hypothesis tracking

For n established trajectories and m

measured values

time complexity of gating - O(nm log m)

time complexity for tracking - O(nm log

nm)

Controlling the operations of a

radar

Controller may change radar operation mode

- from searching an object to tracking an

object

Controller may alter the signal processing

parameters - threshold

Responsiveness and iteration rate of the

feedback process increase as the total

response time of signal processing and

tracking decreases

Aim of Scheduling in

embedded systems

Satisfy real-time constraints for all

processes

Ensure effective resource utilization

Scheduling

Rate Monotonic or Earliest Deadline First

Number of priority levels supported - 32

minimum - many support between 128-256

FIFO or Round-Robin scheduling for equalpriority threads

Thread priorities may be changed at run-time

Some more Priority Inversion Control - priority inheritance

or Ceiling Protocols

Memory management - mapping virtual

addresses to physical addresses, no paging

Networking - type of networking supported

Real Time task scheduling

Tasks may have soft or hard deadlines

Tasks have priorities - may be changing

Tasks may be preemptible or nonpreemptible

Tasks may be periodic, sporadic or

aperiodic

Scheduling multiple tasks

A set of computations are schedulable on

one or more processors if there exist enough

processor cycles, that is enough time, to

execute all the computations

Each activity has associated temporal

parameters

task activation time / release time - t

deadline by which it is to be completed-

d

execution time - c

Feasibility and optimality of

schedules

A valid schedule is feasible if every job

completes by its deadline

A set of jobs is schedulable if a feasible

schedule exists

A scheduling algorithm is optimal if it can

generate a feasible schedule whenever such a

schedule exists

if an optimal algorithm fails to find a feasible

schedule for a set of tasks then they are not

schedulable

Quality of a schedule

Measured in terms of tardiness

Tardiness of a job measures how late it

completes with respect to its deadline

zero :

if completed before deadline

(time of completion - deadline) :

if completed later

Performance measure in terms

of lateness

Lateness - difference between completion

time and deadline

may be negative

Scheduling may try to minimize absolute

average lateness

Example - transmission of packets in a packet

switched network - each packet of a message

have to be buffered till all of them reach requires buffer space hence large arrival time

jitters will mean more buffer space

requirement - minimizing lateness minimizes

average buffer occupancy time

Response time

Response time = length of time from release

time of task to the instant when it completes

Maximum allowable response time of a job is

called its relative deadline

Most frequently used Performance

measure for soft real time tasks average response time

CPU utilization

The maximum number (N) of jobs in

each hyperperiod = ni=1 (H/pi)

ui - Utilization of task Ti

ui = ei / pi

total utilization of CPU =

ni=1 (ui)

Example

T1, T2, T3 - three periodic tasks

p1 = 3, p2 = 4, p3 = 10

e1 = 1, e2 = 1, e3 = 3

u1 = 0.33, u2 = 0.25, u3 = 0.3

total utilization = 0.88

CPU is utilized 88% of time

Mixed job scheduling

Soft real time jobs - minimize average

response time

Miss rate - percentage of jobs that are

completed late

Loss rate - Percentage of jobs discarded

Hard real time jobs - all to be

completed within deadline

Real time scheduling

approaches

Clock driven scheduling - scheduler runs

at regular spaced time instants

Priority based scheduling - algorithms

that never leave any resource idle

makes locally optimal decisions whenever

necessary

the CPU is given to the highest priority job that is

in the ready state

scheduling policy may decide the priority

Clock driven scheduling

First Come First Serve - strictly according

to system assigned fixed priority

Round Robin - A process is allocated a fixed

unit of time

Weighted Round Robin - Different time

slice allocated to different jobs

jobs have to be preemptive

assigns CPU to jobs from a FIFO ready queue

Problems with clock based

scheduling algorithms

Process characteristics like activation

time, compute time or deadlines are not

taken into consideration at execution

time

Hence feasible schedules may not be

produced even if they exist

Priority-driven scheduling with

process parameters

Priority calculated on the basis of

compute time or deadlines

Static - priority assigned before

execution starts

Dynamic - priority may change during

execution

Mixed - static and dynamic assignments

used simultaneously

Priorities of processes

Static or Fixed priority system - Each

process is assigned a priority before it starts

executing and the priority does not change

Dynamic priority - Priorities of systems

change during execution - priority may be

a function of process parameters like

compute time or slack time

Priority driven scheduling of

periodic tasks

Scheduling decisions are made immediately

upon job releases and completions

Context switch overhead is small

Unlimited priority levels

Fixed priority - same priority for all jobs in a

task

Dynamic priority - priority of task changes

with release and completion of jobs

Earliest Deadline First (EDF)

The ready process with the earliest future

deadline is assigned highest priority and

hence gets the CPU

When preemption is allowed and jobs do not

contend for resources, the EDF algorithm can

produce a feasible schedule of a set of jobs J

with arbitrary release times and deadlines on

a processor if and only if J has a feasible

schedule

Any feasible schedule of a set of jobs

J can be transformed into an EDF

schedule

Ji

Jk

I2

I1

dk d i

Jk

Jk

Jk

Jk

Ji

Ji

Latest Release Time (LRT)

scheduling algorithm

No advantage in completing jobs early if goal is

to meet deadlines only

Deadline is set to release time and Release

time is set to deadline

Start with latest release time and schedule jobs

backwards starting from latest deadline in

order

Guaranteed to find a feasible schedule for a

set of jobs J which do not contend for

resources, have arbitrary release times and

deadlines on a processor if and only if such a

schedule exists

J2,

2(5,8]

J1, 3 (0,6]

J3, 2(2,7]

Start scheduling backwards

to meet deadline but not earlier

J1

0

2

J2

J3

4

6

8

Least Slack Time (LST)

Slack time for a process at time t and

having deadline at time d = (d - t) c´ , where c´ is the remaining execution

time

The process with the least slack time is

given the highest priority

Overheads analysis of

scheduling methods

EDF - produces optimal schedule for a

uni-processor system if one exists

LST - requires execution time

scheduling

Rate Monotonic Scheduling

Assigns fixed priorities in reverse order of

period length

Task - T(periodicity, execution_time)

shorter the period higher the priority

T1 (4,1), T2(5,2), T3(20,5)

R(T1) R(T2)

C(T1) C(T2)

Optimality of Rate monotonic

algorithm

Rate monotonic is an optimal priority

assignment method

if a schedule that meets all deadlines

exists with fixed priorities then RM

will produce a feasible schedule

Deadline-monotonic

scheduling

Priorities assigned according to relative

deadlines

shorter the relative deadline higher the

priority

Let task be represented as Ti (ri, p i, ei, di)

T1(50, 50, 25, 100), T2(0, 62.5, 10, 20),

T3(0,125,25,50)

T2

10

T3

T1

T2

35 50 62.5 72.5

T1

T2

87.5 100 125 135

T3

140

Rate Monotonic Scheduling of

same set of tasks

Let task be represented as Ti (ri, p i, ei, di)

T1(50, 50, 25, 100), T2(0, 62.5, 10, 20),

T3(0,125,25,50)

0 10 35 50 62.5 75 85.5 100

MISSED DEADLINE

125 135 150

175 185

MISSED DEADLINE

Comparison

If DM fails to find a schedule then RM

will definitely fail

DM may be able to produce a feasible

schedule even if RM fails

Table-driven scheduling

Order of process execution is determined at

design time - periodic process scheduling

Processes can also be merged into a

sequence to minimize context switching

overhead

Processes communicating with static data

flow triggered by the same event can be

clustered and scheduled statically

This allows for local data-flow optimization,

including pipelining and buffering.

Event driven Reactive Systems

for unpredictable workloads

can accommodate dynamic variations in

user demands and resource availability

Off-line schedules to handle

aperiodic tasks

Let there be n periodic tasks in the

system

Assume that aperiodic jobs are released

at unexpected time instants and join

aperiodic job queue

Timer sends kth interrupt at time tk

Scheduler schedules tasks from

schedule at timer interrupts

Clock driven scheduler - Cyclic

Executive

Do forever

accept timer interrupt at time instant tk

if current job is not completed take

appropriate action

if a job in current block Lk is not released take

appropriate action

wake up periodic server to execute jobs in

current block

sleep until periodic server completes

Cyclic executive contd.

While the aperiodic job queue is non-empty

wake up the job at head of queue

sleep until aperiodic job completes

remove aperiodic job form queue

endwhile

sleep until next clock interrupt

aperiodic jobs are given least priority bad average response time

Slack Stealing - to improve

average response time

Every periodic job slice must be scheduled in

a frame that ends no later than its deadline

Let the total amount of time allocated to all

slices in frame k be xk

Slack time available in frame k = f - xk

If aperiodic job queue is non-empty at the

beginning of time frame f, aperiodic jobs may

be scheduled for slack time without causing

any deadline miss

Scheduling sporadic jobs

Since sporadic jobs hard relative

deadlines, they should be completed or

rejected immediately if scheduler

cannot find a feasible schedule

When rejected appropriate remedial

actions can be taken in time

Example - Quality control

system using a robot arm

Camera detects defective part on conveyor

belt - robot arm is to remove it

Sporadic task to remove is initiated - have to

be completed before part moves beyond

reach of arm - deadline fixed as function of

conveyor belt speed

If removal cannot be accomplished on time raise alarm to move part manually

EDF scheduling of accepted

jobs

Acceptance Test - to check whether a new

sporadic job can be scheduled along with

existing periodic jobs

Scheduler maintains queue of accepted

sporadic jobs in non-decreasing order of

deadlines

Run Cyclic Executive algorithm to pick up

sporadic jobs from this queue to execute in

slack time

Setting OS parameters for real

time task execution

Set scheduler type

User may want to run task at kernel space

rather than user space - system may hang

Under LINUX - RTLINUX runs at kernel level preempts Linux processes from maintaining

control of system

RTLInux has limited capability - for many

facilities it passes on task to Linux

Sample tasks in VXWorks

Taskspawn(taskid, taskpriority,

taskpointer, other parameters)

Alternatively:

A function in user space can be invoked

by RT Linux interrupt or timer

This function is like a signal handler

though cannot make system calls

Some commercial versions like Lineo

make use of this

Improving the Linux behaviour

RTLinux holds all interrupts and makes

all calls on behalf of Linux

Monta Vista’s Embedded Linux - makes

kernel fully “preemptable” - kernel calls

can be interrupted - leads to system

hang and locks with injudicious

programming

Aspects of OS affecting

application behavior

Computing application time on

an OS

OS is deterministic - if the worst-case

execution time of each of its system calls is

calculable.

For RTOS - real-time behavior is published as

a datasheet providing the minimum, average,

and maximum number of clock cycles

required by each system call.

These numbers may be different for different

processors.

Interrupt Latency

Interrupt latency is the total length of

time from an interrupt signal arriving at

the processor to the start of the

associated interrupt service routine

(ISR).

Interrupt processing executing ISR

Processor must finish executing the

current instruction

Interrupt type must be recognized processor does it without slowing or

suspending the running task

CPU's context is saved and the ISR

associated with the interrupt is started.

Interrupt disabling

Interrupts are disabled within system

calls - the worst-case interrupt latency

increases by the maximum amount of

time that they are turned off.

A real-time project might require a

guaranteed interrupt response time as

short as 1s, while another may require

only 100s.

Designing a simple scheduler

void Scheduler(void)

{

int stop=0, newtick = 0;

while(!stop)

{

while(!newtick); // Wait for timer tick

newtick = 0;

thread1();

thread2();

...

threadn();

if(newtick) // overrun

OverrunHandler(); //Could make reset dependi

}

Preemptive kernels

kernel schedule is checked with a defined

period, each tick

it checks if there is a ready-to-run thread

which has a higher priority than the executing

thread - in that case, the scheduler performs

a context switch

Executing thread goes to ready state

Puts special demands on communication

between threads and handling common

resources

Resource Access Control

Mutually Exclusive resources

mutex

write-lock

connection sockets

printers

Resource allocated to a job on a nonpreemptive basis

Resource conflicts

Two jobs conflict with one another if

they require the same resource

Two jobs contend with one another if

one job requests for a resource already

held by another job

When a job does not get the requested

resource it is blocked - moved from the

ready queue

Resource access protocol

using Critical Sections

Critical section - a segment of a job

beginning at a lock and ending at a

matching unlock

Mutually exclusive Resources can

be used inside a non-preemptive

critical section (NPCS) only

Properties of NPCS

No priority inversion - a job is blocked if

another job is executing its critical

section

Mutual exclusion guaranteed

However, blocking occurs even when

there is no resource contention

Priority inversion

Priority(J1) > Priority(J2) > Priority(J3)

J1 and J3 currently executing

J1 requests for R - R held by J3

J3 blocks J1 - inversion of priority

J2 starts executing - does not need R preempts J3 and executes

J2 lengthens duration of priority

inversion

Priority Inheritance Protocol

At release time t a job starts with its assigned

priority (t)

When job Jk requests for R, if R is free it is

allocated to Jk else Jk is blocked

The job Jl that blocks Jk inherits the current

priority of Jk and executes with that priority

since the blocked process had the

highest priority, the blocking process

inherits it - overall execution time

should reduce

cannot prevent deadlocks

Priority Ceiling Protocol - when

maximum resource use is known

(R) - Priority ceiling of resource R is

the highest priority of all the jobs that

require R

^(t) - current ceiling of system is

equal to the highest priority ceiling of

resources in use at the time

At release time t a job starts with its assigned priority

(t)

When job Jk requests for R,

If R is not free Jk is blocked

If R is free

then if (t) > ^(t), R is allocated to Jk

else if Jk is the job holding the resources whose

priority ceiling is equal to^(t), R is allocated to Jk

else Jk is blocked

The blocking process inherits the highest priority of

the blocked process

Example

P(J0)>P(J1) > P(J2) > P(J3)

J2 books R2 - ^(t) = P(J2)

^(t) = P(J2)

J3 requests R1 - ^(t) = P(J2) > P(J3)

- J3 blocked

J1 requests R1 - ^(t) = P(J2) < P(J1)

- R1 allotted to J1

^(t) = P(J1)

Example continued

J2 requests R1 - J2 is blocked

J1 requests R2 - ^(t) = P(J1) R2 is

allocated to J1

J0 requests R1 - (t) > ^(t) but J0

does not hold any resource - so J0

blocked

J1 inherits P(J0)

Duration of blocking

When resource access of preemptive,

priority driven jobs are controlled by the

priority ceiling protocol, a job can be

blocked for at most one duration of

once critical section

Upper bound on blocking time can

be computed and checked for

generating feasible schedules

A case study

What really happened on Mars

Rover Pathfinder?

Mars Pathfinder mission

1997

Landed on Martian surface on 4th July

Gathered and transmitted voluminous data

back to Earth, including the panoramic

pictures that were a bit hit on the Web

But a few days into the mission of gathering

meteorological data, Pathfinder started

experiencing total system resets, each

resulting in losses of data

Pathfinder software

Was executing on VXWorks

VxWorks implements preemptive

priority scheduling of threads

Tasks on the Pathfinder spacecraft were

executed as threads with priorities

Priorities were assigned reflecting the

relative urgency of these tasks

Information Bus

Pathfinder contained an "information

bus", which was a shared memory area

used for passing information between

different components of the spacecraft

Access to the bus was synchronized

with mutual exclusion locks (mutexes).

Tasks running on Pathfinder

A bus management task ran frequently with

high priority to move certain kinds of data in

and out of the information bus

The meteorological data gathering task ran as

an infrequent, low priority thread, and used

the information bus to publish its data

A communications task that ran with medium

priority

Priority inversion

While meteorological task had lock on

information bus and was working on it

An interrupt caused the bus management

thread to be scheduled

The high priority thread attempted to acquire

the same mutex in order to retrieve published

data - but would be blocked on the mutex,

waiting until the meteorological thread

finished

Things were fine!

Most of the time - meteorological

thread eventually released the bus and

things were fine!

But at times!

While the low priority meteorological

task blocked the high priority bus

management task, the medium priority

communications task interrupted and

started running!

This was a long running task and hence

delayed the bus management task

further

Watchdog timer!

After some time a watch dog timer

sensed that the high priority bus

management task has not run for quite

some time!

“something must have gone wrong

drastically! RESET SYSTEM!”

Debugging

Total system trace - history of execution

along with context switches, use of

synchronization objects and interrupts

Took weeks for engineers to reproduce

the scenario and identify the cause

Priority Inheritance had been

turned off!

To save time

Had it been on the low-priority

meteorological thread would have inherited

the priority of the high-priority bus

management thread that it blocked and

would have been scheduled with higher

priority

than

the

medium-priority

communications task. On finishing the bus

management task would have run first and

then the communications task!

http://catless.ncl.ac.uk/Risks/1

9.49.html

Analysis presented by

David Wilner,

Chief Technical Officer of Wind

River Systems.

Interactive Embedded System

Continuously interacts with its environment

under strict timing constraints - called the

external constraints

It is important for the application developer

to know how these external constraints

translate to time and resource budgets called the internal constraints, on the tasks of

the system.

Knowing these budgets

Reduces the complexity of the system 's

design and validation problem and

helps the designers have a

simultaneous control on the system's

functional as well as temporal

correctness..

Wheel Pulses

Read Speed

Accumulate

Pulses

Compute Total km

Filter Speed

Speedometer

LCD Display Driver

Compute Partial km

Resettable Trip

Odometer

Lifetime

Odometer

Example constraint specification

Task Graph

Embedded Flexible

Applications

Applications that are designed and

implemented to trade off at run-time

the quality of results (services) they

produce for the amounts of time and

resources they use to produce the

results

graceful degradation in result quality or in

timeliness

for voice transmission a poorer quality with

fewer encoded bits may be tolerable but

not transmission delay

Characterizing Flexible

Applications

A Flexible job has an optional component

which can be discarded when necessary in

order to reduce the job’s processor time and

resource demands by deadline

Firm deadlines (m, N) - If at least m jobs

among any consecutive N m jobs in the

task must be complete in time

N is the failure window of the task

Criteria of Optimality

Errors and Rewards

Static Quality metrics

Dynamic Failures and firm deadlines

QoS vs. timeliness

Firm quality - fixed resource demands degrade gracefully by relaxing their

timeliness

Firm deadlines - flexible resource

demands but hard deadlines - degrade

gracefully by relaxing the result quality

Multimedia data

communication

Voice, video and data transfer over ATM

networks

ATM's ABR (available bit rate) service

provides minimum rate guarantees

Guaranteeing QoS

Bandwidth

Delay

Loss

have to be guaranteed in a certain range

Solution: Rate allocation switch algorithm

to ensure general form of fairness to

enhance the bandwidth capability.

QoS-aware middleware

Two major types of QoS-aware middleware

(1) Reservation-based Systems

(2) Adaptation-based Systems

Both of them require application-specific

Quality of Service (QoS) specifications

QoS-aware middleware

Both of them require application-specific

Quality of Service (QoS) specifications

Configuration graphs

Resource requirements

Mobility rendering

Adaptation rules to be provided by application

developers or users.

Executing Flexible tasks

Sieve Method

Milestone method

Multiple Version method

Completion times are more critical than

absolute time of completion

Sieve method

Discardable optional job is a Sieve

Example - MPEG video transmission

transmission of I-frames mandatory

transmission of B- and P-frames optional

MPEG Compression idea

In video often one frame differs very little

from the previous frame

In two sequential frames there's not that

much difference between a single item

Certain frames are encoded in terms of

changes applied to surrounding frames

Amount of information required to describe

changes is much less than the amount of

information it requires to describe the whole

frame again.

Frame 3

Frame 1

Frame 2 - predicted

I, P, and B frames

I frames are coded spatially only

P frames are forward predicted based

on previous I and P frames

B frames are coded based on a forward

prediction from a previous I or P frame,

as well as a backward prediction from a

succeeding I or P frame

Encoding of B frames

First B frame is predicted from the first

I frame and first P frame

Second B frame is predicted from the

second and third P frames

Third B frame is predicted from the

third P frame and the first I frame of

the next group of pictures.

Some information

not there

More information

MPEG video transmission

All I frames to be sent

All P frames to be sent if possible

B frames to be sent optionally

Quality of video produced on receipt

depends on what has been transmitted

Milestone method for

scheduling Flexible

Applications

Monotone Job - A job whose result

converges monotonically to desired

quality with increasing running time

A monotone computation job is

implemented using an incremental

algorithm whose result converges

monotonically to the desired result with

increasing running time

Implementation

Programmer identifies optional parts of

program

During execution - intermediate results are

saved with quality indicator - tracking

error, least mean square error etc.

Runtime decisions to schedule processes are

taken on the basis of current state of results

Example

Layered encoding techniques in

transmission of video, images and

voices

Approximate query processing

Incremental information gathering

Multiple version method

Each flexible job has a primary version

and one or more alternative versions

Primary version produces precise result,

needs more resources and execution

time

Alternative methods has small execution

time, use fewer resources but generate

imprecise result

Multiple version method

scheduling

Scheduler chooses version according to

available resources

A set of jobs scheduled together for

optimal quality

Computational model

J=M+O

Release times and deadlines of M and O

are same as that of J

M is predecessor of O

execution time of J ej = em + eo

Valid schedule

Time given to J is em

J is complete when M is complete

Feasible schedule - one in which every

mandatory job is completed within

deadline

Optimal scheduling algorithms

All mandatory jobs completed on time

Fast convergence to good quality result

Since quality of successor jobs may

depend on quality of predecessor jobs,

maximize the result quality of each

flexible application

Error and rewards

Let maximum error be 1 - when

optional part is discarded completely

If x is the length of the optional job

completed then €(x) denotes the error

in the result produced by this job

€(0) = 1 and €(eo) = 0

Error €(x) = 1 - x/ eo

Quality metrics - Performance

measures

Total error for a set of tasks =

wti

€(xi)

wti is penalty for not completing task i

Minimize average error

A Case Study: Sunrayce car

control/monitoring system:

Data

Analyzer

collecting

Car

Sensor

s

GUI

Technical Details

A workstation, which

collects data from 70

sensors with total

sampling rate of 80Hz.

The needed response

times ranged from 200

to 300 ms

One RT task per sensor

executing with 0.0125

period on a 20Mhz i386based computer

running RT-Linux.

RT Tasks:

communica

ting with

sensors

Linux

processes:

analyzer,

GUI (Based

on QT

widget)

RT-Linux: Scheduling

Priority-based scheduler

Earliest Deadline First (EDF)

Rate Monotonic (RM)

Additional facilities through Linux kernel

module

RT-Linux problems

Problems

Unable to support soft real-time

No schedulability analysis

Unable to support complicated

synchronization protocols(?)

Solutions

Flexible Real Time Logic

Provide both HARD guarantee and

FLEXIBLE behavior at the same time

Approach:

Each task as a sequence of mandatory and

optional components

Separate executions of components in two

scheduling levels

FRTL extension to RTLinux

Mandatory components versus optional

components

Both hard real-time constraints and the

ability to dynamically adapt their responses

to changes in the environment (soft realtime).

Provides a complete schedulability test,

including kernel overhead.

FRTL: Software architecture

Shared Memory Space

Mandatory

1

Task

N

Task

2

Task 1

Mandatory 1

Mandatory M1

Version

1

Task

K

Task

2

Optional 1

Version 1

Version M1

Second-Level Scheduler

First-Level Scheduler

FRTL: Task Model

Tasks -> Components

Mandatory

Optional

Unique Version (UV)

Successive Versions (SV)

Alternative Versions (AV)

FRTL:Smart Car

Mandatory components:

Data collectors

Options components:

Data analysis, GUI

UV

SV

AV

Storage manager

Linux

Version

1

Task

K

Task

2

Optional 1

Version 1

RT

Task

s

Mandatory

1

Task

N

Task

2

Task 1

Other

processes

Version M1

Mandatory 1

Second-Level Scheduler

Mandatory M1

Linux kernel

First-Level Scheduler

Hardware

Design and Implementation

Two level Scheduler

For different kind of components

Strict hierarchy

Reclaimed spare capacity(why and how)

Difference from RT-Linux

Explicit division of optional and mandatory

t

t0

t1

t2

Detailed Design

First level scheduler

Modified scheduler from RT-Linux

Schedule optional components based on available

slack, interval

Second level scheduler

Non-real-time

Optional server

Regular Linux process

Mandatory

Optional

Optional

V

X

Mandatory

Second level scheduler

Customized

To decide on:

Next component to run

Version to be executed

Time to be executed

Tasks constrained by temporal

distance

Temporal distance between two jobs =

difference in their completion times

The distance constraint of task Ti is

Ci if

fi,1 - ri Ci

fi,k+1 - fi,k Ci

If the completion times of all jobs in Ti

according to a schedule satisfy these

inequalities then Ti meets its distance

constraints

Distance Constraint Monotonic

(DCM)

Algorithm

Priorities assigned to tasks on the basis of

their temporal distance constraint - smaller

the distance constraint higher the priority

Find the maximum response time of the

highest priority task

This is used as a release guard to ensure that

subsequent tasks are not released any sooner

than necessary

this allows more low priority tasks to be

scheduled feasibly

Verification of schedules

Final testing on a specific processor or a

coprocessor and with the required sizes of

program and data memory

All hardware and software data other than

those reused must be estimated

Implement each single process with the

target synthesis tool or compiler and then run

them on the (simulated) target platform

Inter Process Communication

Processes communicate with each

other and with kernel to

coordinate their activities

Producer-Consumer Problem

Paradigm for cooperating processes,

producer process produces information

that is consumed by a consumer

process.

unbounded-buffer places no practical limit

on the size of the buffer.

bounded-buffer assumes that there is a

fixed buffer size.

Bounded-Buffer - shared data

#define BUFFER_SIZE 10

typedef struct {

...

} item;

item buffer[BUFFER_SIZE];

int in = 0;

int out = 0;

int counter = 0;

Bounded-Buffer - producer

item nextProduced;

while (1) {

while (counter == BUFFER_SIZE)

; /* do nothing */

buffer[in] = nextProduced;

in = (in + 1) % BUFFER_SIZE;

counter++;

}

Bounded-Buffer

Consumer process

item nextConsumed;

while (1) {

while (counter == 0)

; /* do nothing */

nextConsumed = buffer[out];

out = (out + 1) % BUFFER_SIZE;

counter--;

}

Bounded Buffer

The statements

counter++;

counter--;

must be performed atomically.

Atomic operation means an operation that

completes in its entirety without interruption.

Bounded Buffer

The statement “count++” may be

implemented in machine language as:

register1 = counter

register1 = register1 + 1

counter = register1

The statement “count—” may be

implemented as:

register2 = counter

register2 = register2 – 1

counter = register2

Bounded Buffer

If both the producer and consumer

attempt to update the buffer

concurrently, the assembly language

statements may get interleaved.

Interleaving depends upon how the

producer and consumer processes are

scheduled.

Bounded Buffer

Assume counter is initially 5. One interleaving

of statements is:

producer: register1 = counter (register1 =

5)

producer: register1 = register1 + 1

(register1 = 6)

consumer: register2 = counter (register2 =

5)

consumer: register2 = register2 – 1

(register2 = 4)

producer: counter = register1 (counter = 6)

consumer: counter = register2 (counter =

4)

Race Condition

Race condition: The situation where several

processes access – and manipulate shared

data concurrently. The final value of the

shared data depends upon which process

finishes last.

To prevent race conditions, concurrent

processes must be synchronized.

The

Critical-Section

Problem

n processes all competing to use some

shared data

Each process has a code segment, called

critical section, in which the shared data

is accessed.

Problem – ensure that when one

process is executing in its critical

section, no other process is allowed

to execute in its critical section.

IPC mechanisms

•Pipes/FIFOs, queues

•Mapped Shared Memory

•Semaphores

•Mutexes, Condition Variables

•Signals, RT Signals

•timers/alarms

•sleep() and nanosleep()

•Watchdogs, Task Regs,

•Partitions/Buffers emulated by tool kits

Shared Memory

CPU1

CPU2

Shared Location

write

read

Bus

Pipes for redirection

Pipes are unidirectional byte streams

which connect the standard output from

one process into the standard input of

another process

Processes are unaware of redirection

It is the shell which sets up these

temporary

pipes

between

the

processes.

Example

ls | pr | lpr - pipes the output

from the ls command - into the

standard input of the pr command

which paginates them - finally the

standard output from the pr command is

piped into the standard input of the lpr

command which prints the results on

the default printer

Setting up a PIPE

A pipe is implemented using two file data

structures pointing to the same temporary

VFS inode

VFS inode points at a physical page within

memory

Each file data structure point to different file

operation routine vectors - one for writing to

the pipe, the other for reading from the pipe.

Reading and Writing

Processes do not see the underlying

differences from the generic system calls

which read and write to ordinary files

Writing process copies bytes into the shared

data page

Reading process copies bytes from the shared

data page

OS synchronizes access to the pipe - to make

sure that the reader and the writer of the

pipe are in step - uses locks, wait queues

and signals.

Named Pipes - FIFO

Unlike pipes - FIFOs are not temporary

objects

FIFOs are entities in the file system created through system calls

Processes can use a FIFO if they have

appropriate access rights to it

Using FIFOs

fd_fifo = rtf_create(fifo_num, fifo_size)

rtf_destroy(fifo_num)

open() and close() fifo

read() - read data from FIFO

write() - write data into FIFO

Real-Time Code--Handler for the Control FIFO

int my_handler(unsigned int fifo)

{

struct my_msg_struct msg;

int err;

int handler_fd, rt_fd;

handler_fd = open("/dev/rtf0",

O_NONBLOCK);

while ((err = read(handler_fd, &msg,

sizeof(msg)))

== sizeof(msg)) {

char dest[10];

sprintf(dest,"/dev/rtf%d",msg.task+1

);

rt_fd = open(dest, O_NONBLOCK);

write(rt_fd, &msg, sizeof(msg));

close(rt_fd);

}

close(handler_fd);

if (err < 0)

rtl_printf("Error getting data in

handler,%d\n",err);

return 0;

}